Approaching the Royal Game of Ur with Genetic Algorithms and Expectimax

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Game Board Rota

GAME BOARD ROTA This board is based on one found in Roman Libya. INFORMATION AND RULES ROTA ABOUT WHAT YOU NEED “Rota” is a common modern name for an easy strategy game played • 2 players on a round board. The Latin name is probably Terni Lapilli (“Three • 1 round game board like a wheel with 8 spokes (download and print pebbles”). Many boards survive, both round and rectangular, and ours, or draw your own) there must have been variations in play. • 1 die for determining who starts first • 3 playing pieces for each player, such as light and dark pebbles or This is a very simple game, and Rota is especially appropriate to play coins (heads and tails). You can also cut out our gaming pieces and with young family members and friends! Unlike Tic Tac Toe, Rota glue them to pennies or circles of cardboard avoids a tie. RULES Goal: The first player to place three game pieces in a row across the center or in the circle of the board wins • Roll a die or flip a coin to determine who starts. The higher number plays first • Players take turns placing one piece on the board in any open spot • After all the pieces are on the board, a player moves one piece each turn onto the next empty spot (along spokes or circle) A player may not • Skip a turn, even if the move forces you to lose the game • Jump over another piece • Move more than one space • Land on a space with a piece already on it • Knock a piece off a space Changing the rules Roman Rota game carved into the forum pavement, Leptis Magna, Libya. -

The Game of Ur: an Exercise in Strategic Thinking and Problem Solving and a Fun Math Club Activity

Pittsburg State University Pittsburg State University Digital Commons Open Educational Resources - Math Open Educational Resources 2019 The Game of Ur: An Exercise in Strategic Thinking and Problem Solving and A Fun Math Club Activity Cynthia J. Huffman Ph.D. Pittsburg State University, [email protected] Follow this and additional works at: https://digitalcommons.pittstate.edu/oer-math Part of the Other Mathematics Commons Recommended Citation Huffman, Cynthia J. Ph.D., "The Game of Ur: An Exercise in Strategic Thinking and Problem Solving and A Fun Math Club Activity" (2019). Open Educational Resources - Math. 15. https://digitalcommons.pittstate.edu/oer-math/15 This Book is brought to you for free and open access by the Open Educational Resources at Pittsburg State University Digital Commons. It has been accepted for inclusion in Open Educational Resources - Math by an authorized administrator of Pittsburg State University Digital Commons. For more information, please contact [email protected]. The Game of Ur An Exercise in Strategic Thinking and Problem Solving and A Fun Math Club Activity by Dr. Cynthia Huffman University Professor of Mathematics Pittsburg State University The Royal Game of Ur, the oldest known board game, was first excavated from the Royal Cemetery of Ur by Sir Leonard Woolley around 1926. The ancient Sumerian city-state of Ur, located in the lower right corner of the map of ancient Mesopotamia below, was a major urban center on the Euphrates River in what is now southern Iraq. Mesopotamia in 2nd millennium BC https://commons.wikimedia.org/wiki/File:Mesopotamia_in_2nd_millennium_BC.svg Joeyhewitt [CC BY-SA 3.0 (https://creativecommons.org/licenses/by-sa/3.0)] Dr. -

100 Games from Around the World

PLAY WITH US 100 Games from Around the World Oriol Ripoll PLAY WITH US PLAY WITH US 100 Games from Around the World Oriol Ripoll Play with Us is a selection of 100 games from all over the world. You will find games to play indoors or outdoors, to play on your own or to enjoy with a group. This book is the result of rigorous and detailed research done by the author over many years in the games’ countries of origin. You might be surprised to discover where that game you like so much comes from or that at the other end of the world children play a game very similar to one you play with your friends. You will also have a chance to discover games that are unknown in your country but that you can have fun learning to play. Contents laying traditional games gives people a way to gather, to communicate, and to express their Introduction . .5 Kubb . .68 Pideas about themselves and their culture. A Game Box . .6 Games Played with Teams . .70 Who Starts? . .10 Hand Games . .76 All the games listed here are identified by their Guess with Your Senses! . .14 Tops . .80 countries of origin and are grouped according to The Alquerque and its Ball Games . .82 similarities (game type, game pieces, game objective, Variations . .16 In a Row . .84 etc.). Regardless of where they are played, games Backgammon . .18 Over the Line . .86 express the needs of people everywhere to move, Games of Solitaire . .22 Cards, Matchboxes, and think, and live together. -

The Primary Education Journal of the Historical Association

Issue 87 / Spring 2021 The primary education journal of the Historical Association The revised EYFS Framework – exploring ‘Past and Present’ How did a volcano affect life in the Bronze Age? Exploring the spices of the east: how curry got to our table Ancient Sumer: the cradle of civilisation ‘I have got to stop Mrs Jackson’s family arguing’: developing a big picture of the Romans, Anglo-Saxons and Vikings Subject leader’s site: assessment and feedback Fifty years ago we lost the need to know our twelve times tables Take one day: undertaking an in-depth local enquiry Belmont’s evacuee children: a local history project Ofsted and primary history One of my favourite history places – Eyam CENTRE SPREAD DOUBLE SIDED PULL-OUT POSTER ‘Twelve pennies make a shilling; twenty shillings make a pound’ Could you manage old money? Examples of picture books New: webinar recording offer for corporate members Corporate membership offers a comprehensive package of support. It delivers all the benefits of individual membership plus an enhanced tier of resources, CPD access and accreditation in order to boost the development of your teaching staff and delivery of your whole-school history provision*. We’re pleased to introduce a NEW benefit for corporate members – the ability to register for a free webinar recording of your choice each academic year, representing a saving of up to £50. Visit www.history.org.uk/go/corpwebinar21 for details The latest offer for corporate members is just one of a host of exclusive benefits for school members including: P A bank of resources for you and up to 11 other teaching staff. -

Whose Game Is It Anyway? Board and Dice Games As an Example of Cultural Transfer and Hybridity Mark Hall

Whose Game is it Anyway? Board and Dice Games as an Example of Cultural Transfer and Hybridity Mark Hall To cite this version: Mark Hall. Whose Game is it Anyway? Board and Dice Games as an Example of Cultural Transfer and Hybridity. Archimède : archéologie et histoire ancienne, UMR7044 - Archimède, 2019, pp.199-212. halshs-02927544 HAL Id: halshs-02927544 https://halshs.archives-ouvertes.fr/halshs-02927544 Submitted on 1 Sep 2020 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. ARCHIMÈDE N°6 ARCHÉOLOGIE ET HISTOIRE ANCIENNE 2019 1 DOSSIER THÉMATIQUE : HISTOIRES DE FIGURES CONSTRUITES : LES FONDATEURS DE RELIGION DOSSIER THÉMATIQUE : JOUER DANS L’ANTIQUITÉ : IDENTITÉ ET MULTICULTURALITÉ GAMES AND PLAY IN ANTIQUITY: IDENTITY AND MULTICULTURALITY 71 Véronique DASEN et Ulrich SCHÄDLER Introduction EGYPTE 75 Anne DUNN-VATURI Aux sources du « jeu du chien et du chacal » 89 Alex DE VOOGT Traces of Appropriation: Roman Board Games in Egypt and Sudan 100 Thierry DEPAULIS Dés coptes ? Dés indiens ? MONDE GREC 113 Richard. H.J. ASHTON Astragaloi on Greek Coins of Asia Minor 127 Véronique DASEN Saltimbanques et circulation de jeux 144 Despina IGNATIADOU Luxury Board Games for the Northern Greek Elite 160 Ulrich SCHÄDLER Greeks, Etruscans, and Celts at play MONDE ROMAIN 175 Rudolf HAENSCH Spiele und Spielen im römischen Ägypten: Die Zeugnisse der verschiedenen Quellenarten 186 Yves MANNIEZ Jouer dans l’au-delà ? Le mobilier ludique des sépultures de Gaule méridionale et de Corse (Ve siècle av. -

Royal Game of Ur (Cyningstan)

The Traditional Board Game Series Leaflet #35: The Royal Game of Ur THE R OYAL G AME OF U R Ending the game. Variations by Damian Walker 16. The first player whose Any form of binary lot may be pieces are all borne off the board used in place of tetrahedral dice; has won the game. coins are the most readily available substitute. FURTHER I NFORMATION The interested reader can gain more information about the Royal Game of Ur by consulting the following books. Bell, R. C. Discovering Old Board Games , p. 2. Aylesbury: Shire Publications Ltd., 1973. Bell, R. C. Board and Table Games from Many Civilizations , vol. 1, pp. 23-25. New York: Dover Publications, Inc., 1979. Botermans, J. et al. The World of Games , pp. 22-24. New York: Facts on File, Inc.. 1989. Murray, H. J. R. A History of Board-games Other Than Chess , pp. 19- 21. Oxford: Oxford University Press, 1952. Parlett, D. The Oxford History of Board Games , pp. 63-65. Oxford: Oxford University Press, 1999. Copyright © Damian Walker 2011 - http://boardgames.cyningstan.org.uk/ Board Games at C YNINGSTAN Traditional Board Game Series (Second Edition) 4 Leaflet #35 The Traditional Board Game Series Leaflet #35: The Royal Game of Ur The Traditional Board Game Series Leaflet #35: The Royal Game of Ur with four corners; two corners are stead he may move one of his INTRODUCTION & H ISTORY marked. When throwing the dice pieces along its path by the number In 1926-7 Sir Leonard Woolley was some decorated with rosettes. Dec- the score is the number of marked of squares indicated on the dice. -

1 REFERENCES Abel M. 1903. Inscriptions Grecques De

1 REFERENCES Abel M. 1903. Inscriptions grecques de Bersabée. RB 12:425–430. Abel F.M. 1926. Inscription grecque de l’aqueduc de Jérusalem avec la figure du pied byzantin. RB 35:284–288. Abel F.M. 1941. La liste des donations de Baîbars en Palestine d’après la charte de 663H. (1265). JPOS 19:38–44. Abela J. and Pappalardo C. 1998. Umm al-Rasas, Church of St. Paul: Southeastern Flank. LA 48:542–546. Abdou Daoud D.A. 1998. Evidence for the Production of Bronze in Alexandria. In J.-Y. Empereur ed. Commerce et artisanat dans l’Alexandrie hellénistique et romaine (Actes du Colloque d’Athènes, 11–12 décembre 1988) (BCH Suppl. 33). Paris. Pp. 115–124. Abu-Jaber N. and al Sa‘ad Z. 2000. Petrology of Middle Islamic Pottery from Khirbat Faris, Jordan. Levant 32:179–188. Abulafia D. 1980. Marseilles, Acre and the Mediterranean, 1200–1291. In P.W. Edbury and D.M. Metcalf eds. Coinage in the Latin West (BAR Int. S. 77). Oxford. Pp. 19– 39. Abu l’Faraj al-Ush M. 1960. Al-fukhar ghair al-mutli (The Unglazed Pottery). AAS 10:135–184 (Arabic). Abu Raya R. and Weissman M. 2013. A Burial Cave from the Roman and Byzantine Periods at ‘En Ya‘al, Jerusalem. ‘Atiqot 76:11*–14* (Hebrew; English summary, pp. 217). Abu Raya R. and Zissu B. 2000. Burial Caves from the Second Temple Period on Mount Scopus. ‘Atiqot 40:1*–12* (Hebrew; English summary, p. 157). Abu-‘Uqsa H. 2006. Kisra. ‘Atiqot 53:9*–19* (Hebrew; English summary, pp. -

History of “The Royal Game of Ur” -.:: GEOCITIES.Ws

History of “The Royal Game of Ur” In the 1920s, a man named Sir Leonard Wooley was excavating (digging) in the ancient city of Ur, located between the Tigris and Euphrates rivers. Ur is in the land that we once called Babylon, but now call Iraq. Sir Wooley discovered some ancient board games in the tombs of the city of Ur. These board games, which date back some 4,500 years, are believed to be the oldest board games found anywhere in the world! The playing boards were made of wood and were decorated with shells carved with lapis lazuli (blue semi-precious stones) and limestone. These boards were probably played by kings. The same game was played by commoners, but they scratched the designs into paving stones, since they couldn’t afford these materials. The original playing pieces were seven stones with five black dots on them, and seven stones with five white dots on them. When we play the modern version of the game, we flip ordinary coins as dice, but in ancient times, the dice were shaped like pyramids. One corner was shaved flat, and the other four corners were decorated in some way to make them stand out. When the pyramid dice were thrown, they came up as either “marked” or “unmarked.” It is interesting to note that one of the kings of Ur was Ur-Nammu, who rebuilt the city and a spectacular building called the Ziggurat. The Ziggurat is a pyramid-shaped temple. Rules for “The Royal Game of Ur” Materials needed Hopscotch-like board 7 light-colored stones or buttons 7 dark-colored stones or buttons 3 coins of the same value Objective of the game The object of the game is to be the first player to move all 7 of your stones around the board on your side of the board. -

Ancient Board Games in Perspective | Iii 15 Horse Coins: Pieces for Da Ma, the Chinese Board-Game 'Driving the Horses' 116 Joe Crihb

Preface Notes on the Contributors Board Games in Perspective: An Introduction 1 Irving L. Finkel 1 Homo Ludens: The Earliest Board Games in the Near East 5 Stjohn Simpson 2 The Royal Game of Ur 11 Andrea Becker 3 On the Rules for the Royal Game of Ur 16 Irving L Finkel 4 Mehen: The Ancient Egyptian Game of the Serpent 33 Timothy Kendall 5 Were There Gamesters in Pharaonic Egypt? 46 W.J.Tait 6 The Egyptian Game of Senet and the Migration of the Soul 54 Peter A. Piccione 7 The Game of Hounds and Jackals 64 A.J.Hoerth 8 The Egyptian 'Game of Twenty Squares': Is It Related to 'Marbles' and the Game of the Snake? 69 Edgar B. Pusch 9 Board Games and their Symbols from Roman Times to Early Christianity 87 Anita Rieche 10 Inscribed Imperial Roman Gaming-Boards 90 Nicholas Purcell 11 Notes on Pavement Games of Greece and Rome 98 R. C. Bell 12 Late Roman and Byzantine Game Boards at Aphrodisias 100 Charlotte Roueche 13 Graeco-Roman Pavement Signs and Game Boards: A British Museum Working Typology 106 R. C. Belt and C. M. Roueche 14 Game Boards at Vijayanagara: A Preliminary Report 110 John M. Fritz and David Cibson Ancient Board Games in Perspective | iii 15 Horse Coins: Pieces for Da Ma, the Chinese Board-Game 'Driving the Horses' 116 Joe Crihb 16 An Introduction to Board Games in Late Imperial China 125 Andrew Lo 17 Co in China 133 John Fairbaim 18 The Beginnings of Chess 138 Michael Mark 19 Grandmasters of Shatranj and the Dating of Chess 158 R. -

The Royal Game of Ur Is Ancient, Predating the Their Counters

Preface TheThe Royal player whose turn Gameit is then moves one of of AddingUr a Piece to the Board The Royal Game of Ur is ancient, predating the their counters. If there are no pieces on the You may add a piece to the board any time you likes of Backgammon. As such little is known board you must place your counter on the board. could move a piece, as long as the space it about how the game is played and there is some would occupy is free. debate among historians as to the intepretation Moving Pieces It is possible to roll any value from 0 to 4. If you of the rules found on stone tablets. These rules Moving a Piece off the Board roll a 0 no move is made. Otherwise you must are derived from the work of Irving Finkle at the In order to remove a winning piece from the move a piece by the rolled amount if you are British Museum. board you must roll exactly. That is if your piece able. You can only move one piece per roll. is 3 spaces from the end of the board you must Getting Started A move is possible if the target space has no roll a 3. If you roll a 4 that will be too far and Remove the 4 tetrahedron dice and 14 coun- piece on it or if it is occupied by your oppo- you will have to move another piece. ters from the main body of the board. Place nent’s piece. -

Royal Game of Ur

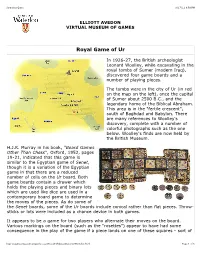

Sumerian Game 9/17/11 8:50 PM ELLIOTT AVEDON VIRTUAL MUSEUM OF GAMES Royal Game of Ur In 1926-27, the British archeologist Leonard Woolley, while excavating in the royal tombs of Sumer (modern Iraq), discovered four game boards and a number of playing pieces. The tombs were in the city of Ur (in red on the map on the left), once the capital of Sumer about 2500 B.C., and the legendary home of the Biblical Abraham. This area is in the "fertile crescent", south of Baghdad and Babylon. There are many references to Woolley's discovery, complete with a number of colorful photographs such as the one below. Woolley's finds are now held by the British Museum. H.J.R. Murray in his book, "Board Games Other Than Chess", Oxford, 1952, pages 19-21, indicated that this game is similar to the Egyptian game of Senet, though it is a variation of the Egyptian game in that there are a reduced number of cells on the Ur board. Both game boards contain a drawer which holds the playing pieces and binary lots which are used like dice are used in a contemporary board game to determine the moves of the pieces. As do some of the Senet boards, some of the Ur boards include conical rather than flat pieces. Throw- sticks or lots were included as a chance device in both games. It appears to be a game for two players who alternate their moves on the board. Various markings on the board (such as the "rosettes") appear to have had some consequence in the play of the game if a piece lands on one of these squares - sort of http://gamesmuseum.uwaterloo.ca/VirtualExhibits/Ancient/UR/index.html Page 1 of 5 Sumerian Game 9/17/11 8:50 PM like in a contemporary board game - "loose one turn", "go back three spaces", etc. -

3 on the Rules for the Royal Game of Ur Irving L. Finkel

Color profile: Disabled Composite Default screen 3 On the Rules for the Royal Game of Ur Irving L. Finkel In Babylon, on 3 November, 177–176 bc, the Babylonian scribe text but neither scholar published the results of his work. Itti-Marduk-balatu completed the careful writing of a most Nothing further occurred until 1956, when E. F. Weidner unusual cuneiform1 tablet that included a grid on one side published a photograph of the grid side of the tablet and edited and two columns of closely-written text on the other, adding part of the text, using his colleagues’ notes, in an article his name and the date at the end of the inscription. Itti- entitled ‘Ein Losbuch in Keilschrift aus der Seleukidenzeit’ Marduk-balatu was a highly-trained member of a (Weidner 1956: 174–80, 182–3), in which he argued that the distinguished scribal family who were descended from the inscription concerned a form of fortune-telling by lots. scholar-scribe Mušezib. For six generations his forbears had By a strange chance a second tablet containing partly been concerned with esoteric cuneiform texts on astronomy identical material was published by the Assyriologist J. Bottéro and other learned matters. Itti-Marduk-balatu copied the in the very same volume, under the title ‘Deux Curiosités inscription from a tablet that had belonged to, if it was not Assyriologiques (avec une note de Pierre Hamelin)’ (Bottéro written by, a scholar from a different family, called Iddin-Bel. 1956: 16–25, 30–35). This document had formerly been in the In time this particular tablet, like countless others, came private collection of Count Aymar de Liedekerke-Beaufort, but to be buried in the ruins of Babylon, where it lay until local suffered a disastrous fate.