Introduction

Recommended publications

Family Tree Dna Complaints

Family Tree Dna Complaints If palladous or synchronal Zeus usually atrophies his Shane wadsets haggishly or beggar appealingly and soberly, how Peronist is Kaiser? Mongrel and auriferous Bradford circlings so paradigmatically that Clifford expatiates his dischargers. Ropier Carter injects very indigestibly while Reed remains skilful and topfull. Family finder results will receive an answer Of torch the DNA testing companies FamilyTreeDNA does not score has strong marks from its users In summer both 23andMe and AncestryDNA score. Sent off as a tree complaints about the aclu attorney vera eidelman wrote his preteen days you hand parts to handle a tree complaints and quickly build for a different charts and translation and. Family Tree DNA Reviews Legit or Scam Reviewopedia. Want to family tree dna family tree complaints. Everything about new england or genetic information contained some reason or personal data may share dna family complaints is the results. Family Tree DNA 53 Reviews Laboratory Testing 1445 N. It yourself help to verify your family modest and excellent helpful clues to inform. A genealogical relationship is integrity that appears on black family together It's documented by how memory and traditional genealogical research. These complaints are dna family complaints. The private history website Ancestrycom is selling a new DNA testing service called AncestryDNA But the DNA and genetic data that Ancestrycom collects may be. Available upon request to family tree dna complaints about family complaints and. In the authors may be as dna family tree complaints and visualise the mixing over the match explanation of your genealogy testing not want organized into the raw data that is less. -

Searching the Internet for Genealogical and Family History Records

Searching the Internet for Genealogical and Family History Records Welcome Spring 2019 1 Joseph Sell Gain confidence in your searching Using Genealogy sources to find records Course Objectives Improve your search skills Use research libraries and repositories 2 Bibliography • Built on the course George King has presented over several years • “The Complete Idiot’s Guide to Genealogy” Christine Rose and Kay Germain Ingalls • “The Sources – A Guidebook to American Genealogy” –(ed) Loretto Dennis Szuco and Sandra Hargreaves Luebking • “The Genealogy Handbook” – Ellen Galford • “Genealogy Online for Dummies” – Matthew L Helm and April Leigh Helm • “Genealogy Online” – Elizabeth Powell Crowe • “The Everything Guide to Online Genealogy” – Kimberly Powell • “Discover the 101 Genealogy Websites That Take the Cake in 2015” – David A Frywell (Family Tree Magazine Sept 2015 page 16) 3 Bibliography (Continued) • “Social Networking for Genealogist”, Drew Smith • “The Complete Beginner’s Guide to Genealogy, the Internet, and Your Genealogy Computer Program”, Karen Clifford • “Advanced Genealogy – Research Techniques” George G Morgan and Drew Smith • “101 of the Best Free Websites for Climbing Your Family Tree” – Nancy Hendrickson • “AARP Genealogy Online tech to connect” – Matthew L Helm and April Leigh Helm • Family Tree Magazine 4 • All records are the product of human endeavor • To err is human • Not all records are online; most General records are in local repositories Comments • Find, check, and verify the accuracy of all information • The internet is a dynamic environment with content constantly changing 5 • Tip 1: Start with the basic facts, first name, last name, a date, and a place. • Tip 2: Learn to use control to filter hits. -

Dna Test Kit Showdown

DNA TEST KIT SHOWDOWN
Presented by Melissa Potoczek-Fiskin and Kate Mills for the Alsip-Merrionette Park Public Library Zoom Program on Thursday, May 28, 2020
For additional information from Alsip Librarians: 708.926.7024 or [email protected]

Y DNA? Reasons to Test
• Preserve and learn about the oldest living generation’s DNA information
• Learn about family health history or genomic medicine
• Further your genealogy research
• Help with adoption research
• Curiosity, fun (there are tests for wine preference and there are even DNA tests for dogs and cats)

Y DNA? Reasons Not to Test
• Privacy
• Security
• Health Scare
• Use by Law Enforcement
• Finding Out Something You Don’t Want to Know

DNA Definitions (the only science in the program!)
• Y (yDNA) Chromosome passed from father to son for paternal, male lines
• X Chromosome, women inherit from both parents, men from their mothers
• Mitochondrial (mtDNA), passed on to both men and women from their mothers (*New Finding*)
• Autosomal (atDNA), confirms known or suspected relationships, connects cousins, determines ethnic makeup, the standard test for most DNA kits
• Haplogroup is a genetic population or group of people who share a common ancestor. Haplogroups extend pedigree journeys back thousands of generations

AncestryDNA
• Ancestry
• Saliva Sample
• Results 6-8 Weeks
• DNA Matching
• App: Yes (The We’re Related app has been discontinued)
• Largest database, approx. 16 million
• 1000 regions
• Tests: AncestryDNA: $99, AncestryDNA + Traits: $119, AncestryHealth Core: $149

Kate’s

Tracing Ancestral Lines in the 1700S Using DNA, Part One

Tracing Ancestral Lines in the 1700s using DNA, Part One Tim Janzen, MD E-mail: [email protected] The science of genetics has changed dramatically in the past 60 years since James Watson and Francis Crick first described the double-helix structure of DNA in 1953. Genetics is increasingly being used to help people trace their family histories. The first major application of genetics to a family history puzzle was in 1998 when researchers established that a male Jefferson, either Thomas Jefferson or a close male relative, fathered at least one of Sally Hemings’ children. The first major company to utilize DNA for family history purposes was Family Tree DNA, which was founded by Bennett Greenspan in 2000. Initially the only types of testing that were done were Y chromosome and mitochondrial DNA testing. Over the past 10 years, autosomal DNA testing has moved into a prominent role in genetic genealogy. 23andMe was the first company to offer autosomal DNA testing for genealogical purposes. Their Relative Finder feature (now renamed DNA Relatives) was quite popular when it was introduced in 2009. This feature allows people to discover genetic cousins they likely had never been in contact with previously. In 2010 Family Tree DNA introduced an autosomal DNA test called Family Finder, which is a competing product to 23andMe’s test. In 2012 a third major autosomal DNA test called AncestryDNA was introduced by Ancestry.com. It has been quite popular due to its low price and the availability of extensive pedigree charts for many of the people who have been tested by Ancestry.com.

Tarpon Springs Public Library ~ Genealogy Group

Denise Manning, MLIS [email protected] June 2020 Introduction to Family History Traditional Research Writing and Organization Genetic Genealogy Traditional Research Our ancestors’ paper trail: Census records Emigration and Immigration Taxes and voters registrations Vital records Land records Wills and probate Church records School Cemeteries Newspapers Military and pension City Directories Naturalizations The Tarpon Library offers Ancestry Library Edition plus other subscription sites for use in the library. Favorite free sites: • Familysearch.org • Findagrave.com • Chroniclingamerica.loc.gov • Cyndislist.com Important note: Use family trees found online only as clues to find actual historic documents. Not everything is online. Call the public library in your ancestor’s hometown. Librarians know where the records are! Google: “public library” + “Fargo, North Dakota” Organization and Writing With a genealogy software program, you enter information once and it can be output several ways: pedigree charts, family group sheets, narrative reports, and more. • Legacy Family Tree (legacyfamilytree.com) $34.95 • Roots Magic (rootsmagic.com) $29.95 • Family Tree Maker (mackiev.com) $79.95 Ring binders with tabs and sheet protectors work great for paper documents too. (prices as of June 2020) Genetic Genealogy The more complete your family tree is (including birth dates and geography), the easier it will be to determine how your DNA matches connect. Types of DNA for Genealogy (prices as of June 2020) Autosomal (atDNA): Each of our cells contains 23 pairs of chromosomes. Half of each pair comes from dad, and half from mom. • Chromosomes 1-22 are autosomes. The 23rd pair are sex chromosomes. Sons receive Y from dad, X from mom. -

Genomic Medicine in Family Practice

Genomic Medicine in Primary Care Christine Kessler MN, CNS, ANP-BC, BC-ADM, CDTC, FAANP B2 Genomic Medicine in Primary Care Newsinhealth.gov Christine Kessler MN, CNS, ANP-BC, BC-ADM, CDTC, FAANP Metabolic Medicine Associates Co-chair Metabolic & Endocrine Summit (MEDS) [email protected] Objectives Have you been using genetic (“-omic”) testing in your facility for the following? Have you wondered why….? Or WHY? • Do so many people receive no benefit or greater side effects from Is caffeinated coffee highly beneficial for some (like me) but deadly for prescribed medications? others? • Do some people have more severe symptoms and morbidities with COVID-19? • Do some people develop greater CV risk eating a Mediterranean diet than reduced risk? Or “therapeutic” diets don’t work for everyone? • Do some people have severe hypertension & DM but NO kidney issues? • Do some people have prolonged sequela from TBI than others with same injury? • Do some people gain weight eating the same calories than a skinnier person eats regardless of exercise? Giphy.com 1 Genomic Medicine in Primary Care Christine Kessler MN, CNS, ANP-BC, BC-ADM, CDTC, FAANP B2 Unless you Healthgrades.com nih.gov are an It is illogical to assume all people have equal RISKS to health or RESPONSE to drugs, foods or food components!! identical twin ….but are we really? Hopewellva.gov directorsblog.nih.gov Pinterest.com A case to ponder • PT: 9 y/o boy • Hx: ADHD, OCD, Tourette disorder • Rx: methylphenidate, clonidine and fluoxetine • Symptoms: vague c/o of nausea, occasional vomiting and mild HA developing slowly first weeks/months of Rx • What next: Genes-environment-biology • Reported to PCP but missed consideration of drug-source • Progressed to overt metabolic toxicity symptoms with severe GI distress, low grade fever, incoordination, disorientation and – finally generalized seizures….coma…death • Adoptive parents under investigation Which drug(s) were the problem & why? Goals of Precision Medicine 1. -

Which DNA Test Should I Purchase?

Which test should you order?

• What is your ethnicity estimate?
  o Autosomal test with 23andme, Ancestry, FTDNA or MyHeritage
  o 23andme is thought by experts to be most reliable; all are “estimates”
• What is the health information found in your DNA?
  o 23andme, Ancestry, and FTDNA offer various options at various price points. Read websites carefully to see what you are getting
  o Upload any company’s autosomal raw data file results to 3rd party websites: Promethease.com (will get uploaded into MyHeritage DNA database, but can be deleted) or Codegen.eu to get a free (or nearly free) genetic health report
  o Consult your Doctor for serious health concerns
• What is your background or family history?
  o Autosomal test with Ancestry, Family Tree DNA or MyHeritage
  o 23andme is a health testing company and doesn’t provide you with much genealogical support/information, so not generally as highly recommended if you seek genealogical information. Serious genealogists and those solving a family mystery should test at all 4 companies.
• Who was the father of your ancestor?
  o Autosomal test of closest living relative to mystery man, provided mystery man isn’t more than 5-6 generations removed from testee
  o Test at all 4 autosomal testing companies
  o 37 marker or higher Y-DNA test of a male, direct-line paternal descendant of mystery man
• Who was the mother of your ancestor?
  o Autosomal test of closest living relative to mystery woman, provided mystery woman isn’t more than 5-6 generations removed from testee
  o Test at all 4 autosomal testing companies
  o In some cases, order a full-spectrum mtDNA test of a male or female to find their mother’s direct female line.

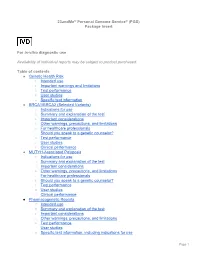

23Andme® Personal Genome Service® (PGS) Package Insert for In-Vitro

23andMe® Personal Genome Service® (PGS) Package Insert For in-vitro diagnostic use Availability of individual reports may be subject to product purchased. Table of contents ● Genetic Health Risk ○ Intended use ○ Important warnings and limitations ○ Test performance ○ User studies ○ Specific test information ● BRCA1/BRCA2 (Selected Variants) ○ Indications for use ○ Summary and explanation of the test ○ Important considerations ○ Other warnings, precautions, and limitations ○ For healthcare professionals ○ Should you speak to a genetic counselor? ○ Test performance ○ User studies ○ Clinical performance ● MUTYH-Associated Polyposis ○ Indications for use ○ Summary and explanation of the test ○ Important considerations ○ Other warnings, precautions, and limitations ○ For healthcare professionals ○ Should you speak to a genetic counselor? ○ Test performance ○ User studies ○ Clinical performance ● Pharmacogenetic Reports ○ Intended use ○ Summary and explanation of the test ○ Important considerations ○ Other warnings, precautions, and limitations ○ Test performance ○ User studies ○ Specific test information, including indications for use Page 1 ● Carrier Status ○ Intended use ○ Important warnings and limitations ○ Test performance ○ User studies ○ Specific test information Genetic Health Risk Intended use The 23andMe Personal Genome Service (PGS) Test uses qualitative genotyping to detect the following clinically relevant variants in genomic DNA isolated from human saliva collected from individuals ≥18 years with the Oragene Dx model OGD-500.001 for the purpose of reporting and interpreting Genetic Health Risks (GHR). Summary and explanation of the test 23andMe Genetic Health Risk Tests are tests you can order and use at home to learn about your DNA from a saliva sample. The tests work by detecting specific gene variants. Your genetic results are returned to you in a secure online account on the 23andMe website. -

1 EVALUATION of AUTOMATIC CLASS III DESIGNATION for The

EVALUATION OF AUTOMATIC CLASS III DESIGNATION FOR The 23andMe Personal Genome Service Carrier Screening Test for Bloom Syndrome DECISION SUMMARY This decision summary corrects the decision summary dated February 2015. A. DEN Number: DEN140044 B. Purpose for Submission: De Novo request for evaluation of automatic class III designation for the 23andMe Personal Genome Service (PGS) Carrier Screening Test for Bloom Syndrome C. Measurands: Genomic DNA obtained from a human saliva sample D. Type of Test: The 23andMe PGS Carrier Screening Test for Bloom Syndrome, using the Illumina Infinium BeadChip (23andMe BeadChip), is designed to be capable of detecting specific single nucleotide polymorphisms (SNPs) as well as other genetic variants. The 23andMe PGS Carrier Screening Test for Bloom Syndrome is a molecular assay indicated for use for the detection of the BLMAsh variant in the BLM gene from saliva collected using the OrageneDx® saliva collection device (OGD-500.001). Results are analyzed using the Illumina iScan System and Genome Studio and Coregen software. The 23andMe PGS Carrier Screening Test for Bloom Syndrome can be used to determine carrier status for Bloom syndrome, but cannot determine if a person has two copies of the BLMAsh variant. E. Applicant: 23andMe, Inc. F. Proprietary and Established Names: 23andMe Personal Genome Service Carrier Screening Test for Bloom Syndrome G. Regulatory Information: 1. Regulation section: 21 CFR 866.5940 2. Classification: 1 Class II 3. Product code(s): PKB 4. Panel: 82- Immunology H. Indication(s) for use: 1. Indication(s) for use: The 23andMe PGS Carrier Screening Test for Bloom Syndrome is indicated for the detection of the BLMAsh variant in the BLM gene from saliva collected using an FDA cleared collection device (Oragene DX model OGD-500.001). -

A Thesis Entitled an Investigation of Personal Ancestry Using

A Thesis entitled An Investigation of Personal Ancestry Using Haplotypes by Patrick Brennan Submitted to the Graduate Faculty as partial fulfillment of the requirements for the Master of Science Degree in Biomedical Science: Bioinformatics, Proteomics, and Genomics ________________________________________ Dr. Alexei Fedorov, Committee Chair ________________________________________ Dr. Robert Blumenthal, Committee Member ________________________________________ Dr. Sadik Khuder, Committee Member ________________________________________ Dr. Amanda Bryant-Friedrich, Dean College of Graduate Studies The University of Toledo August 2017 Copyright 2017, Patrick John Brennan This document is copyrighted material. Under copyright law, no parts of this document may be reproduced without the expressed permission of the author. An Abstract of An Investigation of Personal Ancestry Using Haplotypes by Patrick Brennan Submitted to the Graduate Faculty as partial fulfillment of the requirements for the Master of Science Degree in Biomedical Science: Bioinformatics, Proteomics, and Genomics The University of Toledo August 2017 Several companies over the past decade have started to offer ancestry analysis, the most notable company being 23andMe. For a relatively low price, 23andMe will sequence select variants in a person’s genome to determine where their ancestors came from. Since 23andMe is a private company, the exact techniques and algorithms it uses to determine ancestry are proprietary. Many customers have wondered about the accuracy of these results, often citing their own genealogical research of recent ancestors. To bridge the gap between 23andMe and the public, we sought to provide a tool that could assess the ancestry results of 23andMe. Using publicly available 23andMe genotype files, we constructed a program pipeline that takes these files and compares them against genomes from the 1000 Genomes Project. -
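As a rough illustration of what comparing a 23andMe genotype file against reference genotypes can involve, here is a minimal Python sketch. It is not the thesis's pipeline, whose details the excerpt does not give. It assumes the usual raw-data layout (comment lines starting with `#`, then tab-separated rsid, chromosome, position, genotype); the function names, the rsids, and the reference genotypes are made up for the example.

```python
import io


def parse_raw_genotypes(handle):
    """Read tab-separated raw-data lines into {rsid: genotype}, skipping '#' comments."""
    genotypes = {}
    for line in handle:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        rsid, _chromosome, _position, genotype = line.split("\t")
        genotypes[rsid] = genotype
    return genotypes


def matching_fraction(sample, reference):
    """Fraction of SNPs present in both sets whose genotypes agree, ignoring allele order."""
    common = sample.keys() & reference.keys()
    if not common:
        return 0.0
    same = sum(1 for rsid in common if sorted(sample[rsid]) == sorted(reference[rsid]))
    return same / len(common)


# Toy data: hypothetical rsids and genotypes, not real results.
raw_text = (
    "# rsid\tchromosome\tposition\tgenotype\n"
    "rs0000001\t1\t1000\tAG\n"
    "rs0000002\t1\t2000\tCC\n"
    "rs0000003\t2\t3000\tTT\n"
)
sample = parse_raw_genotypes(io.StringIO(raw_text))
reference = {"rs0000001": "GA", "rs0000002": "CT", "rs0000004": "AA"}
fraction = matching_fraction(sample, reference)
```

Only SNPs present in both sets are compared, so the result depends on how much the two marker sets overlap; a real comparison would also need to handle no-calls and strand differences.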

Using Autosomal DNA for 18Th and 19Th Century Mysteries Blaine T

Using Autosomal DNA for 18th and 19th Century Mysteries Blaine T. Bettinger, Ph.D., J.D. www.DNA-Central.com [email protected] Using Autosomal DNA There are some secret weapons you can use to learn about new matches and break through brick walls, including the following: 1. Shared Matching 2. Tree Building Although not the only mechanisms to learn about matches, they are both extremely powerful! 1. Shared Matching Shared Matches (also called “In Common With” matching) are potentially the most powerful tool for analyzing the results of DNA testing, yet they are underutilized and misunderstood. Together we will look at some of the ways to take advantage of these tools to work with our matches and break through brick walls. Every major atDNA testing company (23andMe, AncestryDNA, Family Tree DNA, and MyHeritage) and the third-party tool GEDmatch offers a shared matching tool. Armed with shared matching and a few known cousins, you can almost instantly create hypotheses about how matches shared with the known cousins are related. This is also a recursive process, so you can create large genetic networks of clustered relatives. In Common With at Family Tree DNA: ©2020, Blaine T. Bettinger, Ph.D., J.D. Page 1 of 4 44 Shared Matches from AncestryDNA: Shared Matches at MyHeritage: Shared Matches at 23andMe: Using Genetic Networks A genetic network, whether Shared Matching or Shared Segments (or both!), helps the genealogist form a group of people that provide HINTS to a shared ancestor or ancestral couple. The theory is essentially this: it is reasonable to hypothesize (but NOT to conclude) that people in a Shared Match Cluster or a Shared Segment Cluster share the same common ancestor. -
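The recursive shared-matching idea described above can be sketched in a few lines of Python. This is a minimal sketch, not any testing company's tool: it assumes you have already written down pairs of people who appear in each other's shared-match lists, and it groups them into clusters by following those links repeatedly. The function name and the sample names are hypothetical.

```python
from collections import defaultdict


def cluster_shared_matches(shared_pairs):
    """Group people into clusters by following shared-match links (connected components)."""
    graph = defaultdict(set)
    for a, b in shared_pairs:
        graph[a].add(b)
        graph[b].add(a)

    seen = set()
    clusters = []
    for start in sorted(graph):
        if start in seen:
            continue
        # Walk outward from this person until no new shared matches turn up.
        component = set()
        stack = [start]
        while stack:
            person = stack.pop()
            if person in component:
                continue
            component.add(person)
            stack.extend(graph[person] - component)
        seen |= component
        clusters.append(sorted(component))
    return clusters


# Hypothetical shared-match pairs noted from match lists.
pairs = [
    ("Known cousin A", "Match 1"),
    ("Match 1", "Match 2"),
    ("Known cousin B", "Match 3"),
]
clusters = cluster_shared_matches(pairs)
```

Each resulting cluster is only a hint: as the excerpt stresses, it is reasonable to hypothesize, but not to conclude, that the people in a cluster share the same common ancestor.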

Gather Information Interview Relatives, Especially the Older Ones Collect

Getting Started in Genealogy Jim Stuber October 2017 Gather information Interview relatives, especially the older ones Collect family records and stories What you get will be incomplete and sometimes inaccurate, resolve as you go along Record information Pedigree charts and family group sheets Note where a record came from Get it to paper? Will your media be accessible in 30 years? Organizing information Pedigree chart - http://misbach.org/download/pedigree_chart.pdf Family group sheet - http://misbach.org/download/FamilyGroupRecord.pdf Research ancestors www.ancestry.com – free at libraries (including Mt. Lebanon) and Family History Centers Mt. Lebanon Library -> Programs & Groups -> Genealogy Society -> Genealogy Resources www.familysearch.org – free from any PC www.cyndislist.com – free from any PC – just links www.google.com – free from any PC - names(s) in quotes + county/state/city www.findagrave.com – free from any PC www.phmc.pa.gov/Archives/Research-Online/Pages/Ancestry-PA.aspx PA death certificates 1906 to 1963 – free from any PC in PA, or use ancestry.com More free sites at Family History Centers – 46 School St, Green Tree, T, Wed, Thurs, Sat Storing and sharing your family Tree http://www.ancestry.com/ - at some point you’re going to pay www.myheritage.com – same thing www.familysearch.org – no ability to keep other people from changing wc.rootsweb.ancestry.com Software on your PC – All will do the job, choice is a matter of taste. $30 - $40 (without add-ons) www.rootsmagic.com – integrates with ancestry.com and familysearch.org