Web Applications Security

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Web Hacking 101 How to Make Money Hacking Ethically

Web Hacking 101: How to Make Money Hacking Ethically. Peter Yaworski. © 2015 - 2016 Peter Yaworski.

Tweet This Book! Please help Peter Yaworski by spreading the word about this book on Twitter! The suggested tweet for this book is: Can’t wait to read Web Hacking 101: How to Make Money Hacking Ethically by @yaworsk #bugbounty. The suggested hashtag for this book is #bugbounty. Find out what other people are saying about the book by clicking on this link to search for this hashtag on Twitter: https://twitter.com/search?q=#bugbounty

For Andrea and Ellie. Thanks for supporting my constant roller coaster of motivation and confidence.

This book wouldn’t be what it is if it were not for the HackerOne Team, thank you for all the support, feedback and work that you contributed to make this book more than just an analysis of 30 disclosures.

Contents: 1. Foreword; 2. Attention Hackers!; 3. Introduction (How It All Started; Just 30 Examples and My First Sale; Who This Book Is Written For; Chapter Overview; Word of Warning and a Favour); 4. Background; 5. HTML Injection (Description; Examples: 1. Coinbase Comments ...)

The Next Digital Decade Essays on the Future of the Internet

THE NEXT DIGITAL DECADE: ESSAYS ON THE FUTURE OF THE INTERNET. Edited by Berin Szoka & Adam Marcus. NextDigitalDecade.com. TechFreedom, techfreedom.org, Washington, D.C.

This work was published by TechFreedom (TechFreedom.org), a non-profit public policy think tank based in Washington, D.C. TechFreedom’s mission is to unleash the progress of technology that improves the human condition and expands individual capacity to choose. We gratefully acknowledge the generous and unconditional support for this project provided by VeriSign, Inc. More information about this book is available at NextDigitalDecade.com

ISBN 978-1-4357-6786-7. © 2010 by TechFreedom, Washington, D.C. This work is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-sa/3.0/ or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA. Cover Designed by Jeff Fielding.

Table of contents: Foreword (Berin Szoka); 25 Years After .COM: Ten Questions (Berin Szoka); Contributors; Part I: The Big Picture & New Frameworks; Chapter 1: The Internet’s Impact on Culture & Society: Good or Bad? (Why We Must Resist the Temptation of Web 2.0, Andrew Keen; The Case for Internet Optimism, Part 1: Saving the Net from Its Detractors, Adam Thierer); Chapter 2: Is the Generative ...

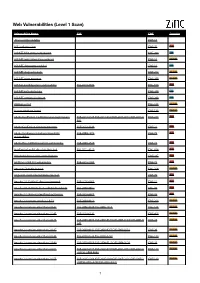

Web Vulnerabilities (Level 1 Scan)

| Vulnerability Name | CVE | CWE | Severity |
| --- | --- | --- | --- |
| .htaccess file readable | | CWE-16 | |
| ASP code injection | | CWE-95 | High |
| ASP.NET MVC version disclosure | | CWE-200 | Low |
| ASP.NET application trace enabled | | CWE-16 | Medium |
| ASP.NET debugging enabled | | CWE-16 | Low |
| ASP.NET diagnostic page | | CWE-200 | Medium |
| ASP.NET error message | | CWE-200 | Medium |
| ASP.NET padding oracle vulnerability | CVE-2010-3332 | CWE-310 | High |
| ASP.NET path disclosure | | CWE-200 | Low |
| ASP.NET version disclosure | | CWE-200 | Low |
| AWStats script | | CWE-538 | Medium |
| Access database found | | CWE-538 | Medium |
| Adobe ColdFusion 9 administrative login bypass | CVE-2013-0625, CVE-2013-0629, CVE-2013-0631, CVE-2013-0632 | CWE-287 | High |
| Adobe ColdFusion directory traversal | CVE-2013-3336 | CWE-22 | High |
| Adobe Coldfusion 8 multiple linked XSS vulnerabilities | CVE-2009-1872 | CWE-79 | High |
| Adobe Flex 3 DOM-based XSS vulnerability | CVE-2008-2640 | CWE-79 | High |
| AjaxControlToolkit directory traversal | CVE-2015-4670 | CWE-434 | High |
| Akeeba backup access control bypass | | CWE-287 | High |
| AmCharts SWF XSS vulnerability | CVE-2012-1303 | CWE-79 | High |
| Amazon S3 public bucket | | CWE-264 | Medium |
| AngularJS client-side template injection | | CWE-79 | High |
| Apache 2.0.39 Win32 directory traversal | CVE-2002-0661 | CWE-22 | High |
| Apache 2.0.43 Win32 file reading vulnerability | CVE-2003-0017 | CWE-20 | High |
| Apache 2.2.14 mod_isapi Dangling Pointer | CVE-2010-0425 | CWE-20 | High |
| Apache 2.x version equal to 2.0.51 | CVE-2004-0811 | CWE-264 | Medium |
| Apache 2.x version older than 2.0.43 | CVE-2002-0840, CVE-2002-1156 | CWE-538 | Medium |
| Apache 2.x version older than 2.0.45 | CVE-2003-0132 | CWE-400 | Medium |

Apache 2.x version ...

HTTP Parameter Pollution Vulnerabilities in Web Applications @ Blackhat Europe 2011 @

HTTP Parameter Pollution Vulnerabilities in Web Applications @ BlackHat Europe 2011 @. Marco ‘embyte’ Balduzzi, embyte(at)madlab(dot)it, http://www.madlab.it

Contents: 1 Introduction; 2 HTTP Parameter Pollution Attacks (2.1 Parameter Precedence in Web Applications; 2.2 Parameter Pollution; 2.2.1 Cross-Channel Pollution; 2.2.2 HPP to bypass CSRF tokens; 2.2.3 Bypass WAFs input validation checks); 3 Automated HPP Vulnerability Detection (3.1 Browser and Crawler Components; 3.2 P-Scan: Analysis of the Parameter Precedence; 3.3 V-Scan: Testing for HPP vulnerabilities; 3.3.1 Handling special cases; 3.4 Implementation; 3.4.1 Online Service; 3.5 Limitations); 4 Evaluation (4.1 HPP Prevalence in Popular Websites; 4.1.1 Parameter Precedence; 4.1.2 HPP Vulnerabilities; 4.1.3 False Positives; 4.2 Examples of Discovered Vulnerabilities; 4.2.1 Facebook Share; 4.2.2 CSRF via HPP Injection; 4.2.3 Shopping Carts; 4.2.4 Financial Institutions; 4.2.5 Tampering with Query Results); 5 Related work; 6 Conclusion; 7 Acknowledgments

1 Introduction

In the last twenty years, web applications have grown from simple, static pages to complex, full-fledged dynamic applications. Typically, these applications are built using heterogeneous technologies and consist of code that runs on the client (e.g., Javascript) and code that runs on the server (e.g., Java servlets). Even simple web applications today may accept and process hundreds of different HTTP parameters to be able to provide users with rich, interactive services. ...

Developer Report Testphp Vulnweb Com.Pdf

Acunetix Website Audit, 31 October, 2014. Developer Report. Generated by Acunetix WVS Reporter (v9.0 Build 20140422).

Scan of http://testphp.vulnweb.com:80/

Scan details

Scan information: start time 31/10/2014 12:40:34; finish time 31/10/2014 12:49:30; scan time 8 minutes, 56 seconds; profile Default.

Server information: responsive True; server banner nginx/1.4.1; server OS Unknown; server technologies PHP.

Threat level: Acunetix Threat Level 3. One or more high-severity type vulnerabilities have been discovered by the scanner. A malicious user can exploit these vulnerabilities and compromise the backend database and/or deface your website.

Alerts distribution: total alerts found 190; High 93; Medium 48; Low 8; Informational 41.

Knowledge base

WordPress web application: WordPress web application was detected in directory /bxss/adminPan3l.

List of file extensions: File extensions can provide information on what technologies are being used on this website. List of file extensions detected: php => 50 file(s); css => 4 file(s); swf => 1 file(s); fla => 1 file(s); conf => 1 file(s); htaccess => 1 file(s); htm => 1 file(s); xml => 8 file(s); name => 1 file(s); iml => 1 file(s); Log => 1 file(s); tn => 8 file(s); LOG => 1 file(s); bak => 2 file(s); txt => 2 file(s); html => 2 file(s); sql => 1 file(s); js => 1 file(s).

List of client scripts: These files contain Javascript code referenced from the website: /medias/js/common_functions.js

List of files with inputs: These files have at least one input (GET or POST). ...


[WEB APPLICATION PENETRATION TESTING] March 1, 2018

Contents

Information Gathering

1. Conduct Search Engine Discovery and Reconnaissance for Information Leakage
2. Fingerprint Web Server
3. Review Webserver Metafiles for Information Leakage
4. Enumerate Applications on Webserver
5. Review Webpage Comments and Metadata for Information Leakage
6. Identify Application Entry Points
7. Map execution paths through application
8. Fingerprint Web Application & Web Application Framework

Configuration and Deployment Management Testing

1. Test Network/Infrastructure Configuration
2. Test Application Platform Configuration ...

OWASP Annotated Application Security Verification Standard

OWASP Annotated Application Security Verification Standard Documentation, Release 3.0.0. Boy Baukema. Oct 03, 2017.

Browse by chapter: v1 Architecture, design and threat modelling; v2 Authentication verification requirements; v3 Session management verification requirements; v4 Access control verification requirements; v5 Malicious input handling verification requirements; v6 Output encoding / escaping; v7 Cryptography at rest verification requirements; v8 Error handling and logging verification requirements; v9 Data protection verification requirements; v10 Communications security verification requirements; v11 HTTP security configuration verification requirements; v12 Security configuration verification requirements; v13 Malicious controls verification requirements; v14 Internal security verification requirements; v15 Business logic verification requirements; v16 Files and resources verification requirements; v17 Mobile verification requirements; v18 Web services verification requirements; v19 Configuration; Level 1: Opportunistic; Level 2: Standard; Level 3: Advanced.

CHAPTER 1: v1 Architecture, design and threat modelling

1.1 All components are identified

Verify that all application components are identified and are known to be needed. Levels: 1, 2, 3

General

The purpose of this requirement is twofold:

1. Discover third party components that may contain (public) vulnerabilities.
2. Discover ‘dead code / dependencies’ that increase attack surface without any benefit in functionality.

Developers may be tempted to keep dead code / dependencies around ‘in case I ever need it’, however this is not an argument for a shippable product, such dead code / dependencies is better left on a source control system. ...

The Virtual Sphere 2.0: the Internet, the Public Sphere, and Beyond 230 Zizi Papacharissi

Routledge Handbook of Internet Politics. Edited by Andrew Chadwick and Philip N. Howard.

First published 2009 by Routledge, 2 Park Square, Milton Park, Abingdon, Oxon OX14 4RN. Simultaneously published in the USA and Canada by Routledge, 270 Madison Avenue, New York, NY 10016. Routledge is an imprint of the Taylor & Francis Group, an Informa business.

© 2009 Editorial selection and matter, Andrew Chadwick and Philip N. Howard; individual chapters the contributors. Typeset in Times New Roman by Taylor & Francis Books. Printed and bound in Great Britain by MPG Books Ltd, Bodmin.

All rights reserved. No part of this book may be reprinted or reproduced or utilized in any form or by any electronic, mechanical, or other means, now known or hereafter invented, including photocopying and recording, or in any information storage or retrieval system, without permission in writing from the publishers.

British Library Cataloguing in Publication Data: A catalogue record for this book is available from the British Library.

Library of Congress Cataloging in Publication Data: Routledge handbook of Internet politics / edited by Andrew Chadwick and Philip N. Howard. p. cm. Includes bibliographical references and index. 1. Internet – Political aspects. 2. Political participation – computer network resources. 3. Communication in politics – computer network resources. I. Chadwick, Andrew. II. Howard, Philip N. III. Title: Handbook of Internet Politics. IV. Title: Internet Politics. HM851.R6795 2008 320.0285'4678 – dc22 2008003045

ISBN 978-0-415-42914-6 (hbk). ISBN 978-0-203-96254-1 (ebk).

Contents: List of figures; List of tables; List of contributors; Acknowledgments; 1 Introduction: new directions in internet politics research (Andrew Chadwick and Philip N. ...

Defending an Open, Global, Secure, and Resilient Internet

Defending an Open, Global, Secure, and Resilient Internet. Independent Task Force Report No. 70.

The Council on Foreign Relations sponsors Independent Task Forces to assess issues of current and critical importance to U.S. foreign policy and provide policymakers with concrete judgments and recommendations. Diverse in backgrounds and perspectives, Task Force members aim to reach a meaningful consensus on policy through private and nonpartisan deliberations. Once launched, Task Forces are independent of CFR and solely responsible for the content of their reports. Task Force members are asked to join a consensus signifying that they endorse “the general policy thrust and judgments reached by the group, though not necessarily every finding and recommendation.” Each Task Force member also has the option of putting forward an additional or a dissenting view. Members’ affiliations are listed for identification purposes only and do not imply institutional endorsement. Task Force observers participate in discussions, but are not asked to join the consensus.

Task Force Members: Elana Berkowitz (McKinsey & Company, Inc.); Bob Boorstin (Google, Inc.); Jeff A. Brueggeman (AT&T); Peter Matthews Cleveland (Intel Corporation); Esther Dyson (EDventure Holdings, Inc.); Martha Finnemore (George Washington University); Patrick Gorman (Bank of America); Michael V. Hayden (Chertoff Group); Eugene J. Huang; Craig James Mundie (Microsoft Corporation); John D. Negroponte (McLarty Associates); Joseph S. Nye Jr. (Harvard University); Samuel J. Palmisano (IBM Corporation); Neal A. Pollard (PricewaterhouseCoopers LLP); Elliot J. Schrage (Facebook); Adam Segal (Council on Foreign Relations); Anne-Marie Slaughter (Princeton University); James B. Steinberg ...

John D. Negroponte and Samuel J. ...

European Capability for Situational Awareness

European Capability for Situational Awareness: A study to evaluate the feasibility of an ECSA in relation to internet censorship and attacks which threaten human rights. FINAL REPORT. A study prepared for the European Commission DG Communications Networks, Content & Technology. Digital Agenda for Europe.

This study was carried out for the European Commission by Free Press Unlimited (Weesperstraat 3, 1018 DN Amsterdam, The Netherlands) and Ecorys NL (Watermanweg 44, 3067 GG Rotterdam, The Netherlands) and subcontractors:

Internal identification: Contract number: 30-CE-0606300/00-14, SMART 2013/N004

DISCLAIMER: By the European Commission, Directorate-General of Communications Networks, Content & Technology. The information and views set out in this publication are those of the author(s) and do not necessarily reflect the official opinion of the Commission. The Commission does not guarantee the accuracy of the data included in this study. Neither the Commission nor any person acting on the Commission’s behalf may be held responsible for the use which may be made of the information contained therein.

ISBN 978-92-79-50396-2. DOI: 10.2759/338748. © European Union, 2015. All rights reserved. Certain parts are licensed under conditions to the EU. Reproduction is authorised provided the source is acknowledged.

Table of contents: Glossary; Abstract ...

Transcript 061106.Pdf (393.64

FEDERAL TRADE COMMISSION

INDEX

| Welcoming remarks | Page |
| --- | --- |
| Ms. Harrington | 3 |
| Chairman Majoras | 5 |

| Panel/presentation number | Page |
| --- | --- |
| 1 | 19 |
| 2 | 70 |
| 3 | 153 |
| 4 | 203 |
| 5 | 254 |

For The Record, Inc. (301) 870-8025 - www.ftrinc.net - (800) 921-5555

IN RE: PROTECTING CONSUMERS IN THE NEXT TECH-ADE. Matter No. P064101

MONDAY, NOVEMBER 6, 2006

GEORGE WASHINGTON UNIVERSITY, LISNER AUDITORIUM, 730 21st Street, N.W., Washington, D.C.

The above-entitled workshop commenced, pursuant to notice, at 9:00 a.m., reported by Debra L. Maheux.

PROCEEDINGS

MS. HARRINGTON: Good morning, and welcome to Protecting Consumers in The Next Tech-Ade. It's my privilege to introduce our Chairman, Deborah Platt Majoras, who is leading the Federal Trade Commission into the next Tech-ade. She has been incredibly supportive of all of the efforts to make these hearings happen, and I'm just very proud that she's our boss, and I'm very happy to introduce her to kick things off. Thank you.

CHAIRMAN MAJORAS: Thank you very much, and good morning, everyone. ...
