HTTP Parameter Pollution Vulnerabilities in Web Applications @ Blackhat Europe 2011

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Web Hacking 101 How to Make Money Hacking Ethically

Web Hacking 101 How to Make Money Hacking Ethically Peter Yaworski © 2015 - 2016 Peter Yaworski Tweet This Book! Please help Peter Yaworski by spreading the word about this book on Twitter! The suggested tweet for this book is: Can’t wait to read Web Hacking 101: How to Make Money Hacking Ethically by @yaworsk #bugbounty The suggested hashtag for this book is #bugbounty. Find out what other people are saying about the book by clicking on this link to search for this hashtag on Twitter: https://twitter.com/search?q=#bugbounty For Andrea and Ellie. Thanks for supporting my constant roller coaster of motivation and confidence. This book wouldn’t be what it is if it were not for the HackerOne Team, thank you for all the support, feedback and work that you contributed to make this book more than just an analysis of 30 disclosures. Contents 1. Foreword ....................................... 1 2. Attention Hackers! .................................. 3 3. Introduction ..................................... 4 How It All Started ................................. 4 Just 30 Examples and My First Sale ........................ 5 Who This Book Is Written For ........................... 7 Chapter Overview ................................. 8 Word of Warning and a Favour .......................... 10 4. Background ...................................... 11 5. HTML Injection .................................... 14 Description ....................................... 14 Examples ........................................ 14 1. Coinbase Comments ............................. -

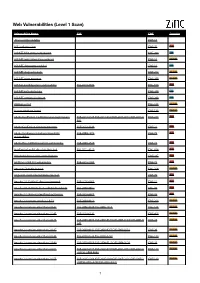

Web Vulnerabilities (Level 1 Scan)

| Vulnerability Name | CVE | CWE | Severity |
| --- | --- | --- | --- |
| .htaccess file readable | | CWE-16 | |
| ASP code injection | | CWE-95 | High |
| ASP.NET MVC version disclosure | | CWE-200 | Low |
| ASP.NET application trace enabled | | CWE-16 | Medium |
| ASP.NET debugging enabled | | CWE-16 | Low |
| ASP.NET diagnostic page | | CWE-200 | Medium |
| ASP.NET error message | | CWE-200 | Medium |
| ASP.NET padding oracle vulnerability | CVE-2010-3332 | CWE-310 | High |
| ASP.NET path disclosure | | CWE-200 | Low |
| ASP.NET version disclosure | | CWE-200 | Low |
| AWStats script | | CWE-538 | Medium |
| Access database found | | CWE-538 | Medium |
| Adobe ColdFusion 9 administrative login bypass | CVE-2013-0625 CVE-2013-0629 CVE-2013-0631 CVE-2013-0632 | CWE-287 | High |
| Adobe ColdFusion directory traversal | CVE-2013-3336 | CWE-22 | High |
| Adobe Coldfusion 8 multiple linked XSS vulnerabilities | CVE-2009-1872 | CWE-79 | High |
| Adobe Flex 3 DOM-based XSS vulnerability | CVE-2008-2640 | CWE-79 | High |
| AjaxControlToolkit directory traversal | CVE-2015-4670 | CWE-434 | High |
| Akeeba backup access control bypass | | CWE-287 | High |
| AmCharts SWF XSS vulnerability | CVE-2012-1303 | CWE-79 | High |
| Amazon S3 public bucket | | CWE-264 | Medium |
| AngularJS client-side template injection | | CWE-79 | High |
| Apache 2.0.39 Win32 directory traversal | CVE-2002-0661 | CWE-22 | High |
| Apache 2.0.43 Win32 file reading vulnerability | CVE-2003-0017 | CWE-20 | High |
| Apache 2.2.14 mod_isapi Dangling Pointer | CVE-2010-0425 | CWE-20 | High |
| Apache 2.x version equal to 2.0.51 | CVE-2004-0811 | CWE-264 | Medium |
| Apache 2.x version older than 2.0.43 | CVE-2002-0840 CVE-2002-1156 | CWE-538 | Medium |
| Apache 2.x version older than 2.0.45 | CVE-2003-0132 | CWE-400 | Medium |
| Apache 2.x version - | | | |

Developer Report Testphp Vulnweb Com.Pdf

Acunetix Website Audit 31 October, 2014 Developer Report Generated by Acunetix WVS Reporter (v9.0 Build 20140422) Scan of http://testphp.vulnweb.com:80/ Scan details Scan information Start time 31/10/2014 12:40:34 Finish time 31/10/2014 12:49:30 Scan time 8 minutes, 56 seconds Profile Default Server information Responsive True Server banner nginx/1.4.1 Server OS Unknown Server technologies PHP Threat level Acunetix Threat Level 3 One or more high-severity type vulnerabilities have been discovered by the scanner. A malicious user can exploit these vulnerabilities and compromise the backend database and/or deface your website. Alerts distribution Total alerts found 190 High 93 Medium 48 Low 8 Informational 41 Knowledge base WordPress web application WordPress web application was detected in directory /bxss/adminPan3l. List of file extensions File extensions can provide information on what technologies are being used on this website. List of file extensions detected: - php => 50 file(s) - css => 4 file(s) - swf => 1 file(s) - fla => 1 file(s) - conf => 1 file(s) - htaccess => 1 file(s) - htm => 1 file(s) - xml => 8 file(s) - name => 1 file(s) - iml => 1 file(s) - Log => 1 file(s) - tn => 8 file(s) - LOG => 1 file(s) - bak => 2 file(s) - txt => 2 file(s) - html => 2 file(s) - sql => 1 file(s) Acunetix Website Audit 2 - js => 1 file(s) List of client scripts These files contain Javascript code referenced from the website. - /medias/js/common_functions.js List of files with inputs These files have at least one input (GET or POST). -

[WEB APPLICATION PENETRATION TESTING] March 1, 2018

[WEB APPLICATION PENETRATION TESTING] March 1, 2018 Contents Information Gathering .................................................................................................................................. 4 1. Conduct Search Engine Discovery and Reconnaissance for Information Leakage .......................... 4 2. Fingerprint Web Server ..................................................................................................................... 5 3. Review Webserver Metafiles for Information Leakage .................................................................... 7 4. Enumerate Applications on Webserver ............................................................................................. 8 5. Review Webpage Comments and Metadata for Information Leakage ........................................... 11 6. Identify Application Entry Points ................................................................................................... 11 7. Map execution paths through application ....................................................................................... 13 8. Fingerprint Web Application & Web Application Framework ...................................................... 14 Configuration and Deployment Management Testing ................................................................................ 18 1. Test Network/Infrastructure Configuration..................................................................................... 18 2. Test Application Platform Configuration....................................................................................... -

OWASP Annotated Application Security Verification Standard

OWASP Annotated Application Security Verification Standard Documentation Release 3.0.0 Boy Baukema Oct 03, 2017 Browse by chapter: 1 v1 Architecture, design and threat modelling1 2 v2 Authentication verification requirements3 3 v3 Session management verification requirements 13 4 v4 Access control verification requirements 19 5 v5 Malicious input handling verification requirements 23 6 v6 Output encoding / escaping 27 7 v7 Cryptography at rest verification requirements 29 8 v8 Error handling and logging verification requirements 31 9 v9 Data protection verification requirements 35 10 v10 Communications security verification requirements 39 11 v11 HTTP security configuration verification requirements 43 12 v12 Security configuration verification requirements 47 13 v13 Malicious controls verification requirements 49 14 v14 Internal security verification requirements 51 15 v15 Business logic verification requirements 53 16 v16 Files and resources verification requirements 55 17 v17 Mobile verification requirements 59 18 v18 Web services verification requirements 63 19 v19 Configuration 65 20 Level 1: Opportunistic 67 i 21 Level 2: Standard 69 22 Level 3: Advanced 71 ii CHAPTER 1 v1 Architecture, design and threat modelling 1.1 All components are identified Verify that all application components are identified and are known to be needed. Levels: 1, 2, 3 General The purpose of this requirement is twofold: 1. Discover third party components that may contain (public) vulnerabilities. 2. Discover ‘dead code / dependencies’ that increase attack surface without any benefit in functionality. Developers may be tempted to keep dead code / dependencies around ‘in case I ever need it’, however this is not an argument for a shippable product, such dead code / dependencies is better left on a source control system. -

Web Applications Security

UNIVERSITY OF NICE - SOPHIA ANTIPOLIS Thèse d’Habilitation à Diriger des Recherches presented by Davide BALZAROTTI Web Applications Security Submitted in total fulfillment of the requirements of the degree of Habilitation à Diriger des Recherches Committee: Reviewers: Evangelos Markatos - University of Crete Frank Piessens - Katholieke Universiteit Leuven Frank Kargl - University of Ulm Examinators: Marc Dacier - Qatar Computing Research Institute Roberto Di Pietro - University of Padova Acknowledgments Even though this document is mostly written in first person, every single “I” should be replaced by a very large “We” to pay the due respect to everyone who made these hundred-or-so pages possible. Let me start with my two mentors, who took me fresh after my Ph.D. and taught me how to do research and how to supervise students. The work of a professor requires good ideas, but also strong management skills, and a good number of tricks of the trade. Giovanni Vigna, at UCSB, was the first to introduce me to web security and to teach me how to do things right. Engin Kirda, at Eurecom, filled up the missing part – teaching me how to find and manage money and how to supervise other Ph.D. students. A big thanks to both of them. The second group of people that made this possible are the students I was lucky to work with and supervise in the past five years. Giancarlo Pellegrino, Jelena Isacenkova, Davide Canali, Jonas Zaddach, Mariano Graziano, Andrei Costin, and Onur Catakoglu. They are not all represented in this document, but it is their hard work that transformed some of the papers that are part of this dissertation from an idea to a real scientific study. -

Developer Report

Acunetix Website Audit 6 May, 2014 Developer Report Generated by Acunetix WVS Reporter (v9.5 Build 20140505) Scan of http://testphp.vulnweb.com:80/ Scan details Scan information Start time 06-May-14 09:44:49 Finish time 06-May-14 09:58:15 Scan time 13 minutes, 26 seconds Profile Default Server information Responsive True Server banner nginx/1.4.1 Server OS Unknown Server technologies PHP Threat level Acunetix Threat Level 3 One or more high-severity type vulnerabilities have been discovered by the scanner. A malicious user can exploit these vulnerabilities and compromise the backend database and/or deface your website. Alerts distribution Total alerts found 249 High 125 Medium 56 Low 17 Informational 51 Knowledge base List of file extensions File extensions can provide information on what technologies are being used on this website. List of file extensions detected: - php => 45 file(s) - css => 3 file(s) - swf => 1 file(s) - fla => 1 file(s) - conf => 1 file(s) - htm => 1 file(s) - xml => 8 file(s) - name => 1 file(s) - iml => 1 file(s) - Log => 1 file(s) - htaccess => 1 file(s) - tn => 8 file(s) - LOG => 1 file(s) - bak => 2 file(s) - txt => 2 file(s) - html => 2 file(s) - sql => 1 file(s) List of client scripts These files contain Javascript code referenced from the website. Acunetix Website Audit 2 - /medias/js/common_functions.js List of files with inputs These files have at least one input (GET or POST). - / - 1 inputs - /userinfo.php - 4 inputs - /search.php - 2 inputs - /cart.php - 4 inputs - /artists.php - 1 inputs - /guestbook.php -

Securing Web Applications from Injection and Logic Vulnerabilities: Approaches and Challenges

Information and Software Technology 74 (2016) 160–180 Contents lists available at ScienceDirect Information and Software Technology journal homepage: www.elsevier.com/locate/infsof Securing web applications from injection and logic vulnerabilities: Approaches and challenges G. Deepa ∗, P. Santhi Thilagam Department of Computer Science and Engineering, National Institute of Technology Karnataka, Surathkal, India

Article info. Article history: Received 6 June 2015; Revised 17 February 2016; Accepted 18 February 2016; Available online 27 February 2016. Keywords: SQL injection; Cross-site scripting; Business logic vulnerabilities; Application logic vulnerabilities; Web application security; Injection flaws.

Abstract. Context: Web applications are trusted by billions of users for performing day-to-day activities. Accessibility, availability and omnipresence of web applications have made them a prime target for attackers. A simple implementation flaw in the application could allow an attacker to steal sensitive information and perform adversary actions, and hence it is important to secure web applications from attacks. Defensive mechanisms for securing web applications from the flaws have received attention from both academia and industry. Objective: The objective of this literature review is to summarize the current state of the art for securing web applications from major flaws such as injection and logic flaws. Though different kinds of injection flaws exist, the scope is restricted to SQL Injection (SQLI) and Cross-site scripting (XSS), since they are rated as the top most threats by different security consortiums. Method: The relevant articles recently published are identified from well-known digital libraries, and a total of 86 primary studies are considered. -

Comptia® Pentest+Cert Guide

CompTIA® PenTest+ Cert Guide Omar Santos Ron Taylor

Editor-in-Chief: Mark Taub. Product Line Manager: Brett Bartow. Acquisitions Editor: Paul Carlstroem. Managing Editor: Sandra Schroeder. Development Editor: Christopher Cleveland. Project Editor: Mandie Frank. Copy Editor: Kitty Wilson. Technical Editors: Chris McCoy, Benjamin Taylor.

Copyright © 2019 by Pearson Education, Inc. All rights reserved. No part of this book shall be reproduced, stored in a retrieval system, or transmitted by any means, electronic, mechanical, photocopying, recording, or otherwise, without written permission from the publisher. No patent liability is assumed with respect to the use of the information contained herein. Although every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions. Nor is any liability assumed for damages resulting from the use of the information contained herein. ISBN-13: 978-0-7897-6035-7 ISBN-10: 0-7897-6035-5 Library of Congress Control Number: 2018956261 01 18

Trademarks: All terms mentioned in this book that are known to be trademarks or service marks have been appropriately capitalized. Pearson IT Certification cannot attest to the accuracy of this information. Use of a term in this book should not be regarded as affecting the validity of any trademark or service mark. MICROSOFT® WINDOWS®, AND MICROSOFT OFFICE® ARE REGISTERED TRADEMARKS OF THE MICROSOFT CORPORATION IN THE U.S.A. AND OTHER COUNTRIES. THIS BOOK IS NOT SPONSORED OR ENDORSED BY OR AFFILIATED WITH THE MICROSOFT CORPORATION.

Warning and Disclaimer: This book is designed to provide information about the CompTIA PenTest+ exam. -

Development and Implementation of Secure Web Applications

DEVELOPMENT AND IMPLEMENTATION OF SECURE WEB APPLICATIONS AUGUST 2011 Acknowledgements CPNI would like to acknowledge and thank Daniel Martin and NGS Secure for their help in the preparation of this document. Abstract This guide is intended for professional web application developers and technical project managers who want to understand the current threats and trends in the web application security realm, and ensure that the systems they are building will not expose their organisations to an excessive level of risk. Disclaimer: Reference to any specific commercial product, process or service by trade name, trademark, manufacturer, or otherwise, does not constitute or imply its endorsement, recommendation, or favoring by CPNI. The views and opinions of authors expressed within this document shall not be used for advertising or product endorsement purposes. To the fullest extent permitted by law, CPNI accepts no liability for any loss or damage (whether direct, indirect or consequential and including, but not limited to, loss of profits or anticipated profits, loss of data, business or goodwill) incurred by any person and howsoever caused arising from or connected with any error or omission in this document or from any person acting, omitting to act or refraining from acting upon, or otherwise using, the information contained in this document or its references. You should make your own judgment as regards use of this document and seek independent professional advice on your particular circumstances. Executive summary Document scope This document is a practical guide on how to design and implement secure web applications. Any such analysis must start with an understanding of the risks to which your application will be exposed. -

Cheat Sheet Book

OWASP Cheat Sheets Martin Woschek, [email protected] April 9, 2015 Contents I Developer Cheat Sheets (Builder) 11 1 Authentication Cheat Sheet 12 1.1 Introduction . 12 1.2 Authentication General Guidelines . 12 1.3 Use of authentication protocols that require no password . 17 1.4 Session Management General Guidelines . 19 1.5 Password Managers . 19 1.6 Authors and Primary Editors . 19 1.7 References . 19 2 Choosing and Using Security Questions Cheat Sheet 20 2.1 Introduction . 20 2.2 The Problem . 20 2.3 Choosing Security Questions and/or Identity Data . 20 2.4 Using Security Questions . 23 2.5 Related Articles . 25 2.6 Authors and Primary Editors . 25 2.7 References . 25 3 Clickjacking Defense Cheat Sheet 26 3.1 Introduction . 26 3.2 Defending with Content Security Policy frame-ancestors directive . 26 3.3 Defending with X-Frame-Options Response Headers . 26 3.4 Best-for-now Legacy Browser Frame Breaking Script . 28 3.5 window.confirm() Protection . 29 3.6 Non-Working Scripts . 29 3.7 Authors and Primary Editors . 32 3.8 References . 32 4 C-Based Toolchain Hardening Cheat Sheet 34 4.1 Introduction . 34 4.2 Actionable Items . 34 4.3 Build Configurations . 34 4.4 Library Integration . 36 4.5 Static Analysis . 37 4.6 Platform Security . 38 4.7 Authors and Editors . 38 4.8 References . 38 5 Cross-Site Request Forgery (CSRF) Prevention Cheat Sheet 40 5.1 Introduction . 40 5.2 Prevention Measures That Do NOT Work . 40 5.3 General Recommendation: Synchronizer Token Pattern . 41 5.4 CSRF Prevention without a Synchronizer Token . -

Client-Side Security in the Modern Web

Client-side Security in the modern web Mauro Gentile Security Consultant @ Minded Security [email protected] OWASP EU Tour 2013 - Rome Rome, 27th June 2013 Copyright © 2008 - The OWASP Foundation Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License. The OWASP Foundation http://www.owasp.org About me Mauro Gentile MSc. in Computer Engineering Application Security Consultant @ Minded Security Creator of snuck – open source automatic XSS filter evasion tool Security research and vulnerabilities @ http://www.sneaked.net Twitter: @sneak_ Keywords: web application security, web browser security OWASP EU Tour 2013 - Rome Current state of the Web It’s a matter of fact that the Web is evolving Modern web applications actually resemble desktop apps Small granularity of exchanged data through Ajax implies negligible response times and user satisfaction We are moving towards more code client-side Web browsers are the doors to the Web Great user-experience Perform tasks faster than ever Web browsers offer sensational capabilities HTML5 CORS JavaScript OWASP EU Tour 2013 - Rome Clearly, expanding capabilities in any type of application generates progress, but possibly enlarges the possibilities to exploit new features with malicious intent. OWASP EU Tour 2013 - Rome Objectives and Motivation Create awareness about possible security issues Show how attack vectors are changing Discuss real world attack examples Describe interesting countermeasures: Advantages Drawbacks OWASP EU Tour 2013 - Rome Reflected and Stored XSS Smart and compact definition: «External JavaScript running in our domain» Developer’s perspective: Dev. A: «My blacklist-based XSS filter is so robust that there is no chance for you to bypass it!» Dev.