Cheat Sheet Book

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Web Hacking 101 How to Make Money Hacking Ethically

Web Hacking 101: How to Make Money Hacking Ethically. Peter Yaworski. © 2015 - 2016 Peter Yaworski. Tweet This Book! Please help Peter Yaworski by spreading the word about this book on Twitter! The suggested tweet for this book is: Can’t wait to read Web Hacking 101: How to Make Money Hacking Ethically by @yaworsk #bugbounty The suggested hashtag for this book is #bugbounty. Find out what other people are saying about the book by clicking on this link to search for this hashtag on Twitter: https://twitter.com/search?q=#bugbounty For Andrea and Ellie. Thanks for supporting my constant roller coaster of motivation and confidence. This book wouldn’t be what it is if it were not for the HackerOne Team, thank you for all the support, feedback and work that you contributed to make this book more than just an analysis of 30 disclosures. Contents: 1. Foreword. 2. Attention Hackers! 3. Introduction: How It All Started; Just 30 Examples and My First Sale; Who This Book Is Written For; Chapter Overview; Word of Warning and a Favour. 4. Background. 5. HTML Injection: Description; Examples; 1. Coinbase Comments -

Modern Web Application Frameworks

MASARYKOVA UNIVERZITA FAKULTA INFORMATIKY Modern Web Application Frameworks MASTER’S THESIS Bc. Jan Pater Brno, autumn 2015 Declaration Hereby I declare, that this paper is my original authorial work, which I have worked out by my own. All sources, references and literature used or excerpted during elaboration of this work are properly cited and listed in complete reference to the due source. Bc. Jan Pater Advisor: doc. RNDr. Petr Sojka, Ph.D. Abstract The aim of this paper was the analysis of major web application frameworks and the design and implementation of applications for website content management of Laboratory of Multimedia Electronic Applications and Film festival organized by Faculty of Informatics. The paper introduces readers into web application development problematic and focuses on characteristics and specifics of ten selected modern web application frameworks, which were described and compared on the basis of relevant criteria. Practical part of the paper includes the selection of a suitable framework for implementation of both applications and describes their design, development process and deployment within the laboratory. Keywords Web application, Framework, PHP, Java, Ruby, Python, Laravel, Nette, Phalcon, Rails, Padrino, Django, Flask, Grails, Vaadin, Play, LEMMA, Film festival Acknowledgement I would like to show my gratitude to my supervisor doc. RNDr. Petr Sojka, Ph.D. for his advice and comments on this thesis as well as to RNDr. Lukáš Hejtmánek, Ph.D. for his assistance with application deployment and server setup. Many thanks also go to Ondřej Tom for his valuable help and advice during application development. -

Michigan Strategic Fund

MICHIGAN STRATEGIC FUND MEMORANDUM DATE: March 12, 2021 TO: The Honorable Gretchen Whitmer, Governor of Michigan; Members of the Michigan Legislature FROM: Mark Burton, President, Michigan Strategic Fund SUBJECT: FY 2020 MSF/MEDC Annual Report The Michigan Strategic Fund (MSF) is required to submit an annual report to the Governor and the Michigan Legislature summarizing activities and program spending for the previous fiscal year. This requirement is contained within the Michigan Strategic Fund Act (Public Act 270 of 1984) and budget boilerplate. Attached you will find the annual report for the MSF and the Michigan Economic Development Corporation (MEDC) as required by Section 1004 of Public Act 166 of 2020 as well as the consolidated MSF Act reporting requirements found in Section 125.2009 of the MSF Act. Additionally, you will find an executive summary at the forefront of the report that provides a year-in-review snapshot of activities, including COVID-19 relief programs to support Michigan businesses and communities. To further consolidate legislative reporting, the attachment includes the following budget boilerplate reports: • Michigan Business Development Program and Michigan Community Revitalization Program amendments (Section 1006) • Corporate budget, revenue, expenditures/activities and state vs. corporate FTEs (Section 1007) • Jobs for Michigan Investment Fund (Section 1010) • Michigan Film incentives status (Section 1032) • Michigan Film & Digital Media Office activities (Section 1033) • Business incubators and accelerators annual report (Section 1034) The following programs are not included in the FY 2020 report: • The Community College Skilled Trades Equipment Program was created in 2015 to provide funding to community colleges to purchase equipment required for educational programs in high-wage, high-skill, and high-demand occupations. -

DNS Spoofing 2

Professor Vahab COMP 424 13 November 2016 DNS Spoofing DNS spoofing, also known as DNS cache poisoning, is a widely used man-in-the-middle attack that exploits vulnerabilities in the Domain Name System to return a false IP address and route the user to a malicious domain. Whenever a machine contacts a domain name such as www.bankofamerica.com, it must first contact its DNS server, which converts the human-readable domain name into IP addresses and responds with one or more addresses where the machine can reach the website. The computer is then able to connect directly to one of those IP addresses. If an attacker is able to gain control of a DNS server, they can change some of its records, for example routing Bank of America’s website to an attacker’s IP address. At that location, the attacker is then able to steal the unsuspecting user’s credentials and account information. Attackers use spam and other forms of attack to deliver malware that changes DNS settings and installs a rogue Certificate Authority. The DNS changes point to the attacker's own DNS name server, so that when users access the web they are directed to proxy servers instead of the legitimate sites. Attackers can also blacklist domains and frustrate users in their day-to-day activities: all traffic to blacklisted domains is dropped instead of being forwarded to its intended destination. Because the rogue Certificate Authority is trusted, the system shows no sign that an attack is taking place or ever took place. -

Secure Shell: Its Significance in Networking (SSH)

International Journal of Application or Innovation in Engineering & Management (IJAIEM) Web Site: www.ijaiem.org Email: [email protected] Volume 4, Issue 3, March 2015 ISSN 2319 - 4847 SECURE SHELL: ITS SIGNIFICANCE IN NETWORKING (SSH) ANOOSHA GARIMELLA, D. RAKESH KUMAR 1. B. TECH, COMPUTER SCIENCE AND ENGINEERING Student, 3rd year-2nd Semester GITAM UNIVERSITY Visakhapatnam, Andhra Pradesh India 2. Assistant Professor Computer Science and Engineering GITAM UNIVERSITY Visakhapatnam, Andhra Pradesh India ABSTRACT This paper is focused on the evolution of SSH, the need for SSH, working of SSH, its major components and features of SSH. As the number of users over the Internet is increasing, there is a greater threat of your data being vulnerable. Secure Shell (SSH) Protocol provides a secure method for remote login and other secure network services over an insecure network. The SSH protocol has been designed to support many features along with proper security. This architecture with the help of its inbuilt layers which are independent of each other provides user authentication, integrity, and confidentiality, connection-oriented end to end delivery, multiplexes encrypted tunnel into several logical channels, provides datagram delivery across multiple networks and may optionally provide compression. Here, we have also described in detail what every layer of the architecture does along with the connection establishment. Some of the threats which SSH can encounter, applications, advantages and disadvantages have also been mentioned in this document. Keywords: SSH, Cryptography, Port Forwarding, Secure SSH Tunnel, Key Exchange, IP spoofing, Connection-Hijacking. 1. INTRODUCTION SSH Secure Shell was first created in 1995 by Tatu Ylonen with the release of version 1.0 of SSH Secure Shell and the Internet Draft “The SSH Secure Shell Remote Login Protocol”. -

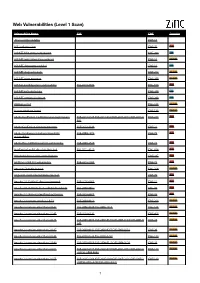

Web Vulnerabilities (Level 1 Scan)

| Vulnerability Name | CVE | CWE | Severity |
| --- | --- | --- | --- |
| .htaccess file readable | | CWE-16 | |
| ASP code injection | | CWE-95 | High |
| ASP.NET MVC version disclosure | | CWE-200 | Low |
| ASP.NET application trace enabled | | CWE-16 | Medium |
| ASP.NET debugging enabled | | CWE-16 | Low |
| ASP.NET diagnostic page | | CWE-200 | Medium |
| ASP.NET error message | | CWE-200 | Medium |
| ASP.NET padding oracle vulnerability | CVE-2010-3332 | CWE-310 | High |
| ASP.NET path disclosure | | CWE-200 | Low |
| ASP.NET version disclosure | | CWE-200 | Low |
| AWStats script | | CWE-538 | Medium |
| Access database found | | CWE-538 | Medium |
| Adobe ColdFusion 9 administrative login bypass | CVE-2013-0625, CVE-2013-0629, CVE-2013-0631, CVE-2013-0632 | CWE-287 | High |
| Adobe ColdFusion directory traversal | CVE-2013-3336 | CWE-22 | High |
| Adobe Coldfusion 8 multiple linked XSS vulnerabilities | CVE-2009-1872 | CWE-79 | High |
| Adobe Flex 3 DOM-based XSS vulnerability | CVE-2008-2640 | CWE-79 | High |
| AjaxControlToolkit directory traversal | CVE-2015-4670 | CWE-434 | High |
| Akeeba backup access control bypass | | CWE-287 | High |
| AmCharts SWF XSS vulnerability | CVE-2012-1303 | CWE-79 | High |
| Amazon S3 public bucket | | CWE-264 | Medium |
| AngularJS client-side template injection | | CWE-79 | High |
| Apache 2.0.39 Win32 directory traversal | CVE-2002-0661 | CWE-22 | High |
| Apache 2.0.43 Win32 file reading vulnerability | CVE-2003-0017 | CWE-20 | High |
| Apache 2.2.14 mod_isapi Dangling Pointer | CVE-2010-0425 | CWE-20 | High |
| Apache 2.x version equal to 2.0.51 | CVE-2004-0811 | CWE-264 | Medium |
| Apache 2.x version older than 2.0.43 | CVE-2002-0840, CVE-2002-1156 | CWE-538 | Medium |
| Apache 2.x version older than 2.0.45 | CVE-2003-0132 | CWE-400 | Medium |
| Apache 2.x version - | | | |

Code Review Guide

CODE REVIEW GUIDE 3.0 RELEASE Project leaders: Mr. John Doe and Jane Doe Creative Commons (CC) Attribution Free Version at: https://www.owasp.org 1 2 F I 1 Forward - Eoin Keary Introduction How to use the Code Review Guide 7 8 10 2 Secure Code Review 11 Framework Specific Configuration: Jetty 16 2.1 Why does code have vulnerabilities? 12 Framework Specific Configuration: JBoss AS 17 2.2 What is secure code review? 13 Framework Specific Configuration: Oracle WebLogic 18 2.3 What is the difference between code review and secure code review? 13 Programmatic Configuration: JEE 18 2.4 Determining the scale of a secure source code review? 14 Microsoft IIS 20 2.5 We can’t hack ourselves secure 15 Framework Specific Configuration: Microsoft IIS 40 2.6 Coupling source code review and penetration testing 19 Programmatic Configuration: Microsoft IIS 43 2.7 Implicit advantages of code review to development practices 20 2.8 Technical aspects of secure code review 21 2.9 Code reviews and regulatory compliance 22 5 A1 3 Injection 51 Injection 52 Blind SQL Injection 53 Methodology 25 Parameterized SQL Queries 53 3.1 Factors to Consider when Developing a Code Review Process 25 Safe String Concatenation? 53 3.2 Integrating Code Reviews in the S-SDLC 26 Using Flexible Parameterized Statements 54 3.3 When to Code Review 27 PHP SQL Injection 55 3.4 Security Code Review for Agile and Waterfall Development 28 JAVA SQL Injection 56 3.5 A Risk Based Approach to Code Review 29 .NET Sql Injection 56 3.6 Code Review Preparation 31 Parameter collections 57 3.7 Code Review Discovery and Gathering the Information 32 3.8 Static Code Analysis 35 3.9 Application Threat Modeling 39 4.3.2. -

iOS Jailbreak (Jailbreak d'iOS)

iOS jailbreaking (French: jailbreak d'iOS, débridage d'iOS), also called unlocking, is a process that allows devices running Apple's mobile operating system iOS (such as the iPad, the iPhone, the iPod touch and, more recently, the Apple TV) to obtain full access in order to unlock all the features of the operating system, thereby removing the restrictions imposed by Apple. Once iOS is jailbroken, its users are able to download additional applications, extensions and themes that are not offered on Apple's official application store, the App Store, through installers such as Cydia. A tool similar to Blackra1n, Limera1n, was subsequently developed and published when iOS version 4.1 was released, despite a problem present in the first version of the tool. Tools such as redsn0w, Pwnage Tool and Sn0wbreeze, as well as GreenPois0n and Absinthe (for the iPhone 4S and the iPad 2), make it possible to jailbreak more recent versions of the device. Since 6 January 2013, it has been possible to jailbreak devices running iOS 6, up to version 6.1.2, by means of a tool known as Evasi0n. This method applies to all devices that support iOS 6 except the Apple TV. Versions 6.1.2 through 6.1.6 were jailbroken in January 2014 thanks to the software -

Multi-Step Scanning in ZAP Handling Sequences in OWASP ZAP

M.Sc. Thesis Master of Science in Engineering Multi-step scanning in ZAP Handling sequences in OWASP ZAP Lars Kristensen (s072662) Stefan Østergaard Pedersen (s072653) Kongens Lyngby 2014 DTU Compute Department of Applied Mathematics and Computer Science Technical University of Denmark Matematiktorvet Building 303B 2800 Kongens Lyngby, Denmark Phone +45 4525 3031 [email protected] www.compute.dtu.dk Summary English This report presents a solution for scanning sequences of HTTP requests in the open source penetration testing tool, Zed Attack Proxy or ZAP. The report documents the analysis, design and implementation phases of the project, and explains how the different test scenarios were set up and used for verification of the functionality developed in this project. The proposed solution will serve as a proof-of-concept, before being integrated with the publicly available version of the application. Dansk Denne rapport præsenterer en løsning der gør det muligt at skanne HTTP forespørgsler i open source værktøjet til penetrationstest, Zed Attack Proxy eller ZAP. Rapporten dokumenterer faserne for analyse, design og implementering af løsningen, samt hvordan forskellige test scenarier blev opstillet og anvendt til at verificere funktionaliteten udviklet i dette projekt. Den foreslåede løsning vil fungere som et proof-of-concept, før det integreres med den offentligt tilgængelige version af applikationen. Preface This thesis was prepared at the department of Applied Mathematics and Computer Science at the Technical University of Denmark in fulfilment of the requirements for acquiring a M.Sc. degree in respectively Computer Science and Engineering, and in Digital Media Engineering. Kongens Lyngby, September 5. -

Pre-Requisites

WordPress is a very popular content management system, widely used by many big companies for their business web sites. It offers great flexibility for creating personal and professional web sites. With the increasing popularity of WordPress, the demand for skilled WordPress professionals also increases. This 3-module WordPress course is designed to teach everything you need to become a professional WordPress developer. We include all the WordPress concepts that are used in the development of real-world web sites with WordPress. Every concept is explained with the help of a live practical example. Pre-requisites • Basic knowledge of PHP/MySQL • Basic knowledge of CSS/HTML • You can use any suitable editor for programming, such as Dreamweaver, Notepad++ etc. Who Can Join • Anyone looking for an easy and affordable way to create their own web site. • Any WordPress user or developer who wants to expand their knowledge of WordPress development. • Any web developer who wants to become a highly skilled and professional WordPress developer. Module-1 [WordPress Basics] Section 1 [WordPress Introduction] Section 2 [Getting Started with WordPress] • Getting into WordPress • Using wordpress.com to create free blog • Understanding the common terms • How to Install WordPress on localhost • Why to use WordPress • wp-admin panel • Features of WordPress latest version • wp-admin dashboard • WP resources, codex, plugin and theme • What is Gravatar Section 3 [Blog Content using post] Section 4 [Pages, Menus, Media libraries] • Adding -

Automattic Drives Customer-Centric Improvements on Wordpress.Com

CASE STUDY Automattic drives customer-centric improvements on Wordpress.com. Unites a geographically distributed team to focus on optimizations with greatest impact. Headquarters: San Francisco, CA. Founded: 2005. Industry: Consumer technology. The challenge: Making product improvements based on support requests didn’t yield substantially better experiences. The solution: Outside perspective provided by UserTesting panel yields valuable, shareable customer insights. The outcome: Alignment across a distributed team for optimized product releases results in higher quality products for users. The challenge It is estimated that about 25 percent of all websites are built using Wordpress, a popular website platform managed by parent company Automattic. As an open source project run by the Wordpress Foundation, the platform is built and maintained by hundreds of community volunteers as well as several ‘Automatticians.’ Due to its open source nature and because there isn’t tracking analytics on Wordpress.org, the team does not have much insight into what’s working and what’s not working on the site. And this was a serious issue for the organization, which actively cultivates a culture that is focused on its customers. The solution The team turned to UserTesting to identify potential pain points and areas of friction. Collecting user feedback and sharing findings has enabled the team to make strategic product improvements before each release. Additionally, without a central office and with teams meeting in-person only a few times each year, the ability to easily share insights has been invaluable. Design Engineer Mel Choyce notes, “It’s particularly useful for us as a distributed team because we can’t all do everything in person. -

The Role of Hosting Providers in Fighting Command and Control Infrastructure of Financial Malware

The Role of Hosting Providers in Fighting Command and Control Infrastructure of Financial Malware Samaneh Tajalizadehkhoob, Carlos Gañán, Arman Noroozian, and Michel van Eeten Faculty of Technology, Policy and Management, Delft University of Technology Delft, the Netherlands [email protected] ABSTRACT A variety of botnets are used in attacks on financial services. Banks and security firms invest a lot of effort in detecting and combating malware-assisted takeover of customer accounts. A critical resource of these botnets is their command-and-control (C&C) infrastructure. Attackers rent or compromise servers to operate their C&C infrastructure. Hosting providers routinely take down C&C servers, but the effectiveness of this mitigation strategy depends on understanding how attackers select the hosting providers to host their servers. Do they prefer, for example, providers who are slow or unwilling in taking down C&Cs? In this paper, we analyze 7 years of data on the C&C servers of botnets [...] command and control (C&C) infrastructure and the cleanup process of the infected end user machines (bots) [12,32,43]. The first strategy has the promise of being the most effective, taking away control of the botnet from the botmasters. In reality, however, this is often not possible. The second strategy is not about striking a fatal blow, but about the war of attrition to remove malware, one machine at a time. It has not been without success, however. Infection levels have been stable in many countries [5]. In practice, a third strategy is also being pursued. Similar to access providers cleaning up end user machines, there is a persistent effort by hosting providers to take down C&C servers, one at a time.