Web-Based Secure Application Control

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

How to Secure Your Web Site Picked up SQL Injection and Cross-Site Scripting As Sample Cases of Failure Because These Two Are the Two Most Reported Vulnerabilities

How to Secure your Website rd 3 Edition Approaches to Improve Web Application and Web Site Security June 2008 IT SECURITY CENTER (ISEC) INFORMATION-TECHNOLOGY PROMOTION AGENCY, JAPAN This document is a translation of the original Japanese edition. Please be advises that most of the references referred in this book are offered in Japanese only. Both English and Japanese edition are available for download at: http://www.ipa.go.jp/security/english/third.html (English web page) http://www.ipa.go.jp/security/vuln/websecurity.html (Japanese web page) Translated by Hiroko Okashita (IPA), June 11 2008 Contents Contents ......................................................................................................................................... 1 Preface ........................................................................................................................................... 2 Organization of This Book ........................................................................................................... 3 Intended Reader ......................................................................................................................... 3 Fixing Vulnerabilities – Fundamental Solution and Mitigation Measure - .................................... 3 1. Web Application Security Implementation ............................................................................... 5 1.1 SQL Injection .................................................................................................................... 6 1.2 -

Web Hacking 101 How to Make Money Hacking Ethically

Web Hacking 101 How to Make Money Hacking Ethically Peter Yaworski © 2015 - 2016 Peter Yaworski Tweet This Book! Please help Peter Yaworski by spreading the word about this book on Twitter! The suggested tweet for this book is: Can’t wait to read Web Hacking 101: How to Make Money Hacking Ethically by @yaworsk #bugbounty The suggested hashtag for this book is #bugbounty. Find out what other people are saying about the book by clicking on this link to search for this hashtag on Twitter: https://twitter.com/search?q=#bugbounty For Andrea and Ellie. Thanks for supporting my constant roller coaster of motivation and confidence. This book wouldn’t be what it is if it were not for the HackerOne Team, thank you for all the support, feedback and work that you contributed to make this book more than just an analysis of 30 disclosures. Contents 1. Foreword ....................................... 1 2. Attention Hackers! .................................. 3 3. Introduction ..................................... 4 How It All Started ................................. 4 Just 30 Examples and My First Sale ........................ 5 Who This Book Is Written For ........................... 7 Chapter Overview ................................. 8 Word of Warning and a Favour .......................... 10 4. Background ...................................... 11 5. HTML Injection .................................... 14 Description ....................................... 14 Examples ........................................ 14 1. Coinbase Comments ............................. -

Bakalářská Práce

TECHNICKÁ UNIVERZITA V LIBERCI Fakulta mechatroniky, informatiky a mezioborových studií BAKALÁŘSKÁ PRÁCE Liberec 2013 Jaroslav Jakoubě Příloha A TECHNICKÁ UNIVERZITA V LIBERCI Fakulta mechatroniky, informatiky a mezioborových studií Studijní program: B2646 – Informační technologie Studijní obor: 1802R007 – Informační technologie Srovnání databázových knihoven v PHP Benchmark of database libraries for PHP Bakalářská práce Autor: Jaroslav Jakoubě Vedoucí práce: Mgr. Jiří Vraný, Ph.D. V Liberci 15. 5. 2013 Prohlášení Byl(a) jsem seznámen(a) s tím, že na mou bakalářskou práci se plně vztahuje zákon č. 121/2000 Sb., o právu autorském, zejména § 60 – školní dílo. Beru na vědomí, že Technická univerzita v Liberci (TUL) nezasahuje do mých autorských práv užitím mé bakalářské práce pro vnitřní potřebu TUL. Užiji-li bakalářskou práci nebo poskytnu-li licenci k jejímu využití, jsem si vědom povinnosti informovat o této skutečnosti TUL; v tomto případě má TUL právo ode mne požadovat úhradu nákladů, které vynaložila na vytvoření díla, až do jejich skutečné výše. Bakalářskou práci jsem vypracoval(a) samostatně s použitím uvedené literatury a na základě konzultací s vedoucím bakalářské práce a konzultantem. Datum Podpis 3 Abstrakt Česká verze: Tato bakalářská práce se zabývá srovnávacím testem webových aplikací psaných v programovacím skriptovacím jazyce PHP, které využívají různé knihovny pro komunikaci s databází. Hlavní důraz při hodnocení výsledků byl kladen na rychlost odezvy při zasílání jednotlivých požadavků. V rámci řešení byly zjišťovány dostupné metodiky určené na porovnávání těchto projektů. Byl také proveden průzkum zjišťující, které frameworky jsou nejvíce používané. Klíčová slova: Testování, PHP, webové aplikace, framework, knihovny English version: This bachelor’s thesis is focused on benchmarking of the PHP frameworks and their database libraries used for creating web applications. -

Lightweight Django USING REST, WEBSOCKETS & BACKBONE

Lightweight Django USING REST, WEBSOCKETS & BACKBONE Julia Elman & Mark Lavin Lightweight Django LightweightDjango How can you take advantage of the Django framework to integrate complex “A great resource for client-side interactions and real-time features into your web applications? going beyond traditional Through a series of rapid application development projects, this hands-on book shows experienced Django developers how to include REST APIs, apps and learning how WebSockets, and client-side MVC frameworks such as Backbone.js into Django can power the new or existing projects. backend of single-page Learn how to make the most of Django’s decoupled design by choosing web applications.” the components you need to build the lightweight applications you want. —Aymeric Augustin Once you finish this book, you’ll know how to build single-page applications Django core developer, CTO, oscaro.com that respond to interactions in real time. If you’re familiar with Python and JavaScript, you’re good to go. “Such a good idea—I think this will lower the barrier ■ Learn a lightweight approach for starting a new Django project of entry for developers ■ Break reusable applications into smaller services that even more… the more communicate with one another I read, the more excited ■ Create a static, rapid prototyping site as a scaffold for websites and applications I am!” —Barbara Shaurette ■ Build a REST API with django-rest-framework Python Developer, Cox Media Group ■ Learn how to use Django with the Backbone.js MVC framework ■ Create a single-page web application on top of your REST API Lightweight ■ Integrate real-time features with WebSockets and the Tornado networking library ■ Use the book’s code-driven examples in your own projects Julia Elman, a frontend developer and tech education advocate, started learning Django in 2008 while working at World Online. -

What Is Dart?

1 Dart in Action By Chris Buckett As a language on its own, Dart might be just another language, but when you take into account the whole Dart ecosystem, Dart represents an exciting prospect in the world of web development. In this green paper based on Dart in Action, author Chris Buckett explains how Dart, with its ability to either run natively or be converted to JavaScript and coupled with HTML5 is an ideal solution for building web applications that do not need external plugins to provide all the features. You may also be interested in… What is Dart? The quick answer to the question of what Dart is that it is an open-source structured programming language for creating complex browser based web applications. You can run applications created in Dart by either using a browser that directly supports Dart code, or by converting your Dart code to JavaScript (which happens seamlessly). It is class based, optionally typed, and single threaded (but supports multiple threads through a mechanism called isolates) and has a familiar syntax. In addition to running in browsers, you can also run Dart code on the server, hosted in the Dart virtual machine. The language itself is very similar to Java, C#, and JavaScript. One of the primary goals of the Dart developers is that the language seems familiar. This is a tiny dart script: main() { #A var d = “Dart”; #B String w = “World”; #C print(“Hello ${d} ${w}”); #D } #A Single entry point function main() executes when the script is fully loaded #B Optional typing (no type specified) #C Static typing (String type specified) #D Outputs “Hello Dart World” to the browser console or stdout This script can be embedded within <script type=“application/dart”> tags and run in the Dartium experimental browser, converted to JavaScript using the Frog tool and run in all modern browsers, or saved to a .dart file and run directly on the server using the dart virtual machine executable. -

TIBCO Activematrix® BPM Web Client Developer's Guide Software Release 4.3 April 2019 2

TIBCO ActiveMatrix® BPM Web Client Developer's Guide Software Release 4.3 April 2019 2 Important Information SOME TIBCO SOFTWARE EMBEDS OR BUNDLES OTHER TIBCO SOFTWARE. USE OF SUCH EMBEDDED OR BUNDLED TIBCO SOFTWARE IS SOLELY TO ENABLE THE FUNCTIONALITY (OR PROVIDE LIMITED ADD-ON FUNCTIONALITY) OF THE LICENSED TIBCO SOFTWARE. THE EMBEDDED OR BUNDLED SOFTWARE IS NOT LICENSED TO BE USED OR ACCESSED BY ANY OTHER TIBCO SOFTWARE OR FOR ANY OTHER PURPOSE. USE OF TIBCO SOFTWARE AND THIS DOCUMENT IS SUBJECT TO THE TERMS AND CONDITIONS OF A LICENSE AGREEMENT FOUND IN EITHER A SEPARATELY EXECUTED SOFTWARE LICENSE AGREEMENT, OR, IF THERE IS NO SUCH SEPARATE AGREEMENT, THE CLICKWRAP END USER LICENSE AGREEMENT WHICH IS DISPLAYED DURING DOWNLOAD OR INSTALLATION OF THE SOFTWARE (AND WHICH IS DUPLICATED IN THE LICENSE FILE) OR IF THERE IS NO SUCH SOFTWARE LICENSE AGREEMENT OR CLICKWRAP END USER LICENSE AGREEMENT, THE LICENSE(S) LOCATED IN THE “LICENSE” FILE(S) OF THE SOFTWARE. USE OF THIS DOCUMENT IS SUBJECT TO THOSE TERMS AND CONDITIONS, AND YOUR USE HEREOF SHALL CONSTITUTE ACCEPTANCE OF AND AN AGREEMENT TO BE BOUND BY THE SAME. ANY SOFTWARE ITEM IDENTIFIED AS THIRD PARTY LIBRARY IS AVAILABLE UNDER SEPARATE SOFTWARE LICENSE TERMS AND IS NOT PART OF A TIBCO PRODUCT. AS SUCH, THESE SOFTWARE ITEMS ARE NOT COVERED BY THE TERMS OF YOUR AGREEMENT WITH TIBCO, INCLUDING ANY TERMS CONCERNING SUPPORT, MAINTENANCE, WARRANTIES, AND INDEMNITIES. DOWNLOAD AND USE OF THESE ITEMS IS SOLELY AT YOUR OWN DISCRETION AND SUBJECT TO THE LICENSE TERMS APPLICABLE TO THEM. BY PROCEEDING TO DOWNLOAD, INSTALL OR USE ANY OF THESE ITEMS, YOU ACKNOWLEDGE THE FOREGOING DISTINCTIONS BETWEEN THESE ITEMS AND TIBCO PRODUCTS. -

Attacking AJAX Web Applications Vulns 2.0 for Web 2.0

Attacking AJAX Web Applications Vulns 2.0 for Web 2.0 Alex Stamos Zane Lackey [email protected] [email protected] Blackhat Japan October 5, 2006 Information Security Partners, LLC iSECPartners.com Information Security Partners, LLC www.isecpartners.com Agenda • Introduction – Who are we? – Why care about AJAX? • How does AJAX change Web Attacks? • AJAX Background and Technologies • Attacks Against AJAX – Discovery and Method Manipulation – XSS – Cross-Site Request Forgery • Security of Popular Frameworks – Microsoft ATLAS – Google GWT –Java DWR • Q&A 2 Information Security Partners, LLC www.isecpartners.com Introduction • Who are we? – Consultants for iSEC Partners – Application security consultants and researchers – Based in San Francisco • Why listen to this talk? – New technologies are making web app security much more complicated • This is obvious to anybody who reads the paper – MySpace – Yahoo – Worming of XSS – Our Goals for what you should walk away with: • Basic understanding of AJAX and different AJAX technologies • Knowledge of how AJAX changes web attacks • In-depth knowledge on XSS and XSRF in AJAX • An opinion on whether you can trust your AJAX framework to “take care of security” 3 Information Security Partners, LLC www.isecpartners.com Shameless Plug Slide • Special Thanks to: – Scott Stender, Jesse Burns, and Brad Hill of iSEC Partners – Amit Klein and Jeremiah Grossman for doing great work in this area – Rich Cannings at Google • Books by iSECer Himanshu Dwivedi – Securing Storage – Hackers’ Challenge 3 • We are -

Xwiki Enterprise 8.4.4 Developer Guide - Xwiki Rendering Macros in Java Xwiki Enterprise 8.4.4 Developer Guide - Xwiki Rendering Macros in Java

XWiki Enterprise 8.4.4 Developer Guide - XWiki Rendering Macros in Java XWiki Enterprise 8.4.4 Developer Guide - XWiki Rendering Macros in Java Table of Contents Page 2 XWiki Enterprise 8.4.4 Developer Guide - XWiki Rendering Macros in Java • XWiki Rendering Macros in Java • Box Macro • Cache Macro • Chart Macro • Children Macro • Code Macro • Comment Macro • Container Macro • Content Macro • Context Macro • Dashboard Macro • Display Macro • Document Tree Macro • Error Message Macro • Footnote Macro • Formula Macro • Gallery Macro • Groovy Macro • HTML Macro • ID Macro • Include Macro • Information Message Macro • Office Macro • Put Footnotes Macro • Python Macro • RSS Macro • Script Macro • Success Macro Message • Table of Contents Macro • Translation Macro • User Avatar Java Macro • Velocity Macro • Warning Message Macro XWiki Rendering Macros in Java Overview XWiki Rendering Macros in Java are created using the XWiki Rendering architecture and Java. In order to implement a Java macro you will need to write 2 classes: • One that representing the allowed parameters, including mandatory parameters, default values, parameter descriptions. An instance of this class will be automatically populated when the user calls the macro in wiki syntax. • Another one that is the macro itself and implements the Macro interface. The XWiki Rendering Architecture can be summarized by the image below: The Parser parses a text input like "xwiki/2.0" syntax or HTML and generates an XDOM object. This object is an Abstract Syntax Tree which represents the input into structured blocks. The Renderer takes an XDOM as input and generates an output like "xwiki/2.0" syntax, XHTML or PDF. The Transformation takes an XDOM as input and generates a modified one. -

WEB2PY Enterprise Web Framework (2Nd Edition)

WEB2PY Enterprise Web Framework / 2nd Ed. Massimo Di Pierro Copyright ©2009 by Massimo Di Pierro. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning, or otherwise, except as permitted under Section 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, Inc., 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 646-8600, or on the web at www.copyright.com. Requests to the Copyright owner for permission should be addressed to: Massimo Di Pierro School of Computing DePaul University 243 S Wabash Ave Chicago, IL 60604 (USA) Email: [email protected] Limit of Liability/Disclaimer of Warranty: While the publisher and author have used their best efforts in preparing this book, they make no representations or warranties with respect to the accuracy or completeness of the contents of this book and specifically disclaim any implied warranties of merchantability or fitness for a particular purpose. No warranty may be created ore extended by sales representatives or written sales materials. The advice and strategies contained herein may not be suitable for your situation. You should consult with a professional where appropriate. Neither the publisher nor author shall be liable for any loss of profit or any other commercial damages, including but not limited to special, incidental, consequential, or other damages. Library of Congress Cataloging-in-Publication Data: WEB2PY: Enterprise Web Framework Printed in the United States of America. -

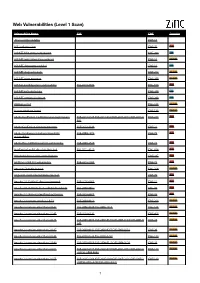

Web Vulnerabilities (Level 1 Scan)

Web Vulnerabilities (Level 1 Scan) Vulnerability Name CVE CWE Severity .htaccess file readable CWE-16 ASP code injection CWE-95 High ASP.NET MVC version disclosure CWE-200 Low ASP.NET application trace enabled CWE-16 Medium ASP.NET debugging enabled CWE-16 Low ASP.NET diagnostic page CWE-200 Medium ASP.NET error message CWE-200 Medium ASP.NET padding oracle vulnerability CVE-2010-3332 CWE-310 High ASP.NET path disclosure CWE-200 Low ASP.NET version disclosure CWE-200 Low AWStats script CWE-538 Medium Access database found CWE-538 Medium Adobe ColdFusion 9 administrative login bypass CVE-2013-0625 CVE-2013-0629CVE-2013-0631 CVE-2013-0 CWE-287 High 632 Adobe ColdFusion directory traversal CVE-2013-3336 CWE-22 High Adobe Coldfusion 8 multiple linked XSS CVE-2009-1872 CWE-79 High vulnerabilies Adobe Flex 3 DOM-based XSS vulnerability CVE-2008-2640 CWE-79 High AjaxControlToolkit directory traversal CVE-2015-4670 CWE-434 High Akeeba backup access control bypass CWE-287 High AmCharts SWF XSS vulnerability CVE-2012-1303 CWE-79 High Amazon S3 public bucket CWE-264 Medium AngularJS client-side template injection CWE-79 High Apache 2.0.39 Win32 directory traversal CVE-2002-0661 CWE-22 High Apache 2.0.43 Win32 file reading vulnerability CVE-2003-0017 CWE-20 High Apache 2.2.14 mod_isapi Dangling Pointer CVE-2010-0425 CWE-20 High Apache 2.x version equal to 2.0.51 CVE-2004-0811 CWE-264 Medium Apache 2.x version older than 2.0.43 CVE-2002-0840 CVE-2002-1156 CWE-538 Medium Apache 2.x version older than 2.0.45 CVE-2003-0132 CWE-400 Medium Apache 2.x version -

Wikis in Libraries Matthew M

Wikis in Libraries Matthew M. Bejune Wikis have recently been adopted to support a variety of a type of Web site that allows the visitors to add, collaborative activities within libraries. This article and remove, edit, and change some content, typically with out the need for registration. It also allows for linking its companion wiki, LibraryWikis (http://librarywikis. among any number of pages. This ease of interaction pbwiki.com/), seek to document the phenomenon of wikis and operation makes a wiki an effective tool for mass in libraries. This subject is considered within the frame- collaborative authoring. work of computer-supported cooperative work (CSCW). Wikis have been around since the mid1990s, though it The author identified thirty-three library wikis and is only recently that they have become ubiquitous. In 1995, Ward Cunningham launched the first wiki, WikiWikiWeb developed a classification schema with four categories: (1) (http://c2.com/cgi/wiki), which is still active today, to collaboration among libraries (45.7 percent); (2) collabo- facilitate the exchange of ideas among computer program ration among library staff (31.4 percent); (3) collabora- mers (Wikipedia 2007b). The launch of WikiWikiWeb was tion among library staff and patrons (14.3 percent); and a departure from the existing model of Web communica tion ,where there was a clear divide between authors and (4) collaboration among patrons (8.6 percent). Examples readers. WikiWikiWeb elevated the status of readers, if of library wikis are presented within the article, as is a they so chose, to that of content writers and editors. This discussion for why wikis are primarily utilized within model proved popular, and the wiki technology used on categories I and II and not within categories III and IV. -

HTTP Parameter Pollution Vulnerabilities in Web Applications @ Blackhat Europe 2011 @

HTTP Parameter Pollution Vulnerabilities in Web Applications @ BlackHat Europe 2011 @ Marco ‘embyte’ Balduzzi embyte(at)madlab(dot)it http://www.madlab.it Contents 1 Introduction 2 2 HTTP Parameter Pollution Attacks 3 2.1 Parameter Precedence in Web Applications . .3 2.2 Parameter Pollution . .4 2.2.1 Cross-Channel Pollution . .5 2.2.2 HPP to bypass CSRF tokens . .5 2.2.3 Bypass WAFs input validation checks . .6 3 Automated HPP Vulnerability Detection 6 3.1 Browser and Crawler Components . .7 3.2 P-Scan: Analysis of the Parameter Precedence . .7 3.3 V-Scan: Testing for HPP vulnerabilities . .9 3.3.1 Handling special cases . 10 3.4 Implementation . 10 3.4.1 Online Service . 11 3.5 Limitations . 11 4 Evaluation 11 4.1 HPP Prevalence in Popular Websites . 11 4.1.1 Parameter Precedence . 13 4.1.2 HPP Vulnerabilities . 14 4.1.3 False Positives . 15 4.2 Examples of Discovered Vulnerabilities . 15 4.2.1 Facebook Share . 16 4.2.2 CSRF via HPP Injection . 16 4.2.3 Shopping Carts . 16 4.2.4 Financial Institutions . 16 4.2.5 Tampering with Query Results . 17 5 Related work 17 6 Conclusion 18 7 Acknowledgments 18 1 1 Introduction In the last twenty years, web applications have grown from simple, static pages to complex, full-fledged dynamic applications. Typically, these applications are built using heterogeneous technologies and consist of code that runs on the client (e.g., Javascript) and code that runs on the server (e.g., Java servlets). Even simple web applications today may accept and process hundreds of different HTTP parameters to be able to provide users with rich, inter- active services.