Development and Qualification of an FPGA-Based Multi-Processor System-On-Chip On-Board Computer for LEO Satellites

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Foreign Government-Sponsored Broadcast Programming

February 11, 2021

Foreign Government-Sponsored Broadcast Programming

Overview

Congress has enacted several laws to enable U.S. citizens and the federal government to monitor attempts by foreign governments to influence public opinion on political matters. Nevertheless, radio and television viewers may have difficulty distinguishing programs financed and distributed by foreign governments or their agents. In October 2020, the Federal Communications Commission (FCC) proposed new requirements for broadcast radio and television stations to identify foreign government-provided programming.

Statutory Background

For nearly 100 years, beginning with the passage of the Radio Act of 1927 (P.L. 69-632) and the Communications Act of 1934 (P.L. 73-416), Congress has required broadcast stations to label content supplied and paid for by third parties so viewers and listeners can distinguish it from content created by the stations themselves (47 U.S.C.

Radio Sputnik, a subsidiary of the Russian government-financed Rossiya Segodnya International Information Agency, airs programming on radio stations in Washington, DC, and Kansas City, MO. Rossiya Segodnya has contracts with two different U.S.-based entities to broadcast Radio Sputnik’s programming. One entity is a radio station licensee itself, while the other is an intermediary. DOJ has directed each entity to register under FARA. Copies of the Rossiya Segodnya’s contracts with both entities are available on both the FCC and DOJ websites.

FCC filings and a November 2015 report from the Reuters news agency indicate that China Radio International (CRI), an organization owned by the Chinese government, may have agreements to transmit programming to 10 full-power U.S. radio stations, but independent verification is not readily available.

Ellsworth American Only COUNTY Paper

CllLittwtli merfcan. — —1 —-* _ t You XLVHI. 1 gTffT&SSa.'VA.” ELLSWORTH, MAINE, WEDNESDAY AFTERNOON, MAY 14, 1902. j! No. 20. j muuHiiiiuiimj, excursion from Ellsworth by steamer, SbfacttfcfmmU. " LOCAL AFFAIRS. leaving here about noon Monday, and re- turning at noon Tuesday. Esoteric lodge 0. 0. BIJ KRILL & NEW ADVKKTIHKMKNTN THIS WEEK. SON, has also been informally invited to work Probate notice-Kst HI las K Trtbou. tbe third degree in East port in Jane. Joseph A Retorts— Notice of foreclosure. INSURANCE Probate notice—Kst Mar? Shannon. Miss Berths H. Lancaster, of Ellsworth, John Mum! i> (JENERAL AGENTS, shy—Sheriff's sale and D Lear, of Mt. were Kxec Harvey Desert, Bitbriu. Bank notice—Est May W Howler. Bldo., ELL8WORTH, ME. N P Culler, Jr—Girl wanted. married at the Methodist parsonage in Pro tote notice —Kst KMzntoth Sumlnsb? etals. Ellsworth this Rev. J. P. Kxec morning by WE REPRESENT THE notice—Kst Mary G Dorr Rank statement—Condition of First national Simonton. They left on the 11 18 train bank. for Northeast Harbor, where they will Most Reliable Home and Foreign M A Clark—Greenhouse. Companies. E J Walsh—Shoe store. live. Rates Fa sent A Rami— Lowest with Photographers. son of Compatible Safety. D FTrlbou—Household goods. Carlton, tb# two-year-old Mr. W R Parker Clothing l.'o-Clothlng. and Mrs. Charles Brooks, died Thursday China A Japan Tea coffee and 1 *D l,n,n, *° »<>lt on Improved real eatata and Co—Tea, spice. morning of Much BakingPoWde*^, IfOVUTY TO LOAN Giles A Burrlil—New market. -

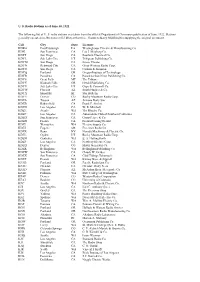

U. S. Radio Stations As of June 30, 1922 the Following List of U. S. Radio

U. S. Radio Stations as of June 30, 1922

The following list of U. S. radio stations was taken from the official Department of Commerce publication of June, 1922. Stations generally operated on 360 meters (833 kHz) at this time. Thanks to Barry Mishkind for supplying the original document.

| Call | City | State | Licensee |
|------|------|-------|----------|
| KDKA | East Pittsburgh | PA | Westinghouse Electric & Manufacturing Co. |
| KDN | San Francisco | CA | Leo J. Meyberg Co. |
| KDPT | San Diego | CA | Southern Electrical Co. |
| KDYL | Salt Lake City | UT | Telegram Publishing Co. |
| KDYM | San Diego | CA | Savoy Theater |
| KDYN | Redwood City | CA | Great Western Radio Corp. |
| KDYO | San Diego | CA | Carlson & Simpson |
| KDYQ | Portland | OR | Oregon Institute of Technology |
| KDYR | Pasadena | CA | Pasadena Star-News Publishing Co. |
| KDYS | Great Falls | MT | The Tribune |
| KDYU | Klamath Falls | OR | Herald Publishing Co. |
| KDYV | Salt Lake City | UT | Cope & Cornwell Co. |
| KDYW | Phoenix | AZ | Smith Hughes & Co. |
| KDYX | Honolulu | HI | Star Bulletin |
| KDYY | Denver | CO | Rocky Mountain Radio Corp. |
| KDZA | Tucson | AZ | Arizona Daily Star |
| KDZB | Bakersfield | CA | Frank E. Siefert |
| KDZD | Los Angeles | CA | W. R. Mitchell |
| KDZE | Seattle | WA | The Rhodes Co. |
| KDZF | Los Angeles | CA | Automobile Club of Southern California |
| KDZG | San Francisco | CA | Cyrus Peirce & Co. |
| KDZH | Fresno | CA | Fresno Evening Herald |
| KDZI | Wenatchee | WA | Electric Supply Co. |
| KDZJ | Eugene | OR | Excelsior Radio Co. |
| KDZK | Reno | NV | Nevada Machinery & Electric Co. |
| KDZL | Ogden | UT | Rocky Mountain Radio Corp. |
| KDZM | Centralia | WA | E. A. Hollingworth |
| KDZP | Los Angeles | CA | Newbery Electric Corp. |
| KDZQ | Denver | CO | Motor Generator Co. |
| KDZR | Bellingham | WA | Bellingham Publishing Co. |
| KDZW | San Francisco | CA | Claude W. |
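The excerpt equates a 360-meter wavelength with roughly 833 kHz. That follows from the relation f = c / λ. The sketch below is only an illustration of that arithmetic; the function name `wavelength_m_to_khz` is a hypothetical helper and is not taken from the listed publication.

```python
# Speed of light in vacuum, exact by SI definition.
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def wavelength_m_to_khz(wavelength_m: float) -> float:
    """Convert a wavelength in meters to a frequency in kHz (f = c / wavelength)."""
    if wavelength_m <= 0:
        raise ValueError("wavelength must be positive")
    frequency_hz = SPEED_OF_LIGHT_M_PER_S / wavelength_m
    return frequency_hz / 1000.0


if __name__ == "__main__":
    # 360 meters is the wavelength quoted in the 1922 station list.
    print(round(wavelength_m_to_khz(360)))  # 833
```

The unrounded result is about 832.76 kHz, which is why the list quotes 833 kHz.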

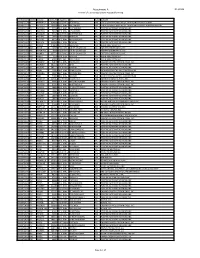

Attachment A DA 19-526 Renewal of License Applications Accepted for Filing

Attachment A DA 19-526 Renewal of License Applications Accepted for Filing

| File Number | Service | Callsign | Facility ID | Frequency | City | State | Licensee |
|-------------|---------|----------|-------------|-----------|------|-------|----------|
| 0000072254 | FL | WMVK-LP | 124828 | 107.3 MHz | PERRYVILLE | MD | STATE OF MARYLAND, MDOT, MARYLAND TRANSIT ADMN. |
| 0000072255 | FL | WTTZ-LP | 193908 | 93.5 MHz | BALTIMORE | MD | STATE OF MARYLAND, MDOT, MARYLAND TRANSIT ADMINISTRATION |
| 0000072258 | FX | W253BH | 53096 | 98.5 MHz | BLACKSBURG | VA | POSITIVE ALTERNATIVE RADIO, INC. |
| 0000072259 | FX | W247CQ | 79178 | 97.3 MHz | LYNCHBURG | VA | POSITIVE ALTERNATIVE RADIO, INC. |
| 0000072260 | FX | W264CM | 93126 | 100.7 MHz | MARTINSVILLE | VA | POSITIVE ALTERNATIVE RADIO, INC. |
| 0000072261 | FX | W279AC | 70360 | 103.7 MHz | ROANOKE | VA | POSITIVE ALTERNATIVE RADIO, INC. |
| 0000072262 | FX | W243BT | 86730 | 96.5 MHz | WAYNESBORO | VA | POSITIVE ALTERNATIVE RADIO, INC. |
| 0000072263 | FX | W241AL | 142568 | 96.1 MHz | MARION | VA | POSITIVE ALTERNATIVE RADIO, INC. |
| 0000072265 | FM | WVRW | 170948 | 107.7 MHz | GLENVILLE | WV | DELLA JANE WOOFTER |
| 0000072267 | AM | WESR | 18385 | 1330 kHz | ONLEY-ONANCOCK | VA | EASTERN SHORE RADIO, INC. |
| 0000072268 | FM | WESR-FM | 18386 | 103.3 MHz | ONLEY-ONANCOCK | VA | EASTERN SHORE RADIO, INC. |
| 0000072270 | FX | W289CE | 157774 | 105.7 MHz | ONLEY-ONANCOCK | VA | EASTERN SHORE RADIO, INC. |
| 0000072271 | FM | WOTR | 1103 | 96.3 MHz | WESTON | WV | DELLA JANE WOOFTER |
| 0000072274 | AM | WHAW | 63489 | 980 kHz | LOST CREEK | WV | DELLA JANE WOOFTER |
| 0000072285 | FX | W206AY | 91849 | 89.1 MHz | FRUITLAND | MD | CALVARY CHAPEL OF TWIN FALLS, INC. |
| 0000072287 | FX | W284BB | 141155 | 104.7 MHz | WISE | VA | POSITIVE ALTERNATIVE RADIO, INC. |
| 0000072288 | FX | W295AI | 142575 | 106.9 MHz | MARION | VA | POSITIVE ALTERNATIVE RADIO, INC. |
| 0000072293 | FM | WXAF | 39869 | 90.9 MHz | CHARLESTON | WV | SHOFAR BROADCASTING CORPORATION |
| 0000072294 | FX | W204BH | 92374 | 88.7 MHz | BOONES MILL | VA | CALVARY CHAPEL OF TWIN FALLS, INC. |

Before the Federal Communications Commission Washington, D.C

BEFORE THE FEDERAL COMMUNICATIONS COMMISSION
WASHINGTON, D.C. 20554

In the matter of GLR Southern California, LLC
Application for a Section 325(c) Permit to Deliver Programs to Foreign Broadcast Stations for Delivery of Mandarin Chinese Programming to Mexican Station XEWW-AM, Rosarito, Baja California Norte, Mexico
IB Docket No. 19-144
File No. 325-STA-20180710-00002
File No. 325-NEW-20180614-00001

To: Office of the Secretary
Attn.: Chief, International Bureau

PETITION FOR RECONSIDERATION OF ORDER DISMISSING APPLICATION TO DELIVER FOREIGN PROGRAMMING

Paige K. Fronabarger
David Oxenford
Christopher D. Bair
WILKINSON BARKER KNAUER, LLP
1800 M Street, NW, Suite 800N
Washington, D.C. 20036
(202) 783-4141
Counsel to GLR Southern California, LLC and H&H Group USA LLC

July 22, 2020

TABLE OF CONTENTS
EXECUTIVE SUMMARY
INTRODUCTION
DISCUSSION
I. Dismissal of the 325(c) Permit Application was Arbitrary and Capricious and a Violation of the APA.
   A. The Bureau Erred in Finding that it Lacked Adequate Information to Complete a Public Interest Analysis of

Inside This Issue

News Serving DX’ers since 1933
Volume 83, No. 5 ● November 30, 2015 ● (ISSN 0737-1639)

Inside this issue: AM Switch; Domestic DX Digest West; Domestic DX Digest East; Musings of the Members; International DX Digest; FCC CP Status Report; Tower Calendar / DXtreme; KC 2016 Call for Papers; Space Wx / FCC Silent List.

2016 DXers Gathering: DXers in AM, FM, and TV, including the NRC, IRCA, WTFDA, and DecaloMania will gather on September 9‐11, 2016 in Kansas City, MO. It will be held at the Hyatt Place Kansas City Airport, 7600 NW 97th Terrace. Information on registration will be made available starting in January. Rates are $99.00 per night for 1 to 3 persons per room, plus taxes and fees. Plan to arrive on Thursday for 3 nights, and we end Sunday at noon. Free airport transfers and breakfast each morning. Registration: $55 per person which includes a free Friday evening pizza party and Saturday evening banquet. Checks made payable to “National Radio Club” and sent to Ernest J.

Just FYI, as a nonprofit club run entirely by uncompensated volunteers, NRC policy is not to take advertising in DX News. However, we will publish free announcements of commercial products that may be of interest to members – no more than once a year, on a “space available” basis. Contact [email protected] for more info.

Membership Report

“Please renew my membership in the NRC for another year.” – Dave Bright.

New Members: Welcome to Antoine Gamet, Coatesville, PA; and Joseph Kremer, Bridgeport, WV.

530 CIAO BRAMPTON on ETHNIC AM 530 N43 35 20 W079 52 54 09-Feb

frequency callsign city format identification slogan latitude longitude last change in listing kHz d m s d m s (yy-mmm) 530 CIAO BRAMPTON ON ETHNIC AM 530 N43 35 20 W079 52 54 09-Feb 540 CBKO COAL HARBOUR BC VARIETY CBC RADIO ONE N50 36 4 W127 34 23 09-May 540 CBXQ # UCLUELET BC VARIETY CBC RADIO ONE N48 56 44 W125 33 7 16-Oct 540 CBYW WELLS BC VARIETY CBC RADIO ONE N53 6 25 W121 32 46 09-May 540 CBT GRAND FALLS NL VARIETY CBC RADIO ONE N48 57 3 W055 37 34 00-Jul 540 CBMM # SENNETERRE QC VARIETY CBC RADIO ONE N48 22 42 W077 13 28 18-Feb 540 CBK REGINA SK VARIETY CBC RADIO ONE N51 40 48 W105 26 49 00-Jul 540 WASG DAPHNE AL BLK GSPL/RELIGION N30 44 44 W088 5 40 17-Sep 540 KRXA CARMEL VALLEY CA SPANISH RELIGION EL SEMBRADOR RADIO N36 39 36 W121 32 29 14-Aug 540 KVIP REDDING CA RELIGION SRN VERY INSPIRING N40 37 25 W122 16 49 09-Dec 540 WFLF PINE HILLS FL TALK FOX NEWSRADIO 93.1 N28 22 52 W081 47 31 18-Oct 540 WDAK COLUMBUS GA NEWS/TALK FOX NEWSRADIO 540 N32 25 58 W084 57 2 13-Dec 540 KWMT FORT DODGE IA C&W FOX TRUE COUNTRY N42 29 45 W094 12 27 13-Dec 540 KMLB MONROE LA NEWS/TALK/SPORTS ABC NEWSTALK 105.7&540 N32 32 36 W092 10 45 19-Jan 540 WGOP POCOMOKE CITY MD EZL/OLDIES N38 3 11 W075 34 11 18-Oct 540 WXYG SAUK RAPIDS MN CLASSIC ROCK THE GOAT N45 36 18 W094 8 21 17-May 540 KNMX LAS VEGAS NM SPANISH VARIETY NBC K NEW MEXICO N35 34 25 W105 10 17 13-Nov 540 WBWD ISLIP NY SOUTH ASIAN BOLLY 540 N40 45 4 W073 12 52 18-Dec 540 WRGC SYLVA NC VARIETY NBC THE RIVER N35 23 35 W083 11 38 18-Jun 540 WETC # WENDELL-ZEBULON NC RELIGION EWTN DEVINE MERCY R. -
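The latitude and longitude columns in this listing are given as a hemisphere letter followed by degrees, minutes, and seconds (for example `N43 35 20 W079 52 54`). A minimal sketch of converting one such field to signed decimal degrees follows; the function name `dms_to_decimal` and the assumption that each field is exactly three whitespace-separated tokens are illustrative choices, not part of the listing.

```python
def dms_to_decimal(field: str) -> float:
    """Convert a field such as 'N43 35 20' or 'W079 52 54' to signed decimal degrees.

    Assumed layout (hypothetical, inferred from the listing): a hemisphere
    letter fused to the degrees, then minutes, then seconds.
    """
    degree_token, minutes, seconds = field.split()
    hemisphere = degree_token[0].upper()
    if hemisphere not in ("N", "S", "E", "W"):
        raise ValueError(f"unknown hemisphere in {field!r}")
    degrees = int(degree_token[1:])
    value = degrees + int(minutes) / 60 + int(seconds) / 3600
    # South latitudes and west longitudes are negative by convention.
    return -value if hemisphere in ("S", "W") else value


if __name__ == "__main__":
    # Coordinates of the first row (CIAO, Brampton ON) in the listing.
    print(round(dms_to_decimal("N43 35 20"), 4))   # 43.5889
    print(round(dms_to_decimal("W079 52 54"), 4))  # -79.8817
```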

LA-4225-MS

LA-4225-MS
CIC-14 REPORT COLLECTION REPRODUCTION COPY
LOS ALAMOS SCIENTIFIC LABORATORY

LEGAL NOTICE

This report was prepared as an account of Government sponsored work. Neither the United States, nor the Commission, nor any person acting on behalf of the Commission:
A. Makes any warranty or representation, expressed or implied, with respect to the accuracy, completeness, or usefulness of the information contained in this report, or that the use of any information, apparatus, method, or process disclosed in this report may not infringe privately owned rights; or
B. Assumes any liabilities with respect to the

Exhibit 2181

Exhibit 2181 Case 1:18-cv-04420-LLS Document 131 Filed 03/23/20 Page 1 of 4
Electronically Filed Docket: 19-CRB-0005-WR (2021-2025) Filing Date: 08/24/2020 10:54:36 AM EDT
NAB Trial Ex. 2181.1

Case 1:18-cv-04420-LLS Document 133 Filed 04/15/20 Page 1 of 4

ATARA MILLER
Partner
55 Hudson Yards | New York, NY 10001-2163
T: 212.530.5421
[email protected] | milbank.com

April 15, 2020

VIA ECF
Honorable Louis L. Stanton
Daniel Patrick Moynihan United States Courthouse
500 Pearl St.
New York, NY 10007-1312

Re: Radio Music License Comm., Inc. v. Broad. Music, Inc., 18 Civ. 4420 (LLS)

Dear Judge Stanton:

We write on behalf of Respondent Broadcast Music, Inc. (“BMI”) to update the Court on the status of BMI’s efforts to implement its agreement with the Radio Music License Committee, Inc. (“RMLC”) and to request that the Court unseal the Exhibits attached to the Order (see Dkt.

Radio-TV EXPERIMENTER AUGUST-SEPTEMBER 75c

DXer's DREAM THAT ALMOST WAS SHASILAND Radio-TV EXPERIMENTER AUGUST-SEPTEMBER 75c BUILD COLD QuA BREE ... a 2-FET metal moocher to end the gold drain and De Gaulle! PLUS Socket-2-Me CB Skyhook, No-Parts Slave Flash, Patrol PA System, IC Big Voice

EICO Makes It Possible. Uncompromising engineering-for-value does it! You save up to 50% with Eico Kits and Wired Equipment.

Cortina Stereo: Engineering excellence, 100% capability, striking esthetics, the industry's only TOTAL PERFORMANCE STEREO at lowest cost. A Silicon Solid-State 70-Watt Stereo Amplifier for $99.95 kit, $139.95 wired, including cabinet. Cortina 3070. A Solid-State FM Stereo Tuner for $99.95 kit, $139.95 wired, including cabinet. Cortina 3200. A 70-Watt Solid-State FM Stereo Receiver for $169.95 kit, $259.95 wired, including cabinet. Cortina 3570.

The newest excitement in kits. 100% solid-state and professional. Fun to build and use. Expandable, interconnectable. Great as "jiffy" projects and as introductions to electronics. No technical experience needed. Finest parts, pre-drilled etched printed circuit boards, step-by-step instructions. EICOCRAFT: Electronic Siren $4.95, Burglar Alarm $6.95, Fire Alarm $6.95, Intercom $3.95, Audio Power Amplifier $4.95, Metronome $3.95, Tremolo $8.95, Light Flasher $3.95, Electronic "Mystifier" $4.95, Photo Cell Nite Lite $4.95, Power Supply $7.95, Code Oscillator $2.50, FM Wireless Mike $9.95, AM Wireless Mike $9.95, Electronic VOX $7.95, FM Radio $9.95, AM Radio $7.95, Electronic Bongos $7.95.

Freq Call State Location U D N C Distance Bearing

AM BAND RADIO STATIONS COMPILED FROM FCC CDBS DATABASE AS OF FEB 6, 2012 POWER FREQ CALL STATE LOCATION UDNCDISTANCE BEARING NOTES 540 WASG AL DAPHNE 2500 18 1107 103 540 KRXA CA CARMEL VALLEY 10000 500 848 278 540 KVIP CA REDDING 2500 14 923 295 540 WFLF FL PINE HILLS 50000 46000 1523 102 540 WDAK GA COLUMBUS 4000 37 1241 94 540 KWMT IA FORT DODGE 5000 170 790 51 540 KMLB LA MONROE 5000 1000 838 101 540 WGOP MD POCOMOKE CITY 500 243 1694 75 540 WXYG MN SAUK RAPIDS 250 250 922 39 540 WETC NC WENDELL-ZEBULON 4000 500 1554 81 540 KNMX NM LAS VEGAS 5000 19 67 109 540 WLIE NY ISLIP 2500 219 1812 69 540 WWCS PA CANONSBURG 5000 500 1446 70 540 WYNN SC FLORENCE 250 165 1497 86 540 WKFN TN CLARKSVILLE 4000 54 1056 81 540 KDFT TX FERRIS 1000 248 602 110 540 KYAH UT DELTA 1000 13 415 306 540 WGTH VA RICHLANDS 1000 97 1360 79 540 WAUK WI JACKSON 400 400 1090 56 550 KTZN AK ANCHORAGE 3099 5000 2565 326 550 KFYI AZ PHOENIX 5000 1000 366 243 550 KUZZ CA BAKERSFIELD 5000 5000 709 270 550 KLLV CO BREEN 1799 132 312 550 KRAI CO CRAIG 5000 500 327 348 550 WAYR FL ORANGE PARK 5000 64 1471 98 550 WDUN GA GAINESVILLE 10000 2500 1273 88 550 KMVI HI WAILUKU 5000 3181 265 550 KFRM KS SALINA 5000 109 531 60 550 KTRS MO ST. LOUIS 5000 5000 907 73 550 KBOW MT BUTTE 5000 1000 767 336 550 WIOZ NC PINEHURST 1000 259 1504 84 550 WAME NC STATESVILLE 500 52 1420 82 550 KFYR ND BISMARCK 5000 5000 812 19 550 WGR NY BUFFALO 5000 5000 1533 63 550 WKRC OH CINCINNATI 5000 1000 1214 73 550 KOAC OR CORVALLIS 5000 5000 1071 309 550 WPAB PR PONCE 5000 5000 2712 106 550 WBZS RI -
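The DISTANCE and BEARING columns in this listing are presumably measured from a single reference location that the excerpt does not state. As a hedged illustration of how such figures can be derived, the sketch below computes great-circle distance (haversine formula) and initial bearing between two latitude/longitude pairs. The function name, the kilometer unit, and the mean Earth radius are all assumptions; the listing's own units and method are not given.

```python
import math

# Mean Earth radius in kilometers: an assumption for this sketch, since the
# listing does not say which radius or distance unit it uses.
EARTH_RADIUS_KM = 6371.0


def distance_and_bearing(lat1: float, lon1: float,
                         lat2: float, lon2: float) -> tuple[float, float]:
    """Return (great-circle distance in km, initial bearing in degrees from north).

    Inputs are decimal degrees; point 1 is the receiver, point 2 the station.
    """
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)

    # Haversine formula for the central angle between the two points.
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    distance_km = 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))

    # Initial (forward) bearing, normalized to the range [0, 360).
    y = math.sin(d_lambda) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(d_lambda))
    bearing_deg = (math.degrees(math.atan2(y, x)) + 360) % 360
    return distance_km, bearing_deg


if __name__ == "__main__":
    # Two points on the equator a quarter of the way around the globe.
    dist, brg = distance_and_bearing(0.0, 0.0, 0.0, 90.0)
    print(round(dist, 1), round(brg, 1))
```

Reproducing the listing's figures would require the compiler's actual reference coordinates and distance unit, neither of which appears in the excerpt.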

Washington DC National Capital Region Emergency Alert System (EAS) Plan

WASHINGTON DC NATIONAL CAPITAL REGION EMERGENCY ALERT SYSTEM (EAS) PLAN
Revised August, 2003
Updated December, 2008
P B: THE WASHINGTON DC NATIONAL CAPITAL REGION EMERGENCY COMMUNICATIONS COMMITTEE
I C W: T M W C G

I. Intent and Purpose of this Plan
II. The National, State and Local EAS: Participation and Priorities
   A. National EAS Participation
   B. State / Local Participation
   C. Conditions of EAS Participation
   D. EAS Priorities
III. The Washington DC National Capital Region Emergency Communications Committee (WDCNCR ECC)
IV. Organization and Concepts of the WDCNCR EAS
   A. Broadcast and Cable EAS Designations
   B. Other Definitions
   C. Primary and Secondary Delivery Plan
   D. Your Part in Completing the System
V. EAS Header Code Information
   A. EAS Header Code Analysis
   B. WDCNCR Originator Codes
   C. WDCNCR Event Codes
   D. WDCNCR Jurisdiction-Location Codes
   E. WDCNCR “L-Code” Formats
VI. EAS Tests
   A. Required Weekly Test (RWT)
      1. Transmission
      2. Reception
   B. Required Monthly Test (RMT)
      1. Transmission
      2. Scheduling of RMT’s: Week and Time of Day
      3. Scheduling of RMT’s: Recommended Time Constraints
      4. Reception / Re-transmission
   C. Time-Duration and Jurisdiction-Location Codes to be Used
VII. WDCNCR EAS Scripts and Formats
   A. Test Scripts and Formats
   B. Real Alert Activation Scripts and Formats
VIII. Guidance for Originators of EAS Alerts
   A. Guidance for National Weather Service Personnel
   B. Guidance for Emergency Services Personnel
   C. Guidance for Regional Emergency Messages
IX. Guidance for All Users in Programming their EAS Decoders
   A. Modes of Operation
   B. Jurisdiction-Location Codes to Use
   C. Event Codes You MUST Program into your EAS Decoder
   D.