European High-Performance Computing Projects - HANDBOOK 2020

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Prepared by Textore, Inc. Peter Wood, David Yang, and Roger Cliff November 2020

AIR-TO-AIR MISSILES CAPABILITIES AND DEVELOPMENT IN CHINA Prepared by TextOre, Inc. Peter Wood, David Yang, and Roger Cliff November 2020 Printed in the United States of America by the China Aerospace Studies Institute ISBN 9798574996270 To request additional copies, please direct inquiries to Director, China Aerospace Studies Institute, Air University, 55 Lemay Plaza, Montgomery, AL 36112 All photos licensed under the Creative Commons Attribution-Share Alike 4.0 International license, or under the Fair Use Doctrine under Section 107 of the Copyright Act for nonprofit educational and noncommercial use. All other graphics created by or for China Aerospace Studies Institute Cover art is "J-10 fighter jet takes off for patrol mission," China Military Online 9 October 2018. http://eng.chinamil.com.cn/view/2018-10/09/content_9305984_3.htm E-mail: [email protected] Web: http://www.airuniversity.af.mil/CASI https://twitter.com/CASI_Research @CASI_Research https://www.facebook.com/CASI.Research.Org https://www.linkedin.com/company/11049011 Disclaimer The views expressed in this academic research paper are those of the authors and do not necessarily reflect the official policy or position of the U.S. Government or the Department of Defense. In accordance with Air Force Instruction 51-303, Intellectual Property, Patents, Patent Related Matters, Trademarks and Copyrights; this work is the property of the U.S. Government. Limited Print and Electronic Distribution Rights Reproduction and printing is subject to the Copyright Act of 1976 and applicable treaties of the United States. This document and trademark(s) contained herein are protected by law. This publication is provided for noncommercial use only. -

NSIAD-98-176 China B-279891

United States General Accounting Office (GAO) Report to the Chairman, Joint Economic Committee, U.S. Senate June 1998 CHINA Military Imports From the United States and the European Union Since the 1989 Embargoes GAO/NSIAD-98-176 United States General Accounting Office Washington, D.C. 20548 National Security and International Affairs Division B-279891 June 16, 1998 The Honorable James Saxton Chairman, Joint Economic Committee United States Senate Dear Mr. Chairman: In June 1989, the United States and the members of the European Union embargoed the sale of military items to China to protest China’s massacre of demonstrators in Beijing’s Tiananmen Square. You have expressed concern regarding continued Chinese access to foreign technology over the past decade, despite these embargoes. As requested, we identified (1) the terms of the EU embargo and the extent of EU military sales to China since 1989, (2) the terms of the U.S. embargo and the extent of U.S. military sales to China since 1989, and (3) the potential role that such EU and U.S. sales could play in addressing China’s defense needs. In conducting this review, we focused on military items: items that would be included on the U.S. Munitions List. This list includes both lethal items (such as missiles) and nonlethal items (such as military radars) that cannot be exported without a license. Because the data in this report was developed from unclassified sources, its completeness and accuracy may be subject to some uncertainty. Background: The context for China’s foreign military imports during the 1990s lies in China’s recent military modernization efforts. Until the mid-1980s, China’s military doctrine focused on defeating technologically superior invading forces by trading territory for time and employing China’s vast reserves of manpower. -

Missiles in the Asia-Pacific, Dr Carlo Kopp

MISSILES in the Asia-Pacific Dr Carlo Kopp Today, offensive missiles are the primary armament of fighter aircraft, with missile types spanning a wide range of specialised niches in range, speed, guidance technique and intended target. With the Pacific Rim and Indian Ocean regions today the fastest growing area globally in buys of evolved third generation combat aircraft, it is inevitable that this will be reflected in the largest and most diverse inventory of weapons in service. At present the established inventories of weapons are in transition, with a wide variety of legacy types in service, largely acquired during the latter Cold War era, and new technology 4th generation missiles are being widely acquired to supplement or replace existing weapons. The two largest players remain the United States and Russia, although indigenous Israeli, French, German, British and Chinese weapons are well established in specific niches. Air to air missiles, while demanding technologically, are nevertheless affordable to develop and fund from a single national defence budget, and they result in greater diversity than seen previously in larger weapons, or combat aircraft designs. Air-to-air missile types are recognised in three distinct categories: highly agile Within Visual Range (WVR) missiles; less agile but longer ranging Beyond Visual Range (BVR) missiles; and very long range BVR missiles. While the divisions between the latter two categories are less distinct compared against WVR missiles, the longer ranging weapons are often quite unique and usually much larger, to accommodate the required propellant mass. In technological terms, several important developments have been observed over the last decade. -

RSIS COMMENTARIES RSIS Commentaries Are Intended to Provide Timely And, Where Appropriate, Policy Relevant Background and Analysis of Contemporary Developments

RSIS COMMENTARIES RSIS Commentaries are intended to provide timely and, where appropriate, policy relevant background and analysis of contemporary developments. The views of the authors are their own and do not represent the official position of the S.Rajaratnam School of International Studies, NTU. These commentaries may be reproduced electronically or in print with prior permission from RSIS. Due recognition must be given to the author or authors and RSIS. Please email: [email protected] or call (+65) 6790 6982 to speak to the Editor RSIS Commentaries, Yang Razali Kassim. No. 85/2011 dated 24 May 2011 Indonesia’s Anti-ship Missiles: New Development in Naval Capabilities By Koh Swee Lean Collin Synopsis The recent Indonesian Navy test-launch of the supersonic Yakhont anti-ship missile marked yet another naval capability breakthrough in Southeast Asia. The Yakhont missile could potentially intensify the ongoing regional naval arms competition. Commentary ON 20 APRIL 2011, the Indonesian Navy (Tentera Nasional Indonesia – Angkatan Laut or TNI-AL) frigate KRI Oswald Siahaan test-fired a Russian-made Yakhont supersonic anti-ship missile during a naval exercise in the Indian Ocean. According to TNI-AL, the missile took about six minutes to travel 250 kilometres to score a direct hit on the target. This test-launch marks yet another significant capability breakthrough amongst Southeast Asian navies. It comes against the backdrop of unresolved -

The North African Military Balance Have Been Erratic at Best

CSIS Center for Strategic and International Studies 1800 K Street N.W. Washington, DC 20006 (202) 775-3270 Access Web: www.csis.org Contact the Author: [email protected] The North African Military Balance: Force Developments in the Maghreb Anthony H. Cordesman Center for Strategic and International Studies With the Assistance of Khalid Al-Rodhan Working Draft: Revised March 28, 2005 Please note that this document is a working draft and will be revised regularly. To comment, or to provide suggestions and corrections, please e-mail the author at [email protected]. Cordesman: The Middle East Military Balance: Force Development in North Africa 3/28/05 Page ii Table of Contents: I. INTRODUCTION; RESOURCES AND FORCE TRENDS; II. NATIONAL MILITARY FORCES; THE MILITARY FORCES OF MOROCCO; Moroccan Army; Moroccan Navy -

Worldwide Equipment Guide

WORLDWIDE EQUIPMENT GUIDE TRADOC DCSINT Threat Support Directorate DISTRIBUTION RESTRICTION: Approved for public release; distribution unlimited. Worldwide Equipment Guide Sep 2001 TABLE OF CONTENTS: Memorandum, 24 Sep 2001; Introduction; Table: Units of Measure; Errata Notes; Supplement Page Changes; 1. INFANTRY WEAPONS; Small Arms: AK-74 5.45-mm Assault Rifle, RPK-74 5.45-mm Light Machinegun, AK-47 7.62-mm Assault Rifle; Sniper Rifles. Second column of the contents page: V-150; VTT-323; WZ 551; YW 531A/531C/Type 63 Vehicle Series; YW 531H/Type 85 Vehicle Series; Infantry Fighting Vehicles: AMX-10P IFV, BMD-1 Airborne Fighting Vehicle, BMD-3 Airborne Fighting Vehicle, BMP-1 IFV, BMP-1P IFV -

Weapons Transfers and Violations of the Laws of War in Turkey

WEAPONS TRANSFERS AND VIOLATIONS OF THE LAWS OF WAR IN TURKEY Human Rights Watch Arms Project Human Rights Watch New York · Washington · Los Angeles · London · Brussels Copyright © November 1995 by Human Rights Watch. All rights reserved. Printed in the United States of America. Library of Congress Catalog Card Number: 95-81502 ISBN 1-56432-161-4 HUMAN RIGHTS WATCH Human Rights Watch conducts regular, systematic investigations of human rights abuses in some seventy countries around the world. It addresses the human rights practices of governments of all political stripes, of all geopolitical alignments, and of all ethnic and religious persuasions. In internal wars it documents violations by both governments and rebel groups. Human Rights Watch defends freedom of thought and expression, due process and equal protection of the law; it documents and denounces murders, disappearances, torture, arbitrary imprisonment, exile, censorship and other abuses of internationally recognized human rights. Human Rights Watch began in 1978 with the founding of its Helsinki division. Today, it includes five divisions covering Africa, the Americas, Asia, the Middle East, as well as the signatories of the Helsinki accords. It also includes five collaborative projects on arms transfers, children's rights, free expression, prison conditions, and women's rights. It maintains offices in New York, Washington, Los Angeles, London, Brussels, Moscow, Dushanbe, Rio de Janeiro, and Hong Kong. Human Rights Watch is an independent, nongovernmental organization, supported by contributions from private individuals and foundations worldwide. It accepts no government funds, directly or indirectly. The staff includes Kenneth Roth, executive director; Cynthia Brown, program director; Holly J. -

VOLUME 14 . ISSUE 100 . YEAR 2020 ISSN 1306 5998 MERSEN WORLDWIDE SPECIALIST in MATERIALS SOLUTIONS Expertise, Performance and Innovation

VOLUME 14 . ISSUE 100 . YEAR 2020 ISSN 1306 5998 MERSEN WORLDWIDE SPECIALIST IN MATERIALS SOLUTIONS Expertise, Performance and Innovation. Iso and Extruded Graphite, C/C Composites, Insulation Board, Flexible Graphite, Sintered SiC. Material Enhancement: Densification, Impregnation, Purification, Coatings. Engineering: solutions and precise machining for your needs. Rocket Nozzles, Ceramic Armour, Semiconductor, C/C Carrier, Hot Press Mold, SiC Mirror. GOSB Ihsan Dede Caddesi 900 Sokak 41480 Gebze-Kocaeli/TURKEY T+90 262 7510262 F+90 262 7510268 www.mersen.com [email protected] Yayıncı / Publisher: Hatice Ayşe EVERS. Genel Yayın Yönetmeni / Editor in Chief: Hatice Ayşe EVERS (AKALIN) [email protected] Şef Editör / Managing Editor: Cem AKALIN [email protected] Uluslararası İlişkiler Direktörü / International Relations Director: Şebnem AKALIN [email protected] Editör / Editor: İbrahim SÜNNETÇİ [email protected] İdari İşler Kordinatörü / Administrative Coordinator: Yeşim BİLGİNOĞLU YÖRÜK [email protected] Muhabir / Correspondent: Saffet UYANIK [email protected] Çeviri / Translation: Tanyel AKMAN [email protected] Redaksiyon / Proof Reading: Mona Melleberg YÜKSELTÜRK. Grafik & Tasarım / Graphics & Design: Gülsemin BOLAT, Görkem ELMAS [email protected] Featured articles: A Look at the Current Status of the Turkish MMU/TF-X Program; Turkey’s Medium Segment System Provider / Integrator SDT Accelerates on Export Opportunities; FNSS General Manager & CEO Nail KURT Evaluates 2020 and Future Outlook -

Worldwide Equipment Guide Volume 2: Air and Air Defense Systems

Worldwide Equipment Guide, Dec 2016. Worldwide Equipment Guide Volume 2: Air and Air Defense Systems. TRADOC G-2 ACE–Threats Integration, Ft. Leavenworth, KS. Distribution Statement: Approved for public release; distribution is unlimited. UNCLASSIFIED.

Opposing Force: Worldwide Equipment Guide Chapters, Volume 2 (Air and Air Defense Systems): Volume 2 Signature Letter; Volume 2 TOC and Introduction; Volume 2 Tier Tables (Fixed Wing, Rotary Wing, UAVs, Air Defense); Chapter 1 Fixed Wing Aviation; Chapter 2 Rotary Wing Aviation; Chapter 3 UAVs; Chapter 4 Aviation Countermeasures, Upgrades, Emerging Technology; Chapter 5 Unconventional and SPF Aerial Systems; Chapter 6 Theatre Missiles; Chapter 7 Air Defense Systems.

Units of Measure. The following example symbols and abbreviations are used in this guide.

| Unit of Measure | Parameter |
|---|---|
| (°) | degrees (of slope/gradient, elevation, traverse, etc.) |
| GHz | gigahertz: frequency (GHz = 1 billion hertz) |
| hp | horsepower (kW × 1.341 = hp) |
| Hz | hertz: unit of frequency |
| kg | kilogram(s) (2.2 lb.) |
| kg/cm² | kg per square centimeter: pressure |
| km | kilometer(s) |
| km/h | km per hour |
| kt | knot: speed. 1 kt = 1 nautical mile (nm) per hr. |
| kW | kilowatt(s) (1 kW = 1,000 watts) |
| liters | liters: liquid measurement (1 gal. = 3.785 liters) |
| m | meter(s): if over 1 meter use meters; if under use mm |
| m³ | cubic meter(s) |
| m³/hr | cubic meters per hour: earth moving capacity |
| m/hr | meters per hour: operating speed (earth moving) |
| MHz | megahertz: frequency (MHz = 1 million hertz) |
| mach | mach + (factor): aircraft velocity (average 1062 km/h) |
| mil | milliradian, radial measure (360° = 6400 mils, 6000 Russian) |
| min | minute(s) |
| mm | millimeter(s) |
| m/s | meters per second: velocity |
| mt | metric ton(s) (mt = 1,000 kg) |
| nm | nautical mile = 6076 ft (1.152 miles or 1.86 km) |
| rd/min | rounds per minute: rate of fire |
| RHAe | rolled homogeneous armor (equivalent) |
| shp | shaft horsepower: helicopter engines (kW × 1.341 = shp) |
| µm | micron/micrometer: wavelength for lasers, etc. - |

Air-To-Air Missiles

AAMs Link Page: AIR-TO-AIR MISSILES. British AAMs, Chinese AAMs, French AAMs, Israeli AAMs, Italian AAMs, Russian AAMs, South African AAMs, US AAMs.

British Air-to-Air Missiles

Firestreak

Notes: Firestreak was an early British heat-seeking missile, roughly analogous to the Sidewinder (though much larger). The testing program started in 1954, and the missile was so successful that for the first 100 launches, the engineers learned practically nothing about any potential weaknesses of the Firestreak. (Later testing revealed an accuracy rating of about 85% when fired within the proper parameters, still a remarkable total.) The Firestreak had been largely replaced by later missiles by 2003, though the few countries still using the Lightning (mostly Saudi Arabia and Kuwait) still had some Firestreaks on hand.

| Weapon | Weight | Accuracy | Guidance | Sensing | Price |
|---|---|---|---|---|---|
| Firestreak | 137 kg | Average | IR | Rear Aspect | $7400 |

| Weapon | Speed | Min Rng | Max Rng | Damage | Pen | Type |
|---|---|---|---|---|---|---|
| Firestreak | 5095 | 1065 | 8000 | C47 B100 | 26C | FRAG-HE |

Red Top

Notes: The Red Top began as an upgrade to the Firestreak (and was originally called the Firestreak Mk IV). The Red Top was to overcome the narrow angle of acquisition of the Firestreak, as well as to rearrange the components of the Firestreak in a more logical and efficient pattern. Advances in technology allowed a better seeker head, and the change in design, together with advances in explosives technology, allowed a more lethal warhead. However, by 2003, the Red Top had the same status as the Firestreak: largely in storage except in those countries still using the Lightning. -

Advanced Technology Acquisition Strategies of the People's Republic

Advanced Technology Acquisition Strategies of the People’s Republic of China Principal Author Dallas Boyd Science Applications International Corporation Contributing Authors Jeffrey G. Lewis and Joshua H. Pollack Science Applications International Corporation September 2010 This report is the product of a collaboration between the Defense Threat Reduction Agency’s Advanced Systems and Concepts Office and Science Applications International Corporation. The views expressed herein are those of the authors and do not necessarily reflect the official policy or position of the Defense Threat Reduction Agency, the Department of Defense, or the United States Government. This report is approved for public release; distribution is unlimited. Defense Threat Reduction Agency Advanced Systems and Concepts Office Report Number ASCO 2010-021 Contract Number DTRA01-03-D-0017, T.I. 18-09-03 The mission of the Defense Threat Reduction Agency (DTRA) is to safeguard America and its allies from weapons of mass destruction (chemical, biological, radiological, nuclear, and high explosives) by providing capabilities to reduce, eliminate, counter the threat, and mitigate its effects. The Advanced Systems and Concepts Office (ASCO) supports this mission by providing long-term rolling horizon perspectives to help DTRA leadership identify, plan, and persuasively communicate what is needed in the near-term to achieve the longer-term goals inherent in the Agency’s mission. ASCO also emphasizes the identification, integration, and further development of leading strategic thinking and analysis on the most intractable problems related to combating weapons of mass destruction. For further information on this project, or on ASCO’s broader research program, please contact: Defense Threat Reduction Agency Advanced Systems and Concepts Office 8725 John J. -

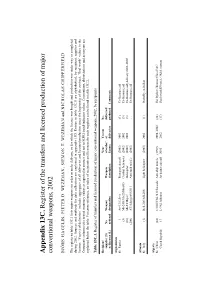

Appendix 13C. Register of the Transfers and Licensed Production of Major Conventional Weapons, 2002

Appendix 13C. Register of the transfers and licensed production of major conventional weapons, 2002. BJÖRN HAGELIN, PIETER D. WEZEMAN, SIEMON T. WEZEMAN and NICHOLAS CHIPPERFIELD. The register in table 13C.1 lists major weapons on order or under delivery, or for which the licence was bought and production was under way or completed during 2002. Sources and methods for data collection are explained in appendix 13D. Entries in table 13C.1 are alphabetical, by recipient, supplier and licenser. ‘Year(s) of deliveries’ includes aggregates of all deliveries and licensed production since the beginning of the contract. ‘Deal worth’ values in the Comments column refer to real monetary values as reported in sources and not to SIPRI trend-indicator values. Conventions, abbreviations and acronyms are explained below the table. For cross-reference, an index of recipients and licensees for each supplier can be found in table 13C.2.

Table 13C.1. Register of transfers and licensed production of major conventional weapons, 2002, by recipients

| Recipient/ supplier (S) or licenser (L) | No. ordered | Weapon designation | Weapon description | Year of order/ licence | Year(s) of deliveries | No. delivered/ produced | Comments |
|---|---|---|---|---|---|---|---|
| Afghanistan, S: Russia | 1 | An-12/Cub-A | Transport aircraft | (2002) | 2002 | (1) | Ex-Russian; aid |
| | (5) | Mi-24D/Mi-25/Hind-D | Combat helicopter | (2002) | 2002 | (5) | Ex-Russian; aid |
| | (10) | Mi-8T/Hip-C | Helicopter | (2002) | 2002 | (3) | Ex-Russian; aid; delivery 2002–2003 |
| | (200) | AT-4 Spigot/9M111 | Anti-tank missile | (2002) | | . | Ex-Russian; aid |
| Albania, S: Italy | (1) | Bell-206/AB-206 | Light helicopter | | | | |