Report from the AI 360 COPENHAGEN Workshop

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

What to Know and Where to Go

What to Know and Where to Go: A Practical Guide for International Students at the Faculty of Science
CONTENT
1. INTRODUCTION
2. WHO TO CONTACT?
   FULL-DEGREE STUDENTS
   GUEST/EXCHANGE STUDENTS
3. ACADEMIC CALENDAR AND TIMETABLE GROUPS
   NORMAL TEACHING BLOCKS
   GUIDANCE WEEK
   THE SUMMER PERIOD
   THE 2009/2010 ACADEMIC YEAR
   HOLIDAYS & PUBLIC -

Denmark's Central Bank Nationalbanken

DANMARKS NATIONALBANK THE DANMARKS NATIONALBANK BUILDING
Contents 7 Preface 8 An integral part of the urban landscape 10 The facades 16 The lobby 22 The banking hall 24 The conference and common rooms 28 The modular offices 32 The banknote printing hall 34 The canteen 36 The courtyards 40 The surrounding landscaping 42 The architectural competition 43 The building process 44 The architect Arne Jacobsen
One of the two courtyards, called Arne’s Garden. The space supplies daylight to the surrounding offices and corridors.
Preface Danmarks Nationalbank is Denmark’s central bank. Its objective is to ensure a robust economy in Denmark, and Danmarks Nationalbank holds a range of responsibilities of vital socioeconomic importance. The Danmarks Nationalbank building is centrally located in Copenhagen and is a distinctive presence in the urban landscape. The building, which was built in the period 1965–78, was designed by internationally renowned Danish architect Arne Jacobsen. It is considered to be one of his principal works. In 2009, it became the youngest building in Denmark to be listed as a historical site. When the building was listed, the Danish Agency for Culture highlighted five elements that make it historically significant: 1. The building’s architectural appearance in the urban landscape 2. The building’s layout and spatial qualities 3. The exquisite use of materials 4. The keen attention to detail 5. The surrounding gardens This publication presents the Danmarks Nationalbank building, its architecture, interiors and the surrounding gardens. For the most part, the interiors are shown as they appear today. -

Baltic Cruise Adventure to Last a Lifetime! That Has Long Been the City’s Most Popular Meeting Place

The Northern Illinois University Alumni Association Presents Baltic Cruise Adventure July 10 – 22, 2020 Balcony Cabin $6,175 Per Person, Double Occupancy from Chicago; Single Supplement - $2,290 This is an exclusive travel program presented by the Northern Illinois University Alumni Association
Day by Day Itinerary
Friday, July 10 – CHICAGO / EN ROUTE (I) This evening we depart Chicago’s O’Hare International Airport by scheduled service of Scandinavian Airlines on the overnight transatlantic flight to Copenhagen, Denmark.
Saturday, July 11 – COPENHAGEN (I,D) Early this afternoon we arrive in Copenhagen, where we’ll meet our local Tour Guide who will introduce us to the Danish capital. As we’ll soon find out, Copenhagen is a gracious and beautiful city whose main attractions include three royal palaces - Rosenborg, Amalienborg and Christiansborg, as well as numerous museums, churches and monuments all found within its medieval streets, charming canals, and spacious squares and gardens.
reached on foot. We’ll see the Baroque façade of the Rathaus, the Steintor, the best known of the city gates in the old town wall, and visit the Marienkirche, home to the famous astronomical clock built in 1472. Afterward, we’ll travel to Bad Doberan to visit the medieval Gothic abbey of Doberan – an opportunity to marvel at the artistic mastership of the monks. Following our visit, we’ll board the nostalgic Molli train for a scenic rail journey to Kuehlungsborn, where free time will be set aside to stroll the Baltic Sea promenade.
Wednesday, July 15 – CRUISING (At Sea) (B,L,D) Just as each destination on our cruise is a voyage of discovery, so too is our beautiful ship. The Escape is one of the newest -

Copenhagen Hotel Guide 13 Top Hotels Recommended for Cruise Guests Double Duty for Your Dollar

Copenhagen Hotel Guide 13 Top Hotels Recommended For Cruise Guests Double Duty For Your Dollar
The Avid Cruiser’s Guide To Copenhagen Hotels features 13 properties that we think our readers will enjoy when cruising from the Danish capital. First, of course, you must decide whether to plant yourself in Copenhagen before (or after) your cruise. Or should you save the expense of a hotel and arrive the day your cruise departs? There are at least two reasons for arriving a day or two early. One is that you never know if you and/or your luggage will arrive before your ship departs. Airlines can be delayed, luggage can be lost. I met a couple on a Baltic cruise three years ago whose flight was delayed because of storms on the U.S. East Coast. They spent the next day catching up with the ship, eventually boarding in Tallinn, Estonia.
breakfast included, to $665 per night (don’t balk at the rate until you’ve read the review.) All but two of the hotels are located in the heart of Copenhagen. Skovshoved, situated in a charming fishing village four miles north of the Danish capital, is a lovely 22-room hotel with an award-winning restaurant, bar and a local pub next door (grab a beer and a Gammel Dansk and chat up the locals). Also outside the heart of the city is the Hilton Copenhagen Airport, an excellent choice for those who want to “sleep in” before their flights home. The other eleven city-center hotels each have characters of -

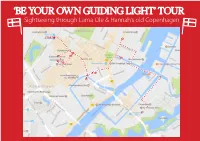

'Be Your Own Guiding Light' Tour

‘BE YOUR OWN GUIDING LIGHT’ TOUR Sightseeing through Lama Ole & Hannah’s old Copenhagen
As a good starting point for this tour, one can get off at Nørreport station and just walk through the suggested order of numbers. At a good pace, one can make it from 1 to 10, as shown on the map, in approximately 2 hours (including stops to take pictures, enjoy the view and have a local beer in no. 8 – Café Nick). If one has extra time one can walk afterwards to no. 11
3.3 The Boxing Club (Fiolstræde 2 – the grey low building after the Italian restaurant on the corner) Lama Ole used to come here regularly to box for 4 years during his university days. He calls it ‘The Academic Danish Boxing Club’.
7 Café Nick (Nikolajgade 20) One of Lama Ole and his brother Bjørn’s favourite bars during their wild days. It is nowadays one of the few authentic old school bars remaining in Copenhagen. Here they used to engage in a lot of fights. They say each opponent used to put some Danish crowns on the bar and go outside to fight.
Walk back through Nyhavn harbour, cross the pedestrian and bicycle bridge – maybe combined with eating at the popular Street Food place in Papirøen – and head towards Christianshavn harbour. Walk along the canal towards no. 11, Our Saviour’s Church – one can climb up the stairs of the tower and have a great view of Copenhagen for the entrance fee of 40 -

From Copenhagen Central Station by Bus • Address: 22 Nyhavn, 1051 København • Tel

Welcome to thinkstep!
Hotels in Copenhagen
1. Hotel Bethel
2. Hotel Maritime
3. Admiral Hotel
4. Danhostel Copenhagen City
5. Radisson Blu
Hotel Bethel
• Location: Situated approx. 1 km from the Royal Danish Library (10/15 minute walk along the water), about 20 minutes away from Copenhagen airport by either metro or car, about 15 minutes away from Copenhagen Central Station by bus
• Address: 22 Nyhavn, 1051 København
• Tel. number: +45 33 13 03 70
• Cost of single room: from 895 DKK per night
• Website: http://www.hotel-bethel.dk/index.php/en/
• Directions:
• From the airport by car: Take Ellehammersvej to E20. Continue on E20 to København S. Take exit 20-København C from E20. Continue on Sjællandsbroen to København SV. Continue onto Vasbygade/O2. Turn right onto Holbergsgade and then follow the street until Nyhavn. Then turn left onto Nyhavn.
• From the airport by metro: Take the line M2 in the direction of Vanløse and get off at Kongens Nytorv.
• From Copenhagen Central Station: Do not leave the platform by the exit in the main station building; use the exit on the other side of the platform. The bus stop is situated on a bridge that crosses the train tracks and is called Hovedbanegården (Tietgensgade). Take the bus 1A in the direction of Hellerup St. or Klampenborg St. and get off at Kongens Nytorv.
• From Kongens Nytorv to hotel: Cross the O2 street and pass by Det Kongelige Teater (Royal Danish Theater). Then turn right onto Nyhavn. The hotel is on your right side.
Hotel Maritime
• Location: Situated approx. -

The Proliferation of Video Surveillance in Brussels and Copenhagen

TOWARDS THE PANOPTIC CITY THE PROLIFERATION OF VIDEO SURVEILLANCE IN BRUSSELS AND COPENHAGEN This master thesis has been co-written by Corentin Debailleul and Pauline De Keersmaecker 4Cities UNICA Euromaster in Urban Studies 2012-2014 Université Libre de Bruxelles (ULB) Supervisor : Mathieu Van Criekingen (Université Libre de Bruxelles) Second reader : Henrik Reeh (Københavns Universitet) Hence the major effect of the Panopticon: to induce in the inmate a state of conscious and permanent visibility that assures the automatic functioning of power. So to arrange things that the surveillance is permanent in its effects, even if it is discontinuous in its action; that the perfection of power should tend to render its actual exercise unnecessary; that this architectural apparatus should be a machine for creating and sustaining a power relation independent of the person who exercises it; in short, that the inmates should be caught up in a power situation of which they are themselves the bearers. To achieve this, it is at once too much and too little that the prisoner should be constantly observed by an inspector: too little, for what matters is that he knows himself to be observed; too much, because he has no need in fact of being so. In view of this, Bentham laid down the principle that power should be visible and unverifiable. Visible: the inmate will constantly have before his eyes the tall outline of the central tower from which he is spied upon. Unverifiable: the inmate must never know whether he is being looked at at any one moment; but he must be sure that he may always be so. -

Research on Scale of Urban Squares in Copenhagen

Research on scale of urban squares in Copenhagen
Master of Science Programme in Spatial Planning with an emphasis on Urban Design in China and Europe
Author: Chang Liu Email: [email protected] Supervisor: Gunnar Nyström Date: 2013/08/13 Blekinge Tekniska Högskola, Karlskrona, Sweden
Abstract The urban square is one type of urban public space which has a long history in western countries. The square in China is a more recent concept, and designing squares seems dominated by the concept "the bigger city, the bigger square". This has triggered the author to start research on the scale of squares. The theoretical work is based on previous studies of spatial scale and square scale, combined with the theory of human dimension from physical and psychological factors. The absolute scale of a square is constituted by its size, while the relative scale reflects the square’s power of attraction. In the investigation of the six sites, the size of the square, the size of subspaces, and the height and width of buildings in the surroundings are measured, and the manner of enclosure and space division is observed; thus the architectural field is calculated and evaluated. How many people come to the squares and how the spaces are used is also observed. A comparison and analogy between each of the two squares of the same type is conducted in the discussion chapter. The scale of three types of squares in the Scandinavian capital city Copenhagen is looked at: civic squares, traffic evacuation squares, leisure and entertainment squares. -

19857 Nationalbanken.HG

English edition Danmarks Nationalbank
TABLE OF CONTENTS
The architect Arne Jacobsen, p. 4
Fitting into the street scene: Spatial organization, p. 6
The facades: Open and closed facades, p. 8; Glass facades, p. 10; Natural stone facades, p. 11
Spatial descriptions: Lobby, p. 14; Corridor, p. 16; Banking hall, p. 16; Conference rooms, p. 18; Employee lounge, p. 19; Lounge/reception area, p. 20; Offices, p. 21; Office landscapes, p. 22; Details of furnishings, p. 23; Banknote printing hall, p. 24; Canteen, p. 26
Landscaping: Courtyard above the printing hall, p. 28; Courtyard above the banking hall, p. 29; Roof garden above the low building, p. 30; Forecourt, p. 30
Architecture competition: Background, p. 32; The competition, p. 32; Winning project, p. 32
Building history: Stages, p. 33; Timeframe, p. 33; Functions, p. 33
Published by Danmarks Nationalbank, Havnegade 5, DK-1093 Copenhagen K, Telephone +45 3363 6363, Fax +45 3363 7103, www.nationalbanken.dk, [email protected]
Portions of this publication may be quoted or reprinted without further permission, provided that Danmarks Nationalbank is expressly credited as the source and that photographers are credited.
Graphic design and layout: DISSING+WEITLING
Printing: HellasGrafisk, Haslev
Photos indicated by page and picture no.:
DISSING+WEITLING: 4-2, 4-4, 4-9, 4-10, 4-15, 4-17, 4-19, 4-20, 10-1, 12-4, 12-6, 18-4, 28-2, 28-3, 28-4, 28-5, 29, 32-3
DISSING+WEITLING / Adam Mørk: Cover photo, 1, 3, 6, 8, 9, 10-2, 10-3, 11, 12-1, 12-2, 12-3, 12-5, 13, 14, 15, 18-1, 18-2, 18-3, 19, 20, 21, 22, 23, 24, 25, 26-2, 27, 30, 31
Arne Jacobsen: 4-1, 4-3, 4-5, 4-6, 4-7, 28-1, 32-2
Mydtskov og Rønne: 16, 17, 26-1
Stelton A/S: 4-18
Strüwing Reklamefoto: 4-8, 4-11, 4-12, 4-14, 4-16, 5, 32-1, 32-4, 33
Jan Kofoed Winther: 7 -

Copenhagen's Green Economy

Copenhagen Green Economy Leader Report
A report by the Economics of Green Cities Programme at the London School of Economics and Political Science.
Research Directors: Graham Floater, Director of Seneca and Principal Research Fellow, London School of Economics and Political Science; Philipp Rode, Executive Director of LSE Cities and Senior Research Fellow, London School of Economics and Political Science; Dimitri Zenghelis, Principal Research Fellow, Grantham Research Institute, London School of Economics and Political Science
Research Team: Matthew Ulterino, Researcher, LSE Cities; Duncan Smith, Research Officer, LSE Cities; Karl Baker, Researcher, LSE Cities; Catarina Heeckt, Researcher, LSE Cities
Advisors: Nicky Gavron, Greater London Authority
Production and Graphic Design: Atelier Works, www.atelierworks.co.uk
London School of Economics and Political Science, Houghton Street, London WC2A 2AE, UK. Tel: +44 (0)20 7405 7686
The full report is available for download from: http://www.kk.dk/da/om-kommunen/indsatsomraader-og-politikker/natur-miljoe-og-affald/klima/co2-neutral-hovedstad
This Report is intended as a basis for discussion. While every effort has been made to ensure the accuracy of the material in this report, the authors and/or LSE Cities will not be liable for any loss or damage incurred through the use of this report. Published by LSE Cities, London School of Economics and Political Science, 2014. Research support for this project was provided by Seneca Consultants SPRL. Cover photo credit: [email protected]
Contents Executive Summary -

Danish Library Guide

Information Guide DIS Library & Danish Libraries 2016
Brief Overview This booklet contains a thorough guide to both the DIS Library and other libraries in and around Copenhagen. The libraries listed in this book are those that we feel are the most relevant for DIS students. While information about opening hours, locations and contact details can be found here, the DIS Library Resource section contains links to their databases as well. The DIS Library webpage also offers access to a variety of online journals, newspapers and academic search engines. For more information regarding online resources, visit the library webpage listed above. If you have any questions or feedback, please see the DIS Librarian, the Assistant Librarian or one of the assistants in the DIS library office located on Vestergade 10A, second floor.
Contents
Welcome to the DIS Library
Access to Electronic Resources
General Danish Library Information and Regulations
Danish Library Guide
The Royal Library
Copenhagen Business School Library
Royal Architecture Library
Copenhagen Main Public Library -

THE COPENHAGEN CITY and PORT DEVELOPMENT CORPORATION: a Model for Regenerating Cities

THE COPENHAGEN CITY AND PORT DEVELOPMENT CORPORATION: A Model for Regenerating Cities BRUCE KATZ | LUISE NORING
COVER PHOTO AERIAL VIEW OF THE PORT OF COPENHAGEN © Ole Malling
INTRODUCTION
Cities across the world face increasing demands at a time when public resources are under enormous pressure. Many older cities, in particular, are plagued by outdated transportation and energy infrastructure and underutilized industrial and waterfront areas, all of which need to be upgraded for a radically changed economy. This has sent many U.S. (and global) cities scrambling to find new vehicles for infrastructure finance given the unpopularity of increasing taxes and the unpredictability of national and state governments.
To revive their flagging city in the late 1980s, a coalition of national and local officials laid the groundwork for the Copenhagen (CPH) City & Port Development Corporation. Its success provides a 21st-century model for global urban renewal.
Combining strategic zoning, land transfers, and revenue-generating mechanisms, this model has helped spur a remarkable transformation of Copenhagen over the past 25 years from an ailing manufacturing city to one of the wealthiest cities in the world. It has made Copenhagen’s industrial harbor a vibrant, multipurpose waterfront while channeling the proceeds of land disposition, revaluation, and development to finance the construction of an expanded metro transit system.
The Copenhagen public/private corporate model combines the efficiency of market discipline and mechanisms with the benefits of public direction and legitimacy. The model enables large-scale regeneration to be conducted in a more efficient