A Framework for Form-Based Conceptual Design in Structural Engineering

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


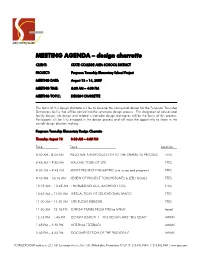

Design Charrette

MEETING AGENDA – DESIGN CHARRETTE

- CLIENT: STATE COLLEGE AREA SCHOOL DISTRICT
- PROJECT: Ferguson Township Elementary School Project
- MEETING DATE: August 13 – 14, 2009
- MEETING TIME: 8:30 AM – 4:00 PM
- MEETING TOPIC: DESIGN CHARRETTE

The focus of this design charrette will be to develop the conceptual design for the Ferguson Township Elementary facility that will be carried into the schematic design process. The integration of educational facility design, site design and related sustainable design techniques will be the focus of this process. Participants will be fully engaged in the design process and will have the opportunity to share in the overall design decision making.

Ferguson Township Elementary Design Charrette

Thursday August 13, 8:30 AM – 4:00 PM

| Time | Topic | Location |
| --- | --- | --- |
| 8:30 AM – 8:45 AM | WELCOME & INTRODUCTION TO THE CHARRETTE PROCESS | FTES |
| 8:45 AM – 9:30 AM | WALKING TOUR OF SITE | FTES |
| 9:30 AM – 9:45 AM | REVISIT PROJECT PARAMETERS (site issues and program) | FTES |
| 9:45 AM – 10:15 AM | REVIEW OF PROJECT TOUCHSTONES & LEED GOALS | FTES |
| 10:15 AM – 10:45 AM | THE BUILDING AS A TEACHING TOOL | FTES |
| 10:45 AM – 11:00 AM | VIRTUAL TOUR OF EDUCATIONAL SPACES | FTES |
| 11:00 AM – 11:30 AM | SITE FLOWS EXERCISE | FTES |
| 11:30 AM – 12:15 PM | LUNCH / TRAVEL FROM FTES to MNMS | travel |
| 12:15 PM – 1:45 PM | DESIGN SESSION 1 – SITE DESIGN AND “BIG IDEAS” | MNMS |
| 1:45 PM – 3:30 PM | INTERNAL FEEDBACK | MNMS |
| 3:30 PM – 4:00 PM | DOCUMENTATION OF THE “BIG IDEAS” | MNMS |

SCHRADERGROUP architecture LLC | 161 Leverington Avenue, Suite 105 | Philadelphia, Pennsylvania 19127 | T: 215.482.7440 | F: 215.482.7441 | www.sgarc.com

Friday August 14, 8:30 AM – 4:00 PM

| Time | Topic | Location |
| --- | --- | --- |
| 8:30 AM – 8:45 AM | REFLECTION ON THE “BIG IDEAS” | MNMS |
| 8:45 AM – 10:15 AM | DESIGN SESSION 2 | MNMS |
| 10:15 AM – 12:00 PM | INTERNAL FEEDBACK | MNMS |
| 12:00 PM – 12:45 PM | LUNCH | brown bag |
| 12:45 PM – 2:15 PM | DESIGN SESSION 3 | MNMS |
| 2:15 PM – 3:30 PM | INTERNAL FEEDBACK | MNMS |
| 3:30 PM – 4:00 PM | NEXT STEPS | MNMS |

The information developed during this design charrette will become the basis for the schematic design process to be carried through the process.

Draft Conceptual Design Report Bolinas Lagoon North End Restoration Project

DRAFT. Draft Conceptual Design Report, Bolinas Lagoon North End Restoration Project. Marin County Parks and Open Space District, August 2017.

Prepared for: Marin County Parks and Open Space District, 3501 Civic Center Drive, Suite 260, San Rafael, CA 94903. Prepared by: AECOM, 300 Lakeside Drive, Suite #400, Oakland, CA 94612, aecom.com. Prepared in association with: Carmen Ecological Consulting, Watershed Sciences, Peter Baye Ecological Consulting.

Table of Contents: Executive Summary; PART I – PROJECT OVERVIEW; 1. Introduction; 1.1 Project Location; 1.2 Purpose and Need

A Handling Qualities Analysis Tool for Rotorcraft Conceptual Designs

The Aeronautical Journal, June 2018, Volume 122, No. 1252, pp. 960–987. © Royal Aeronautical Society 2018. doi: 10.1017/aer.2018.43

A handling qualities analysis tool for rotorcraft conceptual designs. B. Lawrence (NASA’s Ames Research Center, San Jose State University, Moffett Field, California, US); C. R. Theodore and W. Johnson (NASA’s Ames Research Center, National Aeronautics and Space Administration, Moffett Field, California, US); T. Berger (U.S. Army Aviation Development Directorate, Moffett Field, California, US).

ABSTRACT: Over the past decade, NASA, under a succession of rotary-wing programs, has been moving towards coupling multiple discipline analyses to evaluate rotorcraft conceptual designs. Handling qualities is one of the component analyses to be included in such a future Multidisciplinary Analysis and Optimization framework for conceptual design of Vertical Take-Off and Landing (VTOL) aircraft. Similarly, the future vision for the capability of the Concept Design and Assessment Technology Area of the U.S. Army Aviation Development Directorate also includes a handling qualities component. SIMPLI-FLYD is a tool jointly developed by NASA and the U.S. Army to perform modelling and analysis for the assessment of the handling qualities of rotorcraft conceptual designs. Illustrative scenarios of a tiltrotor in forward flight and a single-main rotor helicopter at hover are analysed using a combined process of SIMPLI-FLYD integrated with the conceptual design sizing tool NDARC. The effects of variations of input parameters such as horizontal tail and tail rotor geometry were evaluated in the form of margins to fixed- and rotary-wing handling qualities metrics and the computed vehicle empty weight.

Design Process Visualizing and Review System with Architectural Concept Design Ontology

INTERNATIONAL CONFERENCE ON ENGINEERING DESIGN, ICED’07, 28 - 31 AUGUST 2007, CITE DES SCIENCES ET DE L'INDUSTRIE, PARIS, FRANCE. DESIGN PROCESS VISUALIZING AND REVIEW SYSTEM WITH ARCHITECTURAL CONCEPT DESIGN ONTOLOGY. Sung Ah Kim and Yong Se Kim, Creative Design & Intelligent Tutoring Systems (CREDITS) Research Center, Sungkyunkwan University, Korea.

ABSTRACT: DesignScape, a prototype design process visualization and review system, is being developed. Its purpose is to visualize the design process in a more intuitive manner so that one can gain insight into the complicated aspects of the design process. By providing a tangible utility to the design process performed by expert designers or guided by the system, novice designers will be greatly helped to learn how to approach a certain class of design. Beyond serving as an analysis tool to represent the characteristics of the design process, the system will also be useful for learning the design process. A design ontology is being developed as a critical part of the system, to represent designers’ activities associated with various design information during the conceptual design process, and then to be utilized in a computer environment for design analysis and guidance. To develop the design ontology, a conceptual framework of a design activity model is proposed, and the model has been tested and elaborated by investigating the early phase of architectural design. A design process representation model is conceptualized based on the ontology and reflected in the development of the system.

Keywords: Design Process, Design Research, Design Ontology, Design Activity, Architectural Design

1 INTRODUCTION: Design is an evolving process interwoven with numerous intermediate representations and various design information.

Supporting Pre-Production in Game Development Process Mapping and Principles for a Procedural Prototyping Tool

Degree project in the field of technology Industrial Engineering and Management and the main field of study Computer Science and Engineering, second cycle, 30 credits. Stockholm, Sweden 2020. Supporting Pre-Production in Game Development: Process Mapping and Principles for a Procedural Prototyping Tool. Paulina Hollstrand, KTH Royal Institute of Technology, School of Electrical Engineering and Computer Science.

Abstract: Game development combines traditional software activities with creative work. As a result, game design practices are characterized by an extensive process of iterative development and evaluation, where prototyping is a major component to test and evaluate the player experience. Content creation for the virtual world the players inhabit is one of the most time-consuming aspects of production. This experimental research study focuses on analyzing and formulating challenges and desired properties in a prototyping tool based on Procedural Content Generation to assist game designers in their early ideation process. To investigate this, a proof of concept was iteratively developed based on information gathered from interviews and evaluations with world designers during a conceptual and design study. The final user study assessed the tool’s functionalities and indicated its potential utility in enhancing the designers’ content exploration and risk management during pre-production activities. Key guidelines for the tool’s architecture can be distilled into: (1) A modular design approach supports balance between content controllability and creativity. (2) Design levels and feature representation should combine and range from Micro (specific) to Macro (high-level, abstract). The results revealed challenges in combining exploration of the design space with optimization and refinement of content.

6 Designing Conventional Data Warehouses

The development of a data warehouse is a complex and costly endeavor. A data warehouse project is similar in many aspects to any software development project and requires definition of the various activities that must be performed, which are related to requirements gathering, design, and implementation into an operational platform, among other things. Even though there is abundant literature in the area of software development (e.g., [48, 248, 282]), few publications have been devoted to the development of data warehouses. Some of these publications [15, 114, 119, 146, 242] have been written by practitioners and are based on their experience in building data warehouses. On the other hand, the scientific community has proposed a variety of approaches for developing data warehouses [28, 29, 35, 42, 47, 79, 82, 114, 167, 203, 221, 237, 246]. Nevertheless, many of these approaches target a specific conceptual model and are often too complex to be used in real-world environments. As a consequence, there is still a lack of a methodological framework that could guide developers in the various stages of the data warehouse development process. This situation results from the fact that the need to build data warehouse systems arose before the definition of formal approaches to data warehouse development, as was the case for operational databases [166]. In this chapter, we propose a general method for conventional-data-warehouse design that unifies existing approaches. This method allows designers and developers to better understand the alternative approaches that can be used for data warehouse design, helping them to choose the one that best fits their needs.

Application of Machine Learning Techniques in Conceptual Design

Journal of Guizhou University of Technology (Natural Science Edition), Vol. 31, No. 4, August 2002. Article ID: 1009-0193(2002)04-0021-06

Application of machine learning techniques in conceptual design. TANG Ming-xi (Design Technology Research Centre, School of Design, The Hong Kong Polytechnic University, Hong Kong, China).

Abstract: One of the challenges of developing a computational theory of the design process is to support learning by the use of computational mechanisms that allow for the generation, accumulation and transformation of design knowledge learned from design experts, or design examples. One of the ways in which machine learning techniques can be integrated in a knowledge-based design support system is to model the early stage of the design process as an incremental and inductive learning of design problem structures. The need for such a model arises from the need of capturing, refining, and transferring design knowledge at different levels of abstraction so that it can be manipulated easily. In design, the knowledge generated from past design solutions provides a feedback for modifying and enhancing the design knowledge base. Without a learning capability a design system is unable to reflect the designer's growing experience in the field and designer's ability to use knowledge abstracted from past design cases. In this paper, we present three applications of machine learning techniques in conceptual design and evaluate their effectiveness.

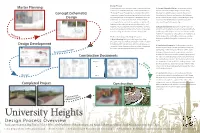

Design Process Overview

A multi-stage process is involved in order to take a project from a “vision” to a complete built project; commonly referred to as “The Design Process.” Projects that are developed through the Community Visioning Program and presented on these boards are the beginning of this design process. Depending upon the project scale, scope and detail, the length of time between each stage will vary as well as the people who are involved. The landscape architect may include other professionals (i.e., civil engineers, structural engineers, electrical engineers, surveyors, geotechnical engineers, building architects, etc.), on the design team, depending upon the type and scope of the project.

The five primary stages of the design process are:

1. Master Planning: During the initial stage of the design process the landscape architect develops the overall concept of the building and/or site. Both near- and long-term projects are […]
2. Concept (Schematic) Design: The landscape architect explores and creates multiple design concepts during this stage. Conceptual designs incorporate the general characteristics of elements and amenities. The landscape architect will modify the concepts created during this stage as more specific site information is gathered during the subsequent Design Development stage.
3. Design Development: During this stage the landscape architect works to refine the conceptual design and define the specific scope of the project. He or she also develops specific methods of construction, specific aesthetics and quality levels. The landscape architect will address various issues such as drainage, accessibility, illumination coverage, etc., in more depth.

Conceptual Design and the Entity-Relationship Model

Conceptual Design and The Entity-Relationship Model. CS 186 Spring 2006, Lectures 19 & 20. R&G, Chapter 2.

“A relationship, I think, is like a shark, you know? It has to constantly move forward or it dies. And I think what we got on our hands is a dead shark.” Woody Allen (from Annie Hall, 1979)

Steps in Database Design

- Requirements Analysis: user needs; what must the database do?
- Conceptual Design: high-level description (often done with the ER model)
- Logical Design: translate ER into DBMS data model
- Schema Refinement: consistency, normalization
- Physical Design: indexes, disk layout
- Security Design: who accesses what, and how

Databases Model the Real World

- A “Data Model” allows us to translate real world things into structures computers can store
- Many models: Relational, E-R, O-O, Network, Hierarchical, etc.
- Relational: Rows & Columns; Keys & Foreign Keys to link Relations

Enrolled

| sid | cid | grade |
| --- | --- | --- |
| 53666 | Carnatic101 | C |
| 53666 | Reggae203 | B |
| 53650 | Topology112 | A |
| 53666 | History105 | B |

Students

| sid | name | login | age | gpa |
| --- | --- | --- | --- | --- |
| 53666 | Jones | jones@cs | 18 | 3.4 |
| 53688 | Smith | smith@eecs | 18 | 3.2 |
| 53650 | Smith | smith@math | 19 | 3.8 |

Conceptual Design

- What are the entities and relationships in the enterprise?
- What information about these entities and relationships should we store in the database?
- What are the integrity constraints or business rules that hold?
- A database “schema” in the ER Model can be represented pictorially (ER diagrams).
- Can then map an ER diagram into a relational schema.

ER Model Basics

[Figure: ER diagrams; recoverable labels: Employees, name, ssn, lot, since, dname, did, budget]

- Entity: Real-world object, distinguishable from other objects.
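The Students and Enrolled relations in the snippet above illustrate "Keys & Foreign Keys to link Relations". As a minimal sketch of that idea, the following uses Python's standard `sqlite3` module with the sample rows from the slides. The column types, the composite primary key on Enrolled, and the helper names `courses_for` and `rejects_unknown_student` are assumptions made for illustration; the slides do not specify them.

```python
import sqlite3

# In-memory database. Table and column names come from the slide text;
# the types and constraints below are assumptions for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves FK checks off by default
conn.executescript("""
CREATE TABLE Students (
    sid   INTEGER PRIMARY KEY,
    name  TEXT,
    login TEXT,
    age   INTEGER,
    gpa   REAL
);
CREATE TABLE Enrolled (
    sid   INTEGER REFERENCES Students(sid),  -- foreign key linking the relations
    cid   TEXT,
    grade TEXT,
    PRIMARY KEY (sid, cid)                   -- assumed: one grade per student per course
);
""")

# Sample rows as they appear in the slides.
students = [
    (53666, "Jones", "jones@cs", 18, 3.4),
    (53688, "Smith", "smith@eecs", 18, 3.2),
    (53650, "Smith", "smith@math", 19, 3.8),
]
enrolled = [
    (53666, "Carnatic101", "C"),
    (53666, "Reggae203", "B"),
    (53650, "Topology112", "A"),
    (53666, "History105", "B"),
]
conn.executemany("INSERT INTO Students VALUES (?, ?, ?, ?, ?)", students)
conn.executemany("INSERT INTO Enrolled VALUES (?, ?, ?)", enrolled)


def courses_for(login):
    """Return (cid, grade) pairs for a student, joining on the shared sid key."""
    return conn.execute(
        "SELECT e.cid, e.grade FROM Enrolled e "
        "JOIN Students s ON s.sid = e.sid "
        "WHERE s.login = ? ORDER BY e.cid",
        (login,),
    ).fetchall()


def rejects_unknown_student():
    """Try to enroll a sid that is not in Students; the foreign key should block it."""
    try:
        conn.execute("INSERT INTO Enrolled VALUES (?, ?, ?)", (99999, "History105", "A"))
    except sqlite3.IntegrityError:
        return True
    return False


print(courses_for("jones@cs"))
print(rejects_unknown_student())
```

The foreign key is what turns "business rules that hold" into something the database enforces: an Enrolled row cannot name a student who does not exist.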

Development of Innovative High-Fashion Collection Via Conceptual Design Process Model

Journal of Textile Science & Fashion Technology. ISSN: 2641-192X. DOI: 10.33552/JTSFT.2018.01.000507. Research Article. Copyright © All rights are reserved by Joe AU.

Development of Innovative High-Fashion Collection Via Conceptual Design Process Model. Yu AU and Joe AU*, The Hong Kong Polytechnic University, Institute of Textile and Clothing, Hong Kong. *Corresponding author: Joe AU, The Hong Kong Polytechnic University, Institute of Textile and Clothing, Hong Kong. Received Date: August 23, 2018. Published Date: August 30, 2018.

Abstract: This study aims to demonstrate the creation of an innovative high-fashion collection based on a conceptual fashion design process model. The design process model makes use of understanding the context of design problems, design processes and users in the conceptual design domain. The model is characterized by four phases of design processes, namely investigation, interaction, development and evaluation. Each phase is closely related, connected and interacted with each other. Its focus is on the continuous cyclical design process which can generate an incremental output. By adopting the conceptual design process model, the development of a high-fashion collection is presented in a step-by-step approach. It demonstrates a holistic approach and the orders of importance within the design process, and how it can be rationalized despite the complexities of conceptual fashion design. This experimental activity is meant to provide fashion practitioners with a valuable reference for designing a successful fashion collection in a conceptual context.

Keywords: Conceptual; High-fashion; Design process model

Introduction: Increasing attention has been given to the revolutionary […] fashion practitioners can make reference to the conceptual design processes.

Design Guidance for Construction Work Zones on High-Speed Highways

Design Guidance for Construction Work Zones on High-Speed Highways. Kevin M. Mahoney, Penn State University.

Overview: Scope of study; Method; Results.

Panel, NCHRP 3-69: Michael Christensen, MnDOT (Retired), Chair; James Kladianos, WY DOT; Xiaoduan Sun, U of LA; Russel Lenz, TX DOT; J. Richard Young, PBSJ; Herbert (Bert) Roy, NYS DOT; Kenneth Opiela, FHWA; Robert Schlicht, FHWA; Frank Lisle, TRB; Charles Niessner, NCHRP; John Smith, MS DOT.

Reviewers: James Brewer, KS DOT; Norman Schips, NYS DOT; Debbie Guest, LA DOTD; David Smith, NH DOT; Mohammad Kahn, OH DOT; Barbara Solberg, MD SHA; Glenn Rowe, PennDOT; Marty Weed, WS DOT; Marcella Saenz, TX DOT; Scott Zeller, WS DOT.

Investigators: Kevin M. Mahoney, Penn State; Richard (R.J.) Porter, Penn State; Gerald L. Ullman, Texas Transportation Institute; Bohdan T. Kulakowski, Penn State.

Overview

- Construction work zone: … area occupied for three or more days …
- High-speed highways: … 85th percentile free-flow speed of 50 mph or greater
- Coverage: … information or guidance not available in another nationally referenced publication

Final Deliverable, NCHRP 3-69

- Research report (summary of methods)
- Hard copy appendix: Design Guidance
- Dual units: Metric [US Customary]
- Green Book format and conventions
- CD: Work Zone Speed Prediction Model and User’s Manual

Design Guidance: State DOT Priorities

Design Guidance

- Guideline … not a standard
- Does not supersede “processes and criteria that have been implemented in an operational environment and carefully evaluated”
- Document alone cannot guide user to appropriate decision, must be applied by knowledgeable and experienced personnel
- “minimum” values identified selectively

Design Guidance: Contents 1.

Architectural Design Students’ Explorations Through Conceptual Diagrams

The Design Journal, Volume 16, Issue 1, pp. 103–124. © Bloomsbury Publishing PLC 2013. DOI: 10.2752/175630613X13328375149002

Architectural Design Students’ Explorations through Conceptual Diagrams. Fehmi Dogan, Assistant Professor of Architecture, Izmir Institute of Technology, Izmir, Turkey.

ABSTRACT: Views of creativity highlight the importance of incubation or the significance of sketching as a means of seeing emergent properties. Both views put design students at a disadvantage. This study investigates the strengths and weaknesses of an alternative approach to design education, in which students were asked to develop a design idea through conceptual diagrams. This study investigates how conceptual diagrams might help architectural students to see the relationships between concepts and space and coordinate their dual development through conceptual diagrams. The study presents the development of the ideas of 13 second-year architectural students. Students’ logbooks, together with their midterm and final review presentations, were studied to determine whether students drew any conceptual diagrams, whether they were instrumental in spatial organization, and how they introduced changes during the design process. The findings showed that this particular design education approach helped students start the design process and stay focused throughout the design process.

KEYWORDS: design education, conceptual design, conceptual diagrams

Introduction: Architectural design situations often impose both conceptually and spatially challenging tasks and require a coordinated exploration of the two.