The Charrette Test Method

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Critical Making at the Edges

Visible Language 49.3 the journal of visual communication research special issue Jessica Barness Amy Papaelias guest editors December 2015 critical making DESIGN and the DIGITAL HUMANITIES ADVISORY BOARD GUEST EDITORS' INTRODUCTION Naomi Baron — The American University, Washington, D.C. 4–11 Critical Making at the Edges Michael Bierut — Pentagram, New York, NY Jessica Barness, Amy Papaelias Matthew Carter — Carter & Cone Type, Cambridge, MA Keith Crutcher — Cincinnati, OH THEORY AND SPECULATIONS Mary Dyson — University of Reading, UK 12–33 Meta!Meta!Meta! A Speculative Design Brief for the Digital Humanities Jorge Frascara — University of Alberta, Canada / Universidad Anne Burdick de las Americas Puebla Ken Friedman — Swinburne University of Technology, Melbourne, Australia 34–61 Clues. Anomalies. Understanding. Detecting underlying assumptions and Michael Golec — School of the Chicago Art Institute, Chicago, IL Judith Gregory — University of California-Irvine, Irvine, CA expected practices in the Digital Humanities through the AIME project Kevin Larson — Microsoft Advanced Reading Technologies Donato Ricci, Robin de Mourat, Christophe Leclercq, Bruno Latour Aaron Marcus — Aaron Marcus & Associates, Berkeley, CA Per Mollerup — Swinburne University of Technology, Melbourne, Australia 62–77 Writing Images and the Cinematic Humanities Tom Ockerse — Rhode Island School of Design, Providence, RI Holly Willis Sharon Poggenpohl — Estes Park, CO Michael Renner — The Basel School of Design — Visual Communication 78–99 Beyond the Map: Unpacking -

Game Level Layout from Design Specification

EUROGRAPHICS 2014 / B. Lévy and J. Kautz Volume 33 (2014), Number 2 (Guest Editors) Game Level Layout from Design Specification Chongyang Ma∗z Nicholas Vining∗ Sylvain Lefebvrey Alla Sheffer∗ ∗ University of British Columbia y ALICE/INRIA z University of Southern California Abstract The design of video game environments, or levels, aims to control gameplay by steering the player through a sequence of designer-controlled steps, while simultaneously providing a visually engaging experience. Traditionally these levels are painstakingly designed by hand, often from pre-existing building blocks, or space templates. In this paper, we propose an algorithmic approach for automatically laying out game levels from user-specified blocks. Our method allows designers to retain control of the gameplay flow via user-specified level connectivity graphs, while relieving them from the tedious task of manually assembling the building blocks into a valid, plausible layout. Our method produces sequences of diverse layouts for the same input connectivity, allowing for repeated replay of a given level within a visually different, new environment. We support complex graph connectivities and various building block shapes, and are able to compute complex layouts in seconds. The two key components of our algorithm are the use of configuration spaces defining feasible relative positions of building blocks within a layout and a graph-decomposition based layout strategy that leverages graph connectivity to speed up convergence and avoid local minima. Together these two tools quickly steer the solution toward feasible layouts. We demonstrate our method on a variety of real-life inputs, and generate appealing layouts conforming to user specifications. Categories and Subject Descriptors (according to ACM CCS): I.3.5 [Computer Graphics]: Computational Geometry and Object Modeling—Curve, surface, solid, and object representations 1. -

Linking Design Management Skills and Design Function Organization: an Empirical Study of Spanish and Italian Ceramic Tile Producers

CASTELLÓN (SPAIN) LINKING DESIGN MANAGEMENT SKILLS AND DESIGN FUNCTION ORGANIZATION: AN EMPIRICAL STUDY OF SPANISH AND ITALIAN CERAMIC TILE PRODUCERS Ricardo Chiva (1), Joaquín Alegre (2), David Gobert (3), Rafael Lapiedra (1) (1) Universitat Jaume I, (2) Universitat de València, (3) ITC, Universitat Jaume I, ABSTRACT Design management is an increasingly important concept, research into which is very scarce. This paper deals with the fit between design management skills and design function organization, ranging from solely in-house to solely outsourced and including a mixture of the two. We carried out a survey in the Spanish and Italian ceramic tile industry, to which 177 product development managers responded. Our results revealed that companies have different degrees of design management skills depending on the approach to design function organization. Solely in-house design approach companies are the most skilled firms and solely outsourced ones are the least skilled. Despite the fact that the design function has apparently evolved towards outsourcing, this research supports the idea that, under certain conditions, the in-house design department is the best option in order to attain higher degrees of design management skills. Implications of the findings for both academics and practitioners are examined. P.BA - 79 CASTELLÓN (SPAIN) 1. INTRODUCTION In today’s competitive environment, design is becoming increasingly important. Good design, though, does not emerge by accident but as the result of a managed process (Bruce and Bessant, 2002, p. 38). Apart from the development process leading up to the creation of an artifact or product, the concept of design has traditionally involved a series of organizational activities, practices or skills that are required for this development to be achieved (Gorb and Dumas, 1987). -

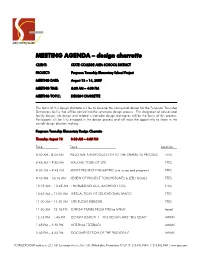

Design Charrette

MEETING AGENDA – design charrette CLIENT: STATE COLLEGE AREA SCHOOL DISTRICT PROJECT: Ferguson Township Elementary School Project MEETING DATE: August 13 – 14, 2009 MEETING TIME: 8:30 AM – 4:00 PM MEETING TOPIC: DESIGN CHARRETTE The focus of this design charrette will be to develop the conceptual design for the Ferguson Township Elementary facility that will be carried into the schematic design process. The integration of educational facility design, site design and related sustainable design techniques will be the focus of this process. Participants will be fully engaged in the design process and will have the opportunity to share in the overall design decision making. Ferguson Township Elementary Design Charrette Thursday August 13 8:30 AM – 4:00 PM Time Topic Location 8:30 AM - 8:45 AM WELCOME & INTRODUCTION TO THE CHARRETTE PROCESS FTES 8:45 AM – 9:30 AM WALKING TOUR OF SITE FTES 9:30 AM – 9:45 AM REVISIT PROJECT PARAMETERS (site issues and program) FTES 9:45 AM – 10:15 AM REVIEW OF PROJECT TOUCHSTONES & LEED GOALS FTES 10:15 AM - 10:45 AM THE BUILDING AS A TEACHING TOOL FTES 10:45 AM – 11:00 AM VIRTUAL TOUR OF EDUCATIONAL SPACES FTES 11:00 AM – 11:30 AM SITE FLOWS EXERCISE FTES 11:30 AM – 12:15 PM LUNCH/ TRAVEL FROM FTES to MNMS travel 12:15 PM – 1:45 PM DESIGN SESSION 1 – SITE DESIGN AND “BIG IDEAS” MNMS 1:45 PM – 3:30 PM INTERNAL FEEDBACK MNMS 3:30 PM – 4:00 PM DOCUMENTATION OF THE “BIG IDEAS” MNMS SCHRADERGROUP architecture LLC | 161 Leverington Avenue, Suite 105 | Philadelphia, Pennsylvania 19127 | T: 215.482.7440 | F: 215.482.7441 | www.sgarc.com Friday August 14 8:30 AM – 4:00 PM Time Topic Location 8:30 AM – 8:45 AM REFLECTION ON THE S“BIG IDEAS” MNMS 8:45 AM – 10:15AM DESIGN SESSION 2 MNMS 10:15 AM – 12:00 PM INTERNAL FEEDBACK MNMS 12:00 PM – 12:45 PM LUNCH brown bag 12:45 PM – 2:15 PM DESIGN SESSION 3 MNMS 2:15 PM – 3:30 PM INTERNAL FEEDBACK MNMS 3:30 PM – 4:00 PM NEXT STEPS MNMS The information developed during this design charrette will become the basis for the schematic design process to be carried through the process. -

Privacy When Form Doesn't Follow Function

Privacy When Form Doesn’t Follow Function Roger Allan Ford University of New Hampshire [email protected] Privacy When Form Doesn’t Follow Function—discussion draft—3.6.19 Privacy When Form Doesn’t Follow Function Scholars and policy makers have long recognized the key role that design plays in protecting privacy, but efforts to explain why design is important and how it affects privacy have been muddled and inconsistent. Tis article argues that this confusion arises because “design” has many different meanings, with different privacy implications, in a way that hasn’t been fully appreciated by scholars. Design exists along at least three dimensions: process versus result, plan versus creation, and form versus function. While the literature on privacy and design has recognized and grappled (sometimes implicitly) with the frst two dimensions, the third has been unappreciated. Yet this is where the most critical privacy problems arise. Design can refer both to how something looks and is experienced by a user—its form—or how it works and what it does under the surface—its function. In the physical world, though, these two conceptions of design are connected, since an object’s form is inherently limited by its function. Tat’s why a padlock is hard and chunky and made of metal: without that form, it could not accomplish its function of keeping things secure. So people have come, over the centuries, to associate form and function and to infer function from form. Software, however, decouples these two conceptions of design, since a computer can show one thing to a user while doing something else entirely. -

Choosing a Mixed Methods Design

04-Creswell (Designing)-45025.qxd 5/16/2006 8:35 PM Page 58 CHAPTER 4 CHOOSING A MIXED METHODS DESIGN esearch designs are procedures for collecting, analyzing, interpreting, and reporting data in research studies. They represent different mod- R els for doing research, and these models have distinct names and procedures associated with them. Rigorous research designs are important because they guide the methods decisions that researchers must make dur- ing their studies and set the logic by which they make interpretations at the end of studies. Once a researcher has selected a mixed methods approach for a study, the next step is to decide on the specific design that best addresses the research problem. What designs are available, and how do researchers decide which one is appropriate for their studies? Mixed methods researchers need to be acquainted with the major types of mixed methods designs and the common variants among these designs. Important considerations when choosing designs are knowing the intent, the procedures, and the strengths and challenges associated with each design. Researchers also need to be familiar with the timing, weighting, and mixing decisions that are made in each of the different mixed methods designs. This chapter will address • The classifications of designs in the literature • The four major types of mixed methods designs, including their intent, key procedures, common variants, and inherent strengths and challenges 58 04-Creswell (Designing)-45025.qxd 5/16/2006 8:35 PM Page 59 Choosing a Mixed Methods Design–●–59 • Factors such as timing, weighting, and mixing, which influence the choice of an appropriate design CLASSIFICATIONS OF MIXED METHODS DESIGNS Researchers benefit from being familiar with the numerous classifications of mixed methods designs found in the literature. -

Introduction: Design Epistemology

Design Research Society DRS Digital Library DRS Biennial Conference Series DRS2016 - Future Focused Thinking Jun 17th, 12:00 AM Introduction: Design Epistemology Derek Jones The Open University Philip Plowright Lawrence Technological University Leonard Bachman University of Houston Tiiu Poldma Université de Montréal Follow this and additional works at: https://dl.designresearchsociety.org/drs-conference-papers Citation Jones, D., Plowright, P., Bachman, L., and Poldma, T. (2016) Introduction: Design Epistemology, in Lloyd, P. and Bohemia, E. (eds.), Future Focussed Thinking - DRS International Conference 20227, 27 - 30 June, Brighton, United Kingdom. https://doi.org/10.21606/drs.2016.619 This Miscellaneous is brought to you for free and open access by the Conference Proceedings at DRS Digital Library. It has been accepted for inclusion in DRS Biennial Conference Series by an authorized administrator of DRS Digital Library. For more information, please contact [email protected]. Introduction: Design Epistemology Derek Jonesa*, Philip Plowrightb, Leonard Bachmanc and Tiiu Poldmad a The Open University b Lawrence Technological University c University of Houston d Université de Montréal * [email protected] DOI: 10.21606/drs.2016.619 “But the world of design has been badly served by its intellectual leaders, who have failed to develop their subject in its own terms.” (Cross, 1982) This quote from Nigel Cross is an important starting point for this theme: great progress has been made since Archer’s call to provide an intellectual foundation for design as a discipline in itself (Archer, 1979), but there are fundamental theoretical and epistemic issues that have remained largely unchallenged since they were first proposed (Cross, 1999, 2007). -

What We Heard from You

What We Heard From You “The Charette process invited my neighbors and me to share our opinions and suggestions in designing a positive addition to our community . The Charette process has developed a plan that no one group of people could have achieved alone – it has drawn the best from those who participated.” STEVE POTTER Charette participant he design process that created that showcased the proposed look, the Pleasant Hill BART Station function and character of the BART TCommunity Plan was based on station. the principle that “the best plans are The high level of citizen turnout made by many hands.” More than 500 was tremendously valuable to the people participated in the Charrette process. Many dropped in during the and offered up a wealth of ideas, sug- more than 80 hours of “open door gestions and perspectives about what studio time.” The Pleasant Hill BART they wanted for their community. Station owes a great deal to these While these citizen planners have committed citizens who spent their blazed the trail for the new BART time, energy and talents helping to transit village, there still is much work Charrette participants were encouraged create an innovative, thoughtful to be done, with plenty more opportu- to generate their own ideas, helping Community Plan for the new transit nities for public input from neighbors the designers better understand the village. and others during the land use review community’s needs and desires. and approval process (see Next Steps that need to be protected. In addition, below). the team conducted market and trans- A Charrette is an inclusive public planning process undertaken by How the Charrette process portation background research that was used to help test various scenarios a inter-disciplinary design team, worked created during the charrette. -

Industrial Designers Society of America (IDSA) Fact Sheet The

Industrial Designers Society of America (IDSA) Fact Sheet . The Industrial Designers Society of America began in 1965 out of the merger of several organizations to include American Designers Institute (ADI), Industrial Designers Institute (IDI), Industrial Designers Education Association (IDEA), Society of Industrial Designers (SID) and American Society of Industrial Designers (ASID). IDSA’s core purpose is to advance the profession of industrial design through education, information, community and advocacy. IDSA creates value by . Publishing Innovation, a quarterly professional journal of industrial design practice and education in America . Developing and organizing a joint national conference and education symposium each year, which brings together industrial designers, educators, business executives and students from all over the world . Hosting five district conferences annually where design practitioners, educators and students gather to consider the state of the profession . Creating and conducting the annual International Design Excellence Awards® (IDEA) and distributing information on the winners to the business, general, international and US design media . Hosting a website to communicate with the industrial design community, to keep members informed and to provide a place for unique content and dialogue to share . Distributing designBytes email that highlights the latest news and trends in the design world . Providing statistical research studies on professional practice, and the structure and financing of consulting and corporate design organizations . Advocating for the industrial design community to federal agencies and state governments . Serving as the primary information resource on design for national newspapers, magazines and television networks . Acting as a clearinghouse for design information requested by the general public . To serve the interests and activities of its members, IDSA formed 16 special interest sections . -

Academic Worksheet 1St YEAR

1st Fall WU TRANSFER YEAR COMM 120 Public Speaking 3 2019-2020 FOUN 101 Beginning Drawing 3 GAME 101 Game Design Fundamentals 3 Academic Worksheet GAME 106 Game Code Fundamentals 3 GAME ART & DESIGN WRIT 111 Academic Writing 1 3 Design Emphasis ANIM 112 Portfolio Review Workshop 1 Spring GENERAL Core Competencies GDES 107 Digital Practice 3 EDUCATION GAME 105 3D Game Art Fundamentals 3 Breadth GAME 112 Game Design Documentation 3 Principles GAME 114 Introduction to Game Engines 3 LSCI 105 Information Theory and Practice 1 WRIT 112 Academic Writing 2 3 Name WU TRANSFER nd YEAR Fall ID# Matriculated 2 FOUN 102 Design and Composition 3 ____________________________________ GAME 211 Game Level Design 3 Minimum Unit Requirement 125 GAME 221 Game Prototyping 3 GAME 224 History of Games: 20th Century 3 Major 67 INDS 1____ Interdisciplinary Core Course 3 General Education 49 __________ Social Science Course 3 Unrestricted Electives 9 ____________________________________ Spring Preparatory Requirements __________ Ethics Course 3 WRIT 100 Bridge to Academic Writing 3 GAME 222 Game Player Analysis 3 MATH 100 Pre-Statistics 3 GAME 240 Networked Game Development 3 GAME 250 Portfolio Review 0 FILM 200 Screenwriting 3 __________ Art/Film/Design History Course 3 Fall WU TRANSFER 3rd YEAR FILM 140 Sound 3 GAME 321 User Interface Design 3 GAME 323 Story Development for Interactive 3 ENVT 220 Environmental Studies 3 MATH 2__ Mathematics Course 3 Spring __________ Natural Science Course w lab 3 GAME 304 Sound Synthesis and Design 3 GAME 332 Experimental Technology for Games 3 INDS 3___ Transdisciplinary Course 3 __________ Social Science Course 3 Work Experience 0 Fall WU TRANSFER 4th YEAR GAME 431 Degree Project R & D 3 __________ Art/Film/Design History 3 __________ Humanities Course 3 __________ Unrestricted Elective 3 __________ Unrestricted Elective 3 Spring GAME 432 Degree Project: Production 3 GAME 434 Professional Practices 3 ____3____ General Education Elective 3 __________ Art/Film/Design History 3 __________ Unrestricted Elective 3 . -

Meaningful Urban Design: Teleological/Catalytic/Relevant

Journal ofUrban Design,Vol. 7, No. 1, 35– 58, 2002 Meaningful Urban Design: Teleological/Catalytic/Relevant ASEEM INAM ABSTRACT Thepaper begins with a critique ofcontemporary urban design:the eldof urban designis vague because it isan ambiguousamalgam of several disciplines, includingarchitecture, landscapearchitecture, urban planningand civil engineering; it issuper cial because itisobsessedwith impressions and aesthetics ofphysical form; and it ispractised as an extensionof architecture, whichoften impliesan exaggerated emphasison theend product. The paper then proposesa meaningful(i.e. truly consequential to improvedquality of life) approach to urban design,which consists of: beingteleological (i.e. driven by purposes rather than de ned by conventional disci- plines);being catalytic (i.e. generating or contributing to long-term socio-economic developmentprocesses); andbeing relevant (i.e. grounded in rst causes andpertinent humanvalues). The argument isillustratedwith a number ofcase studiesof exemplary urban designers,such asMichael Pyatok and Henri Ciriani,and urban designprojects, such asHorton Plazaand Aranya Nagar, from around the world. The paper concludes withan outlineof future directionsin urban design,including criteria for successful urban designprojects (e.g. striking aesthetics, convenient function andlong-term impact) anda proposedpedagogical approach (e.g. interdisciplinary, in-depth and problem-driven). Provocations In the earlypart of 1998,two provocative urban design eventsoccurred at the Universityof Michigan in Ann Arbor.The rstwas an exhibition organizedas partof aninternationalsymposium on ‘ City,Space 1 Globalization’. The second wasa lecture by the renowned Dutch architectand urbanist, Rem Koolhaas. By themselves,the events generated much interestand discussion, yet were innocu- ous,compared to, say, Prince Charles’s controversialcomments on contempor- arycities in the UKorthe gathering momentumof the New Urbanism movementin the USA. -

Fashion Designers' Decision-Making Process

Iowa State University Capstones, Theses and Graduate Theses and Dissertations Dissertations 2013 Fashion designers' decision-making process: The influence of cultural values and personal experience in the creative design process Ja-Young Hwang Iowa State University Follow this and additional works at: https://lib.dr.iastate.edu/etd Part of the Art and Design Commons Recommended Citation Hwang, Ja-Young, "Fashion designers' decision-making process: The influence of cultural values and personal experience in the creative design process" (2013). Graduate Theses and Dissertations. 13638. https://lib.dr.iastate.edu/etd/13638 This Dissertation is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Graduate Theses and Dissertations by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. Fashion designers’ decision-making process: The influence of cultural values and personal experience in the creative design process by Ja -Young Hwang A dissertation submitted to the graduate faculty in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY Major: Apparel, Merchandising, and Design Program of Study Committee: Mary Lynn Damhorst, Co-Major Professor Eulanda Sanders, Co-Major Professor Sara B. Marcketti Cindy Gould Barbara Caldwell Iowa State University Ames, Iowa 2013 Copyright © Ja Young Hwang, 2013. All rights