Shared Memory Communication

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Interconnect Solutions Short Form Catalog

How to Search this Catalog

This digital catalog provides you with three quick ways to find the products and information you are looking for. Just point and click on the bookmarks to the left, the linked images on the next page or the labeled sections of the table of contents. You can also use the “search” function built into Adobe Acrobat to jump directly to any text reference in this document.

Acrobat “Search” function instructions:

1. Press CONTROL + F
2. When the dialog box appears, type in the word or words you are looking for and press ENTER.
3. Depending on your version of Acrobat, it will either take you directly to the first instance found, or display a list of pages where the text can be found. In the latter case, click on the link to the pages provided.

Complete Solutions for the Electronics Industry

3M Electronics offers a comprehensive range of Interconnect Solutions for the electronics industry with a product portfolio that includes connectors, cables, cable assemblies and assembly tooling for a wide variety of applications. 3M is dedicated to innovation, continually developing new products that become an important part of everyday life across many diverse markets. A number of 3M solution categories are based on custom-designed products for specialized applications. 3M Electronics can help you design, modify and customize your product as well as help you to seamlessly integrate our products into your manufacturing process on a global basis.

RoHS Compliant Statement

“RoHS compliant” means that the product or part does not contain any of the following substances in excess of the following maximum concentration values in any homogeneous material, unless the substance is in an application that is exempt under RoHS: (a) 0.1% (by weight) for lead, mercury, hexavalent chromium, polybrominated biphenyls or polybrominated diphenyl ethers; or (b) 0.01% (by weight) for cadmium.

Publication Title 1-1962

| publication_title | print_identifier / online_identifier | publisher_name | date_monograph_published_print |
| --- | --- | --- | --- |
| 1-1962 - AIEE General Principles Upon Which Temperature Limits Are Based in the rating of Electric Equipment | 978-1-5044-0149-4 | IEEE | 1962 |
| 1-1969 - IEEE General Principles for Temperature Limits in the Rating of Electric Equipment | 978-1-5044-0150-0 | IEEE | 1968 |
| 1-1986 - IEEE Standard General Principles for Temperature Limits in the Rating of Electric Equipment and for the Evaluation of Electrical Insulation | 978-0-7381-2985-3 | IEEE | 1986 |
| 1-2000 - IEEE Recommended Practice - General Principles for Temperature Limits in the Rating of Electrical Equipment and for the Evaluation of Electrical Insulation | 978-0-7381-2717-0 | IEEE | 2001 |
| 100-2000 - The Authoritative Dictionary of IEEE Standards Terms, Seventh Edition | 978-0-7381-2601-2 | IEEE | 2000 |
| 1000-1987 - An American National Standard IEEE Standard for Mechanical Core Specifications for Microcomputers | 0-7381-4593-9 | IEEE | 1988 |
| 1000-1987 - IEEE Standard for an 8-Bit Backplane Interface: STEbus | 978-0-7381-2756-9 | IEEE | 1988 |
| 1001-1988 - IEEE Guide for Interfacing Dispersed Storage and Generation Facilities With Electric Utility Systems | 0-7381-4134-8 | IEEE | 1989 |
| 1002-1987 - IEEE Standard Taxonomy for Software Engineering Standards | 0-7381-0399-3 | IEEE | 1987 |
| 1003.0-1995 - Guide to the POSIX(R) Open System Environment (OSE) | 978-0-7381-3138-2 | IEEE | 1994 |
| 1003.1, 2004 Edition - IEEE Standard for Information Technology - Portable Operating System Interface (POSIX(R)) - Base Definitions | 978-0-7381-4040-7 | IEEE | 2004 |
| 1003.1, 2013 | | | |

Opening Plenary March 2021

Glenn Parsons – IEEE 802.1 WG Chair
[email protected]

802.1 plenary agenda

Monday, March 8th opening Tuesday, March 16th closing • Copyright Policy • Copyright Policy • Call for Patents • Call for Patents • Participant behavior • Participant behavior • Administrative • Membership status • Membership status • Future Sessions • Future Sessions • Sanity check – current projects • 802 EC report • TG reports • Sanity check – current projects • Outgoing Liaisons • Incoming Liaisons • Motions for EC • TG agendas • Motions for 802.1 • Any other business • Any other business

INSTRUCTIONS FOR CHAIRS OF STANDARDS DEVELOPMENT ACTIVITIES

At the beginning of each standards development meeting the chair or a designee is to:

• Show the following slides (or provide them beforehand)
• Advise the standards development group participants that:
  • IEEE SA’s copyright policy is described in Clause 7 of the IEEE SA Standards Board Bylaws and Clause 6.1 of the IEEE SA Standards Board Operations Manual;
  • Any material submitted during standards development, whether verbal, recorded, or in written form, is a Contribution and shall comply with the IEEE SA Copyright Policy;
• Instruct the Secretary to record in the minutes of the relevant meeting:
  • That the foregoing information was provided and that the copyright slides were shown (or provided beforehand).
• Ask participants to register attendance in IMAT: https://imat.ieee.org

IEEE SA COPYRIGHT POLICY

By participating in this activity, you agree to comply with the IEEE Code of Ethics, all applicable laws, and all IEEE policies and procedures including, but not limited to, the IEEE SA Copyright Policy.

• Previously Published material (copyright assertion indicated) shall not be presented/submitted to the Working Group nor incorporated into a Working Group draft unless permission is granted.

Architectural Support for Real-Time Computing Using Generalized Rate Monotonic Theory

計測と制御 (Keisoku to Seigyo), Vol. 31, No. 7 (July 1992), p. 744. Special issue: Real-Time Distributed Systems. ≪Perspective≫

Lui SHA* and Shirish S. SATHAYE**

Abstract

The rate monotonic theory and its generalizations have been adopted by national high technology projects such as the Space Station and has recently been supported by major open standards such as the IEEE Futurebus+ and POSIX.4. In this paper, we focus on the architectural support necessary for scheduling activities using the generalized rate monotonic theory. We briefly review the theory and provide an application example. Finally we describe the architectural requirements for the use of the theory.

Key Words: Real-Time Scheduling, Distributed Real-Time System, Rate Monotonic scheduling

1. Introduction

Real-time computing systems are critical to an industrialized nation's technological infrastructure. Modern telecommunication systems, factories, defense systems, aircrafts and airports, space stations and high energy physics experiments cannot operate without them. In real-time applications, the correctness of computation depends upon not only its results but also the time at which outputs are generated.

● Stability under transient overload. When the system is overloaded by events and it is impossible to meet all the deadlines, we must still guarantee the deadlines of selected critical tasks.

Generalized rate monotonic scheduling (GRMS) theory allows system developers to meet the above requirements by managing system concurrency and timing constraints at the level of tasking and message passing. In essence, this theory

Bi-Directional Optical Backplane Bus for General Purpose Multi-Processor Board-To-Board Optoelectronic Interconnects

JOURNAL OF LIGHTWAVE TECHNOLOGY, VOL. 13, NO. 6, JUNE 1995, p. 1031

Srikanth Natarajan, Chunhe Zhao, and Ray T. Chen

Abstract- We report for the first time a bidirectional optical backplane bus for a high performance system containing nine multi-chip module (MCM) boards, operating at 632.8 and 1300 nm. The backplane bus reported here employs arrays of multiplexed polymer-based waveguide holograms in conjunction with a waveguiding plate, within which 16 substrate guided waves for 72 (8 x 9) cascaded fanouts, are generated. Data transfer of 1.2 Gb/s at 1.3-µm wavelength is demonstrated for a single bus line with 72 cascaded fanouts. Packaging-related issues such as transceiver size and misalignment are embarked upon to provide a reliable system with a wide bandwidth coverage. Theoretical treatment to minimize intensity fluctuations among the nine modules in both directions is further presented and an optimum design rule is provided. The backplane bus demonstrated, is for general-purpose and therefore compatible with such IEEE standardized buses as VMEbus, Futurebus and FASTBUS, and can function as a backplane bus in existing computing environments.

Fig. 1. Optical equivalent of a section of a single bidirectional electronic bus line. (Figure labels: Waveguiding Plate; Processor/Memory Board; High-speed Optoelectronic Transceiver; Waveguide Hologram For Bi-Directional Coupling.)

I. INTRODUCTION

THE LIMITATIONS of current computer backplane buses stem from their purely electronic interconnects.

[...] needed to preserve the rising and falling edges of the signals increases. This makes using bulky, expensive, terminated

Bus and Cache Memory Organizations for Multiprocessors

BUS AND CACHE MEMORY ORGANIZATIONS FOR MULTIPROCESSORS by Donald Charles Winsor A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy (Electrical Engineering) in The University of Michigan 1989 Doctoral Committee: Associate Professor Trevor N. Mudge, Chairman Professor Daniel E. Atkins Professor John P. Hayes Professor James O. Wilkes ABSTRACT BUS AND CACHE MEMORY ORGANIZATIONS FOR MULTIPROCESSORS by Donald Charles Winsor Chairman: Trevor Mudge The single shared bus multiprocessor has been the most commercially successful multiprocessor system design up to this time, largely because it permits the implementation of efficient hardware mechanisms to enforce cache consistency. Electrical loading problems and restricted bandwidth of the shared bus have been the most limiting factors in these systems. This dissertation presents designs for logical buses constructed from a hierarchy of physical buses that will allow snooping cache protocols to be used without the electrical loading problems that result from attaching all processors to a single bus. A new bus bandwidth model is developed that considers the effects of electrical loading of the bus as a function of the number of processors, allowing optimal bus configurations to be determined. Trace driven simulations show that the performance estimates obtained from this bus model agree closely with the performance that can be expected when running a realistic multiprogramming workload in which each processor runs an independent task. The model is also used with a parallel program workload to investigate its accuracy when the processors do not operate independently. This is found to produce large errors in the mean service time estimate, but still gives reasonably accurate estimates for the bus utilization. -

COVID-19 – Emergency Management Tips and Practices for Bus Transit Systems

Prepared for: Florida Department of Transportation, Office of Freight, Logistics, and Passenger Operations
Prepared by: Center for Urban Transportation Research, University of South Florida
Published: April 1, 2020
Revision: March 10, 2021

Table of Contents

List of Figures
Emergency Management Tips and Practices for Transit
Supplies
Hazard Risk Analysis
Center for Disease Control (CDC) Guidance
U.S. Department of Transportation’s Federal Transit Administration (FTA)
Federal Mask Requirement for Surface Transportation Providers
Additional Resources
Transportation Research

3M Interconnect Short Form Catalog

3M Interconnect Products Short Form Catalog
Comprehensive Solutions for Reliable Connections

Complete Solutions for the Electronics Industry

3M Interconnect Solutions offers a comprehensive range of Interconnect Solutions for the electronics industry, with a product portfolio that includes connectors, cables, cable assemblies and assembly tooling for a wide variety of applications. 3M is dedicated to innovation, continually developing new products that become an important part of everyday life across many diverse markets. A number of 3M solution categories are based on custom-designed products for specialized applications. 3M Interconnect Solutions can help you design, modify and customize your product as well as help you seamlessly integrate our products into your manufacturing process on a global basis.

Design, modify and customize your product

Contents

Backplane Connector Solutions
Boardmount Connector Solutions
Wiremount Connectors and Assembly Solutions
Device-to-Board Connector Solutions
Input/Output Connector Solutions
Cable Solutions
Cable Assembly Solutions - High Speed Assemblies

3M™ BACKPLANE CONNECTOR SOLUTIONS

PRODUCT: DIN Connectors

FEATURES:
• RoHS Compliant Version Available
• DIN 41612
• C-Form Plug and Socket
• C-Form Wiremount Plugs and Sockets
• R-Form Plug and Socket
• Q-Form Plug and

MATERIALS:
Contact Material: Copper Alloy
Insulation Material: Engineering Thermoplastic
Flammability UL 94V-0
Plating

PERFORMANCE:
Current Rating: 3.0 A @ 30°C T-rise Above Ambient
Temperature Rating: -55°C to +125°C

TIM Specification

TIM-40 MODULE SPECIFICATION
Including TMS320C44 Addendum
VERSION 1.12. 1

Table of Contents

1. THE MODULE CONCEPT
1.1 INTRODUCTION
1.2 ORIGINS OF THE MODULE
1.3 MODULE CONCEPT
1.4 MOTHERBOARD CONCEPT
1.5 SUMMARY
2. PHYSICAL SPECIFICATIONS
2.1 INTRODUCTION

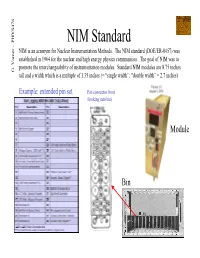

NIM Standard

NIM Standard

NIM is an acronym for Nuclear Instrumentation Methods. The NIM standard (DOE/ER-0457) was established in 1964 for the nuclear and high energy physics communities. The goal of NIM was to promote the interchangeability of instrumentation modules. Standard NIM modules are 8.75 inches tall, with a width that is a multiple of 1.35 inches (= “single width”; “double width” = 2.7 inches).

Figure labels: Example: extended pin set; bin connector front (looking into bin); module; bin.

G. Varner – PHYS476

CAMAC Standard

CAMAC is also an acronym: Computer Automated Measurement and Control. The CAMAC standard (IEEE 583) was established in 1975 and has been used in virtually every physics laboratory and many industrial applications. While other modular instrumentation standards have been widely adopted, the robust CAMAC module and enclosure construction and simple hardware-level interface mean CAMAC is still a viable choice for instrument applications today.

Separate 24-bit Read/Write Busses

Simple FNAD commanding, e.g.:
F = 0 (read)
N = 1 (crate slot)
A = card channel #
D = data (either)
Z = initialize (reset)
X = I’m responding
Q = zero suppress
L = LAM (Look-At-Me)

Figure label: LeCroy 3377 500ps multi-hit TDC

FASTBUS Standard

FASTBUS is a standard largely exclusive to High Energy Physics. Detailed in ANSI/IEEE 960 and IEC-547, it is designed for VERY high power operation (many kW per crate – from the age of ECL). Hundreds of pins; impossible to detail here, and it is likely you will never need to know this. Mechanics are frightening: bed of nails in back.

Cache Attacks on ARM

Moritz Lipp
Cache Attacks on ARM
Master thesis
Graz, University Of Technology

NOTICE

If you want to cite this work based on last-level cache attacks on ARM, please cite the following research paper instead:

• Lipp, Moritz; Gruss, Daniel; Spreitzer, Raphael; Maurice, Clémentine; Mangard, Stefan: ARMageddon: Cache Attacks on Mobile Devices. In: 25th USENIX Security Symposium (USENIX Security 16). Austin, TX: USENIX Association, August 2016. ISBN 978-1-931971-32-4, 549–564

If you want to cite this work in respect to rowhammer on ARM-based devices, please cite the following publication instead:

• Veen, Victor van d.; Fratantonio, Yanick; Lindorfer, Martina; Gruss, Daniel; Maurice, Clémentine; Vigna, Giovanni; Bos, Herbert; Razavi, Kaveh; Giuffrida, Christiano: Drammer: Deterministic Rowhammer Attacks on Commodity Mobile Platforms. In: ACM Conference on Computer and Communications Security – CCS, 2016

Contents

1 Introduction
1.1 Motivation
1.2 Key Challenges and Results
1.3 Contributions
1.4 Test devices
1.5 Outline
2 Background
2.1 CPU caches
2.2 Cache coherence
2.3 Shared memory
2.4 Cache Attacks
2.5 DRAM
3 Attack primitives
3.1 Timing Measurements
3.2 Cache Eviction
3.3 Defeating the Cache-Organization
4 Attack Case Studies
4.1 High Performance Covert-Channels
4.2 Spying on User input
4.3 Attacks on Cryptographic Algorithms
4.4 Rowhammer on ARM
5 Countermeasures
6 Conclusion
List of tables
List of figures
List of acronyms

FireWire™ Reference Tutorial (An Informational Guide)

January 22, 2010

Abstract

This document is a FireWire tutorial aimed at engineers who have no previous exposure to or understanding of FireWire. Its purpose is to familiarize the reader with FireWire and to build a sound and complete understanding of how the FireWire bus operates from the experiences of expert members of the 1394 Trade Association.

1394 Trade Association Specification

1394 Trade Association Specifications, Tutorials and Guides are developed within Working Groups of the 1394 Trade Association, a non-profit industry association devoted to the promotion of and growth of the market for IEEE 1394-compliant products. Participants in Working Groups serve voluntarily and without compensation from the Trade Association. Most participants represent member organizations of the 1394 Trade Association. The work product developed within these working groups represents a consensus of the expertise represented by the participants.

Use of a 1394 Trade Association document is wholly voluntary. The existence of a 1394 Trade Association document is not meant to imply that there are not other ways to produce, test, measure, purchase, market or provide other goods and services related to the scope of the 1394 Trade Association Specification. Furthermore, the viewpoint expressed at the time a document is accepted and issued is subject to change brought about through developments in the state of the art and comments received from users of the specification. Users are cautioned to check to determine that they have the latest revision of any 1394 Trade Association document.

Comments for revision of 1394 Trade Association Documents are welcome from any interested party, regardless of membership affiliation with the 1394 Trade Association.