Invalid Forensic Science Testimony and Wrongful Convictions

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Scanned Image

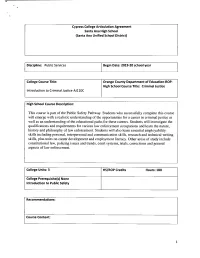

Cypress College Articulation Agreement Santa Ana High School (Santa Ana Unified School District) Discipline: Public Services Begin Date: 2019-20 school year College Course Title: Orange County Department of Education-ROP- High School Course Title: Criminal Justice Introduction to Criminal Justice-AJ110C High School Course Description: This course is part of the Public Safety Pathway. Students who successfully complete this course will emerge with a realistic understanding of the opportunities for a career in criminal justice as well as an understanding of the educational paths for these careers. Students will investigate the qualifications and requirements for various law enforcement occupations and learn the nature, history and philosophy of law enforcement. Students will also learn essential employability skills including personal, interpersonal and communication skills, research and technical writing skills, plus units on career development and employment literacy. Other areas of study include constitutional law, policing issues and trends, court systems, trials, corrections and general aspects of law enforcement. College Units: 3 HS/ROP Credits Hours: 180 College Prerequisite(s) None Introduction to Public Safety Recommendations: Course Content: INDUSTRY FOCUS. Identify possible career profiles and pathways in law enforcement. Describe current labor market projections. Use law enforcement industry terminology. Explain occupational safety issues and observe all safety rules. Develop perspective and understanding of all aspects of the law enforcement industry. Identify the educational and physical requirements needed to become a police officer. Explain the process of background checks for eligibility, and the implications of prior convictions and personal history. Review criminal justice training and educational programs. CRIMINAL JUSTICE SYSTEM -

Voices of Forensic Science

Chapter 3 The Contamination and Misuse of DNA Evidence Vanessa Toncic, Alexandra Silva "Genes are like the story, and DNA is the language that the story is written in." (Kean, 2012) To understand how potentially mishandled or contaminated DNA evidence could be manipulated or presented in court, (under false pretenses), as an evidentiary ‘gold standard,’ it is important to first explain why, and under what circumstances, DNA evidence is in fact a gold standard. DNA evidence may be disguised as a gold standard when either the techniques used, or the representation of the data has been skewed and is no longer reflective of DNA as a gold standard. This chapter will explore the use of mixed DNA profiles as well as low copy number (LCN) techniques of DNA analysis, and how these methods may not be deserving of the ‘gold standard’ seal associated with DNA evidence. We will explore the biological aspect of issues with these methods and how they may reflect a contaminated DNA sample. Notably, DNA evidence that has been appropriately collected and analyzed may still be purposely misrepresented to a judge and/or jury. DNA as an evidentiary gold standard requires proper handling from the onset of collection, throughout analysis and storage, as well as during presentation in court to ensure that the quality of evidence is upheld. DNA evidence and its associated challenges are multi-faceted areas of study. These studies include issues surrounding the collection as well as the analysis of evidence leading to subsequent contamination and root cause analysis. There also exists debate on the ethics and privatization of DNA given the popularization of online DNA databases. -

Embedded Reporters: What Are Americans Getting?

Embedded Reporters: What Are Americans Getting? For More Information Contact: Tom Rosenstiel, Director, Project for Excellence in Journalism Amy Mitchell, Associate Director Matt Carlson, Wally Dean, Dante Chinni, Atiba Pertilla, Research Nancy Anderson, Tom Avila, Staff Defense Secretary Donald Rumsfeld has suggested we are getting only “slices” of the war. Other observers have likened the media coverage to seeing the battlefield through “a soda straw.” The battle for Iraq is war as we’ve never seen it before. It is the first full-scale American military engagement in the age of the Internet, multiple cable channels and a mixed media culture that has stretched the definition of journalism. The most noted characteristic of the media coverage so far, however, is the new system of “embedding” some 600 journalists with American and British troops. What are Americans getting on television from this “embedded” reporting? How close to the action are the “embeds” getting? Who are they talking to? What are they talking about? To provide some framework for the discussion, the Project for Excellence in Journalism conducted a content analysis of the embedded reports on television during three of the first six days of the war. The Project is affiliated with Columbia University and funded by the Pew Charitable Trusts. The embedded coverage, the research found, is largely anecdotal. It’s both exciting and dull, combat focused, and mostly live and unedited. Much of it lacks context but it is usually rich in detail. It has all the virtues and vices of reporting only what you can see. -

BSCHIFFER 2009 05 28 These

UNIVERSITY OF LAUSANNE FACULTY OF LAW AND CRIMINAL JUSTICE SCHOOL OF CRIMINAL JUSTICE FORENSIC SCIENCE INSTITUTE The Relationship between Forensic Science and Judicial Error: A Study Covering Error Sources, Bias, and Remedies PhD thesis submitted to obtain the doctoral degree in forensic science Beatrice SCHIFFER Lausanne, 2009 To my family, especially my parents Summary Forensic science - both as a source of and as a remedy for error potentially leading to judicial error - has been studied empirically in this research. A comprehensive literature review, experimental tests on the influence of observational biases in fingermark comparison, and semi-structured interviews with heads of forensic science laboratories/units in Switzerland and abroad were the tools used. For the literature review, some of the areas studied are: the quality of forensic science work in general, the complex interaction between science and law, and specific propositions as to error sources not directly related to the interaction between law and science. A list of potential error sources all the way from the crime scene to the writing of the report has been established as well. For the empirical tests, the ACE-V (Analysis, Comparison, Evaluation, and Verification) process of fingermark comparison was selected as an area of special interest for the study of observational biases, due to its heavy reliance on visual observation and recent cases of misidentifications. Results of the tests performed with forensic science students tend to show that decision-making stages are the most vulnerable to stimuli inducing observational biases. For the semi-structured interviews, eleven senior forensic scientists answered questions on several subjects, for example on potential and existing error sources in their work, on the limitations of what can be done with forensic science, and on the possibilities and tools to minimise errors. -

The Rise of Nixon by Megan Kimbrell

The Rise of Nixon by Megan Kimbrell Richard Milhous Nixon is one of the most central political figures in American history. Therefore, an analysis of how he rose to national prominence, and so quickly at that, is a worthwhile discussion. For example, Nixon entered the United States House of Representatives in 1946 by defeating the popular Democratic incumbent, Jerry Voorhis. Without previous political experience, Nixon was thrown into Congress where he was promptly placed on the infamous House Committee on Un-American Activities (HUAC). There he gained national fame in the case of Alger Hiss, an accused communist spy. He followed this with a stunning victory in the 1950 senatorial race against Helen Gahagan Douglas. Soon after, Nixon was nominated as the vice presidential candidate in 1952. At the young age of forty, and just six years after his first political campaign, Nixon entered the White House as Dwight D. Eisenhower's vice president. Nixon's meteoric rise to power begs the question of just how exactly he accomplished this feat. The answer to this question is quite simple: Nixon used the issue of communist subversion to further his political career. In fact, the perceived communist threat of the post-World War II era was the chief catalyst in Nixon's rise to the forefront of American politics. His career gained momentum alongside the Red Scare of this era with his public battles against accused communist sympathizers. Following World War II, Americans became obsessed with the fears of communist subversion. The Cold War produced unstable relations with the Soviet Union and other pro-communist countries, which made for a frightening future. -

John Ahouse-Upton Sinclair Collection, 1895-2014

http://oac.cdlib.org/findaid/ark:/13030/c8cn764d No online items INVENTORY OF THE JOHN AHOUSE-UPTON SINCLAIR COLLECTION, 1895-2014, Finding aid prepared by Greg Williams California State University, Dominguez Hills Archives & Special Collections University Library, Room 5039 1000 E. Victoria Street Carson, California 90747 Phone: (310) 243-3895 URL: http://www.csudh.edu/archives/csudh/index.html ©2014 Descriptive Summary Title: John Ahouse-Upton Sinclair Collection Dates: 1895-2014 Collection Number: "Consult repository." Collector: Ahouse, John B. Extent: 12 linear feet, 400 books Repository: California State University, Dominguez Hills Archives and Special Collections Archives & Special Collection University Library, Room 5039 1000 E. Victoria Street Carson, California 90747 Phone: (310) 243-3013 URL: http://www.csudh.edu/archives/csudh/index.html Abstract: This collection consists of 400 books, 12 linear feet of archival items and resource material about Upton Sinclair collected by bibliographer John Ahouse, author of Upton Sinclair, A Descriptive Annotated Bibliography. Included are Upton Sinclair books, pamphlets, newspaper articles, publications, circular letters, manuscripts, and a few personal letters. Also included are a wide variety of subject files, scholarly or popular articles about Sinclair, videos, recordings, and manuscripts for Sinclair biographies. Included are Upton Sinclair’s A Monthly Magazine, EPIC Newspapers and the Upton Sinclair Quarterly Newsletters. Language: Collection material is primarily in English Access There are no access restrictions on this collection. Publication Rights All requests for permission to publish or quote from manuscripts must be submitted in writing to the Director of Archives and Special Collections. -

The Pink Lady and Tricky Dick: Communism's Role in the 1950 Senatorial Election

The Pink Lady and Tricky Dick: Communism’s Role in the 1950 Senatorial Election Michael Bird The shock had set in and the damage had been done. “I failed to take his attacks seriously enough.” That tricky man had struck quite low. What happened to “no name-calling, no smears, no misrepresentations in this campaign?” Is this politics? California Republican Congressman Richard Nixon needed a stage to stand on if he were to take the next step in politics during the 1950s. World War II left behind a world that was opportune for this next step. In the early days after the war, most Americans hoped for a continuation of cooperation between Americans and Soviets. However, that would change as a handful of individuals took to fighting Communism as one of their political weapons. Author Richard M. Fried suggests Communism “was the focal point of the careers of Wisconsin Senator Joseph R. McCarthy; of Richard Nixon during his tenure as Congressman, Senator” and “of several of Nixon’s colleagues on the House Committee on Un-American Activities.”1 The careers of these men, both successful and unsuccessful, had roots in anticommunism. Characteristic of previous campaigns were the accomplishments and failures of each candidate. What appeared during the 1950 senatorial campaign in California was a politics largely focused on whether a candidate could be called soft on Michael Bird, who earned his BA in history in spring 2014, is from Villa Grove, Illinois. “The Pink Lady and Tricky Dick: Communism's Role in the 1950 Senatorial Election” was written for Dr. -

Introduction Rick Perlstein

© Copyright, Princeton University Press. No part of this book may be distributed, posted, or reproduced in any form by digital or mechanical means without prior written permission of the publisher. Introduction Rick Perlstein I In the fall of 1967 Richard Nixon, reintroducing himself to the public for his second run for the presidency of the United States, published two magazine articles simultaneously. The first ran in the distinguished quarterly Foreign Affairs, the review of the Council on Foreign Relations. “Asia after Viet Nam” was sweeping, scholarly, and high-minded, couched in the chessboard abstractions of strategic studies. The intended audience, in whose language it spoke, was the nation’s elite, and liberal-leaning, opinion-makers. It argued for the diplomatic “long view” toward the nation, China, that he had spoken of only in terms of red-baiting demagoguery in the past: “we simply cannot afford to leave China forever outside the family of nations,” he wrote. This was the height of foreign policy sophistication, the kind of thing one heard in Ivy League faculty lounges and Brookings Institution seminars. For Nixon, the conclusion was the product of years of quiet travel, study, and reflection that his long stretch in the political wilderness, since losing the California governor’s race in 1962, had liberated him to carry out. It bore no relation to the kind of rip-roaring, elite-baiting things he usually said about Communists -

NCSBI Evidence Guide

NCSBI Evidence Guide Issue Date: 01/01/2010 Page 1 of 75 North Carolina State Bureau of Investigation EVIDENCE GUIDE North Carolina Department of Justice Attorney General Roy Cooper North Carolina State Bureau of Investigation Director Robin P. Pendergraft Crime Laboratory Division Assistant Director Jerry Richardson January 2010 Address questions regarding this guide to the: North Carolina State Bureau of Investigation Evidence Control Unit 121 East Tryon Road Raleigh, North Carolina 27603 phone: (919) 662-4500 (Ex. 1501) or Fax questions or comments to the: North Carolina State Bureau of Investigation Evidence Control Unit at fax: (919) 661-5849 This guide may be duplicated and distributed to any law enforcement officer whose duties include the collection, preservation, and submission of evidence to the North Carolina State Bureau of Investigation Crime Laboratory Division. Table of Contents SPECIAL NOTICES Where to Submit Evidence -

The Political History of Classical Hollywood: Moguls, Liberals and Radicals in The

The political history of Classical Hollywood: moguls, liberals and radicals in the 1930s Professor Mark Wheeler, London Metropolitan University Introduction Hollywood’s relationship with the political elite in the Depression reflected the trends which defined the USA’s affairs in the interwar years. For the moguls mixing with the powerful indicated their acceptance by America’s elites who had scorned them as vulgar hucksters due to their Jewish and show business backgrounds. They could achieve social recognition by demonstrating a commitment to conservative principles and supported the Republican Party. However, MGM’s Louis B. Mayer held deep right-wing convictions and became the vice-chairman of the Southern Californian Republican Party. He formed alliances with President Herbert Hoover and the right-wing press magnate William Randolph Hearst. Along with Hearst, Will Hays and an array of Californian business forces, ‘Louie Be’ and MGM’s Production Chief Irving Thalberg led a propaganda campaign against Upton Sinclair’s End Poverty in California (EPIC) gubernatorial election crusade in 1934. This form of ‘mogul politics’ was characterized by the instincts of its authors: hardness, shrewdness, autocracy and coercion. 1 In response to the moguls’ mercurial values, the Hollywood community pursued a significant degree of liberal and populist political activism, along with a growing radicalism among writers, directors and stars. For instance, James Cagney and Charlie Chaplin supported Sinclair’s EPIC campaign by attending meetings and collecting monies. Their actions reflected the economic, social and political conditions of the era, notably the collapse of US capitalism with the Great Depression, the New Deal, the establishment of trade unions, and the emigration of European political refugees due to Nazism. -

Wrongful Convictions and Forensic Science: the Need to Regulate Crime Labs

Case Western Reserve University School of Law Scholarly Commons Faculty Publications 2006 Wrongful Convictions and Forensic Science: The Need to Regulate Crime Labs Paul C. Giannelli Case Western University School of Law, [email protected] Follow this and additional works at: https://scholarlycommons.law.case.edu/faculty_publications Part of the Evidence Commons Repository Citation Giannelli, Paul C., "Wrongful Convictions and Forensic Science: The Need to Regulate Crime Labs" (2006). Faculty Publications. 149. https://scholarlycommons.law.case.edu/faculty_publications/149 This Article is brought to you for free and open access by Case Western Reserve University School of Law Scholarly Commons. It has been accepted for inclusion in Faculty Publications by an authorized administrator of Case Western Reserve University School of Law Scholarly Commons. WRONGFUL CONVICTIONS AND FORENSIC SCIENCE: THE NEED TO REGULATE CRIME LABS* PAUL C. GIANNELLI** DNA testing has exonerated over 200 convicts, some of whom were on death row. Studies show that a substantial number of these miscarriages of justice involved scientific fraud or junk science. This Article documents the failures of crime labs and some forensic techniques, such as microscopic hair comparison and bullet lead analysis. Some cases involved incompetence and sloppy procedures, while others entailed deceit, but the extent of the derelictions—the number of episodes and the duration of some of the abuses, covering decades in several instances—demonstrates that the problems are systemic. Paradoxically, the most scientifically sound procedure—DNA analysis—is the most extensively regulated, while many forensic techniques with questionable scientific pedigrees go completely unregulated. -

State of New Hampshire Department of Safety Division of State Police Forensic Laboratory Performance Audit September 2011

STATE OF NEW HAMPSHIRE DEPARTMENT OF SAFETY DIVISION OF STATE POLICE FORENSIC LABORATORY PERFORMANCE AUDIT SEPTEMBER 2011 To The Fiscal Committee Of The General Court: We conducted an audit of the New Hampshire Department of Safety, Division of State Police, Forensic Laboratory (Lab) to address the recommendation made to you by the joint Legislative Performance Audit and Oversight Committee. We conducted this performance audit in accordance with generally accepted government auditing standards. Those standards require we plan and perform the audit to obtain sufficient, appropriate evidence to provide a reasonable basis for our findings and conclusions based on our audit objectives. We believe the evidence obtained provides a reasonable basis for our findings and conclusions based on our audit objectives. The purpose of the audit was to determine if the Lab was operating efficiently and effectively. The audit period is State fiscal years 2009 and 2010. This report is the result of our evaluation of the information noted above and is intended solely for the information of the Department of Safety, Division of State Police, the Lab, and the Fiscal Committee of the General Court. This restriction is not intended to limit the distribution of this report, which upon acceptance by the Fiscal Committee is a matter of public record. Office Of Legislative Budget Assistant September 2011 STATE OF NEW HAMPSHIRE DIVISION OF STATE POLICE – FORENSIC LABORATORY TABLE OF CONTENTS TRANSMITTAL LETTER -