FY2017 Annual Report

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications


Wind River VxWorks Platforms 3.8

Wind River VxWorks Platforms 3.8. Table of Contents: Platforms Available in VxWorks Edition; New in VxWorks Platforms 3.8; VxWorks Platforms Features; VxWorks Real-Time Operating System; VxWorks 6.x Compatibility; State-of-the-Art Memory Protection; VxBus Framework; Core Dump File Generation and Analysis; Build System; Command-Line Project and Build System; Workbench Debugger; VxWorks Simulator; Workbench VxWorks Source Build Configuration; Kernel Configurator; Host Shell; Kernel Shell; Run-Time Analysis Tools; System Viewer. The market for secure, intelligent, connected devices is constantly expanding. Embedded devices are becoming more complex to meet market demands. Internet connectivity allows new levels of remote management but also calls for increased levels of security. More powerful processors are being considered to drive intelligence and higher functionality into devices. Because real-time and performance requirements are nonnegotiable, manufacturers are cautious about incorporating new technologies into proven systems. To -

Intel Quartus Prime Pro Edition User Guide: Programmer

Intel® Quartus® Prime Pro Edition User Guide: Programmer. Updated for Intel® Quartus® Prime Design Suite: 21.2. UG-20134 | 2021.07.21. Contents: 1. Intel® Quartus® Prime Programmer User Guide; 1.1. Generating Primary Device Programming Files; 1.2. Generating Secondary Programming Files; 1.2.1. Generating Secondary Programming Files (Programming File Generator); 1.2.2. Generating Secondary Programming Files (Convert Programming File Dialog Box); 1.3. Enabling Bitstream Security for Intel Stratix 10 Devices; 1.3.1. Enabling Bitstream Authentication (Programming File Generator); 1.3.2. Specifying Additional Physical Security Settings (Programming File Generator); 1.3.3. Enabling Bitstream Encryption (Programming File Generator); 1.4. Enabling Bitstream Encryption or Compression for Intel Arria 10 and Intel Cyclone 10 GX Devices; 1.5. Generating Programming Files for Partial Reconfiguration -

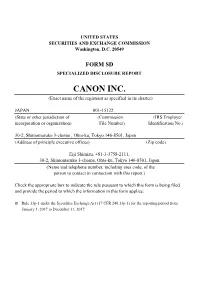

CANON INC. (Exact Name of the Registrant As Specified in Its Charter)

UNITED STATES SECURITIES AND EXCHANGE COMMISSION, Washington, D.C. 20549. FORM SD: SPECIALIZED DISCLOSURE REPORT. CANON INC. (Exact name of the registrant as specified in its charter). State or other jurisdiction of incorporation or organization: JAPAN. Commission File Number: 001-15122. IRS Employer Identification No.: (none shown). Address of principal executive offices: 30-2, Shimomaruko 3-chome, Ohta-ku, Tokyo 146-8501, Japan. Name and telephone number, including area code, of the person to contact in connection with this report: Eiji Shimizu, +81-3-3758-2111, 30-2, Shimomaruko 3-chome, Ohta-ku, Tokyo 146-8501, Japan. Check the appropriate box to indicate the rule pursuant to which this form is being filed and provide the period to which the information in this form applies: Rule 13p-1 under the Securities Exchange Act (17 CFR 240.13p-1) for the reporting period from January 1, 2017 to December 31, 2017. Section 1 - Conflict Minerals Disclosure. Established in 1937, Canon Inc. is a Japanese corporation with its headquarters in Tokyo, Japan. Canon Inc. is one of the world’s leading manufacturers of office multifunction devices (“MFDs”), plain paper copying machines, laser printers, inkjet printers, cameras, diagnostic equipment and lithography equipment. Canon Inc. earns revenues primarily from the manufacture and sale of these products domestically and internationally. Canon Inc. and its consolidated companies have been fully aware of the conflict minerals issue and have been working together with business partners and industry entities to address it. In response to Rule 13p-1, Canon Inc. conducted a Reasonable Country of Origin Inquiry and due diligence based on the “OECD Due Diligence Guidance for Responsible Supply Chains of Minerals from Conflict-Affected and High-Risk Areas” for its various products. -

A Superscalar Out-of-Order x86 Soft Processor for FPGA

A Superscalar Out-of-Order x86 Soft Processor for FPGA. Henry Wong, University of Toronto, Intel. June 5, 2019, Stanford University EE380. Hi! ● CPU architect, Intel Hillsboro ● Ph.D., University of Toronto ● Today: x86 OoO processor for FPGA (Ph.D. work) – Motivation – High-level design and results – Microarchitecture details and some circuits. FPGA: Field-Programmable Gate Array ● Is a digital circuit (logic gates and wires) ● Is field-programmable (at power-on, not in the fab) ● Pre-fab everything you’ll ever need – 20x area, 20x delay cost – Circuit building blocks are somewhat bigger than logic gates [Figure: grid of 6-LUT blocks]. FPGA Soft Processors ● FPGA systems often have software components – Often running on a soft processor ● Need more performance? – Parallel code and hardware accelerators need effort – Less effort if soft processors got faster

EDN Magazine, December 17, 2004 (.Pdf)

THE BEST 100 PRODUCTS OF 2004 encompass a range of architectures and technologies and a plethora of categories, from analog ICs to multimedia to test-and-measurement tools. All are innovative, but, of the thousands that manufacturers announce each year and the hundreds that EDN reports on, only about 100 hot products make our readers really sit up and take notice. Here are the picks from this year's crop. We present the basic info here. To get the whole scoop and find out why these products are so compelling, go to the Web version of this article on our Web site at www.edn.com. There, you'll find links to the full text of the articles that cover these products' dazzling features. ANALOG ICs: Analog Devices AD1954 audio DAC (www.analog.com); D2Audio XR125 seven-channel module (www.d2audio.com); International Rectifier IR2520D CFL ballast power controller (www.irf.com); Power Integrations LNK306P switching power converter (www.powerint.com); Texas Instruments VCA8613 eight-channel VGA (www.ti.com); Wolfson Microelectronics WM8740 audio DAC (www.wolfsonmicro.com). COMMUNICATIONS: Atheros Communications AR5005 Wi-Fi chip sets (www.atheros.com); Fulcrum Microsystems FM1010 six-port SPI-4.2 switch chip (www.fulcrummicro.com); Motia Javelin smart-antenna IC (www.motia.com); NetLogic Microsystems NSE5512GLQ network search engine (www.netlogicmicro.com); Parama Networks PNI8040 add-drop multiplexer (www.paramanet.com); PMC-Sierra MSP2015, 2020, 4000, and 5000 VoIP gateway chips (www.pmc-sierra.com). Texas Instruments Intel DISCRETE SEMICONDUCTORS -

Introduction to Intel® FPGA IP Cores

Introduction to Intel® FPGA IP Cores. Updated for Intel® Quartus® Prime Design Suite: 20.3. UG-01056 | 2020.11.09. Contents: 1. Introduction to Intel® FPGA IP Cores; 1.1. IP Catalog and Parameter Editor; 1.1.1. The Parameter Editor; 1.2. Installing and Licensing Intel FPGA IP Cores; 1.2.1. Intel FPGA IP Evaluation Mode; 1.2.2. Checking the IP License Status; 1.2.3. Intel FPGA IP Versioning; 1.2.4. Adding IP to IP Catalog; 1.3. Best Practices for Intel FPGA IP; 1.4. IP General Settings; 1.5. Generating IP Cores (Intel Quartus Prime Pro Edition); 1.5.1. IP Core Generation Output (Intel Quartus Prime Pro Edition); 1.5.2. Scripting IP Core Generation -

North American Company Profiles: 8x8

North American Company Profiles: 8x8, Inc., 2445 Mission College Boulevard, Santa Clara, California 95054. Telephone: (408) 727-1885. Fax: (408) 980-0432. Web Site: www.8x8.com. Fabless IC Supplier.

Regional Headquarters/Representative Locations: Europe: 8x8, Inc., Bucks, England, U.K. Telephone: (44) (1628) 402800. Fax: (44) (1628) 402829.

Financial History ($M), Fiscal Year Ends March 31

| | 1992 | 1993 | 1994 | 1995 | 1996 | 1997 | 1998 |
|---|---|---|---|---|---|---|---|
| Sales | 36 | 31 | 34 | 20 | 29 | 19 | 50 |
| Net Income | 5 | (1) | (0.3) | (6) | (3) | (14) | 4 |
| R&D Expenditures | 7 | 7 | 7 | 8 | 8 | 11 | 12 |
| Capital Expenditures | — | — | — | — | 1 | 1 | 1 |
| Employees | 114 | 100 | 105 | 110 | 81 | 100 | 100 |

Ownership: Publicly held. NASDAQ: EGHT.

Company Overview and Strategy: 8x8, Inc. is a worldwide leader in the development, manufacture and deployment of an advanced Visual Information Architecture (VIA) encompassing A/V compression/decompression silicon, software, subsystems, and consumer appliances for video telephony, videoconferencing, and video multimedia applications. 8x8, Inc. was founded in 1987. The “8x8” refers to the company’s core technology, which is based upon Discrete Cosine Transform (DCT) image compression and decompression. In DCT, 8-pixel by 8-pixel blocks of image data form the fundamental processing unit.

Management: Paul Voois, Chairman and Chief Executive Officer; Keith Barraclough, President and Chief Operating Officer; Bryan Martin, Vice President, Engineering and Chief Technical Officer; Sandra Abbott, Vice President, Finance and Chief Financial Officer; Chris McNiffe, Vice President, Marketing and Sales; Chris Peters, Vice President, Sales; Michael Noonen, Vice President, Business Development; Samuel Wang, Vice President, Process Technology; David Harper, Vice President, European Operations; Brett Byers, Vice President, General Counsel and Investor Relations.

Products and Processes: 8x8 has developed a Video Information Architecture (VIA) incorporating programmable integrated circuits (ICs) and compression/decompression algorithms (codecs) for audio/video communications. -

J-PARC MLF MUSE Muon Beams

For Project X muSR forum at Fermilab, Oct 17th-19th, 2012. J-PARC MLF MUSE muon beams. Yasuhiro Miyake, J-PARC MLF Muon Section/KEK IMSS. Status: D-Line: in operation. U-Line: commissioning started! S-Line: partially constructed! H-Line: partially constructed! Proton beam transport from the 3 GeV RCS to the MLF; on the way towards the neutron source, the graphite muon target! g-2, DeeMe, Mu-HF experiments etc. are planned. [Figure: layout of the Materials and Life Science Facility (MLF) for Muon & Neutron, showing the muon target, the neutron target, the D-, U-, S-, and H-Lines, and the neutron instruments.] Muon target: edge-cooling graphite fixed target; rotating graphite target. From January 2014! At present! Will be changed in summer 2013! Investigated during shut-down! S-Line: surface µ+ (30 MeV/c), for material sciences. H-Line: surface µ+, for HF and g-2 experiments; e- up to 120 MeV/c, for DeeMe; µ- up to 120 MeV/c, for µCF. U-Line: ultra slow µ+ (0.05-30 keV), for multi-layered thin foils, nano-materials, catalysis, etc. D-Line: surface µ+ (30 MeV/c), decay µ+/µ- (up to 120 MeV/c); users’ run, in operation. MUSE D-Line, since Sep. 2008. [The world-most intense pulsed muon beam achieved at J-PARC MUSE] At the J-PARC Muon Facility (MUSE), the intensity of the pulsed surface muon beam was recorded to be 1.8 × 10^6/s in November 2009, which was produced by a primary proton beam at a corresponding power of 120 kW delivered from the Rapid Cycle Synchrotron (RCS). -

FROM KEK-PS to J-PARC Yoshishige Yamazaki, J-PARC, KEK & JAEA, Japan

FROM KEK-PS TO J-PARC. Yoshishige Yamazaki, J-PARC, KEK & JAEA, Japan. Abstract: The user experiments at J-PARC have just started. J-PARC, which stands for Japan Proton Accelerator Research Complex, comprises a 400-MeV linac (at present: 180 MeV, being upgraded), a 3-GeV rapid-cycling synchrotron (RCS), and a 50-GeV main ring (MR) synchrotron, which is now in operation at 30 GeV. The RCS will provide the muon-production target and the spallation-neutron-production target with a beam power of 1 MW (at present: 120 kW) at a repetition rate of 25 Hz. The muons and neutrons thus generated will be used in materials science, life science, and others, including industrial applications. The beams that are fast extracted from the MR generate neutrinos to be sent to the Super [...] target are located in series. Every 3 s or so, depending upon the usage of the main ring (MR), the beam is extracted from the RCS to be injected to the MR. Here, it is ramped up to 30 GeV at present and slowly extracted to Hadron Experimental Hall, where the kaon-production target is located. The experiments using the kaons are conducted there. Sometimes, it is fast extracted to produce the neutrinos, which are sent to the Super Kamiokande detector, which is located 295-km west of the J-PARC site. In the future, we are conceiving the possibility of constructing a test facility for an accelerator-driven nuclear waste transmutation system, which was shifted to Phase II. We are trying every effort to get funding for this facility. -

EARLY SCHIZOPHRENIA DETECTION: Biomarker Found in Hair

SPRING 2020. SHOWCASING THE BEST OF JAPAN’S PREMIER RESEARCH ORGANIZATION • www.riken.jp/en. EARLY SCHIZOPHRENIA DETECTION: Biomarker found in hair. WHAT'S THE MATTER? Possible link between two cosmic mysteries. LIVING UNTIL 110: Abundance of immune cell linked to longevity. READING THE MIND OF AI: Cancer prognosis method revealed. ▲ Web of dark matter: A supercomputer simulation showing the distribution of dark matter in the local universe. RIKEN astrophysicists have performed the first laboratory experiments to see whether the interaction between dark matter and antimatter differs from that between dark matter and normal matter. If such a difference exists, it could explain two cosmological mysteries: why there is so little antimatter in the Universe and what is the true nature of dark matter (see page 18).

RIKEN, Japan’s flagship research institute, conducts basic and applied research in a wide range of fields including physics, chemistry, medical science, biology and engineering. Initially established as a private research foundation in Tokyo in 1917, -

[...] publication is a selection of the articles published by RIKEN at: https://www.riken.jp/en/news_pubs/research_news/ Please visit the website for recent updates and related articles. Articles showcase RIKEN’s groundbreaking results and are written for a non-specialist audience.

For further information on the research in this publication or to arrange an interview with a researcher, please contact: RIKEN International Affairs Division, 2-1, Hirosawa, Wako, Saitama, 351-0198, Japan. Tel: +81 48 462 1225. Fax: +81 48 463 3687.

ADVISORY BOARD. BIOLOGY: Kuniya Abe (BRC), Makoto Hayashi (CSRS), Shigeo Hayashi (BDR), Joshua Johansen (CBS), Atsuo Ogura (BRC), Yasushi Okada (BDR), Hitoshi Okamoto (CBS), Kensaku Sakamoto (BDR), Kazuhiro Sakurada (MIH). ARTIFICIAL INTELLIGENCE: Hiroshi Nakagawa (AIP), Satoshi Sekine (AIP). PHYSICS: Akira Furusaki (CEMS), Hiroaki Minamide (RAP), Yasuo Nabekawa (RAP), Shigehiro Nagataki (CPR), Masaki Oura (RSC).

Intel® Arria® 10 Device Overview

Intel® Arria® 10 Device Overview. A10-OVERVIEW | 2020.10.20. Contents: Intel® Arria® 10 Device Overview; Key Advantages of Intel Arria 10 Devices; Summary of Intel Arria 10 Features; Intel Arria 10 Device Variants and Packages (Intel Arria 10 GX; Intel Arria 10 GT; Intel Arria 10 SX); I/O Vertical Migration for Intel Arria 10 Devices; Adaptive Logic Module; Variable-Precision DSP Block; Embedded Memory Blocks (Types of Embedded Memory; Embedded Memory Capacity in -

Demystifying Internet of Things Security: Successful IoT Device/Edge and Platform Security Deployment, by Sunil Cheruvu, Anil Kumar, Ned Smith, David M

Demystifying Internet of Things Security: Successful IoT Device/Edge and Platform Security Deployment. Sunil Cheruvu (Chandler, AZ, USA), Anil Kumar (Chandler, AZ, USA), Ned Smith (Beaverton, OR, USA), David M. Wheeler (Gilbert, AZ, USA). ISBN-13 (pbk): 978-1-4842-2895-1. ISBN-13 (electronic): 978-1-4842-2896-8. https://doi.org/10.1007/978-1-4842-2896-8 Copyright © 2020 by The Editor(s) (if applicable) and The Author(s). This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. Open Access: This book is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made. The images or other third party material in this book are included in the book’s Creative Commons license, unless indicated otherwise in a credit line to the material.