Towards Realistic MIDI Instrument Synthesizers

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

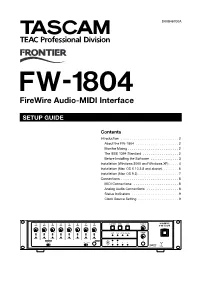

FW-1804 Firewire Audio-MIDI Interface

» D00846700A FW-1804 FireWire Audio-MIDI Interface SETUP GUIDE Contents Introduction ...............................2 About the FW-1804 .......................2 Monitor Mixing ...........................2 The IEEE 1394 Standard ...................2 Before Installing the Software ...............3 Installation (Windows 2000 and Windows XP) .....4 Installation (Mac OS X 10.2.8 and above).........6 Installation (Mac OS 9.2)......................7 Connections ...............................8 MIDI Connections ........................8 Analog Audio Connections .................8 Status Indicators .........................9 Clock Source Setting ......................9 Introduction About the FW-1804 • COMPUTER selects the signals from the DAW passed through the FireWire connection. The level of the signals from the computer is set using the master output control The FW-1804 provides your computer with high-quality of the DAW software and the two analog outputs may be audio facilities: eight channels of analog input and two of selected (using the software Control Panel) for output of output, with two channels of digital audio I/O through these signals. coaxial connections and eight channels of digital I/O through optical connections—at up to 96 kHz 24-bit. There • INPUTS selects the stereo mix of the analog, optical and are also two physical MIDI input and four physical output coaxial signals for monitoring. ports. • BOTH allows the computer signals to be monitored It is connected to the host computer using a single 6-pin to mixed with the input signals. 6-pin IEEE 1394 cable (supplied) that carries audio and Individual channels can be set to unity gain by pressing and MIDI information back and forth between the FW-1804 holding the computer's [Shift] key while clicking on the and the computer. -

United States Patent (19) 11 Patent Number: 6,157,976 Tien Et Al

USOO6157976A United States Patent (19) 11 Patent Number: 6,157,976 Tien et al. (45) Date of Patent: Dec. 5, 2000 54 PCI-PCI BRIDGE AND PCI-BUS AUDIO OTHER PUBLICATIONS ACCELERATOR INTEGRATED CIRCUIT PCI System Architecture, Tom Shanley/Don Anderson, 75 Inventors: Paul Tien, Fremont; Cheng-Yeuan 1995, pp. 381-382. Tsay, Pleasanton; Rsong-Hsiang Shiao, Fremont, all of Calif. Primary Examiner Ayaz R. Sheikh Assistant Examiner Rupal D. Dharia 73 Assignee: ESS Technology, Fremont, Calif. Attorney, Agent, or Firm-Gray Cary Ware & Freidenrich 57 ABSTRACT 21 Appl. No.: 09/074,657 A semiconductor device with an embedded PCI 2.1 com 22 Filed: May 6, 1998 pliant bridge provides expanded functionality as System 51511 Int. Cl. ............................. GO6F13FOO700; GO6F 13/38/ level implementationsp of a PCI-to-PCI bridge,9. and enhances 52 U.S. Cl. ............................ 710/129, 710/127, 710/64; the level of integration possible. The embedded PCI-to-PCI 345/435; 84/604; 84/621; 84/622; 84/647 bridge allows the creation of multi-function, multimedia 58) Field of Search 345/435: 710/127 add-on cards Supporting multiple devices. Multi-function, 710129,6484/602,604 621 622 647. multimedia Subsystems that provide audio, graphics, MPEG, s w is s s 454. 70425s etc., are mapped into a bridged-to PCI-bus that keeps Such s traffic off the main PCI-bus. The advantage for the system or 56) References Cited add-in card Vendor is that the various multimedia chips that are combined can come from different Sources, providing an U.S. PATENT DOCUMENTS optimized and highly customized combination of functions. -

Profire Lightbridge User Guide | 2 Introduction 1

34-in/36-out FireWire Lightpipe Interface User Guide English Table of Contents English . 2 Introduction . 2 What’s in the Box . 2 About ProFire Lightbridge . 3 ProFire Lightbridge Features . 4 System Requirements . 5 Controls and Connectors . 6 Front Panel . 6 Rear Panel . 7 Driver Installation . 8 Hardware Connections . 8 Audio . 8 MIDI . 9 Word Clock . 9 Using ProFire Lightbridge . 9 The Software Control Panel . 10 Hardware Page . 10 About Page . 13 Word Clock Synchronization . 14 Understanding Word Clock . 14 Specifications . 18 Warranty . 19 Warranty Terms . 19 Warranty Registration . 19 M-Audio ProFire Lightbridge User Guide | 2 Introduction 1 hank you for purchasing M-Audio’s ProFire Lightbridge interface. ProFire Lightbridge uses the ADAT optical T I/O standard to bring extensive digital connectivity to your studio. With its four ADAT optical inputs, four ADAT optical outputs, S/PDIF coaxial input and output, and stereo analog outputs, ProFire Lightbridge lets you connect a variety of devices to your FireWire-equipped digital audio workstation. Using the high-bandwidth, industry-standard FireWire (IEEE1394) protocol, ProFire Lightbridge gives your DAW up to 34 audio inputs and 36 outputs while connecting to your computer via a single cable. This makes it perfect for multi-channel transfers to and from external multitrack recorders. ProFire Lightbridge is also ideal for linking your DAW to an external digital mixer, or for connecting to another computer hosting soft synths and signal processors. This manual will explain the features and operation of ProFire Lightbridge. Even if you are an experienced recording enthusiast, please take a moment to read this guide and familiarize yourself with all of the unique features of your ProFire Lightbridge. -

US-16X08 Reference Manual

D01247020B US-16x08USB2.0 Audio Interface/Mic Preamp Reference Manual Before connecting this unit to a computer, you must download and install a dedicated driver. Contents 1 – Introduction ..............................................3 Windows 8 ....................................................................23 Features ..................................................................................3 Windows 7 ....................................................................23 Conventions used in this manual ..................................3 Mac OS X and iTunes ........................................................24 iOS ..........................................................................................24 2 – Names and functions of parts ..................4 Front panel ............................................................................4 9 – MIDI Implementation Chart ...................25 Rear panel ..............................................................................5 10 – Troubleshooting ...................................26 3 – Installation ................................................6 Troubleshooting ................................................................26 System requirements.........................................................6 11 – Specifications ........................................28 Windows ..........................................................................6 Specifications .....................................................................28 Mac OS X..........................................................................6 -

3.4.1 SPI - Serial Peripheral Interface

DIPLOMARBEIT Herr Axel Schneider Entwicklung einer updatefähigen Embedded-Linux-Hardwareplattform zum Einsatz in einer speziellen Gerätesteuerung Mittweida, 2012 Fakultät Elektro- und Informationstechnik DIPLOMARBEIT Entwicklung einer updatefähigen Embedded-Linux-Hardwareplattform zum Einsatz in einer speziellen Gerätesteuerung Autor: Herr Axel Schneider Studiengang: Elektrotechnik Schwerpunkt Energiesystemtechnik Seminargruppe: ET07wE-D Erstprüfer: Prof. Dr.-Ing. Thomas Beierlein Zweitprüfer: Dipl.-Ing. (FH) Jan Färber Einreichung: Mittweida, 17.08.2012 Bibliografische Angaben: Schneider, Axel: Entwicklung einer updatefähigen Embedded-Linux-Hardwareplatt- form für den Einsatz in einer speziellen Gerätesteuerung - 2012 – 74 Seiten, 43 Abbildungen, 13 Tabellen, 3 Anlagen , Mittweida, Hochschule Mittweida (FH), University of Applied Sciences, Fakultät Elektro- und Informationstechnik Diplomarbeit, 2012 Referat: Das Projekt „Pfeifen-Orgel mit dynamischer Stimmung“ ist ein Steuerungssystem zur Verbesserung der Klangqualität einer Orgel. Das System besteht aus dezentralen Elementen und einer zentralen Steuerung. Diese Arbeit befasst sich mit der Entwicklung der zentralen Einheit, der Zentralen Ak- tor-Steuerung. Ihre Aufgabe umfasst grundlegend die Mikrocontroller gestützte Da- tenverarbeitung und Kommunikation über spezielle, im Projekt benötigte Peripherie. Für die an diese Arbeit angrenzende Entwicklung der Steuerungssoftware, verfügt die Hardwareplattform über ein angepasstes Embedded Linux. Inhaltsverzeichnis Inhaltsverzeichnis...............................................................................................I -

Apogee Ensemble Thunderbolt Audio Interface

Apogee Ensemble Thunderbolt Audio Interface User’s Guide Fall 2014 Contents Overview!...........................................................................................................5 Introduction!................................................................................................................5 Features!.......................................................................................................................5 Package Contents!......................................................................................................6 Ensemble Thunderbolt Panel Tour!...........................................................................7 Front Panel!.................................................................................................................7 Rear Panel!..................................................................................................................8 Display!........................................................................................................................9 Input Settings Display Screen!..................................................................................9 Getting Started!...............................................................................................10 Precautions when powering Ensemble On/Off!......................................................10 Thunderbolt Notes!....................................................................................................10 Ensemble Software!..................................................................................................11 -

User's Guide M-Audio Ozone

M-Audio Ozone version: MA-Ozone_052803 User’s Guide Introduction . .2 M-Audio Ozone Features . .2 M-Audio Ozone Overview . .2 What’s in the Box . .3 Guide to Getting Started . .4 M-Audio Ozone Panel Features . .4 Top Panel . .4 Rear Panel . .6 M-Audio Ozone Driver Installation . .7 Driver Installation for Windows . .7 Windows XP: . .8 Windows 2000: . .12 Windows ME: . .16 Windows 98SE: . .18 M-Audio Ozone and the Windows Sound System . .23 Macintosh Driver Installation . .23 OMS Installation . .24 M-Audio Ozone Driver Installation . .24 OMS Configuration (Mac OS9 only) . .25 M-Audio Ozone and the Mac OS 9 Sound Manager . .27 M-Audio Ozone and Mac OS X . .27 The M-Audio Ozone Control Panel . .28 Application Software Setup . .30 M-Audio Ozone Hardware Installation . .31 M-Audio Ozone Audio Setup and Control . .31 Using the Mic and Instrument Inputs . .33 Setting Input Gain . .34 Phantom Power . .34 Using the Aux Inputs . .35 Using Direct Monitor . .36 M-Audio Ozone MIDI Setup and Control . .37 MIDI Functions In Standalone Mode . .39 Utilizing the Programming Assignment Keys . .39 Technical Support & Contact Information . .44 M-Audio Ozone Warranty Information . .45 M-Audio Ozone Technical Specifications . .46 Appendix A - MIDI Controller Information . .47 Appendix B - M-Audio Ozone Block Diagram . .48 Introduction Congratulations on your purchase of the M-Audio Ozone. The M-Audio Ozone is an innovative product—a powerful combination of MIDI controller and audio interface with microphone and instrument preamps that will turn your computer into a virtual music production studio. You may use your M-Audio Ozone in conjunction with a USB-equipped PC or Macintosh computer and appropriate music software to enter a full range of MIDI note and controller information, as well as record and play back your voice, guitar, or external sound modules. -

Serial Communication Buses

Computer Architecture 10 Serial Communication Buses Made wi th OpenOffi ce.org 1 Serial Communication SendingSending datadata oneone bitbit atat oneone time,time, sequentiallysequentially SerialSerial vsvs parallelparallel communicationcommunication cable cost (or PCB space), synchronization, distance ! speed ? ImprovedImproved serialserial communicationcommunication technologytechnology allowsallows forfor transfertransfer atat higherhigher speedsspeeds andand isis dominatingdominating thethe modernmodern digitaldigital technology:technology: RS232, RS-485, I2C, SPI, 1-Wire, USB, FireWire, Ethernet, Fibre Channel, MIDI, Serial Attached SCSI, Serial ATA, PCI Express, etc. Made wi th OpenOffi ce.org 2 RS232, EIA232 TheThe ElectronicElectronic IndustriesIndustries AllianceAlliance (EIA)(EIA) standardstandard RS-232-CRS-232-C (1969)(1969) definition of physical layer (electrical signal characteristics: voltage levels, signaling rate, timing, short-circuit behavior, cable length, etc.) 25 or (more often) 9-pin connector serial transmission (bit-by-bit) asynchronous operation (no clock signal) truly bi-directional transfer (full-duplex) only limited power can be supplied to another device numerous handshake lines (seldom used) many protocols use RS232 (e.g. Modbus) Made wi th OpenOffi ce.org 3 Voltage Levels RS-232RS-232 standardstandard convertconvert TTL/CMOS-levelTTL/CMOS-level signalssignals intointo bipolarbipolar voltagevoltage levelslevels toto improveimprove noisenoise immunityimmunity andand supportsupport longlong cablecable lengthslengths TTL/CMOS → RS232: 0V = logic zero → +3V…+12V (SPACE) +5V (+3.3V) = logic one → −3V…−12V (MARK) Some equipment ignores the negative level and accepts a zero voltage level as the "OFF" state The "dead area" between +3V and -3V may vary, many receivers are sensitive to differentials of 1V or less Made wi th OpenOffi ce.org 4 Data frame CompleteComplete one-byteone-byte frameframe consistsconsists of:of: start-bit (SPACE), data bits (7, 8), stop-bits (MARK) e.g. -

EXB-FW for M3 Manual

EXB-FW for M3 Manual EXB-FW option (FireWire board) If you install the optional EXB-FW in the M3, you’ll be application. For details, refer to the manual of the able to use its Virtualized Hardware functionality. software you’re using. Virtualized Hardware is functionality that allows For more about the EXB-FW, refer to the manual audio signals and MIDI messages from a Korg included with the EXB-FW. hardware device supporting this functionality to be routed via a dedicated Korg editor running in your DAW software or other host application and sent to tracks in your host application, letting you use your EXB-FW parameters Korg hardware device as though it were a plug-in software instrument. When you install the EXB-FW, the following M3 In order to use the Virtualized Hardware functionality, parameters will become available. For details on the you’ll need to connect the M3 to your computer via a parameters, refer to the corresponding pages of the M3 FireWire (IEEE 1394) cable, and use the M3 Editor/ Parameter Guide. Plug-In Editor version compatible with the EXB-FW. Program mode With this setup, you’ll be able to perform various types • Prog P0: Play– Sampling/Audio In of control including total recall, as follows. “Input” (→ PG p.9) • Edit and set up the M3’s various mode parameters → via MIDI. “Source Bus” ( PG p.10) • Send and receive the M3’s 2-in and 6-out digital The menu command “Auto Sampling Setup” REC → audio signals. Audio Input ( PG p.110) You can send the M3’s audio signals to tracks in • Prog P0: Play– Control Surface, Mixer Input your host application using the Virtualized Hard- “Audio Play/Mute,” “Audio Solo,” “Audio Vol- ware functionality. -

User Manual Audiofuse - Overview 2 a Version with More Details Is Available After Registration On

USER MANUAL Special Thanks DIRECTION Frederic Brun Adrien Courdavault Nicolas Dubois ENGINEERING Pierre Demouveaux Pierre Pfister Jérome Laurent Mathieu Nocenti Philippe Wicker MANUAL Adrien Courdavault Jérémie Weber Morgan Perrier Germain Marzin DESIGN Frederic Brun Daniel Vester Glen Darcey Fabien Deboves Morgan Perrier Sébastien Rochard © ARTURIA SA – 2017 – All rights reserved. 11 Chemin de la Dhuy - 38240 Meylan - FRANCE www.arturia.com Information contained in this manual is subject to change without notice and does not represent a commitment on the part of Arturia. The software described in this manual is provided under the terms of a license agreement or non-disclosure agreement. The software license agreement specifies the terms and conditions for its lawful use. No part of this manual may be reproduced or transmitted in any form or by any purpose other than purchaser’s personal use, without the express written permission of ARTURIA S.A. All other products, logos or company names quoted in this manual are trademarks or registered trademarks of their respective owners. Product version: 1.0 Revision date: 24 February 2017 Thank you for purchasing the Arturia AudioFuse! Dear musician, AudioFuse is the revolutionary next-gen pro audio interface that sets a new standard in sonic quality, creative production and value. It fuses the superior sound of high-end analog studio consoles with the flexibility of a solid mobile interface—with all the connectivity you need for any recording or performance. AudioFuse comes with very high quality audio pre-amplifier based on the DiscretePro technology. AudioFuse connects you to a whole world of possibilities, gears and formats. -

Oxygen 8 Version: O8-090402

Oxygen 8 version: O8-090402 Users Manual Introduction . .2 Features . .2 Oxygen 8 Overview . .2 What’s in the Box . .4 Guide to Getting Started . .4 Hardware Installation . .4 Software Installation . .5 USB Driver Installation for Windows . .5 USB Driver Installation for Macintosh . .8 First Time OMS Configuration Instructions . .9 Setting Up Your MIDI Studio . .10 Using the Oxygen 8 in your Application Software . .11 Oxygen 8 MIDI Functions . .12 MIDI Functions In Standalone Mode . .12 Default Settings of The Oxygen 8 . .13 Overall Diagram Preview . .13 Operation Panel . .13 Utilizing the Key Programming Assignment Keys . .14 Rear Panel . .17 Specifications . .18 Technical Support & Contact Information . .19 MIDI Implementation Chart . .20 Appendix A-Midi Controller Chart . .21 Limited 90 Day Warranty . .22 Introduction Congratulations on your purchase of the Oxygen 8, part of the USB Keystation series of MIDI controllers brought to you by Midiman. You may use your Oxygen 8 in conjunction with a USB equipped PC or Macintosh computer and appropriate music software to enter a full range of MIDI note and controller information. You will be able to discover the wonderful world of Computer Music with a set of complete musical instruments from your sound card, sound modules, or workstation. The Oxygen 8 gives you full control over MIDI parameters, while utilizing proven Midiman USB MIDI interface technology. The Oxygen 8 will also serve your needs as a standalone MIDI controller. This manual will help you become familiar with the powerful features of the Oxygen 8. After reading the manual carefully, you will have a clear understanding of how to transmit different MIDI messages to other instruments and equipment. -

Quantum-Series Ultra-Low Latency Thunderbolt™ Audio Interfaces and Studio Command Centers Owner’S Manual

Quantum-series Ultra-low latency Thunderbolt™ Audio Interfaces and Studio Command Centers Owner’s Manual ® English www.presonus.com Table of Contents 1 Overview — 1 5 Aggregating Devices — 19 1.1 Introduction — 1 5.1 macOS — 19 1.2 Quantum-series Interface Hardware 5.2 Windows — 21 Features — 1 6 Studio One Artist Quick Start — 23 1.3 UC Surface Features — 2 6.1 Installation and Authorization — 23 1.4 Studio One Artist Features — 2 6.2 Setting Up Studio One — 24 1.5 What is in the Box — 2 6.2.1 Configuring Audio Devices — 25 2 Hookup — 3 6.2.2 Configuring MIDI Devices — 25 2.1 Front Panel Connections and Controls — 3 6.3 Creating a New Song — 29 2.2 Back Panel Connections — 5 6.3.1 Configuring Your I/O — 30 2.3 Quantum Hookup Diagram — 8 6.3.2 Creating Audio and MIDI Tracks — 31 6.3.3 Recording an Audio Track — 32 2.4 Quantum 2 Hookup Diagram — 9 6.3.4 Adding Virtual Instruments 3 Connecting to a Computer — 10 and Effects — 33 3.1 Installation for Windows and macOS — 10 6.4 Integrated Quantum Controls — 35 6.4.1 Preamp Controls — 35 3.2 Using a Quantum Interface with Popular Audio Applications — 10 6.4.2 Talkback and Monitoring — 36 3.3 Controlling Quantum Mic Preamps 6.5 Monitor Mixing in Studio One — 36 with MIDI — 12 6.5.1 Cue Mix Functions — 36 4 UC Surface Control Software — 13 6.5.2 Punching In — 37 4.1 UC Surface Launch Window — 14 7 Technical Information — 39 4.2 Input Controls — 14 7.1 Specifications — 39 4.3 Main Knob Controls — 15 8 Warranty Information — 41 4.4 Main Output Controls — 15 8.1 How Consumer Law Relates 4.5 Talkback (Quantum) — 15 To This Warranty — 41 4.6 Headphone Select — 16 4.7 MIDI Control — 16 4.8 RTA — 17 1 Overview Quantum-series 1.1 Introduction Owner’s Manual 1 Overview 1.1 Introduction Thank you for purchasing a PreSonus Quantum-series Thunderbolt Audio Interface and Studio Command Center.