Cache Coherence: Part I

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

-

Cover Story 18 / 19 Side a Fever Ray

Cover Story 18 / 19 Side A Fever Ray Plunge è la rivoluzione sessuale di Karin Dreijer. Difuso lo scorso ottobre, l’album arriva questo mese in formato fisico, assieme a un atteso tour in cui la misteriosa artista svedese del duo The Knife promette di confondere, ancora una volta, le aspettative del pubblico Testo di Giuseppe Zevolli Fotografie di Martin Falck “Nessuno sguardo irrispettoso / Ubriaca e felice, felice in uno spazio sicuro”, mormora la voce robotica di Karjn Dreijer in A Part Of Us, uno dei brani chiave di Plunge, il suo secondo disco a nome Fever Ray. “Una mano nella tua e l’altra stretta in un pugno”, Quest’urgenza di esprimersi è riflessa nell’uso continua. A Part Of Us celebra la sensazione di della voce, per la prima volta in primissimo piano, protezione degli spazi queer, dipinti come delle anni luce dalle cupe distorsioni dell’album del oasi sospese nel tempo, in cui l’espressione della 2009. Fever Ray era un esercizio di camouflage propria identità e del proprio desiderio rifuggono notturno a confronto con la scattante, penetrante dallo sguardo giudicante incontrato troppo spesso elettronica di Plunge. Nonostante la fama di autrice al loro esterno. Uno sguardo proveniente da chi sfuggente e la storia di amore-odio con la stampa, vorrebbe infondere nei loro abitanti un antico senso chiarezza e entusiasmo finiscono per animare di vergogna. Pur essendo reali, tangibili, Dreijer anche la nostra chiacchierata Skype. Dreijer parte rappresenta questi spazi come dei microcosmi in sordina, scandendo qualche breve risposta e felici in anticipazione di un’utopia allargata ancora prendendosi delle brevi pause per riflettere sul in divenire. -

Kumouksen Kynnyksellä Queer-Feministinen Luenta the Knifen Shaking the Habitual Show'sta

HELSINGIN YLIOPISTO Kumouksen kynnyksellä Queer-feministinen luenta the Knifen Shaking the Habitual Show'sta Olga Palo Pro gradu -tutkielma Sukupuolentutkimus Filosofian, historian, kulttuurin ja taiteiden tutkimuksen laitos Humanistinen tiedekunta Helsingin yliopisto Huhtikuu 2016 Tiedekunta/Osasto – Fakultet/Sektion – Faculty Laitos – Institution – Department Humanistinen tiedekunta Filosofian, historian, kulttuurin ja taiteiden tutkimuksen laitos Tekijä – Författare – Author Olga Palo Työn nimi – Arbetets titel – Title Kumouksen kynnyksellä. Queer-feministinen luenta The Knifen Shaking the Habitual Show'sta. Oppiaine – Läroämne – Subject Sukupuolentutkimus Työn laji – Arbetets art – Level Aika – Datum – Month and Sivumäärä– Sidoantal – Number of pages year Pro gradu Huhtikuu 2016 84 Tiivistelmä – Referat – Abstract Judith Butlerin teoretisointi performatiivisesta sukupuolesta on vaikuttanut suuresti sukupuolentutkimukseen, mutta myös muun muassa kulttuurin- ja taiteidentutkimukseen. Myös ajatus sukupuolen luonnollisuutta horjuttavista kumouksellisista teoista nousee Butlerin kirjoituksista. The Knife-yhtyeen Shaking the Habitual Show esimerkkiaineistonaan tämä työ tutkii, mitä kumouksellisuus voisi olla esittävissä taiteissa ja kysyy, voiko Shaking the Habitual Show'ta ajatella esimerkkinä kumouksellisista käytännöistä. Performatiivisuuden ajatuksen soveltaminen esittäviin taiteisiin on paikoin nähty haastavana. Ongelmallisuus liittyy ennen kaikkea siihen, että taideteokselle on perinteisesti oletettu tekijä, kun taas performatiivisen -

2018, in English and German

Tuesday, October 9, 2018 Wednesday, October 3, 2018 An article on Saturday about political fric- An article on Monday about a proposal tion between Ukraine and Hungary mis- by the Trump administration to weaken spelled the name of a Hungarian far-right regulations on mercury emissions referred group. It is Jobbik, not Jobbick. incorrectly to the initial estimate of direct health benefits from the mercury rule. The An article on Sunday about advice given to Obama administration estimated those Brett M. Kavanaugh by the White House benefits to be worth $6 million per year, counsel, Don McGahn, misstated when not $6 billion. Corrections and Senator Lindsey Graham, Republican of South Carolina, had dinner with three A chart with an article on Feb. 13 about Republican senators who were undecided drinking water safety across the United on Judge Kavanaugh’s confirmation. The States represented incorrectly the scale of dinner was Friday, September 28, not the y-axis. The chart shows violations of Thursday, September 27. water quality regulations per 10 water sys- tems in rural areas, not per 100 systems. An article on Sept. 30 about former N.F.L. ClarificationsKorrekturen, Berichtigungen, Klarstellungen, Gegendarstellungen cheerleaders speaking out about work- An article on Tuesday about widespread place harassment misstated the surname of delays affecting British train companies a lawyer representing several of the cheer- misidentified the organization that owns those that responded in full, a stun gun was An essay last Sunday about the effects of misstated the name of the unfinished novel leaders. She is Sara Blackwell, not Davis. the United Kingdom’s railroad infrastruc- fired 21 times, not 24. -

THE DAILY TEXAN 94 71 Tuesday, September 14, 2010 Serving the University of Texas at Austin Community Since 1900

1 LIFE&ARTS PAGE 14 Healthy tips to prevent gaining ‘freshman 15’ Slacklining gives participants rush SPORTS PAGE 8 LIFE&ARTS PAGE 14 Golf recruit looks forward to life on 40 Acres next year TOMORROW’S WEATHER High Low THE DAILY TEXAN 94 71 Tuesday, September 14, 2010 Serving the University of Texas at Austin community since 1900 www.dailytexanonline.com TODAY City eateries honor Mexico Libraries’ Calendar periodical Support human rights The Human Rights Documentation Initiative and Texas After Violence will co-host resources a reception to teach students about how they can support human rights documentation and education in Texas. From 5 to 7 p.m. in the Benson Latin in danger American Collection Rare Books Room SRH 1.108. By Lauren Bacom Daily Texan Staff HFSA More than 2,000 academic journals, research Margarita Arellano, associate materials and databases could be eliminated from vice president for student affairs libraries across campus beginning in the 2011-12 and dean of students at Texas academic year. State University, will speak at Fred Heath, vice provost and director of UT Li- the first meeting of the Hispanic braries, requested a 33.5-percent reduction in the Faculty/Staff Association from amount of money the libraries spend on research 11:30 a.m. to 1 p.m. All faculty materials earlier this year. and staff employed at least 20 The library staff looked for titles that would not have a negative impact on students, said Dennis hours a week at the University Catalina Padilla | Daily Texan Staff are invited to attend regardless Dillon, the research service associate director for Diana Kennedy, author of the book “Oaxaca al Gusto,” talks to owners of the restaurant La Margarita about authentic Mexican the UT Libraries. -

Flyinglow – Debut Album from Sweden's New Deep Electronica Artist

Jun 09, 2017 12:17 EDT Flyinglow – Debut Album from Sweden's New Deep Electronica Artist Following the well-received single 'Clockwork' this spring, Flyinglow brings us a fresh, yet classic, deep, Scandinavian electronica sound with today’s self- titled album debut. After The Knife’s iconic Silent Shout album some ten years ago, Scandinavian electronica has become synonymous with the uplifting, pop-infused EDM sound developed by the likes of Swedish House Mafia, Avicii and Kygo, but the last few years have seen an increasing number of dark and deep electronic artists come out of the region. Following the release of the single 'Clockwork', Flyinglow now releases his first album. The 7 track electronic debut is a dynamic work of electronica balancing on the thin line between slightly experimental shoegaze and pure pop aesthetic. About Flyinglow Behind the moniker, we find the Swedish Uppsala-based musician Joel Gabrielsson. Joel has a very international backgound. He grew up in Singapore, studied at the School of Movement and Performance in Finland and in 2009 relocated to Ukraine for a couple of years. It was during this time that his interest in composing music started taking a more prominent role. He was particularly interested in electro-acoustic and ambient music, and began to experiment with sounds, textures and electronics in his home studio. The following years were spent fronting the drone-pop band Toys in the Well and performing venues around Europe. After moving back to Sweden, into a small cottage in the countryside, Joel began to develop a new, solo recording project. -

Die Schirn Zeigt Anlässlich Des Bauhaus-Jubiläums Eine

IM ZEICHEN DER AVANTGARDE: ERSTE SCHIRN AT NIGHT 2016 MIT KUNST DER STURM- FRAUEN UND MUSIK VON KARIM & KARAM – KARIN DREIJER UND MARYAM NIKANDISH PLANET AVANTGARDE – SCHIRN AT NIGHT ZUR AUSSTELLUNG „STURM-FRAUEN“ SAMSTAG, 16. JANUAR 2016, AB 20 UHR SCHIRN KUNSTHALLE FRANKFURT DJ-SHOW VON KARIM & KARAM UM 22 UHR MIT MARYAM NIKANDISH UND KARIN DREIJER (THE KNIFE / FEVER RAY) TICKETS IM VVK ONLINE UNTER WWW.SCHIRN.DE UND AN DER SCHIRN-KASSE: 10 €, ABENDKASSE AB 20 UHR: 12 € DRESSCODE: CRAZY AVANTGARDE GALAXY LOOK Die erste SCHIRN AT NIGHT im neuen Jahr steht im Zeichen der Avantgarde. Am 16. Januar 2016, ab 20 Uhr, lassen sich unter dem Motto „Planet Avantgarde“ zum einen in der Ausstellung STURM-FRAUEN rund 280 Kunstwerke von insgesamt 18 Künstlerinnen des Expressionismus, des Kubismus, des Futurismus, des Konstruktivismus und der Neuen Sachlichkeit entdecken. Zum anderen präsentiert die schwedische Sängerin und Ikone der elektronischen Musik Karin Dreijer zusammen mit Maryam Nikandish ihr neues DJ-Projekt KARIM & KARAM. Mit temperamentvollen Beats von Aster Aweke, Zhala, Omar Soleiman, Planningtorock, Andy & Kouros, Shahram Shabpareh, Cleo, DJ Nigga Fox, Azealia Banks, Death Grips und vielen mehr werden sie ab 22 Uhr das Publikum in ihre Welt der eklektisch-elektronischen Sounds mitnehmen. Karin Dreijer, bekannt von The Knife, ist eine der innovativsten und einflussreichsten Elektro- Künstlerinnen der letzten Jahrzehnte. Für das Solo-Projekt Fever Ray kombinierte Dreijer etwa mysteriöse elektronische Musik mit karibischen Elementen. Neben Drinks und Snacks des Teams von Badias Catering bietet die SCHIRN AT NIGHT zum Einstieg sowie im Anschluss an den Live Act ein DJ-Set der Frankfurterin GERI. -

Tomorrow, in a Year *. O

• ~ SAISON 2010-2011 TOMORROW, IN A YEAR *. Hotel Pro Forma/^ ^ a The Knife • •• • •• •• • O vu w .s o g s o o *3 «-J 2 OPÉRA NATIONAL DE LORRAINE TOMORROW, IN A YEAR Hotel Pro Forma/ The Knife ÉLECTRO-OPÉRA DARWINIEN CRÉÉ LE 2 SEPTEMBRE 2009 AU THÉÂTRE ROYAL DU DANEMARK À COPENHAGUE OUVRAGE CHANTÉ MEZZO-SOPRANO DIRECTION ARTISTIQUE, DIRECTEUR EN ANGLAIS, SURTITRÉ Kristina Wahlin MISE EN SCÈNE DE PRODUCTION Ralf Rîchardt Jesper Sonderstrup DURÉE DE L'OUVRAGE : CHANTEUSE/ACTRICE Strobech, 1 H 20 SANS ENTRACTE Laerke Winther INGÉNIEUR DU SON Kirsten Dehlholm Troels Bech Jessen CHANTEUR MUSIQUE Jonathan Johansson INGÉNIEUR LUMIÈRE The Knife Frederik Heitmann DANSEURS COLLABORATION 20, 21 JANVIER 2011 À 20H Lisbeth Sonne RÉGIE PLATEAU MUSICALE Eva Hoffmann 22 JANVIER 2011 À 15 H ET20H Andersen, Mt. Sims, Agnete Beierholm, 23 JANVIER 2011 À 15 H Planningtorock RESPONSABLE DE Alexandre Bourdat, LA CONSTRUCTION Bo Madvig, LIVRET Oskars Plataiskalns Jacob Stage, The Knife, Mt. Sims, TECHNICIEN PLATEAU an Strobech Charles Darwin Janis Linins CONCEPT, SCÉNOGRAPHIE ANIMATION Ralf Richardt Louise Springborg, Strobech Birk Marcus Hansen CRÉATION LUMIÈRES Jesper Kongshaug ACCESSOIRES Maja Dahl Nielsen CRÉATION SON Anders Jorgensen CONSEILLER À LA CHORÉGRAPHIE COSTUMES Hiroaki Umeda Maja Ravn ARCHITECTE Runa Johannessen ATELIERS Soren Romer, Oskars Plataiskalns 3 TOMORROW. IN A YEAR COPRODUCTION LA BATIE #%. STATENS. -FESTIVAL DE GENÈVE, %„J; KUNSTRÂD HELLERAU - CENTRE i\V DANISH ARTS COUNCIL EUROPÉEN DES ARTS DE DRESDE, THE CONCERT HALL DE AARHUS, LA DANSENS HUS DE STOCKHOLM ET MUSIKHUSET AARHUS HOTEL PRO FORMA. «TOMORROW, IN A YEAR» DAN/EN/ BÉNÉFICIE DU SOUTIEN —HU/=- DES FONDATIONS BIKUBEN, OTICON, TOYOTA, BECKETT, KNUD COUTURE COACH VOCAL H0JGAARDS, SONNING, Siri Vilbol Ole Rasmus Moller TUBORG, DU NORDIC CULTURE POINT ET DU COSTUMES TRICOTÉS IMAGES FOND DE COOPÉRATION Rikke Jo Tholstrup Claudi Thyrrestrup, ENTRE LA SUÈDE ET MAQUILLAGE Runa Johannessen LE DANEMARK. -

Sight&Sound 5

SUNDAY MORNING POST DECEMBER 27, 2009 Sight&Sound 5 rock. Despite the horrendous first “The Automator” Nakamura for a set single, Know Your Enemy, this is a that took Stonesy blues rock and cohesive outing that tells an Ennio Morricone and hauls them interesting, albeit slightly contrived, into the 21st century, complete with story of a lovelorn couple. Horseshoes killer riffs. and Handgrenades is about as close to punk as we’re likely to get from Yuksek Green Day again. Away from the Sea (Barclay) Illustration: Teresa Tan Teresa Illustration: Somewhere between Bob Sinclar, The Dead Weather Justice and Hall and Oates, Horehound (Warner) French-born music producer, Even when Jack White takes a back remixer and DJ Pierre-Alexandre seat – literally – as a drummer, his Busson channels 80s rock and trademark bluesy guitar riff can still electro, Britpop and pounding be heard throughout the album. But dance floor beats for a schizophrenic with other members such as Alison but irresistible set. Mosshart and Jack Lawrence sharing the vocals, the Dead Weather offer a Yeah Yeah Yeahs fix for those in need of some earthy It’s Blitz! (Polydor) garage rock. Taking an electro turn, the Yeahs manage to up their game without Florence + the Machine betraying their punk roots with a set Lungs (Island) of songs that, despite a pulsating Lungs is 45 minutes of soulful vocal new electronic sheen, still sounds as bliss. Florence Welch spills her soul if they’d been made for guitars. Their on tracks such as I’m Not Calling most satisfying album yet and a You a Liar and My Boy Builds Coffins. -

The Deadly Affairs of John Figaro Newton Or a Senseless Appeal to Reason and an Elegy for the Dreaming

The deadly affairs of John Figaro Newton or a senseless appeal to reason and an elegy for the dreaming Item Type Thesis Authors Campbell, Regan Download date 26/09/2021 19:18:08 Link to Item http://hdl.handle.net/11122/11260 THE DEADLY AFFAIRS OF JOHN FIGARO NEWTON OR A SENSELESS APPEAL TO REASON AND AN ELEGY FOR THE DREAMING By Regan Campbell, B.F.A. A Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Fine Arts in Creative Writing University of Alaska Fairbanks May 2020 APPROVED: Daryl Farmer, Committee Chair Leonard Kamerling, Committee Member Chris Coffman, Committee Member Rich Carr, Chair Department of English Todd Sherman, Dean College of Liberal Arts Michael Castellini, Dean of the Graduate School Abstract Are you really you? Are your memories true? John “Fig” Newton thinks much the same as you do. But in three separate episodes of his life, he comes to see things are a little more strange and less straightforward than everyone around him has been inured to the point of pretending they are; maybe it's all some kind of bizarre form of torture for someone with the misfortune of assuming they embody a real and actual person. Whatever the case, Fig is sure he can't trust that truth exists, and over the course of his many doomed relationships and professional foibles, he continually strives to find another like him—someone incandescent with rage, and preferably, as insane and beautiful as he. i Extracts “Whose blood do you still thirst for? But sacred philosophy will shackle your success, for whatsoever may be your momentary triumph or the disorder of this anarchy, you will never govern enlightened men. -

STA0151 Complet BD.Pdf

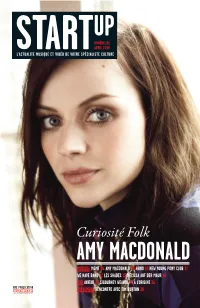

UP NUMÉRO 151 STARTAVRIL 2010 L’ACTUALITE MUSIQUE ET VIDÉO DE VOTRE SPÉCIALISTE CULTURE Curiosité Folk AMY MACDONALD MUSIQUE MGMT 06 AMY MACDONALD 08 ARNO 10 NEW YOUNG PONY CLUB 11 WE HAVE BAND 12 LES SHADES 13 MELISSA AUF DER MAUR 14 DVD AVATAR 23 SIGOURNEY WEAVER 24 À L’ORIGINE 26 UNE PUBLICATION ÉVÉNEMENT RENCONTRE AVEC TIM BURTON 30 SOMMAIRE 06 12 08 13 News / 06 MGMT 06 /John Butler Trio 06 / Camélia Jordana 07 / Angus & Julia Stone 07 / Françoise Hardy 07 / Interviews / 08 Amy Macdonald 08 / Slash 09 / Arno 10 / New Young Pony Club 11 / We Have Band 12 / Curry & Coco 12 / Les Shades 13 / Melissa Auf Der Maur 14 / Mark Linkous 15 / Chroniques musique / 16 Pop/Rock 16 / Rap-Soul 18 / Electro 19 / Autres musiques 20 / Français 21 Chroniques DVD / 23 DVD du mois: Avatar 23 / DVD 26 Événement / 30 Tim Burton aux pays des merveilles 30 UP START est édité par la société PRÉLUDE ET FUGUE, SAS de presse au capital de 30 000 euros, 29, rue de Châteaudun 75308 PARIS Cedex 09 Tél.: 01 75 55 43 44 Fax: 0175554111 DIRECTEUR DE PUBLICATION Marc FEUILLÉE / PRINCIPAL ACTIONNAIRE GROUPE EXPRESS-ROULARTA / RÉDACTION RÉDACTRICE EN CHEF Melody LEBLOND [email protected] / RÉDACTION Paul Alexandre, Antoine Beretto, David Bouseul, Hervé Crespy, Laurent Denner, Hervé Guilleminot, Maxime Goguet, Héléna McDouglas, Bertrand Rocher, Guillaume Graf / CONCEPTION GRAPHIQUE Didier FITAN MANAGEMENT ÉDITEUR DÉLÉGUÉ Tristan THOMAS / DIRECTRICE RÉGIE Véronique PICAN / PUBLICITÉ Tristan THOMAS [email protected] / FABRICATION Pascal DELEPINE avec Laurence BIDEAU / PRÉPRESSE GROUPE EXPRESS-ROULARTA / IMPRIMÉ EN BELGIQUE Roularta Printing / Photographie couverture D.R. -

GSU President to Become a Paid Role

FR IDAY, 3RD NOVEMBER, 2017 – Keep the Cat Free – ISSUE 1674 Felix The Student Newspaper of Imperial College London NEWS Event cancelled after speaker accused of rape PAGE 4 MUSIC Our guide to October’s best music releases PAGE 12 From this year onwards, the GSU President will earn £10,000 per year. // Stewart Oak FILM GSU President to become a paid role NEWS Students’ Union”, would Shamso spoke of the postgraduate representa- committee, the majority see the GSU President re- difficulties he had faced tion at Imperial”. He told of whom were voted in Fred Fyles ceiving an annual stipend as a result of taking on Felix that the President last month, the President Editor-in-Chief of £10,000. The money the GSU President role: is “placed under huge should “further student-re- would be funded by Im- “Spending a large amount amounts of pressure, not lated policy and influence The count-down perial’s Education Office, of my time on enriching only having to lead and decisions for the benefit The Graduate Students and would not come from the life of postgraduate motivate a committee of of Imperial College’s of best actresses Union President will be the Union itself. The po- students at Imperial has elected students, but also Postgraduate students”, begins paid an annual stipend sition would be part-time, had a costly effect on the having to deliver the PG “ensure that decisions and PAGE 20 in contrast to the seven progression of my PhD re- voice and opinion at many achievements of the GSU of £10,000, funded by paid sabbatical positions search...I will most likely College committees, some are clearly communicated the Graduate School. -

Rhizo-Memetic Art

RHIZO-MEMETIC ART: The Production and Curation of Transdisciplinary Performance James Alan Burrows Submitted in accordance with the requirements for the degree of Doctor of Philosophy Edge Hill University Department of Performing Arts October 2017 The candidate confirms that the work submitted is his own, and that appropriate credit has been given where reference has been made to the work of others. ACKNOWLEDGEMENTS The production of Rhizo-Memetic Art has involved the support, interest and commitment of many people. I would like to acknowledge and sincerely thank the various people who have been involved over the last four years. I would like to thank all of the artist-participants who have been involved with the various stages of the project, the many people who were involved with the performances and exhibition, my supervisory team and my colleagues in the Department of Performing Arts at Edge Hill University. I would especially like to thank Professor Victor Merriman for his constant sound advice and support. I extend my gratitude to Dr. Rachel Hann for guiding me towards a truer understanding of my own practice. In the same regard I’d like to thank Dr. Helen Newall – our conversations have sparked my imagination. I reserve the greatest of recognition for my parents, Yvonne and Edmund Burrows, whose kind words of encouragement have long sustained me. Lastly, I’d like to dedicate this work to Christopher Valentine, who has supported, challenged, discussed and nurtured this project alongside me, and who has put up with maximum possible disruption to our life together with good humour and love.