Secure, Network-Centric Operations of a Space-Based Asset: Cisco Router in Low Earth Orbit (CLEO) and Virtual Mission Operations Center (VMOC)

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

ODQN 10-1.Indd

National Aeronautics and Space Administration. Orbital Debris Quarterly News, Volume 10, Issue 1, January 2006. Inside...: Large Area Debris Collector (LAD-C) Update; Revision of Space Shuttle Wing Leading Edge Reinforced Carbon-Carbon Failure Criteria Based on Hypervelocity Impact and Arc-Jet Testing; Object Reentry Survivability Analysis Tool (ORSAT) – [...]. Collision Avoidance Maneuver Performed by NASA’s Terra Spacecraft: The Terra spacecraft, often referred to as the flagship of NASA’s Earth Observing System (EOS), successfully performed a small collision avoidance maneuver on 21 October 2005 to ensure safe passage by a piece of orbital debris two days later. This action demonstrated the effectiveness of a conjunction assessment procedure implemented in 2004 by personnel of the NASA Goddard Space Flight Center (GSFC) and the U.S. Space Surveillance Network (SSN). The trajectories of Terra and its companion EOS spacecraft are frequently compared with the orbits of thousands of objects tracked by the SSN to determine if an accidental collision is possible. [...]ignator 1983-063C, U.S. Satellite Number 14222) would come within 500 m of Terra on 23 October, GSFC and SSN personnel undertook a more detailed assessment of the coming conjunction. The Scout debris was in an orbit with an altitude similar to that of Terra (approximately 680 km by 710 km), but its posigrade inclination of 82.4° and different orbit plane meant that a collision would have occurred at a high velocity of nearly 12 km/s. By 21 October refined analysis of the future close approach indicated that the miss distance was only approximately 50 m with an uncertainty that yielded a probability -

Highlights in Space 2010

International Astronautical Federation, 94 bis, Avenue de Suffren, 75015 Paris, France. Tel: +33 1 45 67 42 60. Fax: +33 1 42 73 21 20. E-mail: [email protected] URL: www.iafastro.com. Committee on Space Research, c/o CNES, 2 place Maurice Quentin. Tel. + 33 1 44 76 75 10. Fax. + 33 1 44 76 74 37. E-mail: [email protected] URL: http://cosparhq.cnes.fr. International Institute of Space Law, 94 bis, Avenue de Suffren, 75015 Paris, France. E-mail: [email protected] URL: www.iislweb.com. UNITED NATIONS OFFICE FOR OUTER SPACE AFFAIRS. Highlights in Space 2010. Prepared in cooperation with the International Astronautical Federation, the Committee on Space Research and the International Institute of Space Law. The United Nations Office for Outer Space Affairs is responsible for promoting international cooperation in the peaceful uses of outer space and assisting developing countries in using space science and technology. United Nations Office for Outer Space Affairs, P. O. Box 500, 1400 Vienna, Austria. Tel: (+43-1) 26060-4950. Fax: (+43-1) 26060-5830. E-mail: [email protected] URL: www.unoosa.org. United Nations publication. Printed in Austria. USD 15. Sales No. E.11.I.3. ISBN 978-92-1-101236-1. ST/SPACE/57. V.11-80239, January 2011, 775. UNITED NATIONS OFFICE FOR OUTER SPACE AFFAIRS, UNITED NATIONS OFFICE AT VIENNA. Highlights in Space 2010. Prepared in cooperation with the International Astronautical Federation, the Committee on Space Research and the International Institute of Space Law. Progress in space science, technology and applications, international cooperation and space law. UNITED NATIONS, New York, 2011. United Nations publication, Sales No. -

Topsat: Demonstrating High Performance from a Low Cost Satellite

TOPSAT: DEMONSTRATING HIGH PERFORMANCE FROM A LOW COST SATELLITE W A Levett 1*, J. Laycock 1, E Baxter 1 1 QinetiQ, Cody Technology Park, Room 1058, A8 Building, Ively Road, Farnborough GU14 0LX, UK [email protected] KEY WORDS: Optical, Multispectral, Mobile, High resolution, Imagery, Small/micro satellites, Pushbroom, Disaster ABSTRACT: Advances in technology continue to yield improvements in satellite based remote sensing but can also be used to provide existing capability at a lower cost. This is the philosophy behind the TopSat demonstrator mission, which was built by a UK consortium led by QinetiQ, and including SSTL, RAL and Infoterra. The project is funded jointly by the British National Space Centre (BNSC) and UK MoD. TopSat has demonstrated the ability to build a low cost satellite capable of generating high quality imagery (2.8m panchromatic resolution on nadir, 5.6m multispectral). Since it was successfully launched on 27th October 2005, it has down-linked imagery daily to a fixed QinetiQ ground station in the UK in order to support a wide range of users. Rapid data delivery has also been demonstrated using a low cost mobile ground station. The main achievement of the project is the fact that this capability has been achieved for such a relatively low cost. The cost of the entire programme (including the imager and all other development, build, launch, commissioning and ground segment costs) has been kept within a budget of approximately 20M euros. TopSat enables a low cost, whole mission approach to high-timeliness imagery. The low cost of the satellite makes a small constellation feasible for improved revisit times. -

<> CRONOLOGIA DE LOS SATÉLITES ARTIFICIALES DE LA

ARTIFICIAL SATELLITES. Chapter 5, Subchapter 10. CHRONOLOGY OF THE ARTIFICIAL SATELLITES OF THE EARTH. This is a chronological list of all launches of artificial satellites of our planet, regardless of their success or failure, whether at launch or in orbit. This means that many of them never reached space and were destroyed. Each entry gives first (on the left) the satellite's name, followed by the launch date, the country the satellite belongs to (which may differ from the country that launched it) and the type of satellite; the type may not be exact, since some satellites serve multiple purposes. In multiple launches, each satellite is listed separately (except in failures where the satellites never separated), but naturally under the same date and together. Satellites carried on crewed flights are NOT included, although they are mentioned in the corresponding satellite program and in the chapter “General chronology of launches”.

| Satellite | Date | Country | Type |
|---|---|---|---|
| SPUTNIK F1 | 15.05.1957 | USSR | Experimental or technological |
| SPUTNIK F2 | 21.08.1957 | USSR | Experimental or technological |
| SPUTNIK 01 | 04.10.1957 | USSR | Experimental or technological |
| SPUTNIK 02 | 03.11.1957 | USSR | Scientific |
| VANGUARD-1A | 06.12.1957 | USA | Experimental or technological |
| EXPLORER 01 | 31.01.1958 | USA | Scientific |
| VANGUARD-1B | 05.02.1958 | USA | Experimental or technological |
| EXPLORER 02 | 05.03.1958 | USA | Scientific |
| VANGUARD-1 | 17.03.1958 | USA | Experimental or technological |
| EXPLORER 03 | 26.03.1958 | USA | Scientific |
| SPUTNIK D1 | 27.04.1958 | USSR | Geodetic |
| VANGUARD-2A | | | |

2013 Commercial Space Transportation Forecasts

Federal Aviation Administration. 2013 Commercial Space Transportation Forecasts. May 2013. FAA Commercial Space Transportation (AST) and the Commercial Space Transportation Advisory Committee (COMSTAC). About the FAA Office of Commercial Space Transportation: The Federal Aviation Administration’s Office of Commercial Space Transportation (FAA AST) licenses and regulates U.S. commercial space launch and reentry activity, as well as the operation of non-federal launch and reentry sites, as authorized by Executive Order 12465 and Title 51 United States Code, Subtitle V, Chapter 509 (formerly the Commercial Space Launch Act). FAA AST’s mission is to ensure public health and safety and the safety of property while protecting the national security and foreign policy interests of the United States during commercial launch and reentry operations. In addition, FAA AST is directed to encourage, facilitate, and promote commercial space launches and reentries. Additional information concerning commercial space transportation can be found on FAA AST’s website: http://www.faa.gov/go/ast Cover: The Orbital Sciences Corporation’s Antares rocket is seen as it launches from Pad-0A of the Mid-Atlantic Regional Spaceport at the NASA Wallops Flight Facility in Virginia, Sunday, April 21, 2013. Image Credit: NASA/Bill Ingalls. NOTICE: Use of trade names or names of manufacturers in this document does not constitute an official endorsement of such products or manufacturers, either expressed or implied, by the Federal Aviation Administration. Table of Contents: EXECUTIVE SUMMARY; COMSTAC 2013 COMMERCIAL GEOSYNCHRONOUS ORBIT LAUNCH DEMAND FORECAST -

Alternative Futures: United States Commercial Satellite Imagery in 2020

1455 Pennsylvania Avenue, NW, Suite 415, Washington, DC 20004. Phone: 202-280-2045. www.innovative-analytics.com. Alternative Futures: United States Commercial Satellite Imagery in 2020. Robert A. Weber and Kevin M. O’Connell. November 2011. Contact Information: Kevin O’Connell, President and CEO, Innovative Analytics and Training, LLC, 1455 Pennsylvania Ave NW, Suite 415, Washington, DC 20004. www.innovative-analytics.com. Phone 202-280-2045 x1. Prepared for: Department of Commerce, National Oceanic and Atmospheric Administration, National Environmental Satellite, Data, and Information Service, Commercial Remote Sensing Regulatory Affairs. Innovative Analytics and Training, LLC. Proprietary 2012. Foreword: This independent study, sponsored by the U.S. Department of Commerce in late 2010, posits three alternative futures for U.S. commercial satellite imagery in 2020. It begins with a detailed history of the U.S. policy and regulatory environment for remote sensing commercialization, including many of the assumptions made about U.S. government and commercial interests, international competition, security issues that relate to the proliferation of remote sensing data and technology, and others. In many ways, it reflects a brilliant American vision that has sometimes stumbled in implementation. Following a discussion about remote sensing technologies, and how they are changing, the report goes on to describe three alternative futures for U.S. commercial satellite imagery in 2020, with a special emphasis on the U.S. high-resolution electro-optical firms. The reader should note that, by definition, none of these futures is “correct” nor reflects a prediction or a preference in any way. Alternative futures methodologies are designed to identify plausible futures, and their underlying factors and drivers, in such a way as to allow stakeholders to understand important directions for a given issue, including important signposts to monitor as reflective of movement toward those (or perhaps other) futures. -

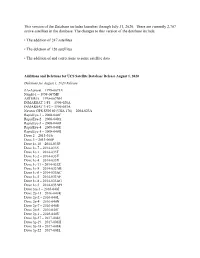

This Version of the Database Includes Launches Through July 31, 2020

This version of the Database includes launches through July 31, 2020. There are currently 2,787 active satellites in the database. The changes to this version of the database include: • The addition of 247 satellites • The deletion of 126 satellites • The addition of and corrections to some satellite data Additions and Deletions for UCS Satellite Database Release August 1, 2020 Deletions for August 1, 2020 Release ZA-Aerosat – 1998-067LU Nsight-1 – 1998-067MF ASTERIA – 1998-067NH INMARSAT 3-F1 – 1996-020A INMARSAT 3-F2 – 1996-053A Navstar GPS SVN 60 (USA 178) – 2004-023A RapidEye-1 – 2008-040C RapidEye-2 – 2008-040A RapidEye-3 – 2008-040D RapidEye-4 – 2008-040E RapidEye-5 – 2008-040B Dove 2 – 2013-015c Dove 3 – 2013-066P Dove 1c-10 – 2014-033P Dove 1c-7 – 2014-033S Dove 1c-1 – 2014-033T Dove 1c-2 – 2014-033V Dove 1c-4 – 2014-033X Dove 1c-11 – 2014-033Z Dove 1c-9 – 2014-033AB Dove 1c-6 – 2014-033AC Dove 1c-5 – 2014-033AE Dove 1c-8 – 2014-033AG Dove 1c-3 – 2014-033AH Dove 3m-1 – 2016-040J Dove 2p-11 – 2016-040K Dove 2p-2 – 2016-040L Dove 2p-4 – 2016-040N Dove 2p-7 – 2016-040S Dove 2p-5 – 2016-040T Dove 2p-1 – 2016-040U Dove 3p-37 – 2017-008F Dove 3p-19 – 2017-008H Dove 3p-18 – 2017-008K Dove 3p-22 – 2017-008L Dove 3p-21 – 2017-008M Dove 3p-28 – 2017-008N Dove 3p-26 – 2017-008P Dove 3p-17 – 2017-008Q Dove 3p-27 – 2017-008R Dove 3p-25 – 2017-008S Dove 3p-1 – 2017-008V Dove 3p-6 – 2017-008X Dove 3p-7 – 2017-008Y Dove 3p-5 – 2017-008Z Dove 3p-9 – 2017-008AB Dove 3p-10 – 2017-008AC Dove 3p-75 – 2017-008AH Dove 3p-73 – 2017-008AK Dove 3p-36 – -

Financial Operational Losses in Space Launch

UNIVERSITY OF OKLAHOMA GRADUATE COLLEGE. FINANCIAL OPERATIONAL LOSSES IN SPACE LAUNCH. A DISSERTATION SUBMITTED TO THE GRADUATE FACULTY in partial fulfillment of the requirements for the Degree of DOCTOR OF PHILOSOPHY. By TOM ROBERT BOONE, IV. Norman, Oklahoma, 2017. A DISSERTATION APPROVED FOR THE SCHOOL OF AEROSPACE AND MECHANICAL ENGINEERING BY Dr. David Miller, Chair; Dr. Alfred Striz; Dr. Peter Attar; Dr. Zahed Siddique; Dr. Mukremin Kilic. © Copyright by TOM ROBERT BOONE, IV 2017. All rights reserved. “For which of you, intending to build a tower, sitteth not down first, and counteth the cost, whether he have sufficient to finish it?” Luke 14:28, KJV. Contents: 1 Introduction (1.1 Overview of Operational Losses; 1.2 Structure of Dissertation); 2 Literature Review; 3 Payload Trends; 4 Launch Vehicle Trends; 5 Capability of Launch Vehicles; 6 Wastage of Launch Vehicle Capacity; 7 Optimal Usage of Launch Vehicles; 8 Optimal Arrangement of Payloads; 9 Risk of Multiple Payload Launches; 10 Conclusions (10.1 Review of Dissertation; 10.2 Future Work); Bibliography; A Payload Database; B Launch Vehicle Database. List of Figures: 3.1 Payloads By Orbit, 2000-2013; 3.2 Payload Mass By Orbit, 2000-2013; 3.3 Number of Payloads of Mass, 2000-2013; 3.4 Total Mass of Payloads in kg by Individual Mass, 2000-2013; 3.5 Number of LEO Payloads of Mass, 2000-2013; 3.6 Number of GEO Payloads of Mass, 2000-2013 -

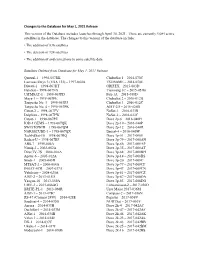

Changes to the Database for May 1, 2021 Release This Version of the Database Includes Launches Through April 30, 2021

Changes to the Database for May 1, 2021 Release This version of the Database includes launches through April 30, 2021. There are currently 4,084 active satellites in the database. The changes to this version of the database include: • The addition of 836 satellites • The deletion of 124 satellites • The addition of and corrections to some satellite data Satellites Deleted from Database for May 1, 2021 Release Quetzal-1 – 1998-057RK ChubuSat 1 – 2014-070C Lacrosse/Onyx 3 (USA 133) – 1997-064A TSUBAME – 2014-070E Diwata-1 – 1998-067HT GRIFEX – 2015-003D HaloSat – 1998-067NX Tianwang 1C – 2015-051B UiTMSAT-1 – 1998-067PD Fox-1A – 2015-058D Maya-1 -- 1998-067PE ChubuSat 2 – 2016-012B Tanyusha No. 3 – 1998-067PJ ChubuSat 3 – 2016-012C Tanyusha No. 4 – 1998-067PK AIST-2D – 2016-026B Catsat-2 -- 1998-067PV ÑuSat-1 – 2016-033B Delphini – 1998-067PW ÑuSat-2 – 2016-033C Catsat-1 – 1998-067PZ Dove 2p-6 – 2016-040H IOD-1 GEMS – 1998-067QK Dove 2p-10 – 2016-040P SWIATOWID – 1998-067QM Dove 2p-12 – 2016-040R NARSSCUBE-1 – 1998-067QX Beesat-4 – 2016-040W TechEdSat-10 – 1998-067RQ Dove 3p-51 – 2017-008E Radsat-U – 1998-067RF Dove 3p-79 – 2017-008AN ABS-7 – 1999-046A Dove 3p-86 – 2017-008AP Nimiq-2 – 2002-062A Dove 3p-35 – 2017-008AT DirecTV-7S – 2004-016A Dove 3p-68 – 2017-008BH Apstar-6 – 2005-012A Dove 3p-14 – 2017-008BS Sinah-1 – 2005-043D Dove 3p-20 – 2017-008C MTSAT-2 – 2006-004A Dove 3p-77 – 2017-008CF INSAT-4CR – 2007-037A Dove 3p-47 – 2017-008CN Yubileiny – 2008-025A Dove 3p-81 – 2017-008CZ AIST-2 – 2013-015D Dove 3p-87 – 2017-008DA Yaogan-18 -

Small-Satellite Mission Failure Rates

NASA/TM-2018-220034. Small-Satellite Mission Failure Rates. Stephen A. Jacklin, NASA Ames Research Center, Moffett Field, CA. March 2019. This page is required and contains approved text that cannot be changed. NASA STI Program ... in Profile: Since its founding, NASA has been dedicated to the advancement of aeronautics and space science. The NASA scientific and technical information (STI) program plays a key part in helping NASA maintain this important role. The NASA STI program operates under the auspices of the Agency Chief Information Officer. It collects, organizes, provides for archiving, and disseminates NASA’s STI. The NASA STI program provides access to the NTRS Registered and its public interface, the NASA Technical Reports Server, thus providing one of the largest collections of aeronautical and space science STI in the world. Results are published in both non-NASA channels and by NASA in the NASA STI Report Series, which includes the following report types: TECHNICAL PUBLICATION. Reports of completed research or a major significant phase of research that present the results of NASA Programs and include extensive data [...] CONFERENCE PUBLICATION. Collected papers from scientific and technical conferences, symposia, seminars, or other meetings sponsored or co-sponsored by NASA. SPECIAL PUBLICATION. Scientific, technical, or historical information from NASA programs, projects, and missions, often concerned with subjects having substantial public interest. TECHNICAL TRANSLATION. English-language translations of foreign scientific and technical material pertinent to NASA’s mission. Specialized services also include organizing and publishing research results, distributing specialized research announcements and feeds, providing information desk and personal search support, and enabling data exchange services. -

Building the Architecture for Sustainable Space Security

UNIDIR/2006/17. Building the Architecture for Sustainable Space Security. Conference Report, 30–31 March 2006. UNIDIR, United Nations Institute for Disarmament Research, Geneva, Switzerland. NOTE: The designations employed and the presentation of the material in this publication do not imply the expression of any opinion whatsoever on the part of the Secretariat of the United Nations concerning the legal status of any country, territory, city or area, or of its authorities, or concerning the delimitation of its frontiers or boundaries. The views expressed in this publication are the sole responsibility of the individual authors. They do not necessarily reflect the views or opinions of the United Nations, UNIDIR, its staff members or sponsors. Copyright © United Nations, 2006. All rights reserved. UNITED NATIONS PUBLICATION, Sales No. GV.E.06.0.14, ISBN 92-9045-185-8. The United Nations Institute for Disarmament Research (UNIDIR), an intergovernmental organization within the United Nations, conducts research on disarmament and security. UNIDIR is based in Geneva, Switzerland, the centre for bilateral and multilateral disarmament and non-proliferation negotiations, and home of the Conference on Disarmament. The Institute explores current issues pertaining to the variety of existing and future armaments, as well as global diplomacy and local tensions and conflicts. Working with researchers, diplomats, government officials, NGOs and other institutions since 1980, UNIDIR acts as a bridge between the research community and policy makers. UNIDIR’s activities are funded by contributions from governments and donor foundations. The Institute’s web site can be found at: www.unidir.org CONTENTS: Foreword by Patricia Lewis