Deep Multitask Learning Through Soft Layer Ordering



Recommended publications


Runes Free Download

RUNES FREE DOWNLOAD. Martin Findell | 112 pages | 24 Mar 2014 | BRITISH MUSEUM PRESS | 9780714180298 | English | London, United Kingdom. Runic alphabet. He […] rune magic to Freya and learned Seidr from her. The runes were in use among the Germanic peoples from the 1st or 2nd century AD. They are found in Scandinavia and Viking Age settlements abroad, probably in use from the 9th century onward. From the "golden age of philology" in the 19th century, runology formed a specialized branch of Germanic linguistics. There are no horizontal strokes: when carving a message on a flat staff or stick, a horizontal stroke would run along the grain, making it both less legible and more likely to split the wood. Little is known about the origins of the Runic alphabet, which is traditionally known as futhark after the first six letters. That is now proved, what you asked of the runes, of the potent famous ones, which the great gods made, and the mighty sage stained, that it is best for him if he stays silent. It was the main alphabet in Norway, Sweden and Denmark throughout the Viking Age, but was largely, though not completely, replaced by the Latin alphabet by about […] as a result of the conversion of Scandinavia to Christianity. It was probably used from the 5th century onward. Incessantly plagued by maleficence, doomed to insidious death is he who breaks this monument. These inscriptions are generally in Elder Futhark, but the set of letter shapes and bindrunes employed is far from standardized.

Assessment of Options for Handling Full Unicode Character Encodings in MARC21 a Study for the Library of Congress

Assessment of Options for Handling Full Unicode Character Encodings in MARC21: A Study for the Library of Congress. Part 1: New Scripts. Jack Cain, Senior Consultant, Trylus Computing, Toronto. 1 Purpose. This assessment intends to study the issues and make recommendations on the possible expansion of the character set repertoire for bibliographic records in MARC21 format. 1.1 “Encoding Scheme” vs. “Repertoire”. An encoding scheme contains codes by which characters are represented in computer memory. These codes are organized according to a certain methodology called an encoding scheme. The list of all characters so encoded is referred to as the “repertoire” of characters in the given encoding scheme. For example, ASCII is one encoding scheme, perhaps the one best known to the average non-technical person in North America. “A”, “B”, and “C” are three characters in the repertoire of this encoding scheme. These three characters are assigned encodings 41, 42, and 43 in ASCII (expressed here in hexadecimal). 1.2 MARC8. "MARC8" is the term commonly used to refer both to the encoding scheme and its repertoire as used in MARC records up to 1998. The ‘8’ refers to the fact that, unlike Unicode, which is a multi-byte per character code set, the MARC8 encoding scheme is principally made up of multiple one-byte tables in which each character is encoded using a single 8-bit byte. (It also includes the EACC set, which actually uses a fixed length of 3 bytes per character.) (For details on MARC8 and its specifications see: http://www.loc.gov/marc/.) MARC8 was introduced around 1968 and was initially limited to essentially Latin script only.
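The distinction between repertoire and encoding scheme in the excerpt above can be illustrated with a minimal Python sketch. The names `repertoire` and `ascii_hex` are hypothetical and chosen only for this illustration; the UTF-8 line is an added example of a multi-byte encoding, not something taken from the study.

```python
# The repertoire is the set of characters; the encoding scheme is the rule
# that assigns each character a code. Names here are illustrative only.
repertoire = ["A", "B", "C"]


def ascii_hex(ch: str) -> str:
    """Return the ASCII code of one character as two hexadecimal digits."""
    return format(ch.encode("ascii")[0], "02X")


codes = {ch: ascii_hex(ch) for ch in repertoire}
print(codes)  # {'A': '41', 'B': '42', 'C': '43'}

# By contrast, a Unicode encoding such as UTF-8 may need several bytes for
# one character: U+00E9 (e with acute accent) occupies two bytes.
utf8_len = len("\u00e9".encode("utf-8"))
print(utf8_len)  # 2
```

The hexadecimal values match the 41, 42, and 43 quoted in the excerpt.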

The BrailleMathCodes Repository

Proceedings of the 4th International Workshop on "Digitization and E-Inclusion in Mathematics and Science 2021" DEIMS2021, February 18–19, 2021, Tokyo. The BrailleMathCodes Repository. Paraskevi Riga1, Theodora Antonakopoulou1, David Kouvaras1, Serafim Lentas1, and Georgios Kouroupetroglou1. 1National and Kapodistrian University of Athens, Greece, Speech and Accessibility Laboratory, Department of Informatics and Telecommunications. Abstract. Math notation for the sighted is a global language, but this is not the case with braille math, as different codes are in use worldwide. In this work, we present the design and development of a repository of math braille codes named BrailleMathCodes. It aims to constitute a knowledge base as well as a search engine for students who need to find a specific symbol code, for editors who produce accessible STEM educational content, and, in general, for learners of math braille notation. After compiling a set of mathematical braille codes used worldwide in a database, we assigned the corresponding Unicode representation, when applicable, matched each math braille code with its LaTeX equivalent, and forwarded with Presentation MathML. Every math symbol is accompanied by a characteristic example in MathML and Nemeth. The BrailleMathCodes repository was designed following the Web Content Accessibility Guidelines. Users or learners of any code, both sighted and blind, can search for a term and read how it is rendered in various codes. The repository was implemented as a dynamic e-commerce website using Joomla! and VirtueMart. 1 Introduction. Braille constitutes a tactile writing system used by people who are visually impaired.

Development of Educational Materials

InSIDE: Including Students with Impairments in Distance Education Delivery. DEV2.1: Development of educational materials. Authors: Eleni Koustriava1, Konstantinos Papadopoulos1, Konstantinos Charitakis1. Partner: University of Macedonia (UOM)1, Johannes Kepler University (JKU). Work Package: WP2: Adapted educational material. Issue Date: 31-05-2020. Report Status: Final. This project (598763-EPP-1-2018-1-EL-EPPKA2-CBHE-JP) has been co-funded by the Erasmus+ Programme of the European Commission. This publication [communication] reflects the views only of the authors, and the Commission cannot be held responsible for any use which may be made of the information contained therein. Project Partners: University of Macedonia, Greece (Coordinator); National and Kapodistrian University of Athens, Greece; Johannes Kepler University, Austria; University of Aboubekr Belkaid Tlemcen, Algeria; Mouloud Mammeri University of Tizi-Ouzou, Algeria; Blida 2 University, Algeria; University of Sciences and Technology of Oran Mohamed Boudiaf, Algeria; Ibn Tofail University, Morocco; Cadi Ayyad University, Morocco; University of Sfax, Tunisia; Abdelmalek Essaadi University, Morocco; University of Tunis El Manar, Tunisia; University of Mohammed V in Rabat, Morocco; University of Sousse, Tunisia. Project Information. Project Number: 598763-EPP-1-2018-1-EL-EPPKA2-CBHE-JP. Grant Agreement Number: 2018-3218 /001-001. Action code: CBHE-JP. Project Acronym: InSIDE. Project Title

Design and Developing Methodology for 8-Dot Braille Code Systems

Design and Developing Methodology for 8-dot Braille Code Systems. Hernisa Kacorri1,2 and Georgios Kouroupetroglou1. 1 National and Kapodistrian University of Athens, Department of Informatics and Telecommunications, Panepistimiopolis, Ilisia, 15784 Athens, Greece, {c.katsori,koupe}@di.uoa.gr. 2 The City University of New York (CUNY), Doctoral Program in Computer Science, The Graduate Center, 365 Fifth Ave, New York, NY 10016 USA. Abstract. Braille code, employing six embossed dots evenly arranged in rectangular letter spaces or cells, constitutes the dominant touch reading or typing system for the blind. Limited to 63 possible dot combinations per cell, there are a number of application examples, such as mathematics and sciences, and assistive technologies, such as braille displays, in which the 6-dot cell braille is extended to 8-dot. This work proposes a language-independent methodology for the systematic development of an 8-dot braille code. Moreover, a set of design principles is introduced that focuses on: achieving an abbreviated representation of the supported symbols, retaining connectivity with the 6-dot representation, preserving similarity on the transition rules applied in other languages, removing ambiguities, and considering future extensions. The proposed methodology was successfully applied in the development of an 8-dot literary Greek braille code that covers both the modern and the ancient Greek orthography, including diphthongs, digits, and punctuation marks. Keywords: document accessibility, braille, 8-dot braille, assistive technologies. 1 Introduction. Braille code, employing six embossed dots evenly arranged in quadrangular letter spaces or cells [2], constitutes the main system of touch reading for the blind.
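The figure of 63 combinations quoted in the abstract above follows from simple counting: each of the six dots is either raised or flat, giving 2^6 = 64 patterns, one of which is the blank cell. The Python sketch below works through that arithmetic for 6-dot and 8-dot cells. The function names are hypothetical, and the mapping onto the Unicode Braille Patterns block (U+2800 onward, dot n stored in bit n-1) is an added illustration rather than part of the paper's methodology.

```python
from typing import Iterable


def count_patterns(dots: int, include_blank: bool = False) -> int:
    """Count the dot patterns of a cell in which each dot is raised or flat."""
    total = 2 ** dots
    return total if include_blank else total - 1


def braille_cell(raised: Iterable[int]) -> str:
    """Map raised dot numbers (1 to 8) to a Unicode Braille Patterns character.

    The block starts at U+2800, and dot n corresponds to bit n-1.
    """
    mask = 0
    for dot in raised:
        if not 1 <= dot <= 8:
            raise ValueError(f"dot number out of range: {dot}")
        mask |= 1 << (dot - 1)
    return chr(0x2800 + mask)


six_dot = count_patterns(6)    # 63 non-blank patterns
eight_dot = count_patterns(8)  # 255 non-blank patterns
print(six_dot, eight_dot)
print(braille_cell([1]), braille_cell([1, 2]))  # code points U+2801 and U+2803
```

Extending the cell from six to eight dots therefore raises the number of non-blank patterns from 63 to 255, which is the extra room an 8-dot code can use for additional symbols.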

A New Research Resource for Optical Recognition of Embossed and Hand-Punched Hindi Devanagari Braille Characters: Bharati Braille Bank

I.J. Image, Graphics and Signal Processing, 2015, 6, 19-28. Published Online May 2015 in MECS (http://www.mecs-press.org/). DOI: 10.5815/ijigsp.2015.06.03. A New Research Resource for Optical Recognition of Embossed and Hand-Punched Hindi Devanagari Braille Characters: Bharati Braille Bank. Shreekanth.T, Research Scholar, JSS Research Foundation, Mysore, India. V.Udayashankara, Professor, Department of IT, SJCE, Mysore, India. Abstract: To develop a Braille recognition system, it is required to have the stored images of Braille sheets. This paper describes a method and also the challenges of building the corpora for Hindi Devanagari Braille. A few Braille databases and commercial software packages are obtainable for English and Arabic Braille languages, but none for Indian Braille, which is popularly known as Bharathi Braille. However, the size and scope of the English and Arabic Braille language databases are limited. Researchers frequently develop and self-evaluate their algorithm based on the same private data set and […] I. INTRODUCTION. Braille is a language for the blind to read and write through the sense of touch. Braille was formatted to a standard size by Frenchman Louis Braille in 1825. Braille is a system of raised dots arranged in cells. Any combination of one to six dots may be raised within each cell, and the number and position of the raised dots within a cell convey to the reader the letter, word, number, or symbol the cell exemplifies. There are 64 possible combinations of raised dots within a single cell.

The fontspec Package: Font Selection for XeLaTeX and LuaLaTeX

The fontspec package: Font selection for XeLaTeX and LuaLaTeX. Will Robertson and Khaled Hosny. 2013/05/12 v2.3b. Contents: 1 History. 2 Introduction: 2.1 About this manual; 2.2 Acknowledgements. 3 Package loading and options: 3.1 Maths fonts adjustments; 3.2 Configuration; 3.3 Warnings. I General font selection. 4 Font selection: 4.1 By font name; 4.2 By file name. 5 Default font families. 6 New commands to select font families: 6.1 More control over font shape selection; 6.2 Math(s) fonts; 6.3 Miscellaneous font selecting details. 7 Selecting font features: 7.1 Default settings; 7.2 Changing the currently selected features; […]; 7.5 Different features for different font sizes. 8 Font independent options: 8.1 Colour; 8.2 Scale; 8.3 Interword space; 8.4 Post-punctuation space; 8.5 The hyphenation character; 8.6 Optical font sizes. II OpenType. 9 Introduction: 9.1 How to select font features. 10 Complete listing of OpenType font features: 10.1 Ligatures; 10.2 Letters; 10.3 Numbers; 10.4 Contextuals; 10.5 Vertical Position; 10.6 Fractions; 10.7 Stylistic Set variations; 10.8 Character Variants; 10.9 Alternates; 10.10 Style; 10.11 Diacritics; 10.12 Kerning; 10.13 Font transformations

A Study of Writing

oi.uchicago.edu. A STUDY OF WRITING. Revised Edition. I. J. Gelb. Phoenix Books, The University of Chicago Press. This book is also available in a clothbound edition from The University of Chicago Press. To the Mokstads. The University of Chicago Press, Chicago & London; The University of Toronto Press, Toronto 5, Canada. Copyright 1952 in the International Copyright Union. All rights reserved. Published 1952. Second Edition 1963. First Phoenix Impression 1963. Printed in the United States of America. PREFACE. The book contains twelve chapters, but it can be broken up structurally into five parts. First, the place of writing among the various systems of human intercommunication is discussed. This is followed by four chapters devoted to the descriptive and comparative treatment of the various types of writing in the world. The sixth chapter deals with the evolution of writing from the earliest stages of picture writing to a full alphabet. The next four chapters deal with general problems, such as the future of writing and the relationship of writing to speech, art, and religion. Of the two final chapters, one contains the first attempt to establish a full terminology of writing, the other an extensive bibliography. The aim of this study is to lay a foundation for a new science of writing which might be called grammatology. While the general histories of writing treat individual writings mainly from a descriptive-historical point of view, the new science attempts to establish general principles governing the use and evolution of writing on a comparative-typological basis.

2013 Cover Page M1

iSTEP 2013: Development and Assessment of Assistive Technology for a School for the Blind in India. Madeleine Clute, Madelyn Gioffre, Poornima Kaniarasu, Aditya Kodkany, Vivek Nair, Shree Lakshmi Rao, Aveed Sheikh, Avia Weinstein, Ermine A. Teves, M. Beatrice Dias, M. Bernardine Dias. CMU-RI-TR-16-30. The Robotics Institute, Carnegie Mellon University, Pittsburgh, Pennsylvania 15213. June 2016. Copyright © 2016 TechBridgeWorld at Carnegie Mellon University. Acknowledgements. We extend sincere and heartfelt gratitude to our partner, the Mathru Educational Trust for the Blind, for mentoring and advising us throughout the duration of the iSTEP research internship. The authors would especially like to thank Ms. Muktha for giving us the opportunity to work with the Mathru School for the Blind. We also thank all the students and the teaching and non-teaching staff for their cooperation in working with us and for providing us with a wealth of information that has assisted us in completing this research. In addition, we would like to acknowledge Carnegie Mellon University for providing the academic infrastructure necessary to make our work possible. Abstract. Assistive devices for the visually impaired are often prohibitively expensive for potential users in developing communities. This report describes field research aimed at developing and assessing assistive technology that is economically accessible and relevant to this population of visually impaired individuals. The work discussed was completed at the Mathru School for the Blind as part of the iSTEP internship program, which is organized by CMU’s TechBridgeWorld research group.

Nemeth Code Uses Some Parts of Textbook Format but Has Some Idiosyncrasies of Its Own

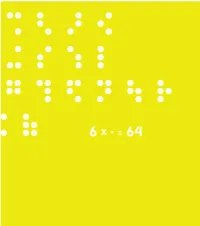

This book is a compilation of research in “Understanding and Tracing the Problem faced by the Visually Impaired while doing Mathematics”, a Diploma project by Aarti Vashisht at the Srishti School of Art, Design and Technology, Bangalore. 6 DOTS, 64 COMBINATIONS. A Braille character is formed out of a combination of six dots. Including the blank space, sixty-four combinations are possible using one or more of these six dots. CONTENTS: Introduction; About Braille; Mathematics for the Visually Impaired; Learning Mathematics; Conclusion; People and Places; Acknowledgements. INTRODUCTION. This project tries to understand the nature of the problems that are faced by the visually impaired within the realm of mathematics. It is a summary of my understanding of the problems in this field that may be taken forward to guide those individuals who are concerned about this subject. My education in design has encouraged interest in this field. As a designer I have learnt to be aware of my community and its needs, and to detect areas where design can reach out and assist, if not resolve, a problem. Thus began my search, in which I sought a fuller understanding of the situation by looking at the various mediums that would help better communication. During the project I realized that, more often than not, work happened in individual pockets, which in turn led to the regionalization of many ideas and opportunities. Data collection became repetitive, which would delay or sometimes even hinder the process.

Karnataka State Branch #36, 100 Feet Road, Veerabhadra Nagar, B.S.K

NFB Regd. No. 4866. NATIONAL FEDERATION OF THE BLIND (Affiliated to World Blind Union), Karnataka State Branch, #36, 100 Feet Road, Veerabhadra Nagar, B.S.K. 3rd Stage, Bangalore-85. Souvenir, 10th Anniversary, 4th January 2015. Phone No.: 26728845. Mobile: 9880537783, 8197900171. Fax: 26729479. Published by: National Federation of the Blind. © Copyright: National Federation of the Blind. Chief Editor: Goutham Agarwal, General Secretary, NFB, Karnataka. Editor: Prasanna Udipikar, HoD of English, VVN Degree College, Bangalore. Printed at: Omkar Offset Printers, Nm. 3/4, 1st Main Road, New Tharagupet, Bangalore - 560 002. Telefax: +91 080 2670 8186 / 87, 2670 9026. Website: www.omkaroffset.com. Contents: NFB - a movement for rights of PWD’s; Voice of the Blind - NFB (Karnataka); A Decade of Success at Glance; Brief Life Sketch of Louis Braille; What Is Braille Script?; Government Assistance for the Visually Impaired; Employment Schemes; Social Security Benefits; Causes and Types of Blindness; Prevention of Blindness; Higher Education is no Longer a Challenge for Women With Disability; Schools For Visually Challenged Children; Schemes of National Scholarship for students with disability; Some legal and functional definitions of Disability; Population of Visual Disability in India. Contact Us: 1. NFB Karnataka principal office, #36, 100 Feet Road, Veerabhadra Nagar, B.S.K. 3rd Stage, Bangalore-560085. Ph: 080-26728845. Fax: 080-26729479. Mob: 9916368800. 2. National Federation of the Blind (India), National office New Delhi, Plot No.21, Sector–VI, M.B.Road, Pushpavihar, New Delhi-110 017. Ph: 91-11-29564198. 3. National Federation of the Blind Braille cum talking library & Assistive Devices of Aids & appliances supply service. Mob: 8197900171. 4. NFB for 3% reserving for the disabled.

Writing As Language Technology

HG2052 Language, Technology and the Internet. Writing as Language Technology. Francis Bond, Division of Linguistics and Multilingual Studies, http://www3.ntu.edu.sg/home/fcbond/. Lecture 2. HG2052 (2021); CC BY 4.0. Overview: the origins of writing; different writing systems; representing writing on computers; writing versus talking. The Origins of Writing: Writing was invented independently in at least three places: Mesopotamia, China, and Mesoamerica; possibly also Egypt (Earliest Egyptian Glyphs) and the Indus valley. The written records are incomplete. Writing developed gradually from pictures and tallies. Follow the money: Before 2700, writing is only accounting: temple and palace accounts of gold, wheat, and sheep. How it developed: one token per thing (in a clay envelope); one token per thing in the envelope and marked on the outside; one mark per thing; one mark and a symbol for the number; finally, symbols for names. (Denise Schmandt-Besserat (1997), How Writing Came About, University of Texas Press.) [Figures: Clay Tokens and Envelope; Clay Tablet.] Writing systems used for human languages: What is writing? A system of more or less permanent marks used to represent an utterance in such a way that it can be recovered more or less exactly without the intervention of the utterer (Peter T. Daniels, The World’s Writing Systems). Different types of writing systems are used: alphabetic, syllabic, logographic