Testing a Coupled Global-Limited-Area Data Assimilation

Total pages: 16

File type: PDF, size: 1020 Kb

Recommended publications

P1.24 A Typhoon Loss Estimation Model for China

P1.24 A TYPHOON LOSS ESTIMATION MODEL FOR CHINA Peter J. Sousounis*, H. He, M. L. Healy, V. K. Jain, G. Ljung, Y. Qu, and B. Shen-Tu AIR Worldwide Corporation, Boston, MA 1. INTRODUCTION Nowhere else in the world do tropical cyclones (TCs) develop more frequently than in the Northwest Pacific Basin. Nearly thirty TCs are spawned each year, 20 of which reach hurricane or typhoon status (cf. Fig. 1). Five of these reach super typhoon status, with windspeeds over 130 kts. In contrast, the North Atlantic typically generates only ten TCs, seven of which reach hurricane status. Additionally, there is no other country in the world where TCs strike with more frequency than in China. Nearly ten landfalling TCs occur in a typical year, with one to two additional by-passing storms coming close enough to the coast to [...] the two. Because of its wind intensity (135 mph maximum sustained winds), it has been compared to Hurricane Katrina 2005. But Saomai was short lived, and although it made landfall as a strong Category 4 storm and generated heavy precipitation, it weakened quickly. Still, economic losses were ~12 B RMB (~1.5 B USD). In contrast, Bilis, which made landfall a month earlier just south of where Saomai hit, was actually only tropical storm strength at landfall with max sustained winds of 70 mph. Bilis weakened further still upon landfall but turned southwest and traveled slowly over a period of five days across Hunan, Guangdong, Guangxi and Yunnan Provinces. It generated copious amounts of precipitation, with large areas receiving more than 300 mm.

An Efficient Method for Simulating Typhoon Waves Based on A

Journal of Marine Science and Engineering Article An Efficient Method for Simulating Typhoon Waves Based on a Modified Holland Vortex Model Lvqing Wang 1,2,3, Zhaozi Zhang 1,*, Bingchen Liang 1,2,*, Dongyoung Lee 4 and Shaoyang Luo 3 1 Shandong Province Key Laboratory of Ocean Engineering, Ocean University of China, 238 Songling Road, Qingdao 266100, China; [email protected] 2 College of Engineering, Ocean University of China, 238 Songling Road, Qingdao 266100, China 3 NAVAL Research Academy, Beijing 100070, China; [email protected] 4 Korea Institute of Ocean, Science and Technology, Busan 600-011, Korea; [email protected] * Correspondence: [email protected] (Z.Z.); [email protected] (B.L.) Received: 20 January 2020; Accepted: 23 February 2020; Published: 6 March 2020 Abstract: A combination of the WAVEWATCH III (WW3) model and a modified Holland vortex model is developed and studied in the present work. The Holland 2010 model is modified with two improvements: the first is a new scaling parameter, bs, that is formulated with information about the maximum wind speed (vms) and the typhoon’s forward movement velocity (vt); the second is the introduction of an asymmetric typhoon structure. In order to convert the wind speed, as reconstructed by the modified Holland model, from 1-min averaged wind inputs into 10-min averaged wind inputs to force the WW3 model, a gust factor (gf) is fitted in accordance with practical test cases. Validation against wave buoy data proves that the combination of the two models through the gust factor is robust for the estimation of typhoon waves.

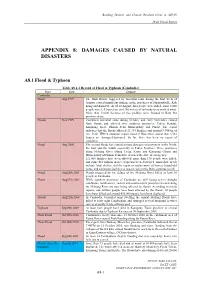

Appendix 8: Damages Caused by Natural Disasters

Building Disaster and Climate Resilient Cities in ASEAN Draft Final Report APPENDIX 8: DAMAGES CAUSED BY NATURAL DISASTERS A8.1 Flood & Typhoon Table A8.1.1 Record of Flood & Typhoon (Cambodia) Place Date Damage Cambodia Flood Aug 1999 The flash floods, triggered by torrential rains during the first week of August, caused significant damage in the provinces of Sihanoukville, Koh Kong and Kam Pot. As of 10 August, four people were killed, some 8,000 people were left homeless, and 200 meters of railroads were washed away. More than 12,000 hectares of rice paddies were flooded in Kam Pot province alone. Floods Nov 1999 Continued torrential rains during October and early November caused flash floods and affected five southern provinces: Takeo, Kandal, Kampong Speu, Phnom Penh Municipality and Pursat. The report indicates that the floods affected 21,334 families and around 9,900 ha of rice field. IFRC's situation report dated 9 November stated that 3,561 houses were damaged or destroyed. So far, there has been no report of casualties. Flood Aug 2000 The second flood has caused serious damage to provinces in the North, the East and the South, especially in Takeo Province. Three provinces along the Mekong River (Stung Treng, Kratie and Kompong Cham) and the Municipality of Phnom Penh have declared a state of emergency. 121,000 families have been affected, more than 170 people were killed, and some $10 million in rice crops has been destroyed. Immediate needs include food, shelter, and the repair or replacement of homes, household items, and sanitation facilities as water levels in the Delta continue to fall.

Chlorophyll Variations Over Coastal Area of China Due to Typhoon Rananim

Indian Journal of Geo Marine Sciences Vol. 47 (04), April, 2018, pp. 804-811 Chlorophyll variations over coastal area of China due to typhoon Rananim Gui Feng & M. V. Subrahmanyam* Department of Marine Science and Technology, Zhejiang Ocean University, Zhoushan, Zhejiang, China 316022 *[E.Mail [email protected]] Received 12 August 2016; revised 12 September 2016 Typhoon winds cause a disturbance over sea surface water in the right side of typhoon where divergence occurred, which leads to upwelling and chlorophyll maximum found after typhoon landfall. Ekman transport at the surface was computed during the typhoon period. Upwelling can be observed through lower SST and Ekman transport at the surface over the coast, and chlorophyll maximum found after typhoon landfall. This study also compared MODIS and SeaWiFS satellite data and also the chlorophyll maximum area. The chlorophyll area decreased 3% and 5.9% while typhoon passing and after landfall area increased 13% and 76% in SeaWiFS and MODIS data respectively. [Key words: chlorophyll, SST, upwelling, Ekman transport, MODIS, SeaWiFS] Introduction Due to its unique and complex geographical environment, China becomes a country which has a high frequency of natural disasters and severe influence over coastal area. Since typhoon causes severe damage, meteorologists and oceanographers have studied on the cause and influence of typhoon for a long time. [...] runoff, entrainment of riverine-mixing, Integrated Primary Production are also affected by typhoons when passing and landfall [25-29]. It is well known that ocean phytoplankton production (primary production) plays a considerable role in the ecosystem. Primary production can be indexed by chlorophyll concentration. The spatial and temporal [...]

Study on Formation and Development of a Mesoscale Convergence Line in Typhoon Rananim∗

Study on Formation and Development of a Mesoscale Convergence Line in Typhoon Rananim* LI Ying 1†, CHEN Lianshou 1, QIAN Chuanhai 2, and YANG Jiakang 3 1 State Key Laboratory of Severe Weather (LaSW), Chinese Academy of Meteorological Sciences, Beijing 100081 2 National Meteorological Center, Beijing 100081 3 Yunnan Institute of Meteorology, Kunming 650034 (Received April 29, 2010) ABSTRACT This study investigated the formation and development of a mesoscale convergence line (MCL) within the circulation of Typhoon Rananim (0414), which eventually led to torrential rainfall over inland China. The study is based on satellite, surface and sounding data, and 20 km × 20 km regional spectral model data released by the Japan Meteorological Agency. It is found that midlatitude cold air intruded into the typhoon circulation, which resulted in the formation of the MCL in the northwestern quadrant of the typhoon. The MCL occurred in the lower troposphere below 700 hPa, with an ascending airflow inclined to cold air, and a secondary vertical circulation across the MCL. Meso-β scale convective cloud clusters emerged and developed near the MCL before their merging into the typhoon remnant clouds. Convective instability and conditional symmetric instability appeared simultaneously near the MCL, favorable for the development of convection. Diagnosis of the interaction between the MCL and the typhoon remnant implies that the MCL obtained kinetic energy and positive vorticity for its further development from the typhoon remnant in the lower troposphere. In turn, the development of the MCL provided kinetic energy and positive vorticity at upper levels for the typhoon remnant, which may have slowed down the decaying of the typhoon.

Improving WRF Typhoon Precipitation and Intensity Simulation Using a Surrogate-Based Automatic Parameter Optimization Method

atmosphere Article Improving WRF Typhoon Precipitation and Intensity Simulation Using a Surrogate-Based Automatic Parameter Optimization Method Zhenhua Di 1,2,*, Qingyun Duan 3, Chenwei Shen 1 and Zhenghui Xie 2 1 State Key Laboratory of Earth Surface Processes and Resource Ecology, Faculty of Geographical Science, Beijing Normal University, Beijing 100875, China; [email protected] 2 State Key Laboratory of Numerical Modeling for Atmospheric Sciences and Geophysical Fluid Dynamics, Institute of Atmospheric Physics, Chinese Academy of Sciences, Beijing 100029, China; [email protected] 3 State Key Laboratory of Hydrology-Water Resources and Hydraulic Engineering, College of Hydrology and Water Resources, Hohai University, Nanjing 210098, China; [email protected] * Correspondence: [email protected]; Tel.: +86-10-5880-0217 Received: 7 December 2019; Accepted: 8 January 2020; Published: 10 January 2020 Abstract: Typhoon precipitation and intensity forecasting plays an important role in disaster prevention and mitigation in the typhoon landfall area. However, the issue of improving forecast accuracy is very challenging. In this study, the Weather Research and Forecasting (WRF) model typhoon simulations on precipitation and central 10-m maximum wind speed (10-m wind) were improved using a systematic parameter optimization framework consisting of parameter screening and adaptive surrogate modeling-based optimization (ASMO) for screening sensitive parameters. Six of the 25 adjustable parameters from seven physics components of the WRF model were screened by the Multivariate Adaptive Regression Spline (MARS) parameter sensitivity analysis tool. Then the six parameters were optimized using the ASMO method, and after 178 runs, the 6-hourly precipitation and 10-m wind simulations were finally improved by 6.83% and 13.64% respectively.

WMO Bulletin, Volume 54, No. 2: April 2005

WMO BULLETIN Vol. 54 (2) April 2005. Feature articles, interviews, news, book reviews, calendar. Climate research: achievements and challenges. Weather and crops in 2004. The global climate system in 2004. 25th anniversary of the World Climate Research Programme. Water and energy cycles. Hurricane Ivan’s impact on the Cayman Islands. Climate change indices. Stratospheric processes and climate. The cryosphere and the climate system. Climate change and variability. World Meteorological Organization, 7bis, avenue de la Paix, Case postale No. 2300, CH-1211 Geneva 2, Switzerland. Tel: +41 22 730 81 11 Fax: +41 22 730 81 81 E-mail: [email protected] Web: http://www.wmo.int ISSN 0042-9767. The World Meteorological Organization (WMO) [...] Congress is the supreme body of the Organization. It brings together delegates of all Members once every four years to determine general policies for the fulfilment of the purposes of the Organization. The Executive Council is composed of 37 directors of National Meteorological or Hydrometeorological [...] North America, Central America and the Caribbean (Region IV): C. Fuller (Belize). South-West Pacific (Region V): Woon Shih Lai (Singapore). Europe (Region VI): D.K. Keuerleber-Burk (Switzerland) (acting). Elected members of the Executive Council: M.L. [...] CD-ROM: The CD-Rom contains (in pdf format in both high and low resolution): WMO Bulletin 54 (2) – April 2005. Weather • Climate • Water.

“RANANIM” AFTER LANDFALL

Vol.12 No.2 JOURNAL OF TROPICAL METEOROLOGY December 2006 Article ID: 1006-8775(2006) 02-0189-04 THE DIAGNOSTIC ANALYSIS OF THE TRACK AND PRECIPITATION OF TYPHOON “RANANIM” AFTER LANDFALL XU Ai-hu (许爱华) 1, YE Cheng-zhi (叶成志) 2, OUYANG Li-cheng (欧阳里程) 3, CHENG Rui (程锐) 4 (1. Jiangxi provincial meteorological observatory, Nanchang 330046 China; 2. Hunan provincial meteorological observatory, Changsha 410007 China; 3. Guangzhou International Specialized Weather Services, Guangzhou 510080 China; 4. LASG, Institute of Atmospheric Sciences, Chinese Academy of Sciences, Beijing 100080 China) Key words: Typhoon Rananim; track; precipitation; diagnostic analysis CLC number: P458.1.24 Document code: A 1 INTRODUCTION Over the past few years, landfall and track, intensity, sustaining mechanisms of tropical cyclones (hereafter TCs) and associated weather changes have become heated topics of research. From the viewpoints of energy transformation, moisture transfer, mid-latitude baroclinic frontal zones and ambient wind fields, Chen et al. [1], Le et al. [2] and Zeng et al. [3] studied the sustaining mechanism of TCs that have made landfall. [...] [4] [...] humidity and large-sized bodies of water are favorable for the maintenance and enhancement of landfall TCs circulation. All of the above research achievements not only help broaden the understanding of the patterns by which TCs behave but are positive in improving the forecast of the track, winds and rains after landfall. It is, however, not much addressed in the field of evolution of landfall TCs when they are with special underlying surface and circulation background. TC Rananim (0414) was the most serious typhoon that ever affected [...]

Typhoon Mindulle, Taiwan, July 2004

Short-term changes in seafloor character due to flood-derived hyperpycnal discharge: Typhoon Mindulle, Taiwan, July 2004 J.D. Milliman School of Marine Science, College of William and Mary, Gloucester Point, Virginia 23062, USA S.W. Lin Institute of Oceanography, National Taiwan University, Taipei 106, Taiwan S.J. Kao Research Center for Environmental Change, Academia Sinica, Taipei 115, Taiwan J.P. Liu Department of Marine, Earth and Atmospheric Sciences, North Carolina State University, Raleigh, North Carolina 27695, USA C.S. Liu, J.K. Chiu, Y.C. Lin Institute of Oceanography, National Taiwan University, Taipei 106, Taiwan ABSTRACT During Typhoon Mindulle in early July 2004, the Choshui River (central-western Taiwan) discharged ~72 Mt of sediment to the eastern Taiwan Strait; peak concentrations were ≥200 g/L, ~35%–40% of which was sand. Box-core samples and CHIRP (compressed high-intensity radar pulse) sonar records taken just before and after the typhoon indicate that the hyperpycnal sediment was first deposited adjacent to the mouth of the Choshui, subsequently resuspended and transported northward (via the Taiwan Warm Current), and redeposited as a patchy coastal band of mud-dominated sediment that reached thicknesses of 1–2 m within megaripples. Within a month most of the mud was gone, probably continuing its northward [...] amount of sediment, however, is significant; since 1965 the Choshui River has discharged ~1.5–2 Gt of sediment to the Taiwan Strait. STUDY APPROACH To document the impact of a hyperpycnal event, we initiated a preliminary study of the seafloor off the Choshui River from 22–26 June 2004.

East & Southeast Asia: Typhoon Aere and Typhoon

EAST & SOUTHEAST ASIA: TYPHOON AERE AND TYPHOON CHABA 26 August 2004 The Federation’s mission is to improve the lives of vulnerable people by mobilizing the power of humanity. It is the world’s largest humanitarian organization and its millions of volunteers are active in over 181 countries. In Brief This Information Bulletin (no. 01/2004) is being issued for information only. The Federation is not seeking funding or other assistance from donors for this operation at this time. For further information specifically related to this operation please contact: in Geneva: Asia and Pacific Department; phone +41 22 730 4222; fax +41 22 733 0395 All International Federation assistance seeks to adhere to the Code of Conduct and is committed to the Humanitarian Charter and Minimum Standards in Disaster Response in delivering assistance to the most vulnerable. For support to or for further information concerning Federation programmes or operations in this or other countries, or for a full description of the national society profile, please access the Federation’s website at http://www.ifrc.org The Situation Over the past 48 hours over a million people have had to be evacuated as two powerful typhoons, Typhoon Aere and Typhoon Chaba, have been adversely affecting parts of East Asia and the Philippines. East Asia Some 516,000 people were evacuated earlier this week in response to the approach of Typhoon Aere, which struck Taiwan with winds measuring as high as 165 kilometres per hour on Tuesday 24 August, triggering flooding and landslides throughout central and northern Taiwan. Thousands of homes were damaged in northern Taiwan, and thousands of people in the mountainous area of Hsinchu were stranded due to blocked roads.

(2004) and Yagi (2006) Using Four Methods

1394 WEATHER AND FORECASTING VOLUME 27 Determining the Extratropical Transition Onset and Completion Times of Typhoons Mindulle (2004) and Yagi (2006) Using Four Methods QIAN WANG Ocean University of China, Qingdao, and Shanghai Typhoon Institute and Laboratory of Typhoon Forecast Technique, China Meteorological Administration, Shanghai, China QINGQING LI Shanghai Typhoon Institute and Laboratory of Typhoon Forecast Technique, China Meteorological Administration, Shanghai, China GANG FU Laboratory of Physical Oceanography, Key Laboratory of Ocean–Atmosphere Interaction and Climate in Universities of Shandong, and Department of Marine Meteorology, Ocean University of China, Qingdao, China (Manuscript received 5 December 2011, in final form 21 July 2012) ABSTRACT Four methods for determining the extratropical transition (ET) onset and completion times of Typhoons Mindulle (2004) and Yagi (2006) were compared using four numerically analyzed datasets. The open-wave and scalar frontogenesis parameter methods failed to smoothly and consistently determine the ET completion from the four data sources, because some dependent factors associated with these two methods significantly impacted the results. Although the cyclone phase space technique succeeded in determining the ET onset and completion times, the ET onset and completion times of Yagi identified by this method exhibited a large distinction across the datasets, agreeing with prior studies. The isentropic potential vorticity method was also able to identify the ET onset times of both Mindulle and Yagi using all the datasets, whereas the ET onset time of Yagi determined by such a method differed markedly from that by the cyclone phase space technique, which may create forecast uncertainty. 1. Introduction [...] period due to the complex interaction between the cyclone and the midlatitude systems.

Improvement of Numerical Simulation of Typhoon Rananim (0414) by Using Doppler Radar Data

Vol.14 No.2 JOURNAL OF TROPICAL METEOROLOGY December 2008 Article ID: 1006-8775(2008) 02-0089-04 IMPROVEMENT OF NUMERICAL SIMULATION OF TYPHOON RANANIM (0414) BY USING DOPPLER RADAR DATA YU Zhen-shou (余贞寿) 1, ZHONG Jian-feng (钟建锋) 1, ZHAO Fang (赵放) 1, JI Chun-xiao (冀春晓) 2 (1. Wenzhou Meteorological Bureau, Wenzhou 325027 China; 2. Meteorological Science Research Institute of Zhejiang Province, Hangzhou 310017 China) Abstract: Typhoon Rananim (0414) has been simulated by using the non-hydrostatic Advanced Regional Prediction System (ARPS) from Center of Analysis and Prediction of Storms (CAPS). The prediction of Rananim has generally been improved with ARPS using the new generation CINRAD Doppler radar data. Numerical experiments with or without using the radar data have shown that model initial fields with the assimilated radar radial velocity data in ARPS can change the wind field at the middle and high levels of the troposphere; fine characteristics of the tropical cyclone (TC) are introduced into the initial wind, the x component of wind speed south of the TC is increased and so is the y component west of it. They lead to improved forecasting of TC tracks for the time after landfall. The field of water vapor mixing ratio, temperature, cloud water mixing ratio and rainwater mixing ratio have also been improved by using radar reflectivity data. The model’s initial response to the introduction of hydrometeors has been increased. It is shown that horizontal model resolution has a significant impact on intensity forecasts, by greatly improving the forecasting of TC rainfall, and heavy rainstorm of the TC specially, as well as its distribution and variation with time.