Delft University of Technology Accounting for Values in Design

Total Pages: 16

File Type: pdf, Size: 1020 Kb


Recommended publications

-

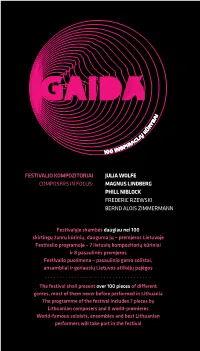

Julia Wolfe Magnus Lindberg Phill Niblock Frederic

FESTIVALIO KOMPOZITORIAI JULIA WOLFE COMPOSERS IN FOCUS: MAGNUS LINDBERG PHILL NIBLOCK FREDERIC RZEWSKI BERND ALOIS ZIMMERMANN Festivalyje skambės daugiau nei 100 skirtingų žanrų kūrinių, dauguma jų – premjeros Lietuvoje Festivalio programoje – 7 lietuvių kompozitorių kūriniai ir 8 pasaulinės premjeros Festivalio puošmena – pasaulinio garso solistai, ansambliai ir geriausių Lietuvos atlikėjų pajėgos The festival shall present over 100 pieces of different genres, most of them never before performed in Lithuania The programme of the festival includes 7 pieces by Lithuanian composers and 8 world-premieres World-famous soloists, ensembles and best Lithuanian performers will take part in the festival PB 1 PROGRAMA | TURINYS In Focus: Festivalio dėmesys taip pat: 6 JULIA WOLFE 18 FREDERIC RZEWSKI 10 MAGNUS LINDBERG 22 BERND ALOIS ZIMMERMANN 14 PHILL NIBLOCK 24 Spalio 20 d., šeštadienis, 20 val. 50 Spalio 26 d., penktadienis, 19 val. Vilniaus kongresų rūmai Šiuolaikinio meno centras LAURIE ANDERSON (JAV) SYNAESTHESIS THE LANGUAGE OF THE FUTURE IN FAHRENHEIT Florent Ghys. An Open Cage (2012) 28 Spalio 21 d., sekmadienis, 20 val. Frederic Rzewski. Les Moutons MO muziejus de Panurge (1969) SYNAESTHESIS Meredith Monk. Double Fiesta (1986) IN CELSIUS Julia Wolfe. Stronghold (2008) Panayiotis Kokoras. Conscious Sound (2014) Julia Wolfe. Reeling (2012) Alexander Schubert. Sugar, Maths and Whips Julia Wolfe. Big Beautiful Dark and (2011) Scary (2002) Tomas Kutavičius. Ritus rhythmus (2018, premjera)* 56 Spalio 27 d., šeštadienis, 19 val. Louis Andriessen. Workers Union (1975) Lietuvos nacionalinė filharmonija LIETUVOS NACIONALINIS 36 Spalio 24 d., trečiadienis, 19 val. SIMFONINIS ORKESTRAS Šiuolaikinio meno centras RŪTA RIKTERĖ ir ZBIGNEVAS Styginių kvartetas CHORDOS IBELHAUPTAS (fortepijoninis duetas) Dalyvauja DAUMANTAS KIRILAUSKAS COLIN CURRIE (kūno perkusija, (fortepijonas) Didžioji Britanija) Laurie Anderson. -

November 4 November 11

NOVEMBER 4 ISSUE NOVEMBER 11 Orders Due October 7 23 Orders Due October 14 axis.wmg.com 11/1/16 AUDIO & VIDEO RECAP ARTIST TITLE LBL CNF UPC SEL # SRP ORDERS DUE Hope, Bob Bob Hope: Hope for the Holidays (DVD) TL DV 610583538595 31845-X 12.95 9/30/16 Last Update: 09/20/16 For the latest up to date info on this release visit axis.wmg.com. ARTIST: Bob Hope TITLE: Bob Hope: Hope for the Holidays (DVD) Label: TL/Time Life/WEA Config & Selection #: DV 31845 X Street Date: 11/01/16 Order Due Date: 09/30/16 UPC: 610583538595 Box Count: 30 Unit Per Set: 1 SRP: $12.95 Alphabetize Under: H ALBUM FACTS Genre: Television Description: HOPE FOR THE HOLIDAYS... There’s no place like home for the holidays. And there really was no place like Bob Hope’s home for the holidays with Bob, Dolores and the Hope family. They invited friends from the world of entertainment and sports to celebrate and reminisce about vintage seasonal sketches in the 1993 special Bob Hope’s Bag Full of Christmas Memories. Bob Hope’s TV Christmas connection began on December 24, 1950, with The Comedy Hour. Heartwarming and fun—that’s the way Bob planned it. No Christmas party would be complete without music, while flubbed lines in some sketches remain intact and a blooper really hits below the belt. The host of Christmases past hands out laughs galore! HIGHLIGHTS INCLUDE: A compilation of Bob’s monologues from his many holiday tours for the USO Department store Santas Robert Cummings and Bob swap stories on the subway Redd Foxx and Bob play reindeer reluctant to guide Santa’s sleigh -

The 35th Norwegian Short Film Festival

35 The 35th Norwegian Short Film Festival Kortfilmfestivalen 2012 Katalog for den 35. Kortfilmfestivalen, Utgiver/Publisher: Grimstad 13.–17. juni 2012 Kortfilmfestivalen, Filmens Hus Dronningens gate 16, NO-0152 Oslo Design: Eriksen / Brown Med Celine Wouters Telefon/Phone: +47 22 47 46 46 Basert på en profil av Yokoland E-mail: [email protected] Short Film | Documentary | Music Video Trykk: Zoom Grafisk AS E-mail: [email protected] Papir: Munken Lynx 130 g. Satt i Berthold Akzidenz Grotesk www.kortfilmfestivalen.no Grimstad 13.6 – 17.6 2012 Program: Wednesday 13th June, Thursday 14th June, Friday 15th June, Saturday 16th June, Sunday 17th June. NK: Norsk kortfilm/Norwegian Short Film. Venues: Catilina, Pan, Vesle Hestetorget, Rica Hotel, Other Venues -

AUDIO + VIDEO 9/14/10 Audio & Video Releases *Click on the Artist Names to Be Taken Directly to the Sell Sheet

NEW RELEASES WEA.COM ISSUE 18 SEPTEMBER 14 + SEPTEMBER 21, 2010 LABELS / PARTNERS: Atlantic Records, Asylum, Bad Boy Records, Bigger Picture, Curb Records, Elektra, Fueled By Ramen, Nonesuch, Rhino Records, Roadrunner Records, Time Life, Top Sail, Warner Bros. Records, Warner Music Latina, Word. AUDIO + VIDEO 9/14/10 Audio & Video Releases *Click on the Artist Names to be taken directly to the Sell Sheet. Click on the Artist Name in the Sell Sheet to be taken back to the Recap Page.

| Label | Sel # | Artist | Title | Price | Street Date | Order Due Date |
|---|---|---|---|---|---|---|
| LAT | DV-525832 | BANDA MACHOS | En Vivo Desde Morelia 15 Años (DVD) | $12.99 | 9/14/10 | 8/18/10 |
| FER | CD-888109 | BARLOWGIRL | Our Journey…So Far | $11.99 | 9/14/10 | 8/25/10 |
| NON | CD-524138 | CHATHAM, RHYS | A Crimson Grail | $16.98 | 9/14/10 | 8/25/10 |
| ATL | CD-524647 | CHROMEO | Business Casual | $13.99 | 9/14/10 | 8/25/10 |
| ATL | CD-524649 | CHROMEO | Business Casual (Deluxe Edition) | $18.98 | 9/14/10 | 8/25/10 |
| ATL | A-524647 | CHROMEO | Business Casual (White Colored Vinyl) | $18.98 | 9/14/10 | 8/25/10 |
| RVW | DV-525705 | CLAPTON, ERIC | Crossroads Guitar Festival 2004 (Super Jewel)(2DVD) | $29.99 | 9/14/10 | 8/18/10 |
| RVW | DV-525708 | CLAPTON, ERIC | Crossroads Guitar Festival 2007 (Super Jewel)(2DVD) | $29.99 | 9/14/10 | 8/18/10 |
| ACG | A-1317 | COLEMAN, ORNETTE | Shape Of Jazz To Come (180 Gram Vinyl) | $24.98 | 9/14/10 | 8/25/10 |
| REP | A-524901 | DEFTONES | White Pony (2LP) | $26.98 | 9/14/10 | 8/25/10 |
| RRR | CD-177622 | DRAGONFORCE | Twilight Dementia (Live) | $18.98 | 9/14/10 | 8/25/10 |
| LAT | DV-525829 | EL TRI | Sinfonico (DVD) | $12.99 | 9/14/10 | 8/18/10 |
| ACG | A-1316 | JACKSON, MILT & HAWKINS, COLEMAN | Bean Bags (180 Gram Vinyl) | $24.98 | 9/14/10 | 8/25/10 |

NON CD-287228 KREMER, GIDON -

Corpus Antville

Epistemological corpus of the research: music videos referenced by the Antville community between June 2006 and June 2011 on the blog of the same name, www.videos.antville.org. Date, Post title: 01‐06‐2006 videos at multiple speeds? 01‐06‐2006 music videos based on cars? 01‐06‐2006 can anyone tell me videos with machine guns? 01‐06‐2006 Muse "Supermassive Black Hole" (Dir: Floria Sigismondi) 01‐06‐2006 Skye ‐ "What's Wrong With Me" 01‐06‐2006 Madison "Radiate". Directed by Erin Levendorf 01‐06‐2006 PANASONIC “SHARE THE AIR” VIDEO CONTEST 01‐06‐2006 Number of times 'panasonic' mentioned in last post 01‐06‐2006 Please Panasonic 01‐06‐2006 Paul Oakenfold "FASTER KILL FASTER PUSSYCAT" : Dir. Jake Nava 01‐06‐2006 Presets "Down Down Down" : Dir. Presets + Kim Greenway 01‐06‐2006 Lansing‐Dreiden "A Line You Can Cross" : Dir. 01‐06‐2006 SnowPatrol "You're All I Have" : Dir. 01‐06‐2006 Wolfmother "White Unicorn" : Dir. Kris Moyes? 01‐06‐2006 Fiona Apple ‐ Across The Universe ‐ Director ‐ Paul Thomas Anderson. 02‐06‐2006 Ayumi Hamasaki ‐ Real Me ‐ Director: Ukon Kamimura 02‐06‐2006 They Might Be Giants ‐ "Dallas" d. Asterisk 02‐06‐2006 Bersuit Vergarabat "Sencillamente" 02‐06‐2006 Lily Allen ‐ LDN (epk promo) directed by Ben & Greg 02‐06‐2006 Jamie T 'Sheila' directed by Nima Nourizadeh 02‐06‐2006 Farben Lehre ''Terrorystan'', Director: Marek Gluziński 02‐06‐2006 Chris And The Other Girls ‐ Lullaby (director: Christian Pitschl, camera: Federico Salvalaio) 02‐06‐2006 Megan Mullins ''Ain't What It Used To Be'' 02‐06‐2006 Mr. -

Deutsche Nationalbibliografie 2014 T 11

Deutsche Nationalbibliografie, Reihe T: Musiktonträgerverzeichnis (index of music recordings). Monthly index. Volume: 2014 T 11. As of: 19 November 2014. Deutsche Nationalbibliothek (Leipzig, Frankfurt am Main) 2014. ISSN 1613-8945. urn:nbn:de:101-ReiheT11_2014-8. Notes: The Deutsche Nationalbibliografie records the legal deposit copies it receives of media works published in Germany, as well as German-language media works published abroad, translations of German-language media works into other languages, and foreign-language media works about Germany in the original. The basis for the listing is the Act on the German National Library (Gesetz über die Deutsche Nationalbibliothek, DNBG) of 22 June 2006 (BGBl. I, p. 1338). Monographs and periodicals (journals, journal-like series and loose-leaf editions) are listed in their various forms of publication (e.g. print edition, microform, slide series, AV medium, electronic offline publications, overhead transparency collections or sound recordings). All listed titles contain a link to the entry in the portal catalogue of the Deutsche Nationalbibliothek, and all available URLs, e.g. of tables of contents, are provided as links. The title entries for music recordings in Reihe T are arranged, as indicated in the subject group overview, according to the Dewey Decimal Classification (DDC), with deeper levels of up to six digits taken into account [...] are based on "[...]sche Katalogisierung von Ausgaben musikalischer Werke (RAK-Musik)", incorporating the "International Standard Bibliographic Description for Printed Music – ISBD (PM)". -

Artist, Product name, Format, Release date, Product page: 555 Solar Express CD 2013/11/13

[SOUL/CLUB/RAP] List of eligible items for 「怒涛のサプライズ ボヘミアの狂詩曲」 (sorted by artist). Columns: Artist, Product name, Format, Release date, Product page. 555 Solar Express CD 2013/11/13 https://tower.jp/item/3319657 1773 サウンド・ソウルステス(DIGI) CD 2010/6/2 https://tower.jp/item/2707224 1773 リターン・オブ・ザ・ニュー CD 2009/3/11 https://tower.jp/item/2525329 1773 Return Of The New (HITS PRICE) CD 2012/6/16 https://tower.jp/item/3102523 1773 RETURN OF THE NEW(LTD) CD 2013/12/25 https://tower.jp/item/3352923 2562 The New Today CD 2014/10/23 https://tower.jp/item/3729257 *Groovy workshop. Emotional Groovin' -Best Hits Mix- mixed by *Groovy workshop. CD 2017/12/13 https://tower.jp/item/4624195 100 Proof (Aged in Soul) エイジド・イン・ソウル CD 1970/11/30 https://tower.jp/item/244446 100X Posse Rare & Unreleased 1992 - 1995 Mixed by Nicky Butters CD 2009/7/18 https://tower.jp/item/2583028 13 & God Live In Japan [Limited] <初回生産限定盤>(LTD/ED) CD 2008/5/14 https://tower.jp/item/2404194 16FLIP P-VINE & Groove-Diggers Presents MIXCHAMBR : Selected & Mixed by 16FLIP <タワーレコード限定> CD 2015/7/4 https://tower.jp/item/3931525 2 Chainz Collegrove(INTL) CD 2016/4/1 https://tower.jp/item/4234390 2000 And One Voltt 02 CD 2010/2/27 https://tower.jp/item/2676223 2000 And One ヘリタージュ CD 2009/2/28 https://tower.jp/item/2535879 24-Carat Black Ghetto : Misfortune's Wealth(US/LP) Analog 2018/3/13 https://tower.jp/item/4579300 2Pac (Tupac Shakur) TUPAC VS DVD 2004/11/12 https://tower.jp/item/1602263 2Pac (Tupac Shakur) 2-PAC 4-EVER DVD 2006/9/2 https://tower.jp/item/2084155 2Pac (Tupac Shakur) Live at the house of blues(BRD) Blu-ray 2017/11/20 https://tower.jp/item/4644444 -

Various BNR, Vol. 1 Mp3, Flac, Wma

Various BNR, Vol. 1 mp3, flac, wma DOWNLOAD LINKS (Clickable) Genre: Electronic Album: BNR, Vol. 1 Country: Germany Released: 2008 Style: House, Techno, Electro MP3 version RAR size: 1270 mb FLAC version RAR size: 1171 mb WMA version RAR size: 1425 mb Rating: 4.7 Votes: 908 Other Formats: XM AUD DMF MP3 WAV MIDI DXD Tracklist Hide Credits Mein Neues Fahrrad (Boys Noize Remix) 1-1 –Siriusmo Remix – Boys Noize 1-2 –Shadow Dancer Poke The Geeks Were Right (Boys Noize Vs. D.I.M. Remix) 1-3 –The Faint Remix – Boys Noize, D.I.M. 1-4 –PUZIQUe Don't Go 1-5 –Boys Noize Volta 82 1-6 –Djedjotronic Dirty & Hard Shadowbreaker (Boys Noize Mix No. 1) 1-7 –John Starlight Remix – Boys Noize 1-8 –Les Petits Pilous Dodo Electro 1-9 –Strip Steve Ready Steady 1-10 –D.I.M. Is You 1-11 –Housemeister We Need Cash Cowbois (Das Glow Remix) 1-12 –Shadow Dancer Remix – Das Glow 1-13 –Boys Noize Kill The Kid Out Of The Blue (D.I.M. Remix) 1-14 –Darmstadt Remix – D.I.M. 1-15 –Einzeller Schwarzfahrer 2-1 –Housemeister Hello Again 2-2 –Siriusmo Simple 2-3 –PUZIQUe Thomas 2-4 –Shadow Dancer What Is Natural 2-5 –Les Petits Pilous Wake Up 2-6 –CLP* Ready Or Not Frau (Pandullo Vs Und Mix) 2-7 –I-Robots Vocals – Terry-Ann FrenckenWritten-By – Gianluca Pandullo, Marco Palmieri Lava Lava (Feadz Aval Aval Remix) 2-8 –Boys Noize Remix – Feadz Is You (Brodinski Remix) 2-9 –D.I.M. -

WINDOWLICKER. Der ästhetische Paradigmenwechsel im Musikvideo durch Electronic Dance Music

WINDOWLICKER. Der ästhetische Paradigmenwechsel im Musikvideo durch Electronic Dance Music. Dissertation for the academic degree of doctor philosophiae (Dr. phil.), submitted to the Kultur-, Sozial- und Bildungswissenschaftliche Fakultät of Humboldt-Universität zu Berlin (Department of Musicology and Media Studies) by Sabine Röthig, M.A. President of Humboldt-Universität zu Berlin: Prof. Dr. Jan-Hendrik Olbertz. Dean of the Philosophische Fakultät III: Prof. Dr. Julia von Blumenthal. First reviewer: Prof. Dr. Peter Wicke, Humboldt-Universität zu Berlin. Second reviewer: Prof. Dr. Holger Schulze, University of Copenhagen. Date of the oral examination: 30 November 2015. Abstract (German): This thesis examines the encounter between Electronic Dance Music (EDM), which comes out of the club, and the music video, which is consolidated in the mass media. It discusses the thesis that an aesthetic paradigm shift takes place in the music video as a result. This shift rests above all on the musical figure of the instrumental, modular track, which differs significantly from that of the song. The original purpose of the music video, to visualize the performer's appearance on the screen, is therefore called into question by the track or even becomes obsolete; this requires new strategies of visual accompaniment. Parts I and II of the thesis situate music video and EDM in scholarly discourse and gather their respective aesthetic attributes. Part III discusses the relationship between tracks and images on the basis of club visuals, in order to [...] the changed sound-image constellation -

Manifestation of Real World Social Events on Twitter

Radboud University. Master Thesis Computer Science. Manifestation of real world social events on Twitter. Author: M. Van de Voort. Supervisor: dr. S. Verberne. Second reader: prof. dr. T.M. Heskes. August 13, 2014. ABSTRACT: Situations in which many people come together can result in very interesting and positive, lively social events. Unfortunately, large events with many people involved also include risks, ranging from small injuries to death. It would be useful if social media could be used to monitor these events, and to predict unwanted situations and casualties. To be able to know in advance how an event will develop based on information on social media, a good understanding of the relation between the development of real world events and their manifestation online is needed. In this work, we study the relationship between real world events and their online manifestation. We create a model of both the real world event and its online manifestation. We compare these two models using data about five real world social events and Twitter data about them. We determine the correlation between the online and real world model. We find several weak to moderate correlations between online and real world characteristics of events. The intensity, readability and sentiment of the tweets are examples of variables in the online model that show a correlation with the real world and the weekends, school holidays and position of the moon are examples of real world variables which manifest themselves on Twitter. Contents: 1 Introduction; 1.1 Research questions; 1.2 Methodology; 2 Related Work; 2.1 Event detection in social media -

The Blue Album G-Star Store Brussels Rue Antoine Dansaert 48

Volume 04, Issue 04. Neighbourhood Life + Global Style. Neighbourhood: Up in smoke. Life: Deep waters. Style: Doing denim. Music: Out of the blue. Culture: Memoirs of melancholy. + The Design Special. The blue album. G-Star Store Brussels, Rue Antoine Dansaert 48, operated by rdb1 sprl. RADO r5.5 XXL / WWW.RADO.COM. Publisher and editor-in-chief: Nicholas Lewis. Design: facetofacedesign + pleaseletmedesign. Writers: Guy Dittrich, Rose Kelleher, Nicholas Lewis, Philippe Pourhashemi, Sam Steverlynck, Robbert van Jaarsveld, Randa Wazen. The editor's letter: There was a point in the production phase of this edition where we tinkered with the idea of interviewing Portishead for our music section. The band were just about to curate ATP’s I’ll Be Your Mirror festival in London and, as is the case with most summer revival projects (Pavement and Faith No More anyone?), the band kept popping up on our radar for all the good reasons. For starters, memories poured in the minute the Bristol threesome’s debut album Dummy was loaded into the player. Olivia’s house parties in Tervueren / Tervuren, teenage benders in Julie’s flat in Schaerbeek and mix tapes that, at the time, went from Portishead and Mudhoney to Rancid and The Fugees. The album was lodged in my collective conscience of the blue, next to Massive Attack’s Blue Lines, Kool Keith’s Sex Styles, Del’s I Wish My Brother George Was Here and St Germain’s Boulevard (yes, really). -

Ed Royal Toby Tobias Rob Birch Marc Romboy Moguai

TOBY TOBIAS ROB BIRCH MOGUAI MARC ROMBOY SLACK HIPPY 1 Q-tip/gettin Up 1 Africa Bambaata & James 1 The Killers/Human (Thin 1 Audiofly X/1999/8 Bit 1 Darkstar/Lily Liver (Starkey 2 MIA/Paper Planes Brown/Timezone White Duke Mix)/White 2 Marc Romboy/Karambolage Remix)/Offshore Rec. (DFA remix) 2 Bobby Evens/Freak-A-Zoid 2 Ante Perry & Tube Berger/ (Oxia Remix)/ Systematic 2 Martyn/Natural Selec- 3 Loud e/Oceans of Loud Robotz (Rmxxology Theme) Human U/Moonboutque Recordings tion/3024 Rec. 4 Laughing Light of plenty/ 3 Dj Mehdi/Signature (Thomas 3 Pryda/Miami-Atlanta/Pryda 3 Dimitri Andreas/Run and hide 3 Ruckspin&Quantum Soul/ the rose Bangalter Edit) Recordings (Afrilounge Remix)/ Atomise/Ranking Rec. 5 Daso/Pars Tensa 4 Tyken Feat Adl/Let It Rain 4 Alex Metric/Caller/Marine Systematic Recordings 4 2562/Techno Dread/Tectonic 6 ma kayen walou kima (Trevor Loveys Remix) Parade 4 Morgan Geist/Detroit (C2 Rec. l‘amour/Playgroup remix 5 Dj Mehdi/I Am Somebody 5 Deadmau 5/Ghost N Stuff/ Remix 1)/Environment 5 Substance/Relish (Shed (from the arabesque arba‘a (Switch Remix) Mau 5 Trap 5 Guido Schneider feat. Florian Remix)/Scion Versions LP) 6 MGMT/Kids 6 Axwell/Rotterdam City Of Schirmacher/Too late to land 6 Headhunter/Paradigm Shift/ 7 Simon Baker/Plastik 7 Boys Noize/Lava Lava Love (Axwell Reedit)/Axtone /Cocoon Tempa Rec. (Todd Terje remix) 8 Housemeister/What You Records 6 Martin Eyerer/Further more 7 Dabrye/Air feat. Doom 8 Afefe Iku/Mirror Dance Want (Siriusmo Remix) 7 Doman & Gooding/Runnin (Marc Romboy Remix)/ (Kode 9 Remix)/Ghostly 9 Diplo/Blow Your Head (Mark Knight & Funkagenda Kling Klong International (Original And Dj Eli Remix) Remix)/Toolroom 7 Minimal Man/Make a move 8 Modeselektor/The White ED ROYAL 10 Jackbeats/Boy 8 Bit Remix 8 D Ramirez & Dirty South/ part 1 (Marc Romboy Edit)/ Flash feat Thom Yorke 11 Mstrkrft feat.