Data Caches for Superscalar Processors*

Recommended publications

The Design and Verification of the AlphaStation 600 5-series Workstation

By John H. Zurawski, John E. Murray, and Paul J. Lemmon

ABSTRACT

The AlphaStation 600 5-series workstation is a high-performance, uniprocessor design based on the Alpha 21164 microprocessor and on the PCI bus. Six CMOS ASICs provide high-bandwidth, low-latency interconnects between the CPU, the main memory, and the I/O subsystem. The verification effort used directed, pseudorandom testing on a Verilog software model. A hardware-based verification technique provided a test throughput that resulted in a significant improvement over software tests. This technique currently involves the use of graphics cards to emulate generic DMA devices. A PCI hardware demon is under development to further enhance the capability of the hardware-based verification.

INTRODUCTION

The high-performance AlphaStation 600 5-series workstation is based on the fastest Alpha microprocessor to date, the Alpha 21164.[1] The I/O subsystem uses the 64-bit version of the Peripheral Component Interconnect (PCI) and the Extended Industry Standard Architecture (EISA) bus. The AlphaStation 600 supports three operating systems: Digital UNIX (formerly DEC OSF/1), OpenVMS, and Microsoft's Windows NT. This workstation series uses the DECchip 21171 chip set designed and built by Digital. These chips provide high-bandwidth, low-latency interconnects between the CPU, the main memory, and the PCI bus.

This paper describes the architecture and features of the AlphaStation 600 5-series workstation and the DECchip 21171 chip set. The system overview is first presented, followed by a detailed discussion of the chip set. The paper then describes the cache and memory designs, detailing how the memory design evolved from the workstation's requirements.

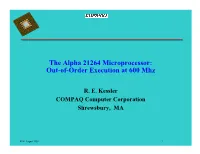

The Alpha 21264 Microprocessor: Out-of-Order Execution at 600 MHz

R. E. Kessler, COMPAQ Computer Corporation, Shrewsbury, MA (REK, August 1998)

Some Highlights
• Continued Alpha performance leadership
  – 600 MHz operation in 0.35 µm CMOS6, 6 metal layers, 2.2 V
  – 15 million transistors, 3.1 cm², 587-pin PGA
  – SPECint95 of 30+ and SPECfp95 of 50+
  – Out-of-order and speculative execution
  – 4-way integer issue
  – 2-way floating-point issue
  – Sophisticated tournament branch prediction
  – High-bandwidth memory system (1+ GB/sec)

[Slide: Alpha 21264 block diagram. Pipeline stages 0 to 6 labeled FETCH, MAP, QUEUE, REG, EXEC, DCACHE; 4 instructions per cycle; 80 in-flight instructions plus 32 loads and 32 stores; 64KB 2-set L1 instruction and data caches.]

Computer Organization EECC 550

Week 1
• Introduction: Modern Computer Design Levels, Components, Technology Trends, Register Transfer Notation (RTN). [Chapters 1, 2]
• Instruction Set Architecture (ISA) Characteristics and Classifications: CISC vs. RISC. [Chapter 2]
Week 2
• MIPS: An Example RISC ISA. Syntax, Instruction Formats, Addressing Modes, Encoding & Examples. [Chapter 2]
• Central Processor Unit (CPU) & Computer System Performance Measures. [Chapter 4]
Week 3
• CPU Organization: Datapath & Control Unit Design. [Chapter 5]
Week 4
  – MIPS Single Cycle Datapath & Control Unit Design.
  – MIPS Multicycle Datapath and Finite State Machine Control Unit Design.
Week 5
• Microprogrammed Control Unit Design. [Chapter 5]
  – Microprogramming Project
Week 6
• Midterm Review and Midterm Exam
Week 7
• CPU Pipelining. [Chapter 6]
• The Memory Hierarchy: Cache Design & Performance. [Chapter 7]
Week 8
• The Memory Hierarchy: Main & Virtual Memory. [Chapter 7]
Week 9
• Input/Output Organization & System Performance Evaluation. [Chapter 8]
Week 10
• Computer Arithmetic & ALU Design. [Chapter 3] If time permits.
Week 11
• Final Exam.

EECC550 - Shaaban, Lec # 1, Winter 2005, 11-29-2005

Computing System History/Trends + Instruction Set Architecture (ISA) Fundamentals
• Computing Element Choices:
  – Computing Element Programmability
  – Spatial vs. Temporal Computing
  – Main Processor Types/Applications
• General Purpose Processor Generations
• The Von Neumann Computer Model
• CPU Organization (Design)
• Recent Trends in Computer Design/performance
• Hierarchy

Computer Architectures: An Overview

Contents

Articles: Microarchitecture, x86, PowerPC, IBM POWER, MIPS architecture, SPARC, ARM architecture, DEC Alpha, AlphaStation, AlphaServer, Very long instruction word, Instruction-level parallelism, Explicitly parallel instruction computing

Microarchitecture

In computer engineering, microarchitecture (sometimes abbreviated to µarch or uarch), also called computer organization, is the way a given instruction set architecture (ISA) is implemented on a processor. A given ISA may be implemented with different microarchitectures.[1] Implementations might vary due to different goals of a given design or due to shifts in technology.[2] Computer architecture is the combination of microarchitecture and instruction set design.

Relation to instruction set architecture

The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the execution model, processor registers, and address and data formats, among other things. The microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA.

[Figure: Intel Core microarchitecture]

The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be everything from single gates and registers to complete arithmetic logic units (ALUs) and even larger elements.

Improving the Precise Interrupt Mechanism of Software-Managed TLB Miss Handlers

Aamer Jaleel and Bruce Jacob, Electrical & Computer Engineering, University of Maryland at College Park, {ajaleel,blj}@eng.umd.edu

Abstract. The effects of the general-purpose precise interrupt mechanisms in use for the past few decades have received very little attention. When modern out-of-order processors handle interrupts precisely, they typically begin by flushing the pipeline to make the CPU available to execute handler instructions. In doing so, the CPU ends up flushing many instructions that have been brought in to the reorder buffer. In particular, many of these instructions have reached a very deep stage in the pipeline, representing significant work that is wasted. In addition, an overhead of several cycles can be expected in re-fetching and re-executing these instructions. This paper concentrates on improving the performance of precisely handling software-managed translation lookaside buffer (TLB) interrupts, one of the most frequently occurring interrupts. This paper presents a novel method of in-lining the interrupt handler within the reorder buffer. Since the first-level interrupt handlers of TLBs are usually small, they could potentially fit in the reorder buffer along with the user-level code already there. In doing so, the instructions that would otherwise be flushed from the pipe need not be re-fetched and re-executed. Additionally, it allows for instructions independent of the exceptional instruction to continue to execute in parallel with the handler code. We simulate two different schemes of in-lining the interrupt on a processor with a 4-way out-of-order core similar to the Alpha 21264.

VAX VMS at 20

1977–1997... and beyond

Nothing Stops It!

Of all the winning attributes of the OpenVMS operating system, perhaps its key success factor is its evolutionary spirit. Some would say OpenVMS was revolutionary. But I would prefer to call it evolutionary because its transition has been peaceful and constructive. Over a 20-year period, OpenVMS has experienced evolution in five arenas. First, it evolved from a system running on some 20 printed circuit boards to a single chip. Second, it evolved from being proprietary to open. Third, it evolved from running on CISC-based VAX to RISC-based Alpha systems. Fourth, VMS evolved from being primarily a technical operating system, to a commercial operating system, to a high availability mission-critical commercial operating system. And fifth, VMS evolved from time-sharing to a workstation environment, to a client/server computing style environment.

The hardware has experienced a similar evolution. Just as the 16-bit PDP systems laid the groundwork for the VAX platform, VAX laid the groundwork for Alpha, the industry's leading 64-bit systems. While the platforms have grown and changed, the success continues. Today, OpenVMS is the most flexible and adaptable operating system on the planet. What started out as the concept of 'Starlet' in 1975 is moving into 'Galaxy' for the 21st century. And like the universe, there is no end in sight.

Jesse Lipcon, Vice President of UNIX and OpenVMS Systems Business Unit

TABLE OF CONTENTS
CHAPTER I: Changing the Face of Computing
CHAPTER II: Setting the Stage

The AlphaServer 4100 Cached Processor Module Maurice B

Digital Technical Journal

ALPHASERVER 4100 SYSTEM
ORACLE AND SYBASE DATABASE PRODUCTS FOR VLM
INSTRUCTION EXECUTION ON ALPHA PROCESSORS

Editorial
Jane C. Blake, Managing Editor
Kathleen M. Stetson, Editor
Helen L. Patterson, Editor

Circulation
Catherine M. Phillips, Administrator
Dorothea B. Cassady, Secretary

Production
Christa W. Jessico, Production Editor
Anne S. Katzeff, Typographer
Peter R. Woodbury, Illustrator

Advisory Board

The Digital Technical Journal is a refereed journal published quarterly by Digital Equipment Corporation, 50 Nagog Park, AK02-3/B3, Acton, MA 01720-9843. Hard-copy subscriptions can be ordered by sending a check in U.S. funds (made payable to Digital Equipment Corporation) to the published-by address. General subscription rates are $40.00 (non-U.S. $60) for four issues and $75.00 (non-U.S. $115) for eight issues. University and college professors and Ph.D. students in the electrical engineering and computer science fields receive complimentary subscriptions upon request.

The following are trademarks of Digital Equipment Corporation: AlphaServer, AlphaStation, DEC, DECnet, DIGITAL, the DIGITAL logo, VAX, VMS, and ULTRIX. AIM is a trademark of AIM Technology, Inc. CCT is a registered trademark of Cooper and Chyan Technologies, Inc. CHALLENGE and Silicon Graphics are registered trademarks and POWER CHALLENGE is a trademark of Silicon Graphics, Inc. Compaq is a registered trademark and ProLiant is a trademark of Compaq Computer Corporation. HP is a registered trademark of Hewlett-Packard

Zarka Cvetanovic and R.E. Kessler Compaq Computer Corporation

PERFORMANCE ANALYSIS OF THE ALPHA 21264-BASED COMPAQ ES40 SYSTEM

Zarka Cvetanovic and R.E. Kessler, Compaq Computer Corporation

Abstract

This paper evaluates performance characteristics of the Compaq ES40 shared memory multiprocessor. The ES40 system contains up to four Alpha 21264 CPUs together with a high-performance memory system. We qualitatively describe architectural features included in the 21264 microprocessor and the surrounding system chipset. We further quantitatively show the performance effects of these features using benchmark results and profiling data collected from industry-standard commercial and technical workloads. The profile data includes basic performance information (such as instructions per cycle, branch mispredicts, and cache misses) as well as other data that specifically characterizes the 21264. Wherever possible, we compare and contrast the ES40 to the AlphaServer 4100, a previous-generation Alpha system containing four Alpha 21164 microprocessors, to highlight the architectural advances in the ES40. We find that the Compaq ES40 often provides 2 to 3 times the performance of the AlphaServer 4100 at similar clock frequencies. We also find that the ES40 memory system has about five times the memory bandwidth of the 4100. These performance improvements come from numerous microprocessor and platform enhancements, including out-of-order execution, branch prediction, functional units, and the memory system.

[Figure 1 - SPEC95 Comparison. Chart title: SPEC95 1-CPU. X-axis groups: SPECint95, SPECfp95. Systems: Compaq ES40/21264 667MHz, HP PA-8500 440MHz, IBM Power3 375MHz, SUN USPARC-II 450MHz, Intel Pentium-III 800MHz.]

[Chart: SPECfp_rate95. Systems: Compaq ES40/21264 667MHz, HP PA-8500 440MHz, SUN USparc-II 400MHz, Intel Pentium-III 800MHz.]

1. INTRODUCTION

The Compaq ES40 is a shared memory multiprocessor containing up to four third-generation Alpha 21264 microprocessors [1][2].

Microprocessor Evaluations for Safety-Critical, Real-Time Applications: Authority for Expenditure No. 43 Phase 3 Report

DOT/FAA/AR-08/55

Air Traffic Organization Operations Planning, Office of Aviation Research and Development, Washington, DC 20591

Microprocessor Evaluations for Safety-Critical, Real-Time Applications: Authority for Expenditure No. 43 Phase 3 Report

February 2009

Final Report

This document is available to the U.S. public through the National Technical Information Services (NTIS), Springfield, Virginia 22161.

U.S. Department of Transportation, Federal Aviation Administration

NOTICE

This document is disseminated under the sponsorship of the U.S. Department of Transportation in the interest of information exchange. The United States Government assumes no liability for the contents or use thereof. The United States Government does not endorse products or manufacturers. Trade or manufacturer's names appear herein solely because they are considered essential to the objective of this report. This document does not constitute FAA certification policy. Consult your local FAA aircraft certification office as to its use.

This report is available at the Federal Aviation Administration William J. Hughes Technical Center's Full-Text Technical Reports page: actlibrary.act.faa.gov in Adobe Acrobat portable document format (PDF).

Technical Report Documentation Page

1. Report No.: DOT/FAA/AR-08/55
2. Government Accession No.:
3. Recipient's Catalog No.:
4. Title and Subtitle: MICROPROCESSOR EVALUATIONS FOR SAFETY-CRITICAL, REAL-TIME APPLICATIONS: AUTHORITY FOR EXPENDITURE NO. 43 PHASE 3 REPORT
5. Report Date: February 2009
6. Performing Organization Code:
7. Author(s): Rabi N. Mahapatra, Praveen Bhojwani, Jason Lee, and Yoonjin Kim
8. Performing Organization Report No.: TAMU-CS-AVSI-72005
9. Performing Organization Name and Address:
10. Work Unit No.:

AlphaPC 264DP Technical Reference Manual (Preliminary)

Order Number: EC–RB0DA–TE
Revision/Update Information: This is a new document.

Preliminary

Compaq Computer Corporation

February 1999

The information in this publication is subject to change without notice.

COMPAQ COMPUTER CORPORATION SHALL NOT BE LIABLE FOR TECHNICAL OR EDITORIAL ERRORS OR OMISSIONS CONTAINED HEREIN, NOR FOR INCIDENTAL OR CONSEQUENTIAL DAMAGES RESULTING FROM THE FURNISHING, PERFORMANCE, OR USE OF THIS MATERIAL. THIS INFORMATION IS PROVIDED "AS IS" AND COMPAQ COMPUTER CORPORATION DISCLAIMS ANY WARRANTIES, EXPRESS, IMPLIED OR STATUTORY AND EXPRESSLY DISCLAIMS THE IMPLIED WARRANTIES OF MERCHANTABILITY, FITNESS FOR PARTICULAR PURPOSE, GOOD TITLE AND AGAINST INFRINGEMENT.

This publication contains information protected by copyright. No part of this publication may be photocopied or reproduced in any form without prior written consent from Compaq Computer Corporation.

©1999 Digital Equipment Corporation. All rights reserved. Printed in U.S.A.

COMPAQ, the Compaq logo, the Digital logo, and DIGITAL Registered in U.S. Patent and Trademark Office. AlphaPC, DECchip, and Tru64 are trademarks of Compaq Computer Corporation. Intel is a registered trademark of Intel Corporation. Microsoft, Visual C++, and Windows NT are registered trademarks of Microsoft Corporation. Other product names mentioned herein may be the trademarks of their respective companies.

12 February 1999 – Subject to Change

Contents

1 AlphaPC 264DP Introduction
1.1 System Components and Features
1.1.1 Memory Subsystem
1.1.2 21272 Core Logic Chipset
1.1.3 CPU Daughtercard
1.1.3.1 Level 2 Cache Subsystem Overview
1.1.3.2 21264 DC-to-DC Converter

The Computer History Simulation Project

The Computer History Simulation Project is a loose Internet-based collective of people interested in restoring historically significant computer hardware and software systems by simulation. The goal of the project is to create highly portable system simulators and to publish them as freeware on the Internet, with freely available copies of significant or representative software.

Simulators

SIMH is a highly portable, multi-system simulator.

● Download the latest sources for SIMH (V3.5-1 updated 15-Oct-2005 - see change log).
● Download a zip file containing Windows executables for all the SIMH simulators. The VAX and PDP-11 are compiled without Ethernet support. Versions with Ethernet support are available here. If you download the executables, you should download the source archive as well, as it contains the documentation and other supporting files.
● If your host system is Alpha/VMS, and you want Ethernet support, you need to download the VMS Pcap library and execlet here.

SIMH implements simulators for:

● Data General Nova, Eclipse
● Digital Equipment Corporation PDP-1, PDP-4, PDP-7, PDP-8, PDP-9, PDP-10, PDP-11, PDP-15, VAX
● GRI Corporation GRI-909
● IBM 1401, 1620, 1130, System 3
● Interdata (Perkin-Elmer) 16b and 32b systems
● Hewlett-Packard 2116, 2100, 21MX
● Honeywell H316/H516
● MITS Altair 8800, with both 8080 and Z80
● Royal-McBee LGP-30, LGP-21
● Scientific Data Systems SDS 940

Also available is a collection of tools for manipulating simulator file formats and for cross-assembling code for the PDP-1, PDP-7, PDP-8, and PDP-11.

Appendix C: A Survey of RISC Architectures for Desktop, Server, and Embedded Computers

C.1 Introduction
C.2 Addressing Modes and Instruction Formats
C.3 Instructions: The MIPS Core Subset
C.4 Instructions: Multimedia Extensions of the Desktop/Server RISCs
C.5 Instructions: Digital Signal-Processing Extensions of the Embedded RISCs
C.6 Instructions: Common Extensions to MIPS Core
C.7 Instructions Unique to MIPS64
C.8 Instructions Unique to Alpha
C.9 Instructions Unique to SPARC v.9
C.10 Instructions Unique to PowerPC
C.11 Instructions Unique to PA-RISC 2.0
C.12 Instructions Unique to ARM
C.13 Instructions Unique to Thumb
C.14 Instructions Unique to SuperH
C.15 Instructions Unique to M32R
C.16 Instructions Unique to MIPS16
C.17 Concluding Remarks
C.18 Acknowledgments
References

RISC: any computer announced after 1985.
Steven Przybylski, A Designer of the Stanford MIPS

© 2003 Elsevier Science (USA). All rights reserved.

C.1 Introduction

We cover two groups of reduced instruction set computer (RISC) architectures in this appendix. The first group is the desktop and server RISCs:

• Digital Alpha
• Hewlett-Packard PA-RISC
• IBM and Motorola PowerPC
• Silicon Graphics MIPS
• Sun Microsystems SPARC

The second group is the embedded RISCs:

• Advanced RISC Machines ARM
• Advanced RISC Machines Thumb
• Hitachi SuperH
• Mitsubishi M32R
• Silicon Graphics MIPS16

There has never been another class of computers so similar.