Chapter 1: The Euclidean Space

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Swimming in Spacetime: Motion by Cyclic Changes in Body Shape

RESEARCH ARTICLE. Swimming in Spacetime: Motion by Cyclic Changes in Body Shape. Jack Wisdom.

Cyclic changes in the shape of a quasi-rigid body on a curved manifold can lead to net translation and/or rotation of the body. The amount of translation depends on the intrinsic curvature of the manifold. Presuming spacetime is a curved manifold as portrayed by general relativity, translation in space can be accomplished simply by cyclic changes in the shape of a body, without any external forces.

The motion of a swimmer at low Reynolds number is determined by the geometry of the sequence of shapes that the swimmer assumes (1). [...] of cyclic changes in their shape. Then, presuming spacetime is a curved manifold as portrayed by general relativity, I show that net translations [...]

[...]tation of the two disks can be accomplished without external torques, for example, by fixing the distance between the centers by a rigid circular arc and then contracting a tension wire symmetrically attached to the outer edge of the two caps. Nevertheless, their contributions to the $\hat{z}$ component of the angular momentum are parallel and add to give a nonzero angular momentum. Other components of the angular momentum are zero. The total angular momentum of the system is zero, so the angular momentum due to twisting must be balanced by the angular momentum of the motion of the system around the sphere. A net rotation of the system can be accomplished by taking the internal configuration of the system through a cycle. A cycle may be accomplished by increasing [...] by Δ[...] while holding [...] fixed, then increasing [...] by [...]

Foundations of Newtonian Dynamics: an Axiomatic Approach For

Foundations of Newtonian Dynamics: An Axiomatic Approach for the Thinking Student. C. J. Papachristou, Department of Physical Sciences, Hellenic Naval Academy, Piraeus 18539, Greece.

Abstract. Despite its apparent simplicity, Newtonian mechanics contains conceptual subtleties that may cause some confusion to the deep-thinking student. These subtleties concern fundamental issues such as, e.g., the number of independent laws needed to formulate the theory, or the distinction between genuine physical laws and derivative theorems. This article attempts to clarify these issues for the benefit of the student by revisiting the foundations of Newtonian dynamics and by proposing a rigorous axiomatic approach to the subject. This theoretical scheme is built upon two fundamental postulates, namely, conservation of momentum and the superposition property for interactions. Newton’s laws, as well as all familiar theorems of mechanics, are shown to follow from these basic principles.

1. Introduction. Teaching introductory mechanics can be a major challenge, especially in a class of students that are not willing to take anything for granted! The problem is that even some of the most prestigious textbooks on the subject may leave the student with some degree of confusion, which manifests itself in questions like the following:
• Is the law of inertia (Newton’s first law) a law of motion (of free bodies) or is it a statement of existence (of inertial reference frames)?
• Are the first two of Newton’s laws independent of each other? It appears that [...]

Vectors, Matrices and Coordinate Transformations

S. Widnall, 16.07 Dynamics, Fall 2009. Lecture notes based on J. Peraire, Version 2.0. Lecture L3 - Vectors, Matrices and Coordinate Transformations.

By using vectors and defining appropriate operations between them, physical laws can often be written in a simple form. Since we will be making extensive use of vectors in Dynamics, we will summarize some of their important properties.

Vectors. For our purposes we will think of a vector as a mathematical representation of a physical entity which has both magnitude and direction in a 3D space. Examples of physical vectors are forces, moments, and velocities. Geometrically, a vector can be represented as an arrow. The length of the arrow represents its magnitude. Unless indicated otherwise, we shall assume that parallel translation does not change a vector, and we shall call the vectors satisfying this property free vectors. Thus, two vectors are equal if and only if they are parallel, point in the same direction, and have equal length. Vectors are usually typed in boldface and scalar quantities appear in lightface italic type, e.g. the vector quantity $\mathbf{A}$ has magnitude, or modulus, $A = |\mathbf{A}|$. In handwritten text, vectors are often expressed using the arrow or underbar notation, e.g. $\vec{A}$, $\underline{A}$.

Vector Algebra. Here, we introduce a few useful operations which are defined for free vectors.

Multiplication by a scalar. If we multiply a vector $\mathbf{A}$ by a scalar $\alpha$, the result is a vector $\mathbf{B} = \alpha\mathbf{A}$, which has magnitude $B = |\alpha| A$. The vector $\mathbf{B}$ is parallel to $\mathbf{A}$ and points in the same direction if $\alpha > 0$.
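The scalar-multiplication rule in the excerpt above can be checked numerically. The following is a minimal Python sketch and is not part of the lecture notes; the helper names `scale` and `magnitude` and the sample vector are assumptions chosen for illustration.

```python
import math

def scale(alpha, vec):
    """Multiply every component of vec by the scalar alpha."""
    return tuple(alpha * c for c in vec)

def magnitude(vec):
    """Euclidean length of a vector given as a tuple of components."""
    return math.sqrt(sum(c * c for c in vec))

# Sample data (assumed for illustration): A has magnitude 5.
A = (3.0, 4.0, 0.0)
alpha = -2.0
B = scale(alpha, A)

# The magnitude of B should equal |alpha| times the magnitude of A,
# whatever the sign of alpha.
print(magnitude(B), abs(alpha) * magnitude(A))
```

A negative `alpha` is used on purpose: the magnitude still scales by `|alpha|`, while the direction is reversed.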

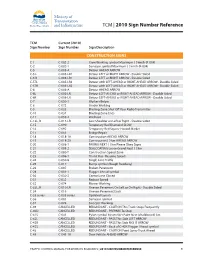

2010 Sign Number Reference for Traffic Control Manual

TCM | 2010 Sign Number Reference

CONSTRUCTION SIGNS

| TCM Sign Number | Current (2010) Sign Number | Sign Description |
| --- | --- | --- |
| C-1 | C-002-2 | Crew Working symbol Maximum ( ) km/h (R-004) |
| C-2 | C-002-1 | Surveyor symbol Maximum ( ) km/h (R-004) |
| C-5 | C-005-A | Detour AHEAD ARROW |
| C-5 L | C-005-LR1 | Detour LEFT or RIGHT ARROW - Double Sided |
| C-5 R | C-005-LR1 | Detour LEFT or RIGHT ARROW - Double Sided |
| C-5TL | C-005-LR2 | Detour with LEFT-AHEAD or RIGHT-AHEAD ARROW - Double Sided |
| C-5TR | C-005-LR2 | Detour with LEFT-AHEAD or RIGHT-AHEAD ARROW - Double Sided |
| C-6 | C-006-A | Detour AHEAD ARROW |
| C-6L | C-006-LR | Detour LEFT-AHEAD or RIGHT-AHEAD ARROW - Double Sided |
| C-6R | C-006-LR | Detour LEFT-AHEAD or RIGHT-AHEAD ARROW - Double Sided |
| C-7 | C-050-1 | Workers Below |
| C-8 | C-072 | Grader Working |
| C-9 | C-033 | Blasting Zone Shut Off Your Radio Transmitter |
| C-10 | C-034 | Blasting Zone Ends |
| C-11 | C-059-2 | Washout |
| C-13L, R | C-013-LR | Low Shoulder on Left or Right - Double Sided |
| C-15 | C-090 | Temporary Red Diamond SLOW |
| C-16 | C-092 | Temporary Red Square Hazard Marker |
| C-17 | C-051 | Bridge Repair |
| C-18 | C-018-1A | Construction AHEAD ARROW |
| C-19 | C-018-2A | Construction ( ) km AHEAD ARROW |
| C-20 | C-008-1 | PAVING NEXT ( ) km Please Obey Signs |
| C-21 | C-008-2 | SEALCOATING Loose Gravel Next ( ) km |
| C-22 | C-080-T | Construction Speed Zone |
| C-23 | C-086-1 | Thank You - Resume Speed |
| C-24 | C-030-8 | Single Lane Traffic |
| C-25 | C-017 | Bump symbol (Rough Roadway) |
| C-26 | C-007 | Broken Pavement |
| C-28 | C-001-1 | Flagger Ahead symbol |
| C-30 | C-030-2 | Centre Lane Closed |
| C-31 | C-032 | Reduce Speed |
| C-32 | C-074 | Mower Working |
| C-33L, R | C-010-LR | Uneven Pavement On Left or On Right - Double Sided |
| C-34 | | |

Phoronomy: Space, Construction, and Mathematizing Motion Marius Stan

To appear in Bennett McNulty (ed.), Kant’s Metaphysical Foundations of Natural Science: A Critical Guide. Cambridge University Press.

Phoronomy: space, construction, and mathematizing motion. Marius Stan.

With his chapter, Phoronomy, Kant defies even the seasoned interpreter of his philosophy of physics. Exegetes have given it little attention, and understandably so: his aims are opaque, his turns in argument little motivated, and his context mysterious, which makes his project there look alienating. I seek to illuminate here some of the darker corners in that chapter. Specifically, I aim to clarify three notions in it: his concepts of velocity, of composite motion, and of the construction required to compose motions.

I defend three theses about Kant. (1) His choice of velocity concept is ultimately insufficient. (2) He sided with the rationalist faction in the early-modern debate on directed quantities. (3) It remains an open question if his algebra of motion is a priori, though he believed it was.

I begin in § 1 by explaining Kant’s notion of phoronomy and its argument structure in his chapter. In § 2, I present four pictures of velocity current in Kant’s century, and I assess the one he chose. My § 3 is in three parts: a historical account of why algebra of motion became a topic of early modern debate; a synopsis of the two sides that emerged then; and a brief account of his contribution to the debate. Finally, § 4 assesses how general his account of composite motion is, and if it counts as a priori knowledge.

Glossary of Linear Algebra Terms

INNER PRODUCT SPACES AND THE GRAM-SCHMIDT PROCESS. A. HAVENS.

1. The Dot Product and Orthogonality

1.1. Review of the Dot Product. We first recall the notion of the dot product, which gives us a familiar example of an inner product structure on the real vector spaces $\mathbb{R}^n$. This product is connected to the Euclidean geometry of $\mathbb{R}^n$, via lengths and angles measured in $\mathbb{R}^n$. Later, we will introduce inner product spaces in general, and use their structure to define general notions of length and angle on other vector spaces.

Definition 1.1. The dot product of real $n$-vectors in the Euclidean vector space $\mathbb{R}^n$ is the scalar product $\cdot : \mathbb{R}^n \times \mathbb{R}^n \to \mathbb{R}$ given by the rule

$$(\mathbf{u}, \mathbf{v}) = \left( \sum_{i=1}^{n} u_i \mathbf{e}_i,\ \sum_{i=1}^{n} v_i \mathbf{e}_i \right) \mapsto \sum_{i=1}^{n} u_i v_i .$$

Here $B_S := (\mathbf{e}_1, \ldots, \mathbf{e}_n)$ is the standard basis of $\mathbb{R}^n$. With respect to our conventions on basis and matrix multiplication, we may also express the dot product as the matrix-vector product

$$\mathbf{u}^t \mathbf{v} = \begin{bmatrix} u_1 & \cdots & u_n \end{bmatrix} \begin{bmatrix} v_1 \\ \vdots \\ v_n \end{bmatrix} .$$

It is a good exercise to verify the following proposition.

Proposition 1.1. Let $\mathbf{u}, \mathbf{v}, \mathbf{w} \in \mathbb{R}^n$ be any real $n$-vectors, and $s, t \in \mathbb{R}$ be any scalars. The Euclidean dot product $(\mathbf{u}, \mathbf{v}) \mapsto \mathbf{u} \cdot \mathbf{v}$ satisfies the following properties.
(i.) The dot product is symmetric: $\mathbf{u} \cdot \mathbf{v} = \mathbf{v} \cdot \mathbf{u}$.
(ii.) The dot product is bilinear:
• $(s\mathbf{u}) \cdot \mathbf{v} = s(\mathbf{u} \cdot \mathbf{v}) = \mathbf{u} \cdot (s\mathbf{v})$,
• $(\mathbf{u} + \mathbf{v}) \cdot \mathbf{w} = \mathbf{u} \cdot \mathbf{w} + \mathbf{v} \cdot \mathbf{w}$.
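The symmetry and bilinearity properties listed in the proposition above can be spot-checked on concrete vectors. This is a minimal Python sketch under assumed sample data, not a proof and not taken from the notes; the helpers `dot`, `add`, and `scale` are hypothetical names introduced here.

```python
def dot(u, v):
    """Sum of componentwise products of two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    return sum(ui * vi for ui, vi in zip(u, v))

def add(u, v):
    """Componentwise sum of two vectors."""
    return tuple(ui + vi for ui, vi in zip(u, v))

def scale(s, u):
    """Multiply each component of u by the scalar s."""
    return tuple(s * ui for ui in u)

# Integer sample vectors (assumed) so the comparisons are exact.
u, v, w = (1, 2, 3), (4, -5, 6), (-2, 0, 7)
s = 3

symmetric = dot(u, v) == dot(v, u)
homogeneous = dot(scale(s, u), v) == s * dot(u, v) == dot(u, scale(s, v))
additive = dot(add(u, v), w) == dot(u, w) + dot(v, w)
print(symmetric, homogeneous, additive)
```

Integers are used so that the equalities hold exactly; with floating-point components a tolerance-based comparison would be the safer choice.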

The Dot Product

The Dot Product

In this section, we will concentrate on the vector operation called the dot product. The dot product of two vectors produces a scalar instead of a vector, unlike the other operations that we examined in the previous section. The dot product is equal to the sum of the product of the horizontal components and the product of the vertical components. If v = a1 i + b1 j and w = a2 i + b2 j are vectors, then their dot product is given by:

v · w = a1 a2 + b1 b2

Properties of the Dot Product. If u, v, and w are vectors and c is a scalar, then:
• u · v = v · u
• u · (v + w) = u · v + u · w
• 0 · v = 0
• v · v = ||v||^2
• (cu) · v = c(u · v) = u · (cv)

Example 1: If v = 5i + 2j and w = 3i – 7j, find v · w.
Solution: v · w = a1 a2 + b1 b2 = (5)(3) + (2)(–7) = 15 – 14 = 1

Example 2: If u = –i + 3j, v = 7i – 4j and w = 2i + j, find (3u) · (v + w).
Solution:
Find 3u: 3u = 3(–i + 3j) = –3i + 9j
Find v + w: v + w = (7i – 4j) + (2i + j) = (7 + 2) i + (–4 + 1) j = 9i – 3j
Find the dot product of (3u) and (v + w): (3u) · (v + w) = (–3i + 9j) · (9i – 3j) = (–3)(9) + (9)(–3) = –27 – 27 = –54

An alternate formula for the dot product is available by using the angle between the two vectors.
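The two worked examples above can be reproduced in a few lines. This is a small Python sketch, assuming a plane vector a i + b j is stored as the tuple `(a, b)`; the function name `dot` is an illustrative choice, not something from the source.

```python
import math

def dot(v, w):
    """Dot product of two plane vectors stored as (i-component, j-component)."""
    return v[0] * w[0] + v[1] * w[1]

# Example 1: v = 5i + 2j, w = 3i - 7j
example1 = dot((5, 2), (3, -7))

# Example 2: u = -i + 3j, v = 7i - 4j, w = 2i + j; compute (3u) . (v + w)
u, v, w = (-1, 3), (7, -4), (2, 1)
three_u = (3 * u[0], 3 * u[1])
v_plus_w = (v[0] + w[0], v[1] + w[1])
example2 = dot(three_u, v_plus_w)

print(example1)  # 1
print(example2)  # -54

# Property check: v . v equals the squared length of v (up to rounding).
print(math.isclose(dot(v, v), math.hypot(*v) ** 2))
```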

Calculus Terminology

AP Calculus BC: Calculus Terminology

Absolute Convergence; Absolute Maximum; Absolute Minimum; Absolutely Convergent; Acceleration; Alternating Series; Alternating Series Remainder; Alternating Series Test; Analytic Methods; Annulus; Antiderivative of a Function; Approximation by Differentials; Arc Length of a Curve; Area below a Curve; Area between Curves; Area of an Ellipse; Area of a Parabolic Segment; Area under a Curve; Area Using Parametric Equations; Area Using Polar Coordinates; Asymptote; Average Rate of Change; Average Value of a Function; Axis of Rotation; Boundary Value Problem; Bounded Function; Bounded Sequence; Bounds of Integration; Calculus; Cartesian Form; Cavalieri’s Principle; Center of Mass Formula; Centroid; Chain Rule; Comparison Test; Concave; Concave Down; Concave Up; Conditional Convergence; Constant Term; Continued Sum; Continuous Function; Continuously Differentiable Function; Converge; Converge Absolutely; Converge Conditionally; Convergence Tests; Convergent Sequence; Convergent Series; Critical Number; Critical Point; Critical Value; Curly d; Curve; Curve Sketching; Cusp; Cylindrical Shell Method; Decreasing Function; Definite Integral; Definite Integral Rules; Degenerate; Del Operator; Deleted Neighborhood; Derivative; Derivative of a Power Series; Derivative Rules; Difference Quotient; Differentiable; Differential; [...] Divergent Series; e; Ellipsoid; End Behavior; Essential Discontinuity; Explicit Differentiation; Explicit Function; Exponential Decay; [...] Function Operations; Fundamental Theorem of Calculus; GLB; Global Maximum; Global Minimum; Golden Spiral; Graphic Methods; Greatest Lower Bound; [...]

Rotation Matrix - Wikipedia, the Free Encyclopedia

Rotation matrix. From Wikipedia, the free encyclopedia.

In linear algebra, a rotation matrix is a matrix that is used to perform a rotation in Euclidean space. For example, the matrix

$$R = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix}$$

rotates points in the xy-Cartesian plane counterclockwise through an angle θ about the origin of the Cartesian coordinate system. To perform the rotation, the position of each point must be represented by a column vector v, containing the coordinates of the point. A rotated vector is obtained by using the matrix multiplication Rv (see below for details). In two and three dimensions, rotation matrices are among the simplest algebraic descriptions of rotations, and are used extensively for computations in geometry, physics, and computer graphics. Though most applications involve rotations in two or three dimensions, rotation matrices can be defined for n-dimensional space.

Rotation matrices are always square, with real entries. Algebraically, a rotation matrix in n dimensions is an n × n special orthogonal matrix, i.e. an orthogonal matrix whose determinant is 1: $R^T = R^{-1}$, $\det R = 1$. The set of all rotation matrices forms a group, known as the rotation group or the special orthogonal group. It is a subset of the orthogonal group, which includes reflections and consists of all orthogonal matrices with determinant 1 or -1, and of the special linear group, which includes all volume-preserving transformations and consists of matrices with determinant 1.

Contents: 1 Rotations in two dimensions; 1.1 Non-standard orientation
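To make the two-dimensional case concrete, the sketch below builds the standard counterclockwise rotation matrix with rows (cos θ, −sin θ) and (sin θ, cos θ), applies it to a point, and checks that its determinant is 1. It is an illustrative Python sketch only; the names `rotation_2d`, `apply`, and `det_2d` are assumptions introduced here, and matrices are plain nested lists.

```python
import math

def rotation_2d(theta):
    """2x2 matrix rotating the plane counterclockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s],
            [s, c]]

def apply(matrix, point):
    """Multiply a 2x2 matrix by a point treated as a column vector."""
    x, y = point
    return (matrix[0][0] * x + matrix[0][1] * y,
            matrix[1][0] * x + matrix[1][1] * y)

def det_2d(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

# Rotating the unit vector along x by a quarter turn should give,
# up to rounding error, the unit vector along y.
R = rotation_2d(math.pi / 2)
rotated = apply(R, (1.0, 0.0))
print(rotated, det_2d(R))
```

Because of floating-point rounding, the first coordinate of `rotated` is a tiny number rather than exactly zero, so comparisons should use a tolerance.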

1 Sets and Set Notation. Definition 1 (Naive Definition of a Set)

LINEAR ALGEBRA, MATH 2700.006, SPRING 2013 (COHEN). LECTURE NOTES.

1 Sets and Set Notation.

Definition 1 (Naive Definition of a Set). A set is any collection of objects, called the elements of that set. We will most often name sets using capital letters, like A, B, X, Y, etc., while the elements of a set will usually be given lower-case letters, like x, y, z, v, etc. Two sets X and Y are called equal if X and Y consist of exactly the same elements. In this case we write X = Y.

Example 1 (Examples of Sets).
(1) Let X be the collection of all integers greater than or equal to 5 and strictly less than 10. Then X is a set, and we may write: X = {5, 6, 7, 8, 9}. The above notation is an example of a set being described explicitly, i.e. just by listing out all of its elements. The set brackets {···} indicate that we are talking about a set and not a number, sequence, or other mathematical object.
(2) Let E be the set of all even natural numbers. We may write: E = {0, 2, 4, 6, 8, ...}. This is an example of an explicitly described set with infinitely many elements. The ellipsis (...) in the above notation is used somewhat informally, but in this case its meaning, that we should "continue counting forever," is clear from the context.
(3) Let Y be the collection of all real numbers greater than or equal to 5 and strictly less than 10. Recalling notation from previous math courses, we may write: Y = [5, 10). This is an example of using interval notation to describe a set.
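The three kinds of set description in the example above (explicit listing, an infinite listing, and an interval) each have a rough counterpart in code. The following Python sketch is an illustration under stated assumptions, not part of the lecture notes: the infinite set E is modelled as a lazy generator, and the interval Y as a membership test, since neither can be stored element by element. The names `evens` and `in_Y` are hypothetical.

```python
from itertools import count, islice

# (1) A finite set listed explicitly: integers with 5 <= x < 10.
X = set(range(5, 10))

# Two sets are equal when they have exactly the same elements;
# the order in which elements are listed does not matter.
same = X == {9, 8, 7, 6, 5}

# (2) The even natural numbers: infinite, so generated on demand.
def evens():
    """Yield 0, 2, 4, ... without end."""
    return count(0, 2)

first_evens = list(islice(evens(), 5))

# (3) The interval [5, 10): described by a membership test.
def in_Y(x):
    """True when x lies in the half-open interval [5, 10)."""
    return 5 <= x < 10

print(X, same, first_evens, in_Y(5), in_Y(10))
```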

THE NUMBER of ⊕-SIGN TYPES Jian-Yi Shi Department Of

THE NUMBER OF ⊕-SIGN TYPES. Jian-yi Shi, Department of Mathematics, East China Normal University, Shanghai, 200062, P.R.C.

In [6], the author introduced the concept of a sign type indexed by the set $\{(i, j) \mid 1 \le i, j \le n,\ i \ne j\}$ for the description of the cells in the affine Weyl group of type $\widetilde{A}_\ell$ (in the sense of Kazhdan and Lusztig [3]). Later, the author generalized it by defining a sign type indexed by any root system [8]. Since then, sign types have played a more and more important role in the study of the cells of any irreducible affine Weyl group [1],[2],[5],[8],[9],[10],[12]. Thus it is worthwhile to study sign types themselves. One task is to enumerate various kinds of sign types. In [8], the author verified Carter’s conjecture on the number of all sign types indexed by any irreducible root system. In the present paper, we consider a special kind of sign type, called ⊕-sign types. We show that these sign types are in one-to-one correspondence with the increasing subsets of the related positive root system as a partially ordered set. Thus we get the number of all the ⊕-sign types indexed by any irreducible positive root system $\Phi^+$ by enumerating all the increasing subsets of $\Phi^+$.

Key words and phrases. ⊕-sign types, increasing subsets, Young diagram.

Supported by the National Science Foundation of China and by the Science Foundation of the University Doctorial Program of CNEC.

Basics of Linear Algebra

Basics of Linear Algebra. Jos and Sophia.

Vectors
● Linear Algebra Definition: A list of numbers with a magnitude and a direction.
  ○ Magnitude: a = [4,3], |a| = sqrt(4^2 + 3^2) = 5
  ○ Direction: the angle at which the vector points
● Computer Science Definition: A list of numbers.
  ○ Example: Heights = [60, 68, 72, 67]

Dot Product of Vectors
Formula: a · b = |a| × |b| × cos(θ)
● Definition: Multiplication of two vectors which results in a scalar value
● In the diagram:
  ○ |a| is the magnitude (length) of vector a
  ○ |b| is the magnitude of vector b
  ○ θ is the angle between a and b

Matrix
● Definition:
  ○ a) A matrix is an arrangement of numbers into rows and columns.
  ○ b) A matrix is an m × n array of scalars from a given field F.
● Matrix elements: the individual values in the matrix are called entries.
● Matrix dimensions: the number of rows and columns of the matrix, in that order.

Multiplication of Matrices
● The multiplication of two matrices
● Result matrix dimensions
  ○ Notation: (Row, Column)
  ○ The number of columns of the 1st matrix must equal the number of rows of the 2nd matrix
  ○ The result matrix has as many rows as the 1st matrix and as many columns as the 2nd matrix
  ○ Ex. 3 x 4 · 5 x 3
    ■ Dot product does not work
  ○ Ex. 5 x 3 · 3 x 4
    ■ Dot product does work
    ■ Result: 5 x 4
● Example of a dot product: (1, 2, 3) • (7, 9, 11) = 1×7 + 2×9 + 3×11 = 58

Dot Product Application
● Application: Ray tracing program
  ○ Quickly create an image with lower quality
  ○ “Refinement rendering pass” occurs
    ■ Removes the jagged edges
  ○ Dot product used to calculate
    ■ Intersection between a ray and a sphere
    ■ Measure the length to the intersection points
● Application: Forward Propagation
  ○ Input matrix * weighted matrix = prediction matrix
http://immersivemath.com/ila/ch03_dotproduct/ch03.html#fig_dp_ray_tracer

Projections
One important use of dot products is in projections.
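The dimension rule for matrix multiplication stated in the slides above (columns of the first matrix must match rows of the second; the result takes its row count from the first and its column count from the second) can be sketched in plain Python. This is an illustrative sketch rather than the slides' own code; `shape`, `zeros`, and `matmul` are hypothetical helper names, and matrices are assumed to be non-empty nested lists.

```python
def shape(m):
    """Return (rows, columns) of a matrix stored as a list of rows."""
    return (len(m), len(m[0]))

def zeros(rows, cols):
    """Build a rows x cols matrix filled with zeros."""
    return [[0] * cols for _ in range(rows)]

def matmul(a, b):
    """Multiply two matrices, rejecting incompatible dimensions."""
    rows_a, cols_a = shape(a)
    rows_b, cols_b = shape(b)
    if cols_a != rows_b:
        raise ValueError(
            f"cannot multiply {rows_a}x{cols_a} by {rows_b}x{cols_b}"
        )
    # Entry (i, j) is the dot product of row i of a with column j of b.
    return [[sum(a[i][k] * b[k][j] for k in range(cols_a))
             for j in range(cols_b)]
            for i in range(rows_a)]

# 5x3 times 3x4 is allowed and gives a 5x4 result.
product = matmul(zeros(5, 3), zeros(3, 4))
print(shape(product))

# 3x4 times 5x3 is rejected because 4 != 5.
try:
    matmul(zeros(3, 4), zeros(5, 3))
except ValueError as err:
    print("rejected:", err)

# The slide's dot product as a 1x3 row times a 3x1 column.
row_times_col = matmul([[1, 2, 3]], [[7], [9], [11]])
print(row_times_col)
```

Writing the dot product as a 1 x 3 by 3 x 1 product shows that it is the same operation that fills each entry of a matrix product.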