High-Level Synthesis of Control and Memory Intensive Applications

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Digital Systems Modeling Chapter 2 VHDL-Based Design

Digital Systems Modeling, Chapter 2: VHDL-Based Design. Alain Vachoux, Microelectronic Systems Laboratory, [email protected], 2004-2005. Chapter 2: Table of contents ♦ VHDL overview ♦ Synthesis with VHDL ♦ Test bench models & verification techniques. VHDL highlights (1/2) ♦ Hardware description language • Digital hardware systems • Modeling, simulation, synthesis, documentation • IEEE standard 1076 (1987, 1993, 2002) ♦ Originally created for simulation • IEEE standards 1164 (STD_LOGIC) and 1076.4 (VITAL) ♦ Further adapted to synthesis • Language subset • IEEE standards 1076.3 (packages) and 1076.6 (RTL semantics). VHDL highlights (2/2) ♦ Application domain (abstraction levels): Functional -> logic ♦ Modularity • 5 design entities: entity, architecture, package declaration and body, configuration • Separation of interface from implementation • Separate compilation ♦ Strong typing • Every object has a type • Type compatibility checked at compile time ♦ Extensibility: User-defined types ♦ Model of time • Discrete time, integer multiple of some MRT (Minimum Resolvable Time) ♦ Event-driven simulation semantics. VHDL-based design flow [figure; labels: editor (text or graphic), test bench models, VHDL packages, RTL model, logic simulation, logic/RTL synthesis, constraints (area, timing, power), VHDL VITAL standard cell models, gate-level netlist, standard cell library, SDF file, place & route, delay and layout extraction] -

Xilinx Synthesis and Verification Design Guide

Synthesis and Simulation Design Guide 8.1i. Xilinx is disclosing this Document and Intellectual Property (hereinafter “the Design”) to you for use in the development of designs to operate on, or interface with Xilinx FPGAs. Except as stated herein, none of the Design may be copied, reproduced, distributed, republished, downloaded, displayed, posted, or transmitted in any form or by any means including, but not limited to, electronic, mechanical, photocopying, recording, or otherwise, without the prior written consent of Xilinx. Any unauthorized use of the Design may violate copyright laws, trademark laws, the laws of privacy and publicity, and communications regulations and statutes. Xilinx does not assume any liability arising out of the application or use of the Design; nor does Xilinx convey any license under its patents, copyrights, or any rights of others. You are responsible for obtaining any rights you may require for your use or implementation of the Design. Xilinx reserves the right to make changes, at any time, to the Design as deemed desirable in the sole discretion of Xilinx. Xilinx assumes no obligation to correct any errors contained herein or to advise you of any correction if such be made. Xilinx will not assume any liability for the accuracy or correctness of any engineering or technical support or assistance provided to you in connection with the Design. THE DESIGN IS PROVIDED “AS IS” WITH ALL FAULTS, AND THE ENTIRE RISK AS TO ITS FUNCTION AND IMPLEMENTATION IS WITH YOU. YOU ACKNOWLEDGE AND AGREE THAT YOU HAVE NOT RELIED ON ANY ORAL OR WRITTEN INFORMATION OR ADVICE, WHETHER GIVEN BY XILINX, OR ITS AGENTS OR EMPLOYEES. -

Publication Title 1-1962

Columns in the source file: publication_title, print_identifier, online_identifier, publisher_name, date_monograph_published_print. Each row carries a single identifier.

| publication_title | print/online identifier | publisher_name | date_monograph_published_print |
| --- | --- | --- | --- |
| 1-1962 - AIEE General Principles Upon Which Temperature Limits Are Based in the rating of Electric Equipment | 978-1-5044-0149-4 | IEEE | 1962 |
| 1-1969 - IEEE General Principles for Temperature Limits in the Rating of Electric Equipment | 978-1-5044-0150-0 | IEEE | 1968 |
| 1-1986 - IEEE Standard General Principles for Temperature Limits in the Rating of Electric Equipment and for the Evaluation of Electrical Insulation | 978-0-7381-2985-3 | IEEE | 1986 |
| 1-2000 - IEEE Recommended Practice - General Principles for Temperature Limits in the Rating of Electrical Equipment and for the Evaluation of Electrical Insulation | 978-0-7381-2717-0 | IEEE | 2001 |
| 100-2000 - The Authoritative Dictionary of IEEE Standards Terms, Seventh Edition | 978-0-7381-2601-2 | IEEE | 2000 |
| 1000-1987 - An American National Standard IEEE Standard for Mechanical Core Specifications for Microcomputers | 0-7381-4593-9 | IEEE | 1988 |
| 1000-1987 - IEEE Standard for an 8-Bit Backplane Interface: STEbus | 978-0-7381-2756-9 | IEEE | 1988 |
| 1001-1988 - IEEE Guide for Interfacing Dispersed Storage and Generation Facilities With Electric Utility Systems | 0-7381-4134-8 | IEEE | 1989 |
| 1002-1987 - IEEE Standard Taxonomy for Software Engineering Standards | 0-7381-0399-3 | IEEE | 1987 |
| 1003.0-1995 - Guide to the POSIX(R) Open System Environment (OSE) | 978-0-7381-3138-2 | IEEE | 1994 |
| 1003.1, 2004 Edition - IEEE Standard for Information Technology - Portable Operating System Interface (POSIX(R)) - Base Definitions | 978-0-7381-4040-7 | IEEE | 2004 |
| 1003.1, 2013 - | | | |

DesignCon 2004 VHDL-200X and the Future of VHDL

DesignCon 2004: VHDL-200X and the Future of VHDL. Jim Lewis, SynthWorks, [email protected]; Stephen Bailey, Mentor Graphics Model Technology Group; Erich Marschner, Cadence Design Systems; J. Bhasker, eSilicon Corp.; Peter Ashenden, Ashenden Designs Pty. Ltd. Abstract: VHDL is a critical language for RTL design and is a major component of the $200+ million RTL simulation market. Many users prefer to use VHDL for RTL design as the language continues to provide desired characteristics in design safety, flexibility and maintainability. While VHDL has provided significant value for digital designers since 1987, it has had only one significant language revision, in 1993. It has taken many years for design state-of-practice to catch up to and, in some cases, surpass the capabilities that have been available in VHDL for over 15 years. Last year, the VHDL Analysis and Standardization Group (VASG), which is responsible for the VHDL standard, received clear indication from the VHDL community that it was now time to look at enhancing VHDL. In response to the user community, VASG initiated the VHDL-200x project. VHDL-200x will result in at least two revisions of the VHDL standard. The first revision is planned to be completed next year (2004) and will include a C language interface (VHPI); a collection of high user value enhancements to improve designer productivity and modeling capability; and potential inclusion of assertion-based verification and testbench modeling enhancements. A second revision is planned to follow about two years later. This paper summarizes VHDL-200X enhancements proposed for the first revision of VHDL. -

Introduction

Introduction. © Sudhakar Yalamanchili, Georgia Institute of Technology, 2006. VHDL • What is VHDL? VHSIC (Very High Speed Integrated Circuit) Hardware Description Language, IEEE Standard 1076-1993. History of VHDL • Designed by IBM, Texas Instruments, and Intermetrics as part of the DoD-funded VHSIC program • Standardized by the IEEE in 1987: IEEE 1076-1987 • Enhanced version of the language defined in 1993: IEEE 1076-1993 • Additional standardized packages provide definitions of data types and expressions of timing data – IEEE 1164 (data types) – IEEE 1076.3 (numeric) – IEEE 1076.4 (timing). Traditional vs. Hardware Description Languages • Procedural programming languages provide the how or recipes – for computation – for data manipulation – for execution on a specific hardware model • Hardware description languages describe a system – Systems can be described from many different points of view • Behavior: what does it do? • Structure: what is it composed of? • Functional properties: how do I interface to it? • Physical properties: how fast is it? Usage • Descriptions can be at different levels of abstraction – Switch level: model switching behavior of transistors – Register transfer level: model combinational and sequential logic components – Instruction set architecture level: functional behavior of a microprocessor • Descriptions can be used for – Simulation • Verification, performance evaluation – Synthesis • First step in hardware design. Why do we Describe Systems? • Design Specification – unambiguous definition -

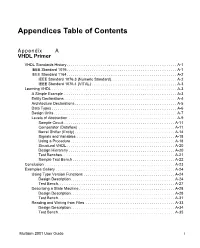

Appendices Table of Contents

Appendices Table of Contents (Multisim 2001 User Guide). Appendix A: VHDL Primer. VHDL Standards History; IEEE Standard 1076; IEEE Standard 1164; IEEE Standard 1076.3 (Numeric Standard); IEEE Standard 1076.4 (VITAL); Learning VHDL; A Simple Example; Entity Declarations; Architecture Declarations; Data Types; Design Units; Levels of Abstraction; Sample Circuit; Comparator (Dataflow); Barrel Shifter (Entity); Signals and Variables; Using a Procedure; Structural VHDL; Design Hierarchy; Test Benches; Sample Test Bench; Conclusion; Examples Gallery; Using Type Version Functions (Design Description, Test Bench); Describing a State Machine (Design Description, Test Bench); Reading and Writing from Files (Design Description, Test Bench). Appendix B.1: Verilog Primer. Introduction; What is Verilog?; What is VeriWell?; Why Use Verilog HDL?; The Verilog Language; A First Verilog Program; Lexical Conventions; Program Structure; Data Types; Physical Data Types; Abstract Data Types; Operators; Binary Arithmetic Operators; Unary Arithmetic Operators; Relational Operators; Logical Operators; Bitwise Operators; Unary Reduction Operators -

GHDL Documentation Release 1.0-Dev

GHDL Documentation, Release 1.0-dev. Tristan Gingold and contributors, Aug 30, 2020. Introduction: 1 What is VHDL?; 2 What is GHDL?; 3 Who uses GHDL?; 4 Contributing (4.1 Reporting bugs; 4.2 Requesting enhancements; 4.3 Improving the documentation; 4.4 Fork, modify and pull-request; 4.5 Related interesting projects); 5 Copyrights | Licenses (5.1 GNU GPLv2; 5.2 CC-BY-SA; 5.3 List of Contributors). Part I, Getting GHDL: 6 Releases and sources (6.1 Using package managers; 6.2 Downloading pre-built packages; 6.3 Downloading Source Files); 7 Building GHDL from Sources (7.1 Directory structure; 7.2 mcode backend; 7.3 LLVM backend; 7.4 GCC backend); 8 Precompile Vendor Primitives (8.1 Supported Vendors Libraries; 8.2 Supported Simulation and Verification Libraries; 8.3 Script Configuration; 8.4 Compiling on Linux -

High-Speed Data Acquisition and Optimal Filtering Based on Programmable Logic for Single-Photoelectron (SPE) Measurement Setup

High-speed data acquisition and optimal filtering based on programmable logic for single-photoelectron (SPE) measurement setup. Experiment #7. Herman P. Lima Jr (CBPF), Rafael Nobrega (UFJF), [email protected], [email protected]. Challenge: `library ieee; use ieee.std_logic_1164.all; entity logica is port (A,B,C : in std_logic; D,E,F : in std_logic; SAIDA : out std_logic); end logica; architecture v_1 of logica is begin SAIDA <= (A and B) or (C and D) or (E and F); end v_1;` References: • Fundamentals of Digital Logic with VHDL Design, Stephen Brown, Zvonko Vranesic, McGraw-Hill, 2000. • The Designer’s Guide to VHDL, Peter Ashenden, 2nd Edition, Morgan Kaufmann, 2002. • VHDL Coding Styles and Methodologies, Ben Cohen, 2nd Edition, Kluwer Academic Publishers, 1999. • Digital Systems Design with VHDL and Synthesis: An Integrated Approach, K. C. Chang, Wiley-IEEE Computer Society Press, 1999. • Application-Specific Integrated Circuits, Michael Smith, Addison-Wesley, 1997. • www.altera.com (datasheets, application notes, reference designs) • www.xilinx.com (datasheets, application notes, reference designs) • www.doulos.com/knowhow/vhdl_designers_guide (The Designer’s Guide to VHDL) • www.acc-eda.com/vhdlref/index.html (VHDL Language Guide) • www.vhdl.org. Background required: digital electronics (logic gates, flip-flops, multiplexers, comparators, counters, ...). Agenda: digital electronics (evolution, current technologies); programmable logic; introduction to VHDL (for synthesis). Digital Electronics: -

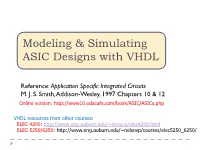

Modeling & Simulating ASIC Designs with VHDL

Modeling & Simulating ASIC Designs with VHDL. Reference: Application Specific Integrated Circuits, M. J. S. Smith, Addison-Wesley, 1997, Chapters 10 & 12. Online version: http://www10.edacafe.com/book/ASIC/ASICs.php. VHDL resources from other courses: ELEC 4200: http://www.eng.auburn.edu/~strouce/elec4200.html; ELEC 5250/6250: http://www.eng.auburn.edu/~nelsovp/courses/elec5250_6250/. Digital ASIC Design Flow [figure; labels: design activity; front-end design; behavioral model (VHDL/Verilog), ELEC 5200/6200; verify function; synthesis; DFT/BIST & ATPG; gate-level netlist; test vectors; back-end design; transistor-level netlist; full-custom IC; standard cell IC & FPGA/CPLD; verify function & timing; physical layout; map/place/route; DRC & LVS verification; verify timing; IC mask data/FPGA configuration file]. ASIC CAD tools available in ECE. Modeling and Simulation: Active-HDL (Aldec); Questa ADMS = Questa+Modelsim+Eldo+ADiT (Mentor Graphics); Verilog-XL, NC_Verilog, Spectre (Cadence). Design Synthesis (digital): Leonardo Spectrum (Mentor Graphics); Design Compiler (Synopsys), RTL Compiler (Cadence); FPGA: Xilinx ISE; CPLD: Altera Quartus II. Design for Test and Automatic Test Pattern Generation: Tessent DFT Advisor, Fastscan, SoCScan (Mentor Graphics). Schematic Capture & Design Integration: Design Architect-IC (Mentor Graphics); Design Framework II (DFII) - Composer (Cadence). Physical Layout: IC Station (Mentor Graphics); SOC Encounter, Virtuoso (Cadence); Xilinx ISE/Altera Quartus II – FPGA/CPLD Synthesis, Map, Place & Route. Design Verification: Calibre DRC, LVS, -

VHDL Primer

VHDL Primer (Multisim User Guide, Electronics Workbench). Appendix A: VHDL Primer. A.1 VHDL Standards History; A.1.1 IEEE Standard 1076; A.1.2 IEEE Standard 1164; A.1.2.1 IEEE Standard 1076.3 (Numeric Standard); A.1.2.2 IEEE Standard 1076.4 (VITAL); A.2 Learning VHDL; A.2.1 A Simple Example; A.2.2 Entity Declarations; A.2.3 Architecture Declarations; A.2.4 Data Types; A.2.5 Design Units; A.2.6 Levels of Abstraction; A.2.6.1 Sample Circuit; A.2.6.2 Comparator (Dataflow); A.2.6.3 Barrel Shifter (Entity); A.2.6.4 Signals and Variables; A.2.6.5 Using a Procedure; A.2.6.6 Structural VHDL; A.2.6.7 Design Hierarchy; A.2.6.8 Test Benches; A.2.6.9 Sample Test Bench; A.3 Conclusion; A.4 Examples Gallery; A.4.1 Using Type Version Functions; A.4.1.1 Design Description; A.4.1.2 Test Bench; A.4.2 Describing a State Machine; A.4.2.1 Design Description; A.4.2.2 Test Bench; A.4.3 Reading and Writing from Files; A.4.3.1 Design Description; A.4.3.2 Test Bench. Appendix A, VHDL Primer: This section provides a solid introduction to programming in VHDL. -

Synthesizable SystemC to VHDL Compiler Design, Rui Chen

Florida State University Libraries, Electronic Theses, Treatises and Dissertations, The Graduate School, 2011. Synthesizable SystemC to VHDL Compiler Design, Rui Chen. Follow this and additional works at the FSU Digital Library. For more information, please contact [email protected]. The Florida State University, College of Engineering. Synthesizable SystemC to VHDL Compiler Design, by Rui Chen. A Thesis submitted to the Department of Electrical and Computer Engineering in partial fulfillment of the requirements for the degree of Master of Science. Degree Awarded: Fall Semester, 2011. Rui Chen defended this thesis on November 3, 2011. The members of the supervisory committee were: Uwe Meyer-Baese, Professor Directing Thesis; Simon Foo, Committee Member; Ming Yu, Committee Member. The Graduate School has verified and approved the above-named committee members, and certifies that the thesis has been approved in accordance with the university requirements. Acknowledgments: As the author of this thesis, I am keenly aware that it represents the fruition of not only my own work, but also the support which other individuals and organizations have lent me over the years, and for which I am profoundly grateful. First, I would like to send my deepest gratitude to my supervisor, Dr. Uwe Meyer-Baese, whose precious guidance, support and encouragement were pivotal in establishing my self-confidence in this endeavor. Also, I would like to thank Dr. Simon Foo and Dr. Ming Yu for spending their valuable time to be my committee members. I would like to thank Dr. Mei Su, my undergraduate advisor, who gave me great confidence and led me to the research fields. -

Final Degree Project (Trabajo Fin de Carrera)

Universidad Politécnica de Madrid, Facultad de Informática. Final degree project: VHDL to SystemC Translator (Traductor de VHDL a SystemC). Author: Eduardo Grande Lobo. Supervisors: Antonio García Dopico, Mariano Hermida de la Rica. To all of you who have helped me and encouraged me to keep going. But above all, to my godmother. Aunt Nieves, I did it! Contents. Chapter I: Introduction: 1 Objectives; 2 Contents. Chapter II: Theoretical Foundations: 1 The VHDL language; 2 The SystemC language (2.1 Introduction; 2.2 The C++ language; 2.3 Features specific to SystemC); 3 Comparison