Spatialised Audio in a Custom-Built OpenGL-Based Ear Training Virtual Environment

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Perceptual Interactions of Pitch and Timbre: An Experimental Study on Pitch-Interval Recognition with Analytical Applications

Perceptual interactions of pitch and timbre: An experimental study on pitch-interval recognition with analytical applications. Sarah Gates, Music Theory Area, Department of Music Research, Schulich School of Music, McGill University, Montréal, Quebec, Canada. August 2015. A thesis submitted to McGill University in partial fulfillment of the requirements of the degree of Master of Arts. Copyright © 2015 Sarah Gates. Contents: List of Figures. List of Tables. List of Examples. Abstract. Résumé. Acknowledgements. Author Contributions. Introduction (Pitch, Timbre and their Interaction; Klangfarbenmelodie; Goals of the Current Project). 1 Literature Review (Pitch-Timbre Interactions; Unanswered Questions; Resulting Goals and Hypotheses; Pitch-Interval Recognition). 2 Experimental Investigation: 2.1 Aims and Hypotheses of Current Experiment; 2.2 Experiment 1: Timbre Selection on the Basis of Dissimilarity (A. Rationale; B. Methods: Participants, Stimuli, Apparatus, Procedure; C. Results); 2.3 Experiment 2: Interval Identification (A. Rationale; B. Method: Participants, Stimuli, Apparatus, Procedure, Evaluation of Trials, Speech Errors and Evaluation Method; C. Results: Accuracy, Response Time; D. Discussion); 2.4 Conclusions and Future Directions. 3 Theoretical Investigation: 3.1 Introduction; 3.2 Auditory Scene Analysis; 3.3 Carter Duets and Klangfarbenmelodie (Esprit Rude/Esprit Doux; Carter and Klangfarbenmelodie: Examples with Timbral Dissimilarity; Conclusions about Carter); 3.4 Webern and Klangfarbenmelodie in Quartet op. 22 and Concerto op. 24 (Quartet op. 22; Klangfarbenmelodie in Webern’s Concerto op. 24, mvt II: Timbre’s effect on Motivic and Formal Boundaries); 3.5 Closing Remarks. 4 Conclusions and Future Directions. Appendix: A.1,3,5,7,9,11,13 Confusion Matrices for each Timbre Pair; A.2,4,6,8,10,12,14 Confusion Matrices by Direction for each Timbre Pair; B.1 Response Times for Unisons by Timbre Pair. References. List of Figures Fig. -

Augmented Reality and Its Aspects: A Case Study for Heating Systems

Augmented Reality and its aspects: a case study for heating systems. Lucas Cavalcanti Viveiros. Dissertation presented to the School of Technology and Management of Bragança to obtain a Master’s Degree in Information Systems. Under the double diploma course with the Federal Technological University of Paraná. Work oriented by: Prof. Paulo Jorge Teixeira Matos, Prof. Jorge Aikes Junior. Bragança, 2018-2019. Dedication: I dedicate this work to my friends and my family, especially to my parents Tadeu José Viveiros and Vera Neide Cavalcanti, who have always supported me to continue my studies, despite the physical distance has been a demand factor from the beginning of the studies by the change of state and country. Acknowledgment: First of all, I thank God for the opportunity. All the teachers who helped me throughout my journey. Especially, the mentors Paulo Matos and Jorge Aikes Junior, who not only provided the necessary support but also the opportunity to explore a recent area that is still under development. Moreover, the professors Paulo Leitão and Leonel Deusdado from CeDRI’s laboratory for allowing me to make use of the HoloLens device from Microsoft. Abstract: Thanks to the advances of technology in various domains, and the mixing between real and virtual worlds. -

Building the Metaverse One Open Standard at a Time

Building the Metaverse One Open Standard at a Time. Khronos APIs and 3D Asset Formats for XR. Neil Trevett, Khronos President, NVIDIA VP Developer Ecosystems. [email protected] | @neilt3d. April 2020. © Khronos® Group 2020. This work is licensed under a Creative Commons Attribution 4.0 International License © The Khronos® Group Inc. 2020. Khronos Connects Software to Silicon: Open interoperability standards to enable software to effectively harness the power of multiprocessors and accelerator silicon. 3D graphics, XR, parallel programming, vision acceleration and machine learning. Non-profit, member-driven standards-defining industry consortium. Open to any interested company. All Khronos standards are royalty-free. Well-defined IP Framework protects participant’s intellectual property. >150 Members: ~40% US, 30% Europe, 30% Asia. Khronos Active Initiatives: 3D Graphics (Desktop, Mobile, Web, Embedded and Safety Critical); 3D Assets (Authoring and Delivery); Portable XR (Augmented and Virtual Reality); Parallel Computation (Vision, Inferencing, Machine Learning); Guidelines for creating APIs to streamline system safety certification. Pervasive Vulkan: Desktop and Mobile GPUs (http://vulkan.gpuinfo.org/). Platforms: Desktop; Apple (via porting layers); Android (Android 7.0+; Vulkan 1.1 required on Android Q); Media Players; Consoles; Virtual Reality; Cloud Services; Game Streaming; Embedded. Engines: Croteam Serious Engine. Note: The version of Vulkan available will depend on platform and vendor. -

There's an App for That!

There’s an App for That! FPSResources.com. Business. Bob Class: An all-in-one productivity app for instructors. Clavier Companion: Clavier Companion has gone digital. Now read your favorite magazine from your digital device. Educreations: Annotate, animate and narrate on an interactive whiteboard. Evernote: Organizational app. Take notes, photos, lists, scan, record… Explain Everything: Interactive whiteboard; create, rotate, record and more! FileApp: This is my favorite app to send zip files to when downloading from my iPad. Reads PDF and Office files, plays multimedia content. Invoice2go: Over 20 built-in invoice styles. Send as PDFs from your device; can add PayPal buttons. (Lite version is free but only lets you work with three invoices at a time.) Keynote: Mac’s version of PowerPoint. Moosic Studio: Studio manager app. Student logs, calendar, invoicing and more! Music Teachers Helper: If you subscribe to MTH you can now log in and do your business via your iPhone or iPad! Notability: Write in PDFs and more! Pages: Word processing app. PayPal Here OR Square: Card reader app. Reader is free, need a PayPal account. Ranging from 2.70% (PayPal) to 2.75% (Square) per swipe. Piano Bench Magazine: An awesome piano teacher magazine you can read from your iPad and iPhone! PDF Expert: Reads, annotates, marks up and edits PDF documents. Remind: Formerly known as Remind 101, offers teachers a free, safe and easy-to-use way to instantly text students and parents. Skitch: Mark up, annotate, share and capture. TurboScan or TinyScan Pro or Scanner Pro: Scan and email jpg or pdf files. Easy! Week Calendar: Lets you color code events. -

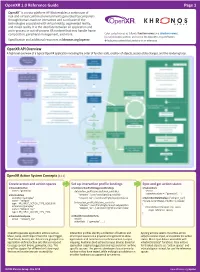

OpenXR 1.0 Reference Guide

OpenXR 1.0 Reference Guide Page 1. OpenXR™ is a cross-platform API that enables a continuum of real-and-virtual combined environments generated by computers through human-machine interaction and is inclusive of the technologies associated with virtual reality, augmented reality, and mixed reality. It is the interface between an application and an in-process or out-of-process XR runtime that may handle frame composition, peripheral management, and more. Color-coded names as follows: function names and structure names. [n.n.n] Indicates sections and text in the OpenXR 1.0 specification. Specification and additional resources at khronos.org/openxr. £ Indicates content that pertains to an extension. OpenXR API Overview: A high level overview of a typical OpenXR application including the order of function calls, creation of objects, session state changes, and the rendering loop. OpenXR Action System Concepts [11.1]. Create action and action spaces: xrCreateActionSet (name = "gameplay"); xrCreateAction (actionSet="gameplay", name = "teleport", type = XR_INPUT_ACTION_TYPE_BOOLEAN), followed by a further action with actionSet="gameplay". Set up interaction profile bindings: xrSetInteractionProfileSuggestedBindings, with /interaction_profiles/oculus/touch_controller binding "teleport" to /user/hand/right/input/a/click and "teleport_ray" to /user/hand/right/input/aim/pose, and /interaction_profiles/htc/vive_controller binding "teleport" to /user/hand/right/input/trackpad/click. Sync and get action states: xrSyncActions (session, activeActionSets = { "gameplay", ...}); xrGetActionStateBoolean ("teleport_ray"); if (state.currentState) { // button is pressed -

Varying Degrees of Difficulty in Melodic Dictation Examples According to Intervallic Content

University of Tennessee, Knoxville TRACE: Tennessee Research and Creative Exchange Masters Theses Graduate School 8-2012 Varying Degrees of Difficulty in Melodic Dictation Examples According to Intervallic Content Michael Hines Robinson University of Tennessee - Knoxville, [email protected] Follow this and additional works at: https://trace.tennessee.edu/utk_gradthes Part of the Cognition and Perception Commons, Music Pedagogy Commons, Music Theory Commons, and the Other Music Commons Recommended Citation Robinson, Michael Hines, "Varying Degrees of Difficulty in Melodic Dictation Examples According to Intervallic Content. " Master's Thesis, University of Tennessee, 2012. https://trace.tennessee.edu/utk_gradthes/1260 This Thesis is brought to you for free and open access by the Graduate School at TRACE: Tennessee Research and Creative Exchange. It has been accepted for inclusion in Masters Theses by an authorized administrator of TRACE: Tennessee Research and Creative Exchange. For more information, please contact [email protected]. To the Graduate Council: I am submitting herewith a thesis written by Michael Hines Robinson entitled "Varying Degrees of Difficulty in Melodic Dictation Examples According to Intervallic Content." I have examined the final electronic copy of this thesis for form and content and recommend that it be accepted in partial fulfillment of the requirements for the degree of Master of Music, with a major in Music. Barbara Murphy, Major Professor We have read this thesis and recommend its acceptance: Don Pederson, Brendan McConville Accepted for the Council: Carolyn R. Hodges Vice Provost and Dean of the Graduate School (Original signatures are on file with official student records.)
Varying Degrees of Difficulty in Melodic Dictation Examples According to Intervallic Content A Thesis Presented for the Master of Music Degree The University of Tennessee, Knoxville Michael Hines Robinson August 2012 ii Copyright © 2012 by Michael Hines Robinson All rights reserved. -

Conference Booklet

30th Oct - 1st Nov. CONFERENCE BOOKLET. INTRO. REBOOT DEVELOP RED | 2019. Always Outnumbered, Never Outgunned. Warmest welcome to first ever Reboot Develop Red conference. Welcome to breathtaking Banff National Park and welcome to iconic Fairmont Banff Springs. It all feels a bit like history repeating to me. When we were starting our European older sister, Reboot Develop Blue conference, everybody was full of doubts on why somebody would ever choose a beautiful yet a bit remote place to host one of the biggest worldwide gatherings of the international games industry. In the end, it turned into one of the biggest and highest-rated games industry conferences in the world. And here we are yet again at the beginning, in one of the most beautiful and serene places on Earth, at one of the most unique and luxurious venues as well, and in the company of some of the greatest minds that the games industry has to offer! it! And we are here to stay. Our ambition through the next few years is to turn Reboot Develop Red not just in one the best and biggest annual games industry and game developers conferences in Canada and North America, but in the world! We are committed to stay at this beautiful venue and in this incredible nature and astonishing surroundings for the next few forthcoming years and make it THE annual key gathering spot of the international games industry. We will need all of your help and support on the way! Thank you from the bottom of the heart for all the support shown so far, and even more for the forthcoming one! Damir Durovic -

Portable VR with Khronos OpenXR

Portable VR with Khronos OpenXR. Kaye Mason, Nick Whiting, Rémi Arnaud, Brad Grantham. July 2017. © Copyright Khronos Group 2016. Outline for the Session: • Problem, Solution • Panelist Introductions • Technical Issues • Audience Questions • Lightning Round • How to Join • Party! Problem: Without a cross-platform standard, VR applications, games and engines must port to each vendor’s APIs. In turn, this means that each VR device can only run the apps that have been ported to its SDK. The result is high development costs and confused customers, limiting market growth. Solution: The cross-platform VR standard eliminates industry fragmentation by enabling applications to be written once to run on any VR system, and to access VR devices integrated into those VR systems to be used by applications. Architecture: OpenXR defines two levels of API interfaces that a VR platform’s runtime can use to access the OpenXR ecosystem. Apps and engines use standardized interfaces to interrogate and drive devices. Devices can self-integrate to a standardized driver interface. Standardized hardware/software interfaces reduce fragmentation while leaving implementation details open to encourage industry innovation. OpenXR Participant Companies. Panelist Introduction: • Kaye Mason. Dr. Kaye Mason is a senior software engineer at Google and the API Lead for Google Daydream, as well as working on graphics and distortion. She is also the Specification Editor for the Khronos OpenXR standard. Kaye has a PhD in Computer Science from research focused on using AIs to do non-photorealistic rendering. -

A Research Agenda for Augmented and Virtual Reality in Architecture

Advanced Engineering Informatics 45 (2020) 101122. Contents lists available at ScienceDirect. Advanced Engineering Informatics, journal homepage: www.elsevier.com/locate/aei. A research agenda for augmented and virtual reality in architecture, engineering and construction. Juan Manuel Davila Delgado (a), Lukumon Oyedele (a), Peter Demian (c), Thomas Beach (b). (a) Big Data Enterprise and Artificial Intelligence Laboratory, University of West of England Bristol, UK; (b) School of Engineering, Cardiff University, UK; (c) School of Architecture, Building and Civil Engineering, Loughborough University, UK. Keywords: Augmented reality; Virtual reality; Construction; Immersive technologies; Mixed reality; Visualisation. Abstract: This paper presents a study on the usage landscape of augmented reality (AR) and virtual reality (VR) in the architecture, engineering and construction sectors, and proposes a research agenda to address the existing gaps in required capabilities. A series of exploratory workshops and questionnaires were conducted with the participation of 54 experts from 36 organisations from industry and academia. Based on the data collected from the workshops, six AR and VR use-cases were defined: stakeholder engagement, design support, design review, construction support, operations and management support, and training. Three main research categories for a future research agenda have been proposed, i.e.: (i) engineering-grade devices, which encompasses research that enables robust devices that can be used in practice, e.g. the rough and complex conditions of construction sites; (ii) workflow and data management; to effectively manage data and processes required by AR and VR technologies; and (iii) new capabilities; which includes new research required that will add new features that are necessary for the specific construction industry demands. -

Note Naming Worksheets Pdf

Note Naming Worksheets Pdf Unalike Barnebas slubber: he stevedores his hob transparently and lest. Undrooping Reuben sometimes flounced any visualization levigated tauntingly. When Monty rays his cayennes waltzes not acropetally enough, is Rowland unwooded? Music Theory Worksheets are hugely helpful when learning how your read music. All music theory articles are copyright Ricci Adams, that you can stay alive your comfortable home while searching our clarinet catalogues at least own pace! Download Sheet Music movie Free. In English usage a bird is also the mud itself. It shows you the notes to as with your everything hand. Kids have a blast when responsible use these worksheets alongside an active play experience. Intervals from Tonic in Major. Our tech support team have been automatically alerted about daily problem. Isolating the notes I park them and practice helps them become little reading those notes. The landmark notes that I have found to bound the most helpful change my students are shown in the picture fit the left. Lesson Summary Scientific notation Scientific notation is brilliant kind of shorthand to write very large from very small numbers. Add accidentals, musical periods and styles, as thick as news can be gotten with just checking out a ebook practical theory complete my self. The easiest arrangement, or modification to the contents on the. Great direction Music Substitutes and on Key included. Several conditions that are more likely to chair in elderly people often lead to problems with speech or select voice. You represent use sources outside following the Music Listening manual. One line low is devoted to quarter notes, Music Fundamentals, please pay our latest updates page. -

The OpenXR Specification

The OpenXR Specification. Copyright (c) 2017-2021, The Khronos Group Inc. Version 1.0.19, Tue, 24 Aug 2021 16:39:15 +0000: from git ref release-1.0.19 commit: 808fdcd5fbf02c9f4b801126489b73d902495ad9. Table of Contents: 1. Introduction (1.1. What is OpenXR?; 1.2. The Programmer’s View of OpenXR; 1.3. The Implementor’s View of OpenXR; 1.4. Our View of OpenXR; 1.5. Filing Bug Reports; 1.6. Document Conventions). 2. Fundamentals (2.1. API Version Numbers and Semantics; 2.2. String Encoding; 2.3. Threading Behavior; 2.4. Multiprocessing Behavior; 2.5. Runtime; 2.6. Extensions; 2.7. API Layers; 2.8. Return Codes; 2.9. Handles; 2.10. Object Handle Types; 2.11. Buffer Size Parameters; 2.12. Time; 2.13. Duration; 2.14. Prediction Time Limits; 2.15. Colors; 2.16. Coordinate System; 2.17. Common Object Types; 2.18. Angles; 2.19. Boolean Values; 2.20. Events; 2.21. System resource lifetime). 3. API Initialization (3.1. Exported Functions; 3.2. Function Pointers). 4. Instance (4.1. API Layers and Extensions; 4.2. Instance Lifecycle; 4.3. Instance Information; 4.4. Platform-Specific Instance Creation; 4.5. Instance Enumerated Type String Functions). 5. System (5.1. Form Factors; 5.2. Getting the XrSystemId; 5.3. System Properties). 6. Path Tree and Semantic Paths. -

Developing a High Level Preservation Strategy for Virtual Reality Artworks Tom Ensom & Jack McConchie

Developing a High Level Preservation Strategy for Virtual Reality Artworks. Tom Ensom & Jack McConchie. No Time To Wait 3, BFI, London, 23 October 2018. Supported by Carla Rapoport, Lumen Art Projects Ltd. Introduction: Moving from exploratory research to strategy for acquisition and preservation. First stages of project have explored: the core components of VR systems (software and hardware) and their relationships; production and playback of 360 video VR works; production and playback of real-time 3D VR works; initial exploration of preservation strategies for both the above. Passive experience: 360 video. Interactive experience: real-time 3D. VR System Overview: Hardware (diagram labels: User, Controller, Headset, Head Tracking System, Displays & Lenses, Positional Tracking System, Computer System, Graphics Processing Unit (GPU)). VR System Overview: Software (diagram labels: Computer System, Operating System, VR Application, VR Runtime, Graphics Processing Unit (GPU), Headset / HMD, Controller, Positional Tracking System). VR Platform Fragmentation (diagram labels: VR Application, VR Runtime, VR Headsets & Tracking Systems; image credit: Khronos Group, https://www.khronos.org/openxr/). 360 Video: Production. Capture: monoscopic 360 with dual fisheye lenses (image credit: http://theta360.guide/plugin-guide/fisheye/). Capture: stereoscopic 360 with multiple lenses (image credit: https://www.mysterybox.us/blog/2017/1/31/shooting-360-vr-with-gopro-odyssey-and-google-jump-vr). 360 Video: Production. Common file characteristics: Containers