Mixed Reality & Microsoft HoloLens

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Samsung Announces New Windows-Based Virtual-Reality Headset at Microsoft Event

Samsung announces new Windows-based virtual-reality headset at Microsoft event. 4 October 2017, by Matt Day, The Seattle Times. Samsung is joining Microsoft's virtual reality push, announcing an immersive headset that pairs with Windows computers. The Korean electronics giant unveiled its Samsung HMD Odyssey at a Microsoft event in San Francisco recently. It will sell for $499. The device joins Windows-based immersive headsets built by Lenovo, HP, Acer and Dell, and aimed for release later this year. Microsoft is among the companies seeking a slice of the emerging market for modern head-mounted devices. High-end headsets, like Facebook-owned Oculus's Rift and the HTC Vive, require powerful Windows PCs to run. Others, including the Samsung Gear VR and Google's Daydream, are aimed at the wider audience of people who use smartphones. Microsoft's vision, for now, is tied to the PC, and specifically new features in the Windows operating system designed to make it easier to build and display immersive environments. The company also has its own hardware, but that hasn't been on display recently. Microsoft's HoloLens was a trailblazer when it was unveiled in 2015. The headset, whose visor shows computer-generated images projected onto objects in the wearer's environment without obscuring the view of the real world completely, was subsequently offered for sale to developers and businesses. […] Microsoft also said that it had acquired AltspaceVR, a California virtual reality software startup that was building social and communications tools until it ran into funding problems earlier this year. ©2017 The Seattle Times. Distributed by Tribune Content Agency, LLC.

Holographic Computer

MECHANICAL ENGINEERING | DECEMBER 2018 | P.33. Bioengineering: Wearable. Holographic Computer. Microsoft’s HoloLens mixed reality headset is a breakthrough in human-computer interaction. Story by Agam Shah; illustration by Zina Saunders. The Kinect camera, which Microsoft released in 2010, brought unprecedented levels of gesture and voice interaction to video games. “When we created Kinect for Xbox 360, we aimed to build a device capable of recognizing and understanding people so that computers could operate in ways that are more human,” said Alex Kipman, technical fellow at Microsoft, who helped invent the camera. Microsoft wanted to build on the success of the Kinect’s sensor technology by parlaying it into a radically new device: a mixed reality headset that would project computer-generated images seemingly into real space. […] that projects the graphics onto a lens. As a user walks around a room or turns his head, the position or orientation of the graphical image is altered so that it appears to the user as occupying a consistent location in space. HoloLens has emerged as the go-to device for engineers to integrate […] headset, Thyssenkrupp is equipping elevator service technicians with HoloLens to visualize and identify problems ahead of a job, and have remote, hands-free access to technical and expert information when onsite. The company says that has helped improve service times. Ford is also using HoloLens, enabling their design and engineering teams to visualize full-scale models in 3-D. They’ve converted processes that used to take weeks down to days, and more easily and securely share ideas across the company and consider more concepts than before. And NASA has provided HoloLens to astronauts on the International Space Station as a holographic instruction manual and a […]
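The excerpt describes the displayed image being re-positioned as the wearer moves or turns, so that it seems fixed in the room. A minimal sketch of that idea follows, assuming a simplified top-down 2D world and a head pose given as a position plus a yaw angle. The function names and the coordinate convention are illustrative assumptions, not HoloLens's actual rendering pipeline.

```python
import math

def world_to_head(point_world, head_pos, head_yaw):
    """Express a world-fixed point in the head frame.

    Assumed convention: top-down 2D, x to the right, y forward,
    head_yaw in radians, counter-clockwise positive.
    """
    dx = point_world[0] - head_pos[0]
    dy = point_world[1] - head_pos[1]
    # Undo the head rotation by rotating the offset through -head_yaw.
    c, s = math.cos(-head_yaw), math.sin(-head_yaw)
    return (c * dx - s * dy, s * dx + c * dy)

def head_to_world(point_head, head_pos, head_yaw):
    """Inverse of world_to_head: map a head-frame point back to the world."""
    c, s = math.cos(head_yaw), math.sin(head_yaw)
    x, y = point_head
    return (head_pos[0] + c * x - s * y, head_pos[1] + s * x + c * y)

# A hologram pinned one metre straight ahead of the starting pose.
hologram = (0.0, 1.0)

# Looking straight ahead from the origin, it is drawn dead centre.
view_a = world_to_head(hologram, head_pos=(0.0, 0.0), head_yaw=0.0)

# After turning the head 90 degrees to the left, the same world point
# has to be drawn to the wearer's right to appear stationary.
view_b = world_to_head(hologram, head_pos=(0.0, 0.0), head_yaw=math.pi / 2)

# Mapping the second view back to the world recovers the pinned location.
recovered = head_to_world(view_b, head_pos=(0.0, 0.0), head_yaw=math.pi / 2)
```

The point of the sketch is that the hologram's world coordinates never change; only its head-relative coordinates are recomputed for each new pose.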

Exploring How Bi-Directional Augmented Reality Gaze Visualisation Influences Co-Located Symmetric Collaboration

ORIGINAL RESEARCH. Published: 14 June 2021. doi: 10.3389/frvir.2021.697367. Eye See What You See: Exploring How Bi-Directional Augmented Reality Gaze Visualisation Influences Co-Located Symmetric Collaboration. Allison Jing*, Kieran May, Gun Lee and Mark Billinghurst. Empathic Computing Lab, Australian Research Centre for Interactive and Virtual Environment, STEM, The University of South Australia, Mawson Lakes, SA, Australia. Edited by: Parinya Punpongsanon, Osaka University, Japan. Reviewed by: Naoya Isoyama, Nara Institute of Science and Technology (NAIST), Japan; Thuong Hoang, Deakin University, Australia. Gaze is one of the predominant communication cues and can provide valuable implicit information such as intention or focus when performing collaborative tasks. However, little research has been done on how virtual gaze cues combining spatial and temporal characteristics impact real-life physical tasks during face to face collaboration. In this study, we explore the effect of showing joint gaze interaction in an Augmented Reality (AR) interface by evaluating three bi-directional collaborative (BDC) gaze visualisations with three levels of gaze behaviours. Using three independent tasks, we found that all BDC visualisations are rated significantly better at representing joint attention and user intention compared to a non-collaborative (NC) condition, and hence are considered more engaging. The Laser Eye condition, spatially embodied with gaze direction, is perceived significantly more effective as it encourages mutual gaze awareness with a relatively low mental effort in a less constrained workspace. In addition, by offering additional virtual representation that compensates for verbal descriptions and hand pointing, BDC gaze visualisations can encourage more conscious use of gaze cues coupled with deictic references during co-located symmetric collaboration.

Innovation in Shared Virtual Spaces for Mental Health Therapy

Innovation in Shared Virtual Spaces for Mental Health Therapy. Jake Backer. Advisor: Professor Soussan Djamasbi. Worcester Polytechnic Institute, May 23, 2021. This report represents the work of one or more WPI undergraduate students submitted to the faculty as evidence of completion of a degree requirement. WPI routinely publishes these reports on the web without editorial or peer review. Contents: 1 Abstract; 2 Introduction; 3 Background (3.1 Mental Health, 3.2 Telehealth, 3.3 Augmented Reality (AR), 3.4 AR Therapy, 3.5 Avatars); 4 Designing the Application (4.1 Infrastructure: 4.1.1 Networking, 4.1.2 Movement, 4.1.3 Audio Communication, 4.1.4 Immersive Experience, 4.1.5 Telemetry); 5 User Studies (5.1 Results, 5.2 Discussion and Future User Studies); 6 Contribution and Future Work; References. 1 Abstract: Rates of mental health disorders among adolescents and younger adults are on the rise, with the lack of widespread access remaining a critical issue. It has been shown that teletherapy, defined as therapy delivered remotely with the use of a phone or computer system, may be a viable option to replace in-person therapy in situations where in-person therapy is not possible. Sponsored by the User Experience and Decision Making (UXDM) lab at WPI, this IQP is part of a larger project to address the need for mental health therapy in situations where patients do not have access to traditional in-person care.

Advanced Displays and Techniques for Telepresence

Advanced Displays and Techniques for Telepresence. Henry Fuchs, UNC Chapel Hill. ARPA-E Telepresence Workshop, April 26, 2016. Coauthors for papers in this talk: Young-Woon Cha, Rohan Chabra, Nate Dierk, Mingsong Dou, Wil Garrett, Gentaro Hirota, Kurtis Keller, Doug Lanman, Mark Livingston, David Luebke, Andrew Maimone, Federico Menozzi, Etta Pisano, MD, Kishore Rathinavel, Andrei State, Eric Wallen, MD, Greg Welch, Mary Whitton, Xubo Yang. [Image: Andrei State (UNC), 1994, Surgical Consultation Telepresence.] Support gratefully acknowledged: CISCO, Microsoft Research, NIH, NVIDIA, NSF Awards IIS-CHS-1423059, HCC-CGV-1319567, II-1405847 (“Seeing the Future: Ubiquitous Computing in EyeGlasses”), and the BeingThere Int’l Research Centre, a collaboration of ETH Zurich, NTU Singapore, UNC Chapel Hill and Singapore National Research Foundation, Media Development Authority, and Interactive Digital Media Program Office. Video Teleconferencing vs Telepresence. Video Teleconferencing: conventional 2D video capture and display; a single camera and single display at each site is the common configuration for Skype, Google Hangout, etc. Telepresence: provides illusion of presence in the remote or combined local & remote space; provides proper stereo views from the precise location of the user; stereo views change appropriately as user moves; provides proper eye contact and eye gaze cues among all the participants. [Images: Cisco TelePresence 3000; three distant rooms combined into a single space with wall-sized 3D displays.] Telepresence Component Technologies: Acquisition (cameras); 3D reconstruction; Cisco

New Realities: Risks in the Virtual World

Emerging Risk Report 2018. Technology. New realities: Risks in the virtual world. Lloyd’s disclaimer: This report has been co-produced by Lloyd's and Amelia Kallman for general information purposes only. While care has been taken in gathering the data and preparing the report Lloyd's does not make any representations or warranties as to its accuracy or completeness and expressly excludes to the maximum extent permitted by law all those that might otherwise be implied. Lloyd's accepts no responsibility or liability for any loss or damage of any nature occasioned to any person as a result of acting or refraining from acting as a result of, or in reliance on, any statement, fact, figure or expression of opinion or belief contained in this report. This report does not constitute advice of any kind. © Lloyd’s 2018. All rights reserved. About the author: Amelia Kallman is a leading London futurist, speaker, and author. As an innovation and technology communicator, Amelia regularly writes, consults, and speaks on the impact of new technologies on the future of business and our lives. She is an expert on the emerging risks of The New Realities (VR-AR-MR), and also specialises in the future of retail. Coming from a theatrical background, Amelia started her tech career by chance in 2013 at a creative technology agency where she worked her way up to become their Global Head of Innovation. She opened, operated and curated innovation lounges in both London and Dubai, working with start-ups and corporate clients to develop connections and future-proof strategies. Today she continues to discover and bring attention to cutting-edge start-ups, regularly curating events for WIRED UK.

Augmented Reality and Its Aspects: a Case Study for Heating Systems

Augmented Reality and its aspects: a case study for heating systems. Lucas Cavalcanti Viveiros. Dissertation presented to the School of Technology and Management of Bragança to obtain a Master’s Degree in Information Systems. Under the double diploma course with the Federal Technological University of Paraná. Work oriented by: Prof. Paulo Jorge Teixeira Matos, Prof. Jorge Aikes Junior. Bragança 2018-2019. Dedication: I dedicate this work to my friends and my family, especially to my parents Tadeu José Viveiros and Vera Neide Cavalcanti, who have always supported me to continue my studies, despite the physical distance has been a demand factor from the beginning of the studies by the change of state and country. Acknowledgment: First of all, I thank God for the opportunity. All the teachers who helped me throughout my journey. Especially, the mentors Paulo Matos and Jorge Aikes Junior, who not only provided the necessary support but also the opportunity to explore a recent area that is still under development. Moreover, the professors Paulo Leitão and Leonel Deusdado from CeDRI’s laboratory for allowing me to make use of the HoloLens device from Microsoft. Abstract: Thanks to the advances of technology in various domains, and the mixing between real and virtual worlds.

Building the Metaverse One Open Standard at a Time

Building the Metaverse One Open Standard at a Time. Khronos APIs and 3D Asset Formats for XR. Neil Trevett, Khronos President, NVIDIA VP Developer Ecosystems. @neilt3d. April 2020. © Khronos® Group 2020. This work is licensed under a Creative Commons Attribution 4.0 International License. Khronos Connects Software to Silicon: Open interoperability standards to enable software to effectively harness the power of multiprocessors and accelerator silicon. 3D graphics, XR, parallel programming, vision acceleration and machine learning. Non-profit, member-driven standards-defining industry consortium. Open to any interested company. All Khronos standards are royalty-free. Well-defined IP Framework protects participant’s intellectual property. >150 Members: ~40% US, 30% Europe, 30% Asia. Khronos Active Initiatives: 3D Graphics (Desktop, Mobile, Web, Embedded and Safety Critical); 3D Assets (Authoring and Delivery); Portable XR (Augmented and Virtual Reality); Parallel Computation (Vision, Inferencing, Machine Learning). Guidelines for creating APIs to streamline system safety certification. Pervasive Vulkan: Desktop and Mobile GPUs (http://vulkan.gpuinfo.org/). Platforms: Desktop; Android (Android 7.0+; Vulkan 1.1 required on Android Q); Apple (via porting layers); Media Players; Consoles; Virtual Reality; Cloud Services; Game Streaming; Embedded. Engines: Croteam Serious Engine. Note: The version of Vulkan available will depend on platform and vendor.

OpenXR 1.0 Reference Guide

OpenXR 1.0 Reference Guide, Page 1. OpenXR™ is a cross-platform API that enables a continuum of real-and-virtual combined environments generated by computers through human-machine interaction and is inclusive of the technologies associated with virtual reality, augmented reality, and mixed reality. It is the interface between an application and an in-process or out-of-process XR runtime that may handle frame composition, peripheral management, and more. Color-coded names as follows: function names and structure names. [n.n.n] Indicates sections and text in the OpenXR 1.0 specification. £ Indicates content that pertains to an extension. Specification and additional resources at khronos.org/openxr. OpenXR API Overview: A high level overview of a typical OpenXR application including the order of function calls, creation of objects, session state changes, and the rendering loop. OpenXR Action System Concepts [11.1]. Create action and action spaces: xrCreateActionSet with name = "gameplay"; xrCreateAction with actionSet = "gameplay", name = "teleport", type = XR_INPUT_ACTION_TYPE_BOOLEAN; a further xrCreateAction with actionSet = "gameplay" […]. Set up interaction profile bindings: xrSetInteractionProfileSuggestedBindings for /interaction_profiles/oculus/touch_controller with "teleport": /user/hand/right/input/a/click and "teleport_ray": /user/hand/right/input/aim/pose; for /interaction_profiles/htc/vive_controller with "teleport": /user/hand/right/input/trackpad/click […]. Sync and get action states: xrSyncActions with session activeActionSets = { "gameplay", ...}; xrGetActionStateBoolean ("teleport_ray"); if (state.currentState) // button is pressed { […]
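The three action-system steps in the excerpt (create actions, suggest per-profile bindings, then sync and read state) can be illustrated with a toy model. The sketch below is plain Python and deliberately does not call the OpenXR API: `ToyRuntime` and its methods are hypothetical names invented for illustration, and only the profile and input path strings are taken from the excerpt. Identifier spellings in the excerpt follow the guide as quoted; in the released OpenXR 1.0 headers the corresponding names are `xrSuggestInteractionProfileBindings` and `XR_ACTION_TYPE_BOOLEAN_INPUT`.

```python
class ToyRuntime:
    """Toy stand-in for an XR runtime (hypothetical, not the OpenXR API)."""

    def __init__(self, active_profile):
        self.active_profile = active_profile  # profile of the attached controller
        self.suggested = {}    # profile path -> {action name: input path}
        self.raw_inputs = {}   # input path -> bool (simulated hardware state)
        self.synced = {}       # action name -> bool (snapshot from last sync)

    def suggest_bindings(self, profile, bindings):
        # The application proposes one binding table per interaction profile.
        self.suggested[profile] = dict(bindings)

    def sync_actions(self, action_names):
        # Snapshot hardware state through the bindings of the active profile.
        bindings = self.suggested.get(self.active_profile, {})
        for name in action_names:
            path = bindings.get(name)
            self.synced[name] = bool(self.raw_inputs.get(path, False))

    def get_boolean(self, name):
        # Reads return the last synced snapshot, never live hardware state.
        return self.synced.get(name, False)


OCULUS = "/interaction_profiles/oculus/touch_controller"
VIVE = "/interaction_profiles/htc/vive_controller"

runtime = ToyRuntime(active_profile=OCULUS)
runtime.suggest_bindings(OCULUS, {"teleport": "/user/hand/right/input/a/click"})
runtime.suggest_bindings(VIVE, {"teleport": "/user/hand/right/input/trackpad/click"})

# The user presses the A button on the right Touch controller.
runtime.raw_inputs["/user/hand/right/input/a/click"] = True

before_sync = runtime.get_boolean("teleport")   # stale: nothing synced yet
runtime.sync_actions(["teleport"])
after_sync = runtime.get_boolean("teleport")    # now reflects the A button

# Swapping in a Vive controller changes which physical input drives "teleport".
runtime.active_profile = VIVE
runtime.sync_actions(["teleport"])
on_vive = runtime.get_boolean("teleport")       # trackpad is not pressed
```

The design point the model tries to capture is that application code only ever asks about the abstract "teleport" action, while the mapping to a physical button is chosen per controller profile.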

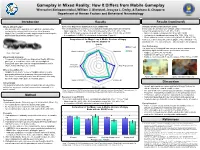

Gameplay in Mixed Reality: How It Differs from Mobile Gameplay

Gameplay in Mixed Reality: How It Differs from Mobile Gameplay. Weerachet Sinlapanuntakul, William J. Shelstad, Jessyca L. Derby, & Barbara S. Chaparro. Department of Human Factors and Behavioral Neurobiology. Introduction: What is Mixed Reality? Mixed Reality (MR) expands a user’s physical environment by overlaying the real world with interactive virtual elements. Magic Leap 1 is an MR wearable spatial computer that brings the physical and digital worlds together (Figure 1). Results: Game User Experience Satisfaction Scale (GUESS-18). The overall satisfaction scores (out of 56) indicated a statistically significant difference between Magic Leap (M = 42.31, SD = 5.61) and mobile device (M = 38.31, SD = 5.49). With α = 0.05, Magic Leap was rated with higher satisfaction, t(15) = 3.09, p = 0.007. A comparison of GUESS-18 subscales is shown in Figure 2. [Figure 2: Comparison of the Magic Leap & Mobile Versions of Angry Birds with the GUESS-18; visible subscale labels include Usability, Narratives and Visual Aesthetics; series: Magic Leap, Mobile.] Results (continued): Simulator Sickness Questionnaire (SSQ). In this study, the 20-minute use of Magic Leap resulted in concerning symptoms (M = 16.83, SD = 15.51): Nausea is considered minimal (M = 9.54, SD = 12.07); Oculomotor is considered concerning (M = 17.53, SD = 16.31); Disorientation is considered concerning (M = 16.53, SD = 17.02). Total scores can be associated with negligible (< 5), minimal (5 – 10), significant (10 – 15), concerning (15 – 20), and bad (> 20) symptoms. User Performance: The stars (out of 3) collected from each level played represented a statistically significant difference in performance outcomes: […]
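The SSQ severity bands quoted in the excerpt can be written as a small lookup. This is a minimal sketch: the function name `ssq_severity` is hypothetical, and because the excerpt does not say which band a score of exactly 5, 10 or 15 belongs to, the code assumes each lower bound is inclusive. A score of exactly 20 is treated as "concerning", since the excerpt lists "bad" as strictly greater than 20.

```python
def ssq_severity(score: float) -> str:
    """Map an SSQ score to the severity label used in the excerpt.

    Assumption: lower bounds (5, 10, 15) are inclusive; the source
    leaves the boundary cases unspecified.
    """
    if score < 5:
        return "negligible"
    if score < 10:
        return "minimal"
    if score < 15:
        return "significant"
    if score <= 20:
        return "concerning"
    return "bad"


# Mean scores reported in the excerpt.
reported = {
    "Total": 16.83,
    "Nausea": 9.54,
    "Oculomotor": 17.53,
    "Disorientation": 16.53,
}
labels = {name: ssq_severity(mean) for name, mean in reported.items()}
```

Applied to the reported means, the lookup reproduces the labels given in the excerpt: minimal for nausea, and concerning for the oculomotor, disorientation and total scores.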

Conference Booklet

30th Oct - 1st Nov. CONFERENCE BOOKLET. REBOOT DEVELOP RED | 2019. INTRO: Always Outnumbered, Never Outgunned. Warmest welcome to the first ever Reboot Develop Red conference. Welcome to breathtaking Banff National Park and welcome to iconic Fairmont Banff Springs. It all feels a bit like history repeating to me. When we were starting our European older sister, Reboot Develop Blue conference, everybody was full of doubts on why somebody would ever choose a beautiful yet a bit remote place to host one of the biggest worldwide gatherings of the international games industry. In the end, it turned into one of the biggest and highest-rated games industry conferences in the world. And here we are yet again at the beginning, in one of the most beautiful and serene places on Earth, at one of the most unique and luxurious venues as well, and in the company of some of the greatest minds that the games industry has to offer! […] it! And we are here to stay. Our ambition through the next few years is to turn Reboot Develop Red not just into one of the best and biggest annual games industry and game developers conferences in Canada and North America, but in the world! We are committed to stay at this beautiful venue and in this incredible nature and astonishing surroundings for the next few forthcoming years and make it THE annual key gathering spot of the international games industry. We will need all of your help and support on the way! Thank you from the bottom of the heart for all the support shown so far, and even more for the forthcoming one! Damir Durovic

The OpenXR Specification

The OpenXR Specification. Copyright (c) 2017-2021, The Khronos Group Inc. Version 1.0.19, Tue, 24 Aug 2021 16:39:15 +0000: from git ref release-1.0.19 commit: 808fdcd5fbf02c9f4b801126489b73d902495ad9. Table of Contents. 1. Introduction: 1.1. What is OpenXR?; 1.2. The Programmer’s View of OpenXR; 1.3. The Implementor’s View of OpenXR; 1.4. Our View of OpenXR; 1.5. Filing Bug Reports; 1.6. Document Conventions. 2. Fundamentals: 2.1. API Version Numbers and Semantics; 2.2. String Encoding; 2.3. Threading Behavior; 2.4. Multiprocessing Behavior; 2.5. Runtime; 2.6. Extensions; 2.7. API Layers; 2.8. Return Codes; 2.9. Handles; 2.10. Object Handle Types; 2.11. Buffer Size Parameters; 2.12. Time; 2.13. Duration; 2.14. Prediction Time Limits; 2.15. Colors; 2.16. Coordinate System; 2.17. Common Object Types; 2.18. Angles; 2.19. Boolean Values; 2.20. Events; 2.21. System resource lifetime. 3. API Initialization: 3.1. Exported Functions; 3.2. Function Pointers. 4. Instance: 4.1. API Layers and Extensions; 4.2. Instance Lifecycle; 4.3. Instance Information; 4.4. Platform-Specific Instance Creation; 4.5. Instance Enumerated Type String Functions. 5. System: 5.1. Form Factors; 5.2. Getting the XrSystemId; 5.3. System Properties. 6. Path Tree and Semantic Paths.