Augmented Reality and Its Aspects: a Case Study for Heating Systems

Total pages: 16

File type: PDF, size: 1020 Kb

Recommended publications

A Review About Augmented Reality Tools and Developing a Virtual Reality Application

Academic Journal of Science, CD-ROM. ISSN: 2165-6282 :: 03(02):139–146 (2014)
A REVIEW ABOUT AUGMENTED REALITY TOOLS AND DEVELOPING A VIRTUAL REALITY APPLICATION BASED ON EDUCATION
Mustafa Ulas and Safa Merve Tasci, Firat University, Turkey
Augmented Reality (AR) is a technology that gained popularity in recent years. It is defined as placement of virtual images over real view in real time. There are a lot of desktop applications which are using Augmented Reality. The rapid development of technology and easily portable mobile devices cause the increasing of the development of the applications on the mobile device. The elevation of the device technology leads to the applications and cause the generating of the new tools. There are a lot of AR Tool Kits. They differ in many ways such as used methods, Programming language, Used Operating Systems, etc. Firstly, a developer must find the most effective tool kits between them. This study is more of a guide to developers to find the best AR tool kit choice. The tool kit was examined under three main headings. The Parameters such as advantages, disadvantages, platform, and programming language were compared. In addition to the information is given about usage of them and a Virtual Reality application has developed which is based on Education.
Keywords: Augmented reality, ARToolKit, Computer vision, Image processing.
Introduction: Augmented reality is basically a snapshot of the real environment with virtual environment applications that are brought together. Basically it can be operated on every device which has a camera display and operation system.

Review: Advancement in Virtual Reality Device

Available online at: http://www.ijmtst.com/vol5issue12.html International Journal for Modern Trends in Science and Technology ISSN: 2455-3778 :: Volume: 05, Issue No: 12, December 2019
Review: Advancement in Virtual Reality Device
Dr. Preeti Singh Bahadur (1) | Pushpendra Bahadur (2) | Dikshant Delu (1) | Shivam Chaudhary (1)
(1) Department of Applied Physics, Amity University, Greater Noida (U.P.); (2) Tata Steel, BSL Limited, Sahibabad, Ghaziabad (U.P.) - 201010
To Cite this Article: Dr. Preeti Singh Bahadur, Pushpendra Bahadur, Dikshant Delu and Shivam Chaudhary, “Review: Advancement in Virtual Reality Device”, International Journal for Modern Trends in Science and Technology, Vol. 05, Issue 12, December 2019, pp. 40-47.
Article Info: Received on 21-November-2019, Revised on 12-December-2019, Accepted on 21-December-2019, Published on 26-December-2019.
ABSTRACT
In this paper we shed light on the advancement in Virtual Reality devices and talk about six latest developments. Virtual reality (VR) is a technology that allows a user to interact with a simulated computer environment, whether that environment is a simulation of the real world or an imaginary world. It is the key to experiencing, feeling and touching the past, the present and the future. It is the means of creating our own world, our own personalized reality. It could range from creating a videogame to taking a virtual tour of the universe, from walking through our own dream house to experiencing a walk through a strange planet. With virtual reality, we can experience the most intimidating and exhausting situations by playing safely and with a learning perspective. However, very few people really know what virtual reality is, what are its basic principles and its open problems.

Evaluating Performance Benefits of Head Tracking in Modern Video Games

Evaluating Performance Benefits of Head Tracking in Modern Video Games
Arun Kulshreshth ([email protected]) and Joseph J. LaViola Jr. ([email protected]), Department of EECS, University of Central Florida, 4000 Central Florida Blvd, Orlando, FL 32816, USA
ABSTRACT
We present a study that investigates user performance benefits of using head tracking in modern video games. We explored four different carefully chosen commercial games with tasks which can potentially benefit from head tracking. For each game, quantitative and qualitative measures were taken to determine if users performed better and learned faster in the experimental group (with head tracking) than in the control group (without head tracking). A game expertise pre-questionnaire was used to classify participants into casual and expert categories to analyze a possible impact on performance differences.
PlayStation Move, TrackIR 5) that support 3D spatial interaction have been implemented and made available to consumers. Head tracking is one example of an interaction technique, commonly used in the virtual and augmented reality communities [2, 7, 9], that has potential to be a useful approach for controlling certain gaming tasks. Recent work on head tracking and video games has shown some potential for this type of gaming interface. For example, Sko et al. [10] proposed a taxonomy of head gestures for first person shooter (FPS) games and showed that some of their techniques (peering, zooming, iron-sighting and spinning) are useful in games. In addition, previous studies [13, 14] have

Virtual Reality and Augmented Reality (VR/AR)

Quad Cities Manufacturing Innovation Hub Playbook Series: Virtual Reality and Augmented Reality (VR/AR)
How to Use This Playbook
Each Quad Cities Manufacturing Innovation Hub playbook is created with the business growth needs of our area’s small and medium manufacturers in mind. By utilizing the information in the VR/AR Playbook, you are taking the first steps to creating a competitive advantage for your company by innovating in the face of disruptive technologies. This playbook follows a logical flow to guide you as you learn more about VR/AR (see Fig. 1). Review the sections as they apply to your individual opportunities and resources, either in the order they’re presented or jump around to fit your immediate needs.
Figure 1: VR/AR Playbook Information Flow (Understand the Technologies, Identify Opportunities, Build the Business Case, Find Help)
This is your toolkit for plugging into the virtual and augmented reality network in the Quad Cities. Together, all eight of our playbooks work in concert to uplift our regional manufacturers and Department of Defense suppliers through increasing digital readiness; working together to accelerate the understanding and investment in emerging technologies; and foster a culture of innovation in the manufacturing industry. We encourage you to review the other playbooks (see appendix for more information) as well.
Whom can I contact at the Quad Cities Manufacturing Innovation Hub with questions? Email [email protected], and a member of the Hub team will respond to your question.
About the Quad Cities Manufacturing Innovation Hub and Our Partners
The Quad Cities Manufacturing Innovation Hub assists businesses by offering services such as operational assessments, registry in a regional catalog of manufacturers and suppliers, trade and business-to-business events, access to national and international marketing, access to subject matter experts through the Chamber’s Critical Talent Network, connections to the Quad City Manufacturing Lab and national research, and training seminars targeted at key technologies.

Virtual and Augmented Reality

Virtual and Augmented Reality: An Educational Handbook
By Zeynep Tacgin
This book first published 2020 Cambridge Scholars Publishing Lady Stephenson Library, Newcastle upon Tyne, NE6 2PA, UK British Library Cataloguing in Publication Data A catalogue record for this book is available from the British Library Copyright © 2020 by Zeynep Tacgin All rights for this book reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior permission of the copyright owner. ISBN (10): 1-5275-4813-9 ISBN (13): 978-1-5275-4813-8
TABLE OF CONTENTS: List of Illustrations; List of Tables; Preface (What is this book about?; What is this book not about?; Who is this book for?; How is this book used?; The specific contribution of this book); Acknowledgements

New Realities: Risks in the Virtual World

Emerging Risk Report 2018, Technology. New realities: Risks in the virtual world
Lloyd’s disclaimer
This report has been co-produced by Lloyd's and Amelia Kallman for general information purposes only. While care has been taken in gathering the data and preparing the report Lloyd's does not make any representations or warranties as to its accuracy or completeness and expressly excludes to the maximum extent permitted by law all those that might otherwise be implied. Lloyd's accepts no responsibility or liability for any loss or damage of any nature occasioned to any person as a result of acting or refraining from acting as a result of, or in reliance on, any statement, fact, figure or expression of opinion or belief contained in this report. This report does not constitute advice of any kind. © Lloyd’s 2018 All rights reserved
About the author
Amelia Kallman is a leading London futurist, speaker, and author. As an innovation and technology communicator, Amelia regularly writes, consults, and speaks on the impact of new technologies on the future of business and our lives. She is an expert on the emerging risks of The New Realities (VR-AR-MR), and also specialises in the future of retail. Coming from a theatrical background, Amelia started her tech career by chance in 2013 at a creative technology agency where she worked her way up to become their Global Head of Innovation. She opened, operated and curated innovation lounges in both London and Dubai, working with start-ups and corporate clients to develop connections and future-proof strategies. Today she continues to discover and bring attention to cutting-edge start-ups, regularly curating events for WIRED UK.

32 Virtual, Augmented, & Mixed Reality Programs for Libraries

32 VIRTUAL, AUGMENTED, & MIXED REALITY PROGRAMS FOR LIBRARIES, edited by ELLYSSA KROSKI. CHICAGO | 2021. alastore.ala.org
ELLYSSA KROSKI is the director of Information Technology and Marketing at the New York Law Institute as well as an award-winning editor and author of sixty books including Law Librarianship in the Age of AI for which she won AALL’s 2020 Joseph L. Andrews Legal Literature Award. She is a librarian, an adjunct faculty member at Drexel University and San Jose State University, and an international conference speaker. She received the 2017 Library Hi Tech Award from the ALA/LITA for her long-term contributions in the area of Library and Information Science technology and its application. She can be found at www.amazon.com/author/ellyssa.
© 2021 by the American Library Association. Extensive effort has gone into ensuring the reliability of the information in this book; however, the publisher makes no warranty, express or implied, with respect to the material contained herein. ISBNs 978-0-8389-4948-1 (paper)
Library of Congress Cataloging-in-Publication Data. Names: Kroski, Ellyssa, editor. Title: 32 virtual, augmented, and mixed reality programs for libraries / edited by Ellyssa Kroski. Other titles: Thirty-two virtual, augmented, and mixed reality programs for libraries. Description: Chicago : ALA Editions, 2021. | Includes bibliographical references and index. | Summary: “Ranging from gaming activities utilizing VR headsets to augmented reality tours, exhibits, immersive experiences, and STEM educational programs, the program ideas in this guide include events for every size and type of academic, public, and school library” -- Provided by publisher. Identifiers: LCCN 2021004662 | ISBN 9780838949481 (paperback) Subjects: LCSH: Virtual reality--Library applications--United States.

Virtual Reality: What Is the State of Play in Education?

Australian Journal of Educational Technology 1998, 14(1), 60-74
Virtual reality: What is the state of play in education?
Colin Macpherson, Central Queensland University; Mike Keppell, University of Melbourne
Background
The term 'virtual reality' (VR) is currently used to describe a range of computer-based systems in which a user can explore a hardware and software generated 'microworld' that bears some resemblance to reality. An early application of such systems was the flight simulator used to train pilots. However, it is in the area of hi-tech games that many of the more recent developments in this field have occurred. Typically, a user will wear a helmet containing either a small video screen positioned in front of each eye, or a device that projects images directly onto the user’s retinas. She might also wear an elaborately wired glove that provides tactile feedback as she attempts to physically interact with the computer-generated visual environment. It was on devices and systems of this nature that our proposed investigation was to concentrate. Although this has remained the case, we have expanded our work to also include VR that mainly uses screen-based graphics – thus reflecting the expanded definition of VR (more of which later).
Overall aim
Our overall aim was to determine the nature and capabilities of VR devices and systems that have already been developed, and of those that are under development; and to investigate the educational and instructional uses to which these devices and systems are already being put and to which they may be put in the near future.

Augmented Reality, Virtual Reality, & Health

University of Massachusetts Medical School, eScholarship@UMMS. National Network of Libraries of Medicine New England Region (NNLM NER) Repository, National Network of Libraries of Medicine New England Region. 2017-3
Augmented Reality, Virtual Reality, & Health
Allison K. Herrera, University of Massachusetts Medical School, et al.
Let us know how access to this document benefits you. Follow this and additional works at: https://escholarship.umassmed.edu/ner Part of the Health Information Technology Commons, Library and Information Science Commons, and the Public Health Commons
Repository Citation: Herrera AK, Mathews FZ, Gugliucci MR, Bustillos C. (2017). Augmented Reality, Virtual Reality, & Health. National Network of Libraries of Medicine New England Region (NNLM NER) Repository. https://doi.org/10.13028/1pwx-hc92. Retrieved from https://escholarship.umassmed.edu/ner/42
Creative Commons License: This work is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 4.0 License. This material is brought to you by eScholarship@UMMS. It has been accepted for inclusion in National Network of Libraries of Medicine New England Region (NNLM NER) Repository by an authorized administrator of eScholarship@UMMS. For more information, please contact [email protected].
Augmented Reality, Virtual Reality, & Health. Zeb Mathews, University of Tennessee; Corina Bustillos, Texas Tech University; Allison Herrera, University of Massachusetts Medical School; Marilyn Gugliucci, University of New England
Outline: Introduction & Overview; Augmented Reality & Health; Virtual Reality & Health; Technology Funding Opportunities; University of New England VR Project
Learning Objectives: • Explore AR & VR technologies and their impact on health sciences, with examples of projects & research • Know how to apply for funding for your own AR/VR health project • Learn about one VR project funded by the NNLM
Augmented Reality and Virtual Reality (AR/VR) & Health. What is AR and VR? F.

Augmented Reality for Unity: the ARToolkit Package

Augmented reality for Unity: the ARToolkit package. JM Vezien, Jan 2016
ARToolkit provides an easy way to develop AR applications in Unity via the ARToolkit package for Unity, available for download at: http://artoolkit.org/documentation/doku.php?id=6_Unity:unity_about The installation is covered in: http://artoolkit.org/documentation/doku.php?id=6_Unity:unity_getting_started Basically, you import the package via Assets --> Import Package --> Custom Package. Current version is 5.3.1. Once imported, the package provides assets in the ARToolkit5-Unity hierarchy. There is also an additional ARToolkit entry in the general menu of Unity. A bunch of example scenes are available for trials in ARToolkit5-Unity --> Example scenes. The simplest is SimpleScene.unity, where a red cube is attached to a "Hiro" pattern, like the simpleTest example in the original ARToolkit tutorials. The way ARToolkit for Unity organises information is simple:
• The mandatory component for ARToolkit is called an ARController. Aside from managing the camera parameters (for example, selecting the video input), it also records the complete list of markers your application will use, via ARMarker scripts (one per marker).
• A "Scene Root" will contain everything you need to display in AR. It is a standard (usually empty) object with an AR Origin script. The rest of the scene will be children of it.
• The standard camera remains basically the same, but is now driven by a specific ARCamera script. By default, the camera is a video see-through (like a webcam).
• Objects to be tracked are usually "empty" geometries (GameObject --> Create empty) to which one attaches a special AR Tracked Object script.

Building the Metaverse One Open Standard at a Time

Building the Metaverse One Open Standard at a Time: Khronos APIs and 3D Asset Formats for XR
Neil Trevett, Khronos President, NVIDIA VP Developer Ecosystems. [email protected] | @neilt3d. April 2020. © Khronos® Group 2020
This work is licensed under a Creative Commons Attribution 4.0 International License © The Khronos® Group Inc. 2020
Khronos Connects Software to Silicon: Open interoperability standards to enable software to effectively harness the power of multiprocessors and accelerator silicon. 3D graphics, XR, parallel programming, vision acceleration and machine learning. Non-profit, member-driven standards-defining industry consortium. Open to any interested company. All Khronos standards are royalty-free. Well-defined IP Framework protects participant’s intellectual property. >150 Members ~ 40% US, 30% Europe, 30% Asia
Khronos Active Initiatives: 3D Graphics (Desktop, Mobile, Web, Embedded and Safety Critical); 3D Assets (Authoring and Delivery); Portable XR (Augmented and Virtual Reality); Parallel Computation (Vision, Inferencing, Machine Learning). Guidelines for creating APIs to streamline system safety certification
Pervasive Vulkan: Desktop and Mobile GPUs, http://vulkan.gpuinfo.org/ Platforms: Desktop; Android (Android 7.0+; Vulkan 1.1 required on Android Q); Apple (via porting layers); Media Players; Consoles; Virtual Reality; Cloud Services; Game Streaming; Embedded. Engines: Croteam Serious Engine. Note: The version of Vulkan available will depend on platform and vendor

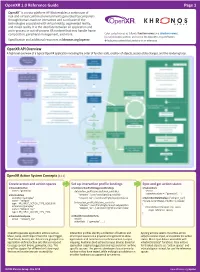

OpenXR 1.0 Reference Guide

OpenXR™ is a cross-platform API that enables a continuum of real-and-virtual combined environments generated by computers through human-machine interaction and is inclusive of the technologies associated with virtual reality, augmented reality, and mixed reality. It is the interface between an application and an in-process or out-of-process XR runtime that may handle frame composition, peripheral management, and more.
Color-coded names as follows: function names and structure names. [n.n.n] Indicates sections and text in the OpenXR 1.0 specification. £ Indicates content that pertains to an extension. Specification and additional resources at khronos.org/openxr
OpenXR API Overview: A high level overview of a typical OpenXR application including the order of function calls, creation of objects, session state changes, and the rendering loop.
OpenXR Action System Concepts [11.1]
Create action and action spaces: xrCreateActionSet (name = "gameplay"); xrCreateAction (actionSet="gameplay", name = “teleport”, type = XR_INPUT_ACTION_TYPE_BOOLEAN); actionSet="gameplay"
Set up interaction profile bindings: xrSetInteractionProfileSuggestedBindings; /interaction_profiles/oculus/touch_controller ("teleport": /user/hand/right/input/a/click, "teleport_ray": /user/hand/right/input/aim/pose); /interaction_profiles/htc/vive_controller ("teleport": /user/hand/right/input/trackpad/click
Sync and get action states: xrSyncActions (session activeActionSets = { "gameplay", ...}); xrGetActionStateBoolean ("teleport_ray") if (state.currentState) // button is pressed {
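The binding idea in the action-system excerpt can be illustrated with plain data. The following is a minimal sketch, not the OpenXR C API: the profile paths, action names, and input paths are copied from the excerpt, while `SUGGESTED_BINDINGS`, `resolve_binding`, and `is_pressed` are hypothetical names introduced only for illustration. A real application would call the functions named in the guide through an OpenXR runtime.

```python
# Plain-data model of the excerpt's suggested bindings; NOT the OpenXR C API.
from typing import Iterable, Optional

# One table per interaction profile: action name -> input path (from the excerpt).
SUGGESTED_BINDINGS = {
    "/interaction_profiles/oculus/touch_controller": {
        "teleport": "/user/hand/right/input/a/click",
        "teleport_ray": "/user/hand/right/input/aim/pose",
    },
    "/interaction_profiles/htc/vive_controller": {
        "teleport": "/user/hand/right/input/trackpad/click",
    },
}


def resolve_binding(profile: str, action: str) -> Optional[str]:
    """Return the input path bound to `action` under `profile`, or None."""
    return SUGGESTED_BINDINGS.get(profile, {}).get(action)


def is_pressed(profile: str, action: str, pressed_paths: Iterable[str]) -> bool:
    """Hypothetical stand-in for the sync-then-query step: the action is
    active when its bound input path is among the currently pressed paths."""
    path = resolve_binding(profile, action)
    return path is not None and path in set(pressed_paths)


if __name__ == "__main__":
    vive = "/interaction_profiles/htc/vive_controller"
    print(resolve_binding(vive, "teleport"))
    print(is_pressed(vive, "teleport", ["/user/hand/right/input/trackpad/click"]))
```

The point of the design is that application code asks about the abstract action ("teleport") and never about a specific button, so the same query works whichever controller profile is active.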