Cross-Layer Resource Control and Scheduling for Improving Interactivity in Android


Recommended publications

Proceedings 2005

LAC2005 Proceedings
3rd International Linux Audio Conference, April 21 – 24, 2005
ZKM | Zentrum für Kunst und Medientechnologie, Karlsruhe, Germany

Published by ZKM | Zentrum für Kunst und Medientechnologie, Karlsruhe, Germany, April 2005. All copyright remains with the authors. www.zkm.de/lac/2005

Content

Preface
Staff

Thursday, April 21, 2005 – Lecture Hall
• 11:45 AM Peter Brinkmann: MidiKinesis – MIDI controllers for (almost) any purpose
• 01:30 PM Victor Lazzarini: Extensions to the Csound Language: from User-Defined to Plugin Opcodes and Beyond
• 02:15 PM Albert Gräf: Q: A Functional Programming Language for Multimedia Applications
• 03:00 PM Stéphane Letz, Dominique Fober and Yann Orlarey: jackdmp: Jack server for multi-processor machines
• 03:45 PM John ffitch: On The Design of Csound5
• 04:30 PM Pau Arumí and Xavier Amatriain: CLAM, an Object Oriented Framework for Audio and Music

Friday, April 22, 2005 – Lecture Hall
• 11:00 AM Ivica Ico Bukvic: “Made in Linux” – The Next Step
• 11:45 AM Christoph Eckert: Linux Audio Usability Issues
• 01:30 PM Marije Baalman: Updates of the WONDER software interface for using Wave Field Synthesis
• 02:15 PM Georg Bönn: Development of a Composer’s Sketchbook

Saturday, April 23, 2005 – Lecture Hall
• 11:00 AM Jürgen Reuter: SoundPaint – Painting Music
• 11:45 AM Michael Schüepp, Rene Widtmann, Rolf “Day” Koch and Klaus Buchheim: System design for audio record and playback with a computer using FireWire
• 01:30 PM John ffitch and Tom Natt: Recording all Output from a Student Radio Station

Lecture 11: Deadlock, Scheduling, & Synchronization October 3, 2019 Instructor: David E

CS 162: Operating Systems and System Programming
Lecture 11: Deadlock, Scheduling, & Synchronization
October 3, 2019. Instructors: David E. Culler & Sam Kumar. https://cs162.eecs.berkeley.edu
Read: A&D 6.5. Project 1 code due TOMORROW! Midterm exam next week Thursday.
10/3/19 CS162 UCB Fa 19 Lec 11.2

Outline for Today
• (Quickly) Recap and Finish Scheduling
• Deadlock
• Language Support for Concurrency

Recall: CPU & I/O Bursts
• Programs alternate between bursts of CPU and I/O activity
• Scheduler: Which thread (CPU burst) to run next?
• Interactive programs vs Compute Bound vs Streaming

Recall: Evaluating Schedulers
• Response Time (ideally low)
  – What user sees: from keypress to character on screen
  – Or completion time for non-interactive
• Throughput (ideally high)
  – Total operations (jobs) per second
  – Overhead (e.g. context switching), artificial blocks
• Fairness
  – Fraction of resources provided to each
  – May conflict with best avg. throughput, resp. time

Recall: What if we knew the future?
• Key Idea: remove convoy effect
  – Short jobs always stay ahead of long ones
• Non-preemptive: Shortest Job First
  – Like FCFS where we always chose the best possible ordering
• Preemptive Version: Shortest Remaining Time First
  – A newly ready process (e.g., just finished an I/O operation) with shorter time replaces the current one

Recall: Multi-Level Feedback Scheduling
• Long-Running Compute Tasks Demoted to Low Priority
• Intuition: Priority Level proportional
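The convoy effect recalled in these slides can be illustrated with a minimal sketch. It assumes all jobs arrive at time 0 and run to completion without preemption; the burst lengths and the helper name `average_completion_time` are hypothetical, chosen only for illustration.

```python
def average_completion_time(burst_times):
    """Average completion time when jobs run back to back in the given order."""
    elapsed = 0
    total = 0
    for burst in burst_times:
        elapsed += burst   # this job finishes once every earlier job has finished
        total += elapsed
    return total / len(burst_times)


# Hypothetical CPU bursts (arbitrary time units), all arriving at time 0.
bursts = [24, 3, 3]

fcfs = average_completion_time(bursts)          # arrival order: long job first
sjf = average_completion_time(sorted(bursts))   # Shortest Job First ordering

print(fcfs, sjf)  # prints: 27.0 13.0
```

Running the short jobs first keeps them from queuing behind the long one, which is the "short jobs always stay ahead of long ones" idea; the long job still finishes at time 30 in both orderings.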

Process Scheduling Ii

PROCESS SCHEDULING II
CS124 – Operating Systems, Spring 2021, Lecture 12

Real-Time Systems
• Increasingly common to have systems with real-time scheduling requirements
• Real-time systems are driven by specific events
  • Often a periodic hardware timer interrupt
  • Can also be other events, e.g. detecting a wheel slipping, an optical sensor triggering, or a proximity sensor reaching a threshold
• Event latency is the amount of time between an event occurring and when it is actually serviced
• Usually, real-time systems must keep event latency below a required threshold
  • e.g. an antilock braking system has 3-5 ms to respond to wheel-slide
• The real-time system must try to meet its deadlines, regardless of system load
  • Of course, this may not always be possible…

Real-Time Systems (2)
• Hard real-time systems require tasks to be serviced before their deadlines, otherwise the system has failed
  • e.g. robotic assembly lines, antilock braking systems
• Soft real-time systems do not guarantee tasks will be serviced before their deadlines
  • Typically only guarantee that real-time tasks will be higher priority than other non-real-time tasks
  • e.g. media players
• Within the operating system, two latencies affect the event latency of the system’s response:
  • Interrupt latency is the time between an interrupt occurring and the interrupt service routine beginning to execute
  • Dispatch latency is the time the scheduler dispatcher takes to switch from one process to another

Interrupt Latency
• Interrupt latency in context

Embedded Systems

Sri Chandrasekharendra Saraswathi Viswa Maha Vidyalaya
Department of Electronics and Communication Engineering

STUDY MATERIAL for IIIrd Year, VIth Semester
Subject Name: EMBEDDED SYSTEMS
Prepared by Dr. P. Venkatesan, Associate Professor, ECE, SCSVMV University

PRE-REQUISITE: Basic knowledge of Microprocessors, Microcontrollers & Digital System Design

OBJECTIVES: The student should be made to
• Learn the architecture and programming of ARM processor.
• Be familiar with the embedded computing platform design and analysis.
• Be exposed to the basic concepts and overview of real time Operating system.
• Learn the system design techniques and networks for embedded systems to industrial applications.

SYLLABUS

UNIT – I: INTRODUCTION TO EMBEDDED COMPUTING AND ARM PROCESSORS
Complex systems and microprocessors – Embedded system design process – Design example: Model train controller – Instruction sets preliminaries – ARM Processor – CPU: programming input and output – supervisor mode, exceptions and traps – Co-processors – Memory system mechanisms – CPU performance – CPU power consumption.

UNIT – II: EMBEDDED COMPUTING PLATFORM DESIGN
The CPU Bus – Memory devices and systems – Designing with computing platforms – consumer electronics architecture – platform-level performance analysis – Components for embedded programs – Models of programs

1999 Nendo Supercompiler Te

NEDO-PR-9903, NEDOBIS T93018. Table of contents; most entries are illegible in this extraction. Legible entries: 1.2.3 OpenMP; 1.2.5 VLIW; 1.2.9 Stanford Hydra Chip Multiprocessor; 1.2.10 HPCA-6; 2.2.2 Grid Forum99, U.C.B., JavaGrande Portals Group Meeting; 2.2.3 Supercomputing99; 2.2.4 Globus.

Advanced Scheduling

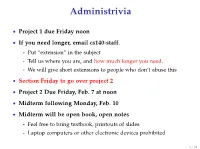

Administrivia
• Project 1 due Friday noon
• If you need longer, email cs140-staff.
  - Put “extension” in the subject
  - Tell us where you are, and how much longer you need.
  - We will give short extensions to people who don’t abuse this
• Section Friday to go over project 2
• Project 2 due Friday, Feb. 7 at noon
• Midterm following Monday, Feb. 10
• Midterm will be open book, open notes
  - Feel free to bring textbook, printouts of slides
  - Laptop computers or other electronic devices prohibited

Fair Queuing (FQ) [Demers]
• Digression: packet scheduling problem
  - Which network packet should router send next over a link?
  - Problem inspired some algorithms we will see today
  - Plus good to reinforce concepts in a different domain.
• For ideal fairness, would use bit-by-bit round-robin (BR)
  - Or send more bits from more important flows (flow importance can be expressed by assigning numeric weights)
[Figure: Flows 1 to 4 feeding round-robin service]

SJF
• Recall limitations of SJF from last lecture:
  - Can’t see the future ... solved by packet length
  - Optimizes response time, not turnaround time ... but these are the same when sending whole packets
  - Not fair

Packet scheduling
• Differences from CPU scheduling
  - No preemption or yielding; must send whole packets
    . Thus, can’t send one bit at a time
  - But know how many bits are in each packet
    . Can see the future and know how long packet needs link
• What scheduling algorithm does this suggest?
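One way to approximate bit-by-bit round-robin while still sending whole packets is to tag each packet with the time it would finish under the ideal scheme and send packets in tag order. The sketch below is a simplified illustration, not the lecture's algorithm: it assumes every packet is already queued at time 0, and the function name `fair_queue_order`, the flow names, and the packet lengths are all hypothetical.

```python
def fair_queue_order(flows, weights=None):
    """Order whole packets by their finish tag under weighted bit-by-bit round-robin.

    flows:   dict mapping flow id -> list of packet lengths in bits, all queued at time 0
    weights: optional dict mapping flow id -> numeric weight (default 1.0)
    """
    weights = weights or {}
    tagged = []
    for flow_id, lengths in flows.items():
        finish = 0.0
        for seq, length in enumerate(lengths):
            # A packet finishes after all earlier bits of its own flow are served;
            # a larger weight means more bits served per round, so an earlier finish.
            finish += length / weights.get(flow_id, 1.0)
            tagged.append((finish, flow_id, seq, length))
    tagged.sort()  # smallest finish tag is sent first; ties broken by flow id
    return [(flow_id, length) for _, flow_id, _, length in tagged]


# Hypothetical example: flow A sends large packets, flow B sends small ones.
flows = {"A": [1000, 1000], "B": [200, 200, 200]}
order = fair_queue_order(flows)
print(order)
```

Because packet lengths are known in advance, the scheduler can "see the future" in the sense the slides describe: flow B's small packets all carry earlier finish tags than flow A's first packet, so they go out first.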

Inside the Linux 2.6 Completely Fair Scheduler

Inside the Linux 2.6 Completely Fair Scheduler: Providing fair access to CPUs since 2.6.23
http://www.ibm.com/developerworks/library/l-completely-fair-scheduler/

The task scheduler is a key part of any operating system, and Linux® continues to evolve and innovate in this area. In kernel 2.6.23, the Completely Fair Scheduler (CFS) was introduced. This scheduler, instead of relying on run queues, uses a red-black tree implementation for task management. Explore the ideas behind CFS, its implementation, and advantages over the prior O(1) scheduler.

M. Tim Jones is an embedded firmware architect and the author of Artificial Intelligence: A Systems Approach, GNU/Linux Application Programming (now in its second edition), AI Application Programming (in its second edition), and BSD Sockets Programming from a Multilanguage Perspective. His engineering background ranges from the development of kernels for geosynchronous spacecraft to embedded systems architecture and networking protocols development. Tim is a Consultant Engineer for Emulex Corp. in Longmont, Colorado.

15 December 2009

The Linux scheduler is an interesting study in competing pressures. On one side are the use models in which Linux is applied. Although Linux was originally developed as a desktop operating system experiment, you'll now find it on servers, tiny embedded devices, mainframes, and supercomputers. Not surprisingly, the scheduling loads for these domains differ. On the other side are the technological advances made in the platform, including architectures (multiprocessing, symmetric multithreading, non-uniform memory access [NUMA]) and virtualization.

(FCFS) Scheduling

Lecture 4: Uniprocessor Scheduling
Prof. Seyed Majid Zahedi, https://ece.uwaterloo.ca/~smzahedi

Outline
• History
• Definitions
  • Response time, throughput, scheduling policy, …
• Uniprocessor scheduling policies
  • FCFS, SJF/SRTF, RR, …

A Bit of History on Scheduling
• By year 2000, scheduling was considered a solved problem
  “And you have to realize that there are not very many things that have aged as well as the scheduler. Which is just another proof that scheduling is easy.” Linus Torvalds, 2001 [1]
• The end of Dennard scaling in 2004 led to the multiprocessor era
  • Designing new (multiprocessor) schedulers gained traction
  • Energy efficiency became a top concern
• In 2016, it was shown that bugs in the Linux kernel scheduler could cause up to 138x slowdown in some workloads, with proportional energy waste [2]

[1] L. Torvalds. The Linux Kernel Mailing List. http://tech-insider.org/linux/research/2001/1215.html, Feb. 2001.
[2] Lozi, Jean-Pierre, et al. "The Linux scheduler: a decade of wasted cores." Proceedings of the Eleventh European Conference on Computer Systems. 2016.

Definitions
• Task, thread, process, job: unit of work
  • E.g., mouse click, web request, shell command, etc.
• Workload: set of tasks
• Scheduling algorithm: takes workload as input, decides which tasks to do first
• Overhead: amount of extra work that is done by scheduler
• Preemptive scheduler: CPU can be taken away from a running task
• Work-conserving scheduler: CPUs won’t be left idle if there are ready tasks to run
  • For non-preemptive schedulers, work-conserving is not always

Lottery Scheduler for the Linux Kernel Planificador Lotería Para El Núcleo

Lottery scheduler for the Linux kernel
María Mejía (a), Adriana Morales-Betancourt (b) & Tapasya Patki (c)
(a) Universidad de Caldas and Universidad Nacional de Colombia, Manizales, Colombia
(b) Departamento de Sistemas e Informática at Universidad de Caldas, Manizales, Colombia
(c) Department of Computer Science, University of Arizona, Tucson, USA
Received: April 17th, 2014. Received in revised form: September 30th, 2014. Accepted: October 20th, 2014.

Abstract
This paper describes the design and implementation of Lottery Scheduling, a proportional-share resource management algorithm, on the Linux kernel. A new lottery scheduling class was added to the kernel and was placed between the real-time and the fair scheduling class in the hierarchy of scheduler modules. This work evaluates the proposed scheduler on compute-intensive, I/O-intensive and mixed workloads. The results indicate that the process scheduler is probabilistically fair and prevents starvation. Another conclusion is that the overhead of the implementation is roughly linear in the number of runnable processes.

Keywords: Lottery scheduling, Schedulers, Linux kernel, operating system.

Planificador lotería para el núcleo de Linux
Resumen (translated from Spanish)
This article describes the design and implementation of the Lottery scheduler in the Linux kernel; this scheduler is a proportional-share resource management algorithm. A new class, the Lottery scheduler, was added to the kernel and placed between the real-time class and the Completely Fair Scheduler (CFS) class in the hierarchy of scheduler modules. This work evaluates the proposed scheduler on compute-intensive, I/O-intensive and mixed workloads.
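The proportional-share idea behind lottery scheduling can be sketched in user space. This is a minimal illustration under stated assumptions, not the paper's kernel implementation: the task names, ticket counts, and the helper `hold_lottery` are hypothetical, and a plain dictionary stands in for the kernel's list of runnable processes.

```python
import random


def hold_lottery(tickets, rng):
    """Pick the next task to run; tickets maps task name -> ticket count."""
    total = sum(tickets.values())
    winner = rng.randrange(total)      # winning ticket number in [0, total)
    running = 0
    # Linear walk over runnable tasks: cost grows with the number of tasks.
    for task, count in tickets.items():
        running += count
        if winner < running:
            return task
    raise ValueError("no tickets to draw from")


rng = random.Random(0)                       # seeded for repeatability
tickets = {"editor": 75, "compiler": 25}     # hypothetical ticket allocation

wins = {task: 0 for task in tickets}
for _ in range(10_000):
    wins[hold_lottery(tickets, rng)] += 1

print(wins)
```

Over many draws each task's share of wins approaches its share of tickets, and any task holding at least one ticket keeps a nonzero chance of being chosen in every draw. The linear walk is also consistent with the abstract's observation that overhead is roughly linear in the number of runnable processes.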

Thread Scheduling in Multi-Core Operating Systems Redha Gouicem

Thread Scheduling in Multi-core Operating Systems
Redha Gouicem

To cite this version: Redha Gouicem. Thread Scheduling in Multi-core Operating Systems. Computer Science [cs]. Sorbonne Université, 2020. English. tel-02977242

HAL Id: tel-02977242
https://hal.archives-ouvertes.fr/tel-02977242
Submitted on 24 Oct 2020

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Ph.D. thesis in Computer Science
Thread Scheduling in Multi-core Operating Systems: How to Understand, Improve and Fix your Scheduler
Redha Gouicem
Sorbonne Université, Laboratoire d’Informatique de Paris 6, Inria Whisper Team

Ph.D. defense: 23 October 2020, Paris, France

Jury members:
• Mr. Pascal Felber, Full Professor, Université de Neuchâtel (Reviewer)
• Mr. Vivien Quéma, Full Professor, Grenoble INP (ENSIMAG) (Reviewer)
• Mr. Rachid Guerraoui, Full Professor, École Polytechnique Fédérale de Lausanne (Examiner)
• Ms. Karine Heydemann, Associate Professor, Sorbonne Université (Examiner)
• Mr. Etienne Rivière, Full Professor, University of Louvain (Examiner)
• Mr. Gilles Muller, Senior Research Scientist, Inria (Advisor)
• Mr. Julien Sopena, Associate Professor, Sorbonne Université (Advisor)

Abstract
In this thesis, we address the problem of schedulers for multi-core architectures from several perspectives: design (simplicity and correctness), performance improvement and the development of application-specific schedulers.

Package Manager: the Core of a GNU/Linux Distribution

Package Manager: The Core of a GNU/Linux Distribution
by Andrey Falko

A Thesis submitted to the Faculty in partial fulfillment of the requirements for the BACHELOR OF ARTS

Accepted: Paul Shields, Thesis Adviser; Allen Altman, Second Reader; Christopher K. Callanan, Third Reader; Mary B. Marcy, Provost and Vice President

Simon’s Rock College, Great Barrington, Massachusetts, 2007

Abstract
As GNU/Linux operating systems have been growing in popularity, their size has also been growing. To deal with this growth people created special programs to organize the software available for GNU/Linux users. These programs are called package managers. There exists a very wide variety of package managers, all offering their own benefits and deficiencies. This thesis explores all of the major aspects of package management in the GNU/Linux world. It covers what it is like to work without package managers, primitive package management techniques, and modern package management schemes. The thesis presents the creation of a package manager called Vestigium. The creation of Vestigium is an attempt to automate the handling of file collisions between packages, provide a seamless interface for installing both binary packages and packages built from source, and to allow per package optimization capabilities. Some of the features Vestigium is built to have are lacking in current package managers. No current package manager contains all the features which Vestigium is built to have. Additionally, the thesis explains the problems that developers face in maintaining their respective package managers.

Acknowledgments
I thank my thesis committee members, Paul Shields, Chris Callanan, and Allen Altman for being patient with my error-ridden drafts.

Machine Learning for Load Balancing in the Linux Kernel Jingde Chen Subho S

Machine Learning for Load Balancing in the Linux Kernel
Jingde Chen, Subho S. Banerjee, Zbigniew T. Kalbarczyk, Ravishankar K. Iyer
University of Illinois at Urbana-Champaign

Abstract
The OS load balancing algorithm governs the performance gains provided by a multiprocessor computer system. Linux’s Completely Fair Scheduler (CFS) tracks process loads by average CPU utilization to balance workload between processor cores. That approach maximizes the utilization of processing time but overlooks the contention for lower-level hardware resources. In servers running compute-intensive workloads, an imbalanced need for limited computing resources hinders execution performance. This paper solves the above problem using a machine learning (ML)-based resource-aware load balancer. We describe (1) low-overhead methods for collecting training data; (2) an ML model based on a multi-layer perceptron model that imi-

ACM Reference Format:
Jingde Chen, Subho S. Banerjee, Zbigniew T. Kalbarczyk, and Ravishankar K. Iyer. 2020. Machine Learning for Load Balancing in the Linux Kernel. In 11th ACM SIGOPS Asia-Pacific Workshop on Systems (APSys ’20), August 24–25, 2020, Tsukuba, Japan. ACM, New York, NY, USA, 8 pages. https://doi.org/10.1145/3409963.3410492

1 Introduction
The Linux scheduler, called the Completely Fair Scheduler (CFS) algorithm, enforces a fair scheduling policy that aims to allow all tasks to be executed with a fair quota of processing time. CFS allocates CPU time appropriately among the available hardware threads to allow processes to make forward progress.