Modeling Enhancer-Promoter Interactions with Attention-Based Neural Networks

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Reconstruction of the Global Neural Crest Gene Regulatory Network in Vivo

Reconstruction of the global neural crest gene regulatory network in vivo Ruth M Williams1, Ivan Candido-Ferreira1, Emmanouela Repapi2, Daria Gavriouchkina1,4, Upeka Senanayake1, Jelena Telenius2,3, Stephen Taylor2, Jim Hughes2,3, and Tatjana Sauka-Spengler1,∗ Supplemental Material ∗Lead and corresponding author: Tatjana Sauka-Spengler ([email protected]) 1University of Oxford, MRC Weatherall Institute of Molecular Medicine, Radcliffe Department of Medicine, Oxford, OX3 9DS, UK 2University of Oxford, MRC Centre for Computational Biology, MRC Weatherall Institute of Molecular Medicine, Oxford, OX3 9DS, UK 3University of Oxford, MRC Molecular Haematology Unit, MRC Weatherall Institute of Molecular Medicine, Oxford, OX3 9DS, UK 4Present Address: Okinawa Institute of Science and Technology, Molecular Genetics Unit, Onna, 904-0495, Japan A 25 25 25 25 25 20 20 20 20 20 15 15 15 15 15 10 10 10 10 10 log2(R1_5-6ss) log2(R1_5-6ss) log2(R1_8-10ss) log2(R1_8-10ss) log2(R1_non-NC) 5 5 5 5 5 0 r=0.92 0 r=0.99 0 r=0.96 0 r=0.99 0 r=0.96 0 5 10 15 20 25 0 5 10 15 20 25 0 5 10 15 20 25 0 5 10 15 20 25 0 5 10 15 20 25 log2(R2_non-NC) log2(R2_5-6ss) log2(R3_5-6ss) log2(R2_8-10ss) log2(R3_8-10ss) 25 25 25 25 25 20 20 20 20 20 15 15 15 15 15 10 10 10 10 10 log2(R1_5-6ss) log2(R2_5-6ss) log2(R1_8-10ss) log2(R2_8-10ss) log2(R1_non-NC) 5 5 5 5 5 0 r=0.94 0 r=0.96 0 r=0.95 0 r=0.96 0 r=0.95 0 5 10 15 20 25 0 5 10 15 20 25 0 5 10 15 20 25 0 5 10 15 20 25 0 5 10 15 20 25 log2(R3_non-NC) log2(R4_5-6ss) log2(R3_5-6ss) log2(R4_8-10ss) log2(R3_8-10ss) -

The Title of the Dissertation

UNIVERSITY OF CALIFORNIA SAN DIEGO Novel network-based integrated analyses of multi-omics data reveal new insights into CD8+ T cell differentiation and mouse embryogenesis A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Bioinformatics and Systems Biology by Kai Zhang Committee in charge: Professor Wei Wang, Chair Professor Pavel Arkadjevich Pevzner, Co-Chair Professor Vineet Bafna Professor Cornelis Murre Professor Bing Ren 2018 Copyright Kai Zhang, 2018 All rights reserved. The dissertation of Kai Zhang is approved, and it is accept- able in quality and form for publication on microfilm and electronically: Co-Chair Chair University of California San Diego 2018 iii EPIGRAPH The only true wisdom is in knowing you know nothing. —Socrates iv TABLE OF CONTENTS Signature Page ....................................... iii Epigraph ........................................... iv Table of Contents ...................................... v List of Figures ........................................ viii List of Tables ........................................ ix Acknowledgements ..................................... x Vita ............................................. xi Abstract of the Dissertation ................................. xii Chapter 1 General introduction ............................ 1 1.1 The applications of graph theory in bioinformatics ......... 1 1.2 Leveraging graphs to conduct integrated analyses .......... 4 1.3 References .............................. 6 Chapter 2 Systematic -

Gene PMID WBS Locus ABR 26603386 AASDH 26603386

Supplementary material J Med Genet Gene PMID WBS Locus ABR 26603386 AASDH 26603386 ABCA1 21304579 ABCA13 26603386 ABCA3 25501393 ABCA7 25501393 ABCC1 25501393 ABCC3 25501393 ABCG1 25501393 ABHD10 21304579 ABHD11 25501393 yes ABHD2 25501393 ABHD5 21304579 ABLIM1 21304579;26603386 ACOT12 25501393 ACSF2,CHAD 26603386 ACSL4 21304579 ACSM3 26603386 ACTA2 25501393 ACTN1 26603386 ACTN3 26603386;25501393;25501393 ACTN4 21304579 ACTR1B 21304579 ACVR2A 21304579 ACY3 19897463 ACYP1 21304579 ADA 25501393 ADAM12 21304579 ADAM19 25501393 ADAM32 26603386 ADAMTS1 25501393 ADAMTS10 25501393 ADAMTS12 26603386 ADAMTS17 26603386 ADAMTS6 21304579 ADAMTS7 25501393 ADAMTSL1 21304579 ADAMTSL4 25501393 ADAMTSL5 25501393 ADCY3 25501393 ADK 21304579 ADRBK2 25501393 AEBP1 25501393 AES 25501393 AFAP1,LOC84740 26603386 AFAP1L2 26603386 AFG3L1 21304579 AGAP1 26603386 AGAP9 21304579 Codina-Sola M, et al. J Med Genet 2019; 56:801–808. doi: 10.1136/jmedgenet-2019-106080 Supplementary material J Med Genet AGBL5 21304579 AGPAT3 19897463;25501393 AGRN 25501393 AGXT2L2 25501393 AHCY 25501393 AHDC1 26603386 AHNAK 26603386 AHRR 26603386 AIDA 25501393 AIFM2 21304579 AIG1 21304579 AIP 21304579 AK5 21304579 AKAP1 25501393 AKAP6 21304579 AKNA 21304579 AKR1E2 26603386 AKR7A2 21304579 AKR7A3 26603386 AKR7L 26603386 AKT3 21304579 ALDH18A1 25501393;25501393 ALDH1A3 21304579 ALDH1B1 21304579 ALDH6A1 21304579 ALDOC 21304579 ALG10B 26603386 ALG13 21304579 ALKBH7 25501393 ALPK2 21304579 AMPH 21304579 ANG 21304579 ANGPTL2,RALGPS1 26603386 ANGPTL6 26603386 ANK2 21304579 ANKMY1 26603386 ANKMY2 -

Regulation of Adult Neurogenesis in Mammalian Brain

International Journal of Molecular Sciences Review Regulation of Adult Neurogenesis in Mammalian Brain 1,2, 3, 3,4 Maria Victoria Niklison-Chirou y, Massimiliano Agostini y, Ivano Amelio and Gerry Melino 3,* 1 Centre for Therapeutic Innovation (CTI-Bath), Department of Pharmacy & Pharmacology, University of Bath, Bath BA2 7AY, UK; [email protected] 2 Blizard Institute of Cell and Molecular Science, Barts and the London School of Medicine and Dentistry, Queen Mary University of London, London E1 2AT, UK 3 Department of Experimental Medicine, TOR, University of Rome “Tor Vergata”, 00133 Rome, Italy; [email protected] (M.A.); [email protected] (I.A.) 4 School of Life Sciences, University of Nottingham, Nottingham NG7 2HU, UK * Correspondence: [email protected] These authors contributed equally to this work. y Received: 18 May 2020; Accepted: 7 July 2020; Published: 9 July 2020 Abstract: Adult neurogenesis is a multistage process by which neurons are generated and integrated into existing neuronal circuits. In the adult brain, neurogenesis is mainly localized in two specialized niches, the subgranular zone (SGZ) of the dentate gyrus and the subventricular zone (SVZ) adjacent to the lateral ventricles. Neurogenesis plays a fundamental role in postnatal brain, where it is required for neuronal plasticity. Moreover, perturbation of adult neurogenesis contributes to several human diseases, including cognitive impairment and neurodegenerative diseases. The interplay between extrinsic and intrinsic factors is fundamental in regulating neurogenesis. Over the past decades, several studies on intrinsic pathways, including transcription factors, have highlighted their fundamental role in regulating every stage of neurogenesis. However, it is likely that transcriptional regulation is part of a more sophisticated regulatory network, which includes epigenetic modifications, non-coding RNAs and metabolic pathways. -

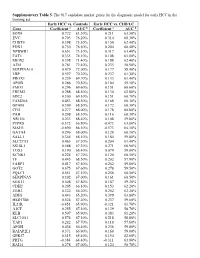

Supplementary Table 5. the 917 Candidate Marker Genes for the Diagnostic Model for Early HCC in the Training Set

Supplementary Table 5. The 917 candidate marker genes for the diagnostic model for early HCC in the training set. Early HCC vs. Controls Early HCC vs. CHB/LC Gene Coefficient a AUC b Coefficient a AUC b SOX9 0.722 81.30% 0.211 63.50% EVC 0.703 76.20% 0.314 68.30% CHST9 0.398 75.50% 0.150 62.40% PDX1 0.730 76.50% 0.204 60.40% NPBWR1 0.651 73.10% 0.317 63.40% FAT1 0.335 74.10% 0.108 61.00% MEIS2 0.398 71.40% 0.188 62.40% A2M 0.761 72.40% 0.235 58.90% SERPINA10 0.479 72.00% 0.177 58.40% LBP 0.597 70.20% 0.237 61.30% PROX1 0.239 69.70% 0.133 61.40% APOB 0.286 70.50% 0.104 59.10% FMO3 0.296 69.60% 0.151 60.60% FREM2 0.288 68.50% 0.130 62.80% SDC2 0.300 69.10% 0.151 60.70% FAM20A 0.453 68.50% 0.168 60.30% GPAM 0.309 68.50% 0.172 60.30% CFH 0.277 68.00% 0.178 60.80% PAH 0.208 68.30% 0.116 60.30% NR1H4 0.233 68.40% 0.108 59.80% PTPRS -0.572 66.80% -0.473 63.00% SIAH3 -0.690 66.10% -0.573 64.10% GATA4 0.296 68.00% 0.128 60.10% SALL1 0.344 68.10% 0.184 59.80% SLC27A5 0.463 67.30% 0.204 61.40% SS18L1 0.588 67.30% 0.271 60.90% TOX3 0.190 68.40% 0.079 59.00% KCNK1 0.224 67.70% 0.120 60.10% TF 0.445 68.50% 0.202 57.90% FARP1 0.417 67.50% 0.252 59.80% GOT2 0.675 67.60% 0.278 59.50% PQLC1 0.651 67.10% 0.258 60.50% SERPINA5 0.302 67.00% 0.161 60.50% SOX13 0.508 67.80% 0.187 59.30% CDH2 0.205 66.10% 0.153 62.20% ITIH2 0.322 66.20% 0.252 62.20% ADIG 0.443 65.20% 0.399 63.80% HSD17B6 0.524 67.20% 0.237 59.60% IL21R -0.451 65.90% -0.321 61.70% A1CF 0.255 67.10% 0.139 58.70% KLB 0.507 65.90% 0.383 61.20% SLC10A1 0.574 67.10% 0.218 58.80% YAP1 0.282 67.70% 0.118 -

The Suppressive Effects of 1,25-Dihydroxyvitamin D3 and Vitamin D Receptor on Brown Adipocyte Differentiation and Mitochondrial Respiration

University of Tennessee, Knoxville TRACE: Tennessee Research and Creative Exchange Masters Theses Graduate School 8-2014 The Suppressive Effects of 1,25-dihydroxyvitamin D3 and Vitamin D Receptor on Brown Adipocyte Differentiation and Mitochondrial Respiration Carolyn Jeanne Ricciardi University of Tennessee - Knoxville, [email protected] Follow this and additional works at: https://trace.tennessee.edu/utk_gradthes Part of the Molecular, Genetic, and Biochemical Nutrition Commons Recommended Citation Ricciardi, Carolyn Jeanne, "The Suppressive Effects of 1,25-dihydroxyvitamin D3 and Vitamin D Receptor on Brown Adipocyte Differentiation and Mitochondrial Respiration. " Master's Thesis, University of Tennessee, 2014. https://trace.tennessee.edu/utk_gradthes/2876 This Thesis is brought to you for free and open access by the Graduate School at TRACE: Tennessee Research and Creative Exchange. It has been accepted for inclusion in Masters Theses by an authorized administrator of TRACE: Tennessee Research and Creative Exchange. For more information, please contact [email protected]. To the Graduate Council: I am submitting herewith a thesis written by Carolyn Jeanne Ricciardi entitled "The Suppressive Effects of 1,25-dihydroxyvitamin D3 and Vitamin D Receptor on Brown Adipocyte Differentiation and Mitochondrial Respiration." I have examined the final electronic copy of this thesis for form and content and recommend that it be accepted in partial fulfillment of the equirr ements for the degree of Master of Science, with a major in Nutrition. -

Table S1. Complete Gene Expression Data from Human Diabetes RT² Profiler™ PCR Array Receptors, Transporters & Channels* A

Table S1. Complete gene expression data from Human Diabetes RT² Profiler™ PCR Array Position Unigene GenBank Symbol Description FC Average Ct Receptors, Transporters & Channels* NGT GDM A01 Hs,5447 NM_000352 ABCC8 ATP-binding cassette, sub-family C (CFTR/MRP), member 8 0.93 35.00 35.00 A04 0Hs,2549 NM_000025 ADRB3 Adrenergic, beta-3-, receptor 0.88 34.92 35.00 A07 Hs,1307 NM_000486 AQP2 Aquaporin 2 (collecting duct) 0.93 35.00 35.00 A09 30Hs,5117 NM_001123 CCR2 Chemokine (C-C motif) receptor 2 1.00 26.28 26.17 A10 94Hs,5916 396NM_006139 CD28 CD28 molecule 0.81 34.51 34.71 A11 29Hs,5126 NM_001712 CEACAM1 Carcinoembryonic antigen-related cell adhesion molecule 1 1.31 26.08 25.59 B01 82Hs,2478 NM_005214 CTLA4 (biliaryCytotoxic glycoprotein) T-lymphocyte -associated protein 4 0.53 30.90 31.71 B11 24Hs,208 NM_000160 GCGR Glucagon receptor 0.93 35.00 35.00 C01 Hs,3891 NM_002062 GLP1R Glucagon-like peptide 1 receptor 0.93 35.00 35.00 C07 03Hs,6434 NM_000201 ICAM1 Intercellular adhesion molecule 1 0.84 28.74 28.89 D02 47Hs,5134 NM_000418 IL4R Interleukin 4 receptor 0.64 34.22 34.75 D06 57Hs,4657 NM_000208 INSR Insulin receptor 0.93 35.00 35.00 E05 44Hs,4312 NM_006178 NSF N-ethylmaleimide-sensitive factor 0.48 28.42 29.37 F08 79Hs,2961 NM_004578 RAB4A RAB4A, member RAS oncogene family 0.88 20.55 20.63 F10 69Hs,7287 NM_000655 SELL Selectin L 0.97 23.89 23.83 F11 56Hs,3806 NM_001042 SLC2A4 Solute carrier family 2 (facilitated glucose transporter), member 4 0.77 34.72 35.00 F12 91Hs,5111 NM_003825 SNAP23 Synaptosomal-associated protein, 23kDa 3.90 -

Transcriptional and Post-Transcriptional Regulation of ATP-Binding Cassette Transporter Expression

Transcriptional and Post-transcriptional Regulation of ATP-binding Cassette Transporter Expression by Aparna Chhibber DISSERTATION Submitted in partial satisfaction of the requirements for the degree of DOCTOR OF PHILOSOPHY in Pharmaceutical Sciences and Pbarmacogenomies in the Copyright 2014 by Aparna Chhibber ii Acknowledgements First and foremost, I would like to thank my advisor, Dr. Deanna Kroetz. More than just a research advisor, Deanna has clearly made it a priority to guide her students to become better scientists, and I am grateful for the countless hours she has spent editing papers, developing presentations, discussing research, and so much more. I would not have made it this far without her support and guidance. My thesis committee has provided valuable advice through the years. Dr. Nadav Ahituv in particular has been a source of support from my first year in the graduate program as my academic advisor, qualifying exam committee chair, and finally thesis committee member. Dr. Kathy Giacomini graciously stepped in as a member of my thesis committee in my 3rd year, and Dr. Steven Brenner provided valuable input as thesis committee member in my 2nd year. My labmates over the past five years have been incredible colleagues and friends. Dr. Svetlana Markova first welcomed me into the lab and taught me numerous laboratory techniques, and has always been willing to act as a sounding board. Michael Martin has been my partner-in-crime in the lab from the beginning, and has made my days in lab fly by. Dr. Yingmei Lui has made the lab run smoothly, and has always been willing to jump in to help me at a moment’s notice. -

Nrf1 and Nrf2 Positively and C-Fos and Fra1 Negatively Regulate the Human Antioxidant Response Element-Mediated Expression of NAD(P)H:Quinone Oxidoreductase1 Gene

Proc. Natl. Acad. Sci. USA Vol. 93, pp. 14960–14965, December 1996 Pharmacology Nrf1 and Nrf2 positively and c-Fos and Fra1 negatively regulate the human antioxidant response element-mediated expression of NAD(P)H:quinone oxidoreductase1 gene RADJENDIRANE VENUGOPAL AND ANIL K. JAISWAL* Department of Pharmacology, Fox Chase Cancer Center, 7701 Burholme Avenue, Philadelphia, PA 19111 Communicated by Y. W. Kan, University of California, San Francisco, CA, August 5, 1996 (received for review February 21, 1996) ABSTRACT Twenty-four base pairs of the human anti- (c-Jun, Jun-D, and Jun-B) form homodimers among them- oxidant response element (hARE) are required for high basal selves and heterodimers with c-Fos and Fra (Fra1 and Fra2) transcription of the NAD(P)H:quinone oxidoreductase1 proteins and bind to the TRE (a single AP1 binding site) and (NQO1) gene and its induction in response to xenobiotics and regulate the expression of target genes (6). Nrf1 and Nrf2 are antioxidants. hARE is a unique cis-element that contains one recently identified NF-E2-related factors that bind to the perfect and one imperfect AP1 element arranged as inverse sequence GCTGAGTCATGATGAGTCA (7, 8). The Nrf1 repeats separated by 3 bp, followed by a ‘‘GC’’ box. We report and Nrf2 contain 742 and 589 amino acids, respectively. Both here that Jun, Fos, Fra, and Nrf nuclear transcription factors these proteins display marked homology to the NF-E2, which bind to the hARE. Overexpression of cDNA derived combi- regulates expression of globin genes in developing erythroid nations of the nuclear proteins Jun and Fos or Jun and Fra1 cells through interaction with upstream AP1-like recognition repressed hARE-mediated chloramphenicol acetyltransferase sites (9). -

Transcription Factors Recognize DNA Shape Without Nucleotide Recognition

bioRxiv preprint doi: https://doi.org/10.1101/143677; this version posted May 29, 2017. The copyright holder for this preprint (which was not certified by peer review) is the author/funder. All rights reserved. No reuse allowed without permission. Transcription+factors+recognize+DNA+shape+without+nucleotide+recognition++ Md.$Abul$Hassan$Samee1,$Benoit$G.$Bruneau1,2,$Katherine$S.$Pollard$1,3$ 1:$Gladstone$Institutes,$1650$Owens$St.,$San$Francisco,$CA$94158$ 2:$$Department$of$Pediatrics$and$Cardiovascular$Research$Institute,$University$of$California,$ San$Francisco,$CA$94158$ 3:$Division$of$Bioinformatics,$Institute$for$Human$Genetics,$and$Institute$for$Computational$ Health$Sciences,$University$of$California,$San$Francisco,$CA$94158$ $ + bioRxiv preprint doi: https://doi.org/10.1101/143677; this version posted May 29, 2017. The copyright holder for this preprint (which was not certified by peer review) is the author/funder. All rights reserved. No reuse allowed without permission. Abstract+ We$hypothesized$that$transcription$factors$(TFs)$recognize$DNA$shape$without$nucleotide$ sequence$recognition.$Motivating$an$independent$role$for$shape,$many$TF$binding$sites$lack$ a$sequenceZmotif,$DNA$shape$adds$specificity$to$sequenceZmotifs,$and$different$sequences$ can$ encode$ similar$ shapes.$ We$ therefore$ asked$ if$ binding$ sites$ of$ a$ TF$ are$ enriched$ for$ specific$patterns$of$DNA$shapeZfeatures,$e.g.,$helical$twist.$We$developed$ShapeMF,$which$ discovers$ these$ shapeZmotifs$ de%novo%without$ taking$ sequence$ information$ into$ account.$ -

Influence of DNA Methylation on Transcription Factor Binding

Influence of DNA methylation on transcription factor binding Inauguraldissertation zur Erlangung der Würde eines Doktors der Philosophie vorgelegt der Philosophisch-Naturwissenschaftlichen Fakultät der Universität Basel von Silvia Domcke aus München, Deutschland Basel, 2017 Originaldokument gespeichert auf dem Dokumentenserver der Universität Basel edoc.unibas.ch Genehmigt von der Philosophisch-Naturwissenschaftlichen Fakultät auf Antrag von Prof. Dr. Dirk Schübeler und Dr. François Spitz. Basel, den 18.04.2017 Prof. Dr. Martin Spiess (Dekan) "While Occam's razor is a useful tool in the physical sciences, it can be a very dangerous implement in biology. It is thus very rash to use simplicity and elegance as a guide in biological research. While DNA could be claimed to be both simple and elegant, it must be remembered that DNA almost certainly originated fairly close to the origin of life when things were necessarily simple or they would not have got going. Biologists must constantly keep in mind that what they see was not designed, but rather evolved." ---- Francis Crick in 'What mad Pursuit' (1988) ---- i ii Acknowledgements This thesis would not have been possible without the support and contribution of many people. I would like to thank my PhD advisor Dirk Schübeler for giving me the opportunity to work in his group and for many excellent as well as thought- provoking scientific discussions. I especially appreciate that it was always possible to get critical input and advice while still having a lot of freedom and flexibility. For direct contributions to this study I would like to thank the co-authors of the published manuscript, in particular Anaïs Bardet. -

Supplement. Transcriptional Factors (TF), Protein Name and Their Description Or Function

Supplement. Transcriptional factors (TF), protein name and their description or function. TF Protein name TF description/function ARID3A AT rich interactive domain 3A (BRIGHT-like) This gene encodes a member of the ARID (AT-rich interaction domain) family of DNA binding proteins. ATF4 Activating Transcription Factor 4 Transcriptional activator. Binds the cAMP response element (CRE) (consensus: 5-GTGACGT[AC][AG]-3), a sequence present in many viral and cellular promoters. CTCF CCCTC-Binding Factor Chromatin binding factor that binds to DNA sequence specific sites. Involved in transcriptional regulation by binding to chromatin insulators and preventing interaction between promoter and nearby enhancers and silencers. The protein can bind a histone acetyltransferase (HAT)-containing complex and function as a transcriptional activator or bind a histone deacetylase (HDAC)-containing complex and function as a transcriptional repressor. E2F1-6 E2F transcription factors 1-6 The protein encoded by this gene is a member of the E2F family of transcription factors. The E2F family plays a crucial role in the control of cell cycle and action of tumor suppressor proteins and is also a target of the transforming proteins of small DNA tumor viruses. The E2F proteins contain several evolutionally conserved domains found in most members of the family. These domains include a DNA binding domain, a dimerization domain which determines interaction with the differentiation regulated transcription factor proteins (DP), a transactivation domain enriched in acidic amino acids, and a tumor suppressor protein association domain which is embedded within the transactivation domain. EBF1 Transcription factor COE1 EBF1 has been shown to interact with ZNF423 and CREB binding proteins.