[Cs.LG] 12 Nov 2015 This Leads to Dependence on the Number of Samples That Is Nearly Order-Optimal

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

FM 4-02 Force Health Protection in a Global Environment

FM 4-02 (FM 8-10) FORCE HEALTH PROTECTION IN A GLOBAL ENVIRONMENT HEADQUARTERS, DEPARTMENT OF THE ARMY FEBRUARY 2003 DISTRIBUTION RESTRICTION: Approved for public release; distribution is unlimited. *FM 4-02 (FM 8-10) FIELD MANUAL HEADQUARTERS NO. 4-02 DEPARTMENT OF THE ARMY Washington, DC, 13 February 2003 FORCE HEALTH PROTECTION IN A GLOBAL ENVIRONMENT TABLE OF CONTENTS Page PREFACE .......................................................................................................... vi CHAPTER 1. FORCE HEALTH PROTECTION ............................................ 1-1 1-1. Overview ............................................................................. 1-1 1-2. Joint Vision 2020 ................................................................... 1-1 1-3. Joint Health Service Support Vision ............................................. 1-1 1-4. Healthy and Fit Force .............................................................. 1-1 1-5. Casualty Prevention ................................................................ 1-2 1-6. Casualty Care and Management .................................................. 1-2 CHAPTER 2. FUNDAMENTALS OF FORCE HEALTH PROTECTION IN A GLOBAL ENVIRONMENT ................................................ 2-1 2-1. The Health Service Support System ............................................. 2-1 2-2. Principles of Force Health Protection in a Global Environment ............ 2-2 2-3. The Medical Threat and Medical Intelligence Preparation of the Battle- field .............................................................................. -

Download Moses Mp3 by French Montana Ft Chris Brown and Migos

Download moses mp3 by french montana ft chris brown and migos CLICK HERE TO DOWNLOAD French Montana Ft. Chris Brown & Migos Moses free mp3 download and stream. “Moses,” French Montana’s third single off his Casino Life 2 mixtape features Chris Brown and Migos, along with production from three Atlanta beat-makers. DJ Spinz, Southside, and TM88 all. 8/5/ · "Moses" featuring Chris Brown and Migos is another heater off of French Montana's new tape. French Montana's new tape has such an eclectic /5(). Ca khúc Moses do ca sĩ French Montana, Chris Brown, Migos thể hiện, thuộc thể loại R&B/Hip Hop/Rap. Các bạn có thể nghe, download (tải nhạc) bài hát moses mp3, playlist/album, MV/Video moses miễn phí tại renuzap.podarokideal.ru French Montana ft Chris Brown & Migos (Video) – Hold Up | Mp4 Download. French Montana drops the video “Hold Up,” featuring Chris Brown and Migos. French Montana teamed up with the hottest rap group on the planet and the C-Breezy to drop the video “Hold Up,” an unreleased track from MC4. Migos and Chris Brown steal the show on this. French Montana - Moses ft. Chris Brown & Migos (Official Video) mp3 Duration Size MB / French Montana 1 Omarion Ft. Kid Ink & French Montana - I'm Up (Official Music Video) mp3 Duration Size MB / Omarion 2. Yeah, I’m feeling like Moses I’m all in the ocean, I’m parting, divided up in it like Moses She switching the motions and making them faces just like an emoji Pineapple Fanta, it mixed with the Xanax, she loving the codeine All of my killas they ready to follow me, I feel like Moses [French Montana (Chris Brown):]. -

Mens C H S Piel Verantwo R Tung

UPDATE 2 016 PEOPLE MENS C H GAMING RESPONSIBILITY S PIEL VERANTWOR TUNG INTERIM REPORT FOR THE CASINOS AUSTRIA AND AUSTRIAN LOTTERIES GROUP Our Business Year 2016 Total Workforce* (average annual full-time equivalent; 4,233 as of 31 December 2016) Women 39.3 % Men 60.7 % * Casinos Austria AG (incl. Cuisino, CAST, CCB & CALL, CAIH), Österreichische Lotterien Ges.m.b.H., win2day (incl. Rabcat), WINWIN and tipp3 Taxes and Other Duties 609.25 (in millions euro) Casinos Austria AG and Österreichische Lotterien Ges.m.b.H. Casino Guests 3.01 (in millions) excluding Casinos Austria International Österreichische Casinos Austria AG* 215 Lotterien Ges.m.b.H.* delegates * Services sector Sports Funding 80 (in millions euro) Österreichische Lotterien Ges.m.b.H. Environmental Indicators Energy consumption in kWh 42,846,490 Water consumption in m3 77,469 Total consumption in 2016 at RW44/46 and MC4 sites, casinos and WINWIN outlets Companies in 2016 – Overview as of 31 December 2016 1 SHAREHOLDER STRUCTURE IN % SHAREHOLDER STRUCTURE IN % 4 2 Casinos Austria AG Österreichische Lotterien Gesellschaft m.b.H. 2 1 Bankhaus Schelhammer & Schattera AG 1 Casinos Austria AG 5.3 % 1 68 % 3 2 Medial Beteiligungs-GmbH 2 Lotto-Toto Holding Gesellschaft m.b.H. 38.3 % 32 % • UNIQA • CAME Holding GmbH Shareholders • Raiffeisen Gruppe • CLS Beteiligungs GmbH • Bankhaus Schelhammer & Schattera AG (Bankhaus Schelhammer & Schattera AG) (Privatstiftung Dipl.Ing. Melchart) 3 Österreichische Bundes- und (BAIH Beteiligungsverwaltungs GmbH) Industriebeteiligungen GmbH (ÖBIB) • RSV Beteiligungs GmbH 33.2 % (Novomatic AG) • LTB Beteiligungs GmbH * 4 Novomatic AG (BAIH Beteiligungsverwaltungs GmbH) 17.2 % (Novomatic AG) (Austrian Gaming Holding) * 5 Private Shareholders * Changes in the reporting period: • Österreichischer Rundfunk 6 % acquisition of shareholding by Novomatic AG) * Shareholdings in % CASINOS AUSTRIA AG LOTTO-TOTO HOLDING Gesellschaft m.b.H. -

Beanie Sigel Albums Free Download

Beanie sigel albums free download Continue Also known as B. Siegel, Beanie, Beanie Mac, Beanie S., Beanie Segal, Beanie Seagel, Beanie Segal, Beanie Seigal, Beanie Seigel, Beanie Siegal, Beanie Siegel, Beanie Siegle, Beanie Sigel Production Team, BeanieSigel, Beannie, Beans, Beenie Beenie, Beenie Seigel, Beenie Seigel, Beenie Siegal, Beenie Siegle, Beenie Sigel, Bennie Sigel, Bennie Sigel Listen to this album and over 60 million songs with unlimited streaming plans. 1 month free, then $14.99/month Page 2 You are currently listening to samples. Listen to more than 60 million songs with an unlimited streaming plan. Listen to this album and over 60 million songs with unlimited streaming plans. 1 month free, then $14.99/month Page 3 You are currently listening to samples. Listen to more than 60 million songs with an unlimited streaming plan. Listen to this album and over 60 million songs with unlimited streaming plans. 1 month free, then $14.99/month Page 4 You are currently listening to samples. Listen to more than 60 million songs with an unlimited streaming plan. Listen to this album and over 60 million songs with unlimited streaming plans. 1 month free, then $14.99/month is a discography of Beanie Sigel, an American rapper. Albums Studio Albums List of Studio Albums, with Selected Chart Positions, Sales Figures and Title Certification Album Details Peak Chart Position Sales Certificates US 2000 Label: Roc-A-Fella, Def Jam Formats: CD, LP, cassette, digital download 5 2 1 USA: 695,000 RIAA: Gold: June 26, 2001 Label: Roc-A-Fella, Def Jam Formats: CD, LP, cassette, digital download 5 1 USA: 585,000 RIAA: Gold B. -

Kodak Black Dating Jt, Black List Dating Sites, Forbes Millionaire Dating Online, Top Thai Dating Websites» Datenschutzerklärung Für Das ESAF in Zug Habe /10()

Kodak Black Dating Jt, black list dating sites, forbes millionaire dating online, top thai dating websites» Datenschutzerklärung Für das ESAF in Zug habe /10(). As of , Kodak Black’s is not dating anyone. Kodak is 23 years old. According to CelebsCouples, Kodak Black had at least 1 relationship previously. He has not been previously engaged. Hold up! Did we miss something? Video has surfaced of rapper Kodak Black proposing to Yung Miami of the City Girls?!. In the video, you can see Kodak pulling out a ring and getting down on one knee and the crowd going crazy; after what appears to be Yung Miami saying yes. Dec 11, · Yung Miami On JT Release, Sex Raps, Trina & Meeting Drake - Duration: HOT 97 , views. Kodak Black Talks Decision To Leave Florida, His New Girlfriend. Kodak Black Dating Jt, site de rencontre femme bulgare, best free gay online dating sites, paranormal dating agency 8 read online. 61 ans. Etait en ligne il y a 1 jour. 83 ans. Chattez! 1m 24 ans. 4 photos. Kamisu, 41 ans. 41 ans. Perpignan. En ligne. De zoekresultaten bevatten mogelijk ongepaste woorden. Rapper Kodak Black, who was released from a Florida jail earlier this month, met the wrath of black women over the weekend after making comments about his dating preferences. On Kodak Black was born in Pompano Beach, Florida. He made his million dollar fortune with MC4 & Tunnel Vision. The musician is currently single, his starsign is Gemini and he is now 23 years of age. Kodak Black Facts & Wiki. Jun 28, · Kodak Black Net Worth and Salary in Kodak Black Net Worth. -

Recipes for Success

Recipes for Success Annual Report 2016 Facts and Figures Group Casinos Austria Casinos Austria Österreichische (consolidated) AG International Lotterien (consolidated) Ges.m.b.H. Gaming revenues and bets placed incl. ancillary revenues in millions euro 2016 3,885.95 – 126.37 – 2015 3,599.69 – 133.75 – Gaming revenues in millions euro 2016 – 326.83 – 3,351.98 2015 – 310.091 – 3,084.05 Taxes, duties and social security contributions in Austria in millions euro 2016 Total 609.25 127.45 – 466.12 Gaming-related taxes and duties 525.75 93.41 – 430.94 Other taxes, duties and social security contributions 83.5 34.04 – 35.18 2015 Total 589.35 118.93 – 461.28 Gaming-related taxes and duties 518.83 88.25 – 429.39 Other taxes, duties and social security contributions 70.52 30.68 – 31.89 Operating result/Operating profit in millions euro 2016 150.06 29.99 34.79 57.74 2015 100.47 9.701 8.08 61.921 Annual surplus in millions euro 2016 – 74.22 – 67.70 2015 – 20.35 – 60.32 Group result in millions euro 2016 91.20 – 9.14 – 2015 55.28 – -3.81 – Employees annual average full-time equivalent (FTE) 2016 4,2332 1,8083 1,717 486 2015 4,2562 1,6993 1,787 497 Casino guests in millions 2016 7.81 3.01 4.80 – 2015 7.57 2.72 4.85 – Gaming tables 2016 569 232 337 – 2015 545 211 334 – Slot machines 2016 6,402 2,062 4,340 – 2015 6,365 2,072 4,293 – Video lottery terminals (WINWIN, CAI) 2016 1,666 – 1,038 – 2015 1,393 – 750 – 1 Adjusted. -

Hype the Motion Picture Soundtrack (Full Album) Download Hype the Motion Picture Soundtrack (Full Album) Download

hype the motion picture soundtrack (full album) download Hype the motion picture soundtrack (full album) download. Artist: Asura Album: Realize Released: 2021 Style: Power Metal. Format: MP3 320Kbps. The Residents – Morning Music (2021) Artist: The Residents Album: Morning Music Released: 2021 Style: Art Rock. Format: MP3 320Kbps. Martin Denny – Afro-Desia Remastered (2021) Artist: Martin Denny Album: Afro-Desia Remastered Released: 2021 Style: World Music. Format: MP3 320Kbps. Leo Baroso – Troubadour (2021) Artist: Leo Baroso Album: Troubadour Released: 2021 Style: Dance. Format: MP3 320Kbps. Earl Hines – Keep Moving (2021) Artist: Earl Hines Album: Keep Moving Released: 2021 Style: Jazz. Format: MP3 320Kbps. Julie London – Oldies Selection Best Of Production, Vol. 1 (2021) Artist: Julie London Album: Oldies Selection Best Of Production, Vol. 1 Released: 2021 Style: Jazz. Format: MP3 320Kbps. Dalida – Dalida! (2021) Artist: Dalida Album: Dalida! Released: 2021 Style: Chanson. Format: MP3 320Kbps. Bob James – One Remastered (2021) Artist: Bob James Album: One Remastered Released: 2021 Style: Jazz. Format: MP3 320Kbps. Alice Was A Man – Alice Was A Man (2021) Artist: Alice Was A Man Album: Alice Was A Man Released: 2021 Style: Heavy Metal. Format: MP3 320Kbps. Preston Epps – Bongo Bongo Bongo! Remastered (2021) Artist: Preston Epps Album: Bongo Bongo Bongo! Remastered Released: 2021 Style: World Music. Format: MP3 320Kbps. Infected – Coffins (2021) Artist: Infected Album: Coffins Released: 2021 Style: Death Metal. Format: MP3 320Kbps. Rob Noyes – Arc Minutes (2021) Artist: Rob Noyes Album: Arc Minutes Released: 2021 Style: Indie Folk. Format: MP3 320Kbps. Godless Throne – Damnation Through Design (2021) Artist: Godless Throne Album: Damnation Through Design Released: 2021 Style: Symphonic Death Metal. -

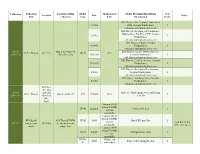

Collection Collection Location Within Media Media Label Media Markings/Identifying # of Collection Location Date Notes Title Collection Type Info Information Items

Collection Collection Location within Media Media Label Media Markings/Identifying # of Collection Location Date Notes Title collection Type Info Information Items RPI Players, The Tempest, November 11/1/02 2002, Scorpio Productions, 1 [email protected] RPI Players, Evening of Performance, Making Nice, No Exit, CUT, Scorpio 2/1/02 1 Productions, [email protected] RPI Players, Pippin, Scorpio 4/24/03 Productions, 1 [email protected] AC 29 Box 1 of Series VI RPI Players, Up the Down Staircase, R.P.I. Players B-7-2-4 DVD N/A (2009-21) (box 29 of 29) 10/24/03 Scorpio Productions, 1 [email protected] RPI Players, Lend me a tenor, Scorpio 11/14/03 Productions, 1 [email protected] RPI Players, Evening of Performance, 2/1/04 Scorpio Productions, 1 [email protected] RPI Player, Anything Goes, Scorpio 4/1/04 Productions, 1 [email protected] B-7-2-4 (See also AC 29 material Players 75th Reunion, 4-16-2005 John R.P.I. Players Box 4, folder 29 CD 4/16/05 N/A 1 (2005-07) found on E. Kolb office desk) Imation CD-R 80min/700MB, CD-R undated Class of '81 pics 1 1x-52x compatible Imation CD-R 80min/700MB, RPI digital all CDs and DVDs CD-R 2005 Gm & PU pix Gala 1 1x-52x each disc is in a 2012-12 images and B-13-8-6 are located in one compatible white disc sleeve music single box Imation CD-R 80min/700MB, CD-R 6/6/05 NRB photo for Beth 1 1x-52x compatible DVD+ Philips 120 2005 Dance Club Spring Recital 1 R min video Collection Collection Location within Media Media Label Media Markings/Identifying -

Envisioning a Compulsory-Licensing System for Digital Samples Through Emergent Technologies

SABBAGH IN PRINTER FINAL (DO NOT DELETE) 9/16/2019 11:13 PM ENVISIONING A COMPULSORY-LICENSING SYSTEM FOR DIGITAL SAMPLES THROUGH EMERGENT TECHNOLOGIES CHRISTOPHER R. SABBAGH† ABSTRACT Despite the rapid development of modern creative culture, federal copyright law has remained largely stable, steeped in decades of tradition and history. For the most part, copyright finds strength in its stability, surviving the rise of recorded music, software programs, and, perhaps the most disruptive technology of our generation, the internet. On the other hand, copyright’s resistance to change can be detrimental, as with digital sampling. Although sampling can be a highly creative practice, and although copyright purports to promote creativity, current copyright law often interferes with the practice of sampling. The result is a largely broken system: Those who can legally sample are usually able to do so because they are wealthy, influential, or both. Those who cannot legally sample often sample illegally. Many scholars have suggested statutory solutions to this problem. Arguably, the most workable solutions are rooted in compulsory licenses. Unfortunately, implementing these solutions is practically difficult. Two recent developments invite us to revisit these proposals. First, with the passage of the Music Modernization Act (“MMA”), Congress has evinced a willingness to “modernize” parts of copyright law. Second, emergent technologies—from the MMA’s musical-works database to blockchain to smart contracts—can be leveraged to more easily implement a compulsory-licensing solution. This time around, rather than simply discuss why this solution is favorable, this Note will focus on how it can be implemented. Copyright © 2019 Christopher R. -

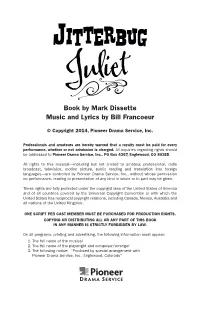

Jitterbug Juliet

Book by Mark Dissette Music and Lyrics by Bill Francoeur © Copyright 2014, Pioneer Drama Service, Inc. Professionals and amateurs are hereby warned that a royalty must be paid for every performance, whether or not admission is charged. All inquiries regarding rights should be addressed to Pioneer Drama Service, Inc., PO Box 4267, Englewood, CO 80155. All rights to this musical—including but not limited to amateur, professional, radio broadcast, television, motion picture, public reading and translation into foreign languages—are controlled by Pioneer Drama Service, Inc., without whose permission no performance, reading or presentation of any kind in whole or in part may be given. These rights are fully protected under the copyright laws of the United States of America and of all countries covered by the Universal Copyright Convention or with which the United States has reciprocal copyright relations, including Canada, Mexico, Australia and all nations of the United Kingdom. ONE SCRIPT PER CAST MEMBER MUST BE PURCHASED FOR PRODUCTION RIGHTS. COPYING OR DISTRIBUTING ALL OR ANY PART OF THIS BOOK IN ANY MANNER IS STRICTLY FORBIDDEN BY LAW. On all programs, printing and advertising, the following information must appear: 1. The full name of the musical 2. The full name of the playwright and composer/arranger 3. The following notice: “Produced by special arrangement with Pioneer Drama Service, Inc., Englewood, Colorado” JITTERBUG JULIET Book by MARK DISSETTE Music and lyrics by BILL FRANCOEUR CAST OF CHARACTERS (In Order of Speaking) # of lines -

Main Chick Remix French Montana Mp3 Download

Main chick remix french montana mp3 download CLICK TO DOWNLOAD 07/07/ · HNHH Premiere! Listen to a huge remix of Kid Ink's 'Main Chick' featuring French Montana, Yo Gotti, Tyga & Lil Bibby. Kid Ink is back with a big remix for his main single about his main chick /5(). Stream Main Chick (feat. Chris Brown, French Montana, Yo Gotti, Tyga & Lil Bibby) by Kid Ink from desktop or your mobile device. Ca khúc Main Chick (Remix) do ca sĩ Chris Brown, French Montana, Yo Gotti, V.A thể hiện, thuộc thể loại R&B/Hip Hop/Rap.Các bạn có thể nghe, download (tải nhạc) bài hát main chick (remix) mp3, playlist/album, MV/Video main chick (remix) miễn phí tại renuzap.podarokideal.ru 08/07/ · Kid Ink Ft. Chris Brown, French Montana, Yo Gotti, Tyga & Lil Bibby – Main Chick (Remix) July 8, abegmusic MUSIC 0 Promote Your Song Here Whatsapp + Kid Ink kicks off the new week today with the official remix for his DJ Mustard-produced track "Main Chick." The lineup on this remix is stellar, featuring French Montana, Yo Gotti, Tyga, and Lil Author: Zach Frydenlund. 07/07/ · Main Chick (Remix 2) Lyrics: I told her "fuck that nigga" / (Mustard on the beat ho) / I don't know your name, but you've heard my name / I know why you came / Tryna get that name, but you've. On May 6, , Kid Ink performed "Main Chick" and "Show Me" on Jimmy Kimmel Live! with Travis Barker and Eric Bellinger substituting for Chris Brown. Remixes. The official remix of the song features Chris Brown, French Montana, Yo Gotti, Tyga, Lil Bibby and a new verse by Kid Ink. -

Slide French Montana Free Mp3 Download French Montana MONTANA (ALBUM DOWNLOAD#) WORKING

slide french montana free mp3 download French Montana MONTANA (ALBUM DOWNLOAD#) WORKING. MP3. Montana (stylised in all caps) is the third studio album by Moroccan-American rapper French Montana,released throughEpic Records on December 6, 2019. It features the singles "No Stylist" featuring Drake, "Slide" featuring Blueface and Lil Tjay,[3] "Writing on the Wall" featuring Post Malone, Cardi B, and Rvssian,[4] "Wiggle It" featuring City Girls, "SuicideDoors" featuring Gunna,[5] and "Twisted" featuring Juicy J, Logic and ASAP Rocky. It also includes the singles "Lockjaw" featuring Kodak Black and"No Shopping" featuring Drake, which were previously released on French Montana's 2016mixtape MC4. Artist: French Montana Album: MONTANA Release: 2019 Genre: Hip-Hop Quality: mp3, 320 kbps. Track list: 01. Montana 02. Suicide Doors (feat. Gunna) 03. 50’s & 100’s (feat. Juicy J) 04. What It Look Like 05. Lifestyle (feat. Kodak Black & Kevin Gates) 06. Salam Alaykum 07. That Way 08. Say Goodbye (feat. Belly) 09. Coke Wave Boys (feat. Chinx & Max B) 10. Writing on the Wall (feat. Post Malone & Cardi B & Rvssian) 11. Out Of Your Mind (feat. Swae Lee & Chris Brown) 12. Wanna Be (feat. PARTYNEXTDOOR) 13. Twisted (feat. Juicy J & Logic & A$AP Rocky) 14. Hoop (feat. Quavo) 15. No Stylist (feat. Drake) 16. Wiggle It (feat. City Girls) 17. Slide (feat. Blueface & Lil Tjay) 18. Saucy 19. No Shopping (feat. Drake) 20. Lockjaw (feat. Kodak Black) Top stories Germany. (Aug 07, 2021) French Montana's new Kanye West-produced Lil' Wayne, Rick Ross collaboration The best part comes when Rick Ross raps, "I think I'm bout to lose it man/ I'm bout to go Gucci Mane," on the hook.