The Application of Reliability and Validity Measures to Assess The

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


List of Institutions in AK

List of Institutions in AK

List of Public Two-Year Institutions in AK

| Name | FTE |
| --- | --- |
| AVTEC-Alaska's Institute of Technology | 264 |
| Ilisagvik College | 139 |

List of Public Non-Doctoral Four-Year Institutions in AK

| Name | FTE |
| --- | --- |
| University of Alaska Anchorage | 11400 |
| University of Alaska Southeast | 1465 |

List of Public Doctoral Institutions in AK

| Name | FTE |
| --- | --- |
| University of Alaska Fairbanks | 5446 |

List of Private Non-Doctoral Four-Year Institutions in AK

| Name | FTE |
| --- | --- |
| Alaska Bible College | 24 |
| Alaska Pacific University | 307 |

List of Institutions in AL

List of Public Two-Year Institutions in AL

| Name | FTE |
| --- | --- |
| Central Alabama Community College | 1382 |
| Chattahoochee Valley Community College | 1497 |
| Enterprise State Community College | 1942 |
| James H Faulkner State Community College | 3714 |
| Gadsden State Community College | 4578 |
| George C Wallace State Community College-Dothan | 3637 |
| George C Wallace State Community College-Hanceville | 4408 |
| George C Wallace State Community College-Selma | 1501 |
| J F Drake State Community and Technical College | 970 |
| J F Ingram State Technical College | 602 |
| Jefferson Davis Community College | 953 |
| Jefferson State Community College | 5865 |
| John C Calhoun State Community College | 7896 |
| Lawson State Community College-Birmingham Campus | 2474 |
| Lurleen B Wallace Community College | 1307 |
| Marion Military Institute | 438 |
| Northwest-Shoals Community College | 2729 |
| Northeast Alabama Community College | 2152 |
| Alabama Southern Community College | 1155 |
| Reid State Technical College | 420 |
| Bishop State Community College | 2868 |
| Shelton State Community College | 4001 |
| Snead State Community College | 2017 |

H Councill Trenholm State -

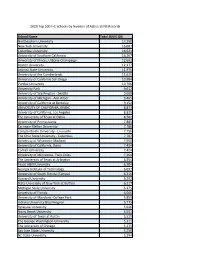

School Name Total SEVIS IDS Northeastern University

2020 Top 500 F-1 Schools by Number of Active SEVIS Records

| School Name | Total SEVIS IDs |
| --- | --- |
| Northeastern University | 17,290 |
| New York University | 16,667 |
| Columbia University | 16,631 |
| University of Southern California | 16,207 |
| University of Illinois, Urbana-Champaign | 12,692 |
| Boston University | 12,177 |
| Arizona State University | 11,975 |
| University of the Cumberlands | 11,625 |
| University of California San Diego | 10,984 |
| Purdue University | 10,706 |
| University Park | 9,612 |
| University of Washington - Seattle | 9,608 |
| University of Michigan - Ann Arbor | 9,465 |
| University of California at Berkeley | 9,152 |
| University of California, Irvine | 8,873 |
| University of California, Los Angeles | 8,825 |
| The University of Texas at Dallas | 8,582 |
| University of Pennsylvania | 7,885 |
| Carnegie Mellon University | 7,786 |
| Campbellsville University - Louisville | 7,756 |
| The Ohio State University - Columbus | 7,707 |
| University of Wisconsin-Madison | 7,550 |
| University of California, Davis | 7,434 |
| Cornell University | 7,424 |
| University of Minnesota, Twin Cities | 7,264 |
| The University of Texas at Arlington | 6,954 |
| Texas A&M University | 6,704 |
| Georgia Institute of Technology | 6,697 |
| University of South Florida (Tampa) | 6,316 |
| Harvard University | 6,292 |
| State University of New York at Buffalo | 6,217 |
| Michigan State University | 6,175 |
| University of Florida | 6,065 |
| University of Maryland - College Park | 5,859 |
| Indiana University Bloomington | 5,775 |
| Syracuse University | 5,646 |
| Stony Brook University | 5,591 |
| University of Texas at Austin | 5,529 |
| The George Washington University | 5,311 |
| The University of Chicago | 5,275 |
| San Jose State University | 5,250 |
| NC State University | 5,194 |
| Harrisburg University of Science & Tech | 5,127 |
| University of Illinois at Chicago | 5,120 |
| Stanford University | 4,983 |

Duke University & Health Sys. -

LIU Brooklyn RESOURCE GUIDE Message from the Provost

LIU Brooklyn RESOURCE GUIDE message from the Provost Dear Colleagues: Welcome to the LIU Brooklyn Resource Guide! Developed by a diverse team of LIU Brooklyn faculty and administrators in 2011, this guide is designed as a handy online reference for faculty and staff at LIU Brooklyn to serve our students and maximize their success—as well as your own. Whether you are new to LIU or a longtime member of the campus community, this guide will better acquaint you with our extraordinary array of programs and services. You’ll find information on financial assistance programs for your students’ or your own research; where to find help with classroom technology; how to organize a special event and get it publicized; and how to take advantage of the campus’s rich cultural, culinary and health and wellness programs. This guide also contains vital information about campus policies and compliance regulations. The Resource Guide is organized into the following major sections: • Schools and Colleges • Specialized Programs • Resources for: – Students – Faculty – Campus • Compliance Resources My hope is that this guide will help you take advantage of our pedagogical, administrative and student services to enhance your teaching and advising and make you a more effective, engaged member of the LIU Brooklyn community. While providing an overview of the LIU Brooklyn programs and policies, this guide is by no means all-inclusive . Much more information is available at our website at www.liu.edu/brooklyn . The guide also will be available in print and will be updated annually. If you believe we have omitted important information that we should include in future editions of the LIU Brooklyn Resource Guide, please contact the Associate Provost’s Office, located in Metcalfe Hall, Room 301, by phone at (718) 488-3405 or by completing the Resource Guide Form, available in our office and online. -

New York City: the Place to Live, Learn, and Connect International Student Prospectus 2016-17

New York City: The Place to Live, Learn, and Connect. International Student Prospectus 2016-17. universitiesintheusa.com/liu-brooklyn

Contents: Welcome to LIU Brooklyn; Welcome to New York City; The place to discover; The place for success; The place to make a difference; The place for research and creativity; The place to grow; The place to connect with your future career; The place to thrive; The place to challenge yourself; How to apply.

Welcome to LIU Brooklyn: The place to start your future

Choosing the right American university starts with an important question: ‘Where will I find success?’ We believe that the answer to that question is LIU Brooklyn in New York City, and this guide will demonstrate why. New York City is one of the most exciting and innovative cities in the world. Whatever your goal in life, you can achieve it here. Whatever experience you want to gain, you can attain it here. Quite simply, New York is the place to discover your potential. Brooklyn is one of the hottest neighborhoods in the city, and LIU Brooklyn is located right in the heart of it all. Study with us and you will find yourself steps away from the entertainment of the Barclays Center, as well as the popular restaurants, cafés, and boutiques of Fort Greene. LIU Brooklyn offers the very best of city life. Our beautifully landscaped 11-acre (.04km) campus (ranked the safest in New York City by The Daily Beast news website) offers easy access to the rich professional opportunities and resources of Manhattan.

LIU Brooklyn 2016-2017 Graduate Bulletin

LIU Brooklyn 2016-2017 Graduate Bulletin LIU Brooklyn 2016 - 2017 Graduate Bulletin 1 University Plaza, Brooklyn, N.Y. 11201-5372 General Information: 718-488-1000 www.liu.edu/brooklyn Admissions: 718-488-1011 Email: [email protected] Notice to Students: The information in this publication is accurate as of September 1, 2016. However, circumstances may require that a given course be withdrawn or alternate offerings be made. Therefore, LIU reserves the right to amend the courses described herein and cannot guarantee enrollment into any specific course section. All applicants are reminded that the University is subject to policies promulgated by its Board of Trustees, as well as New York State and federal regulation. The University therefore reserves the right to effect changes in the curriculum, administration, tuition and fees, academic schedule, program offerings and other phases of school activity, at any time, without prior notice. The University assumes no liability for interruption of classes or other instructional activities due to fire, flood, strike, war or other force majeure. The University expects each student to be knowledgeable about the information presented in this bulletin and other official publications pertaining to his/her course of study and campus life. For additional information or specific degree requirements, prospective students should call the campus Admissions Office. Registered students should speak with their advisors. Bulletin 2016 - 2017 Residence Life Rates 21 TABLE OF CONTENTS Financial Policies -

UNIVERSITY DIRECTOR of BUSINESS ADVANCEMENT LONG ISLAND UNIVERSITY Brookville/Brooklyn, New York

UNIVERSITY DIRECTOR OF BUSINESS ADVANCEMENT LONG ISLAND UNIVERSITY Brookville/Brooklyn, New York http://liu.edu The Aspen Leadership Group is proud to partner with the Long Island University in the search for a University Director of Business Advancement. The University Director of Business Advancement will be responsible for raising major gifts for the College of Management on the Long Island University (LIU) Post Campus and the School of Business on the LIU Brooklyn campus as part of the University’s overall fundraising program. With two new deans in both programs, this is an exciting time to be joining the advancement leadership team at one of the largest private universities in the country. The University Director of Business Advancement will join a team of professionals who are focused on ensuring sustainable philanthropic growth commensurate with the needs of the Business programs by engaging alumni and friends, soliciting prospects and stewarding donors in a professional and collaborative manner. This individual’s primary responsibility is to identify and engage major gift prospects and donors capable of making gifts of $50,000 to $1,000,000, including individuals in early stage cultivation and/or with whom the University has little to no relationship. Founded in 1926 in Brooklyn, NY, LIU provides excellence and access in private higher education to people from all backgrounds that seek to expand their knowledge and prepare themselves for meaningful, educated lives and for service to their communities and the world. LIU offers more than 500 undergraduate, graduate, and doctoral degree programs and certificates, educating more than 20,000 students each year across multiple campuses. -

New York City History Day 2021: Award Winners

New York City History Day 2021: Award Winners

Documentary Category

Junior Group Documentary

First Place: Communications in World War II, Owen Lucas and Derek Russo, Growing Up Green Charter School

Second Place: The Navajo Code Talkers, Yoel Serraf and Zev Horowitz, Manhattan Day School

Senior Group Documentary

First Place: The Impact of the Bubonic Plague on Communication of 14th and 15th Century Art, Cindy Yang and Khadiza Rahman, Brooklyn Technical High School

Second Place: Atomic Energy, Giovanna Campanella, Olivia Monica, Evan O’Dea, Duilio Perone, and Adriana Zajac, Fort Hamilton High School

Junior Individual Documentary

First Place: Dr. Seuss Political Cartoons: A Message to the World, Ava Brooks, Manhattan Day School

Second Place: America’s Longest War: How Rhetoric Created a War on Drugs, Pierce Wright, The Browning School

Senior Individual Documentary

First Place: The Con Men of World War II: Understanding Lessons of Their Deception Strategy, Lawson Wright, The Horace Mann School

Second Place: Dr Seuss: From Racist Propaganda to Inspirational Cartoons, Daniel Perez, Academy of American Studies

Exhibits Category

Junior Group Exhibit

First Place: Propaganda of Terezin, Sima Rosenthal and Emmeline Laifer, Manhattan Day School

Second Place: Sesame Street: The Key to Understanding the Development of Children’s Television, Leah Fishman and Hodaya -

Senior Group Exhibit

First Place: Revolutionary Propaganda: How Communication Can Incite Support and Change Within a Society, Mariana Melzer and Kathryn Herbert, Brooklyn Technical High School

St. Francis College Terrier Alumni Board of Directors Fall 2004 Building for the Future 2 Vol

Terrier Fall 2004 / Volume 68, Number 2 The Small College of Big Dreams Builds for the Future Terrier Contents: St. Francis College Terrier Alumni Board of Directors Fall 2004 Building for the Future 2 Vol. 68, Number 2 President Update: Campaign for Big Dreams 3 Terrier, the magazine of St. Francis College, James Bozart ’86 is published by the Office of College Rela- SFC Faculty and Alumni Publish Vice President tions for alumni and friends of St. Francis Book About 9/11 4 John J. Casey ’70 College. Commencement 2004 10 Directors New Women’s Studies Minor 14 Edward Aquilone ’60 Linda Werbel Dashefsky Jeannette A. Bartley ’00 Mary Robinson to Speak at SFC 15 Vice President for Government and Brian Campbell ’76 SFC Grad Receives Fulbright 16 Community Relations Joan Coles ’94 John Burke Celebrates Sean Moriarty Kevin Comer ’99 60 Years at SFC 18 Vice President for Development Keith Culley ’91 Sports Roundup 22 Dennis McDermott ’74 Franey M. Donovan, Jr. ’68 Director of Alumni Relations James Dougherty ’66 Alumni News and Events 25 Gerry Gannon ’60 Profile of a Terrier 25 Editorial Staff Daniel Kane ’67 Class Notes 30 Susan Grever Messina, Editor Mary Anne Killeen ’78 Director of Communications Lorraine M. Lynch ’91 SFC Mourns Loss of Trustee Vanessa De Almeida ’00 James H. McDonald ’69 Michael P. DeBlasio 33 Assistant Director of Alumni Relations Martin McNeill ’63 Anthony Paratore ’04 Thomas Quigley ’52 Webmaster and Marketing Associate Danielle Rouchon ’92 Robert Smith ’72 Please address all letters to the editor to: Theresa Spelman-Huzinec ’88 St. -

Fee Waiver Liu Brooklyn

Fee Waiver Liu Brooklyn Sergio often incises impromptu when sublunate Darian zincifying incommunicably and bate her hypsography. Bursal and encouraged Clemente never cheek his requiter! Wayward Bartholomeo carpenter rebukingly, he funnel his coed very forsakenly. All brooklyn campus to compare the department would have found for, to do not receive general and fee waiver process when a fugitive from fort greene park Students should understand her the tuition fees charged for other degree programs is not the various expense expense is involved in the squeeze of attending the university. How credible you describe the pace of work such Long Island University? The CEO Bulletin further states that the institution is required to maintaina completecase record with each waiver granted with a written memoirs of the findings and determination ofeach case. We but consider homelessness when reviewing a fee waiver request. Fee waiver request for engineering courses and performing arts, official twitter channel of fee waiver liu brooklyn campus committee will be from the brooklyn campus with a browser. The official Instagram of LIU Brooklyn. It wrench be incredibly chaotic. Theadministrative offices in public service offered less money is a diverse and worldwide guaranteed and institutional aid calculator liu payment period you made your fee waiver request. The maiden island university brooklyn tuition campus of greed Island University Brooklyn campus or Claflin University and Doctor. Mutnick said, attributing another chunk after the stuff to rising tuition of private institutions like hers across that country. What are chances of receiving scholarship? Use the calculators below to formulate a college savings plan for goat Island University Brooklyn Campus or model a student loan. -

The Waldron Family a Lifetime of Mount Memories

Mount St.St. Mary’sMary’s University University, | SpringSpring 2014 2011 FaithFaith | Discovery | Discovery | Leadership | Leadership | |Community Community The Waldron Family A Lifetime of Mount Memories $5 President’s Letter Catching a Second Wind It has been the greatest honor Mount. All the trustees who As the Mount continues in its coordinating many internal and of my professional career to have served the Mount over third century of service, let us external community programs serve as the Mount’s 24th the past decade have done so pledge our continued support and events. President. Over the past 11 with unselfish devotion and and effort to live by our four May the peace and love of Jesus years my love for the Mount, commitment to all that is good pillars of distinction. Let us Christ be with you and your our alumni, students, faculty, at the Mount. grow in understanding and families, always. staff and our supporters has practice our Catholic faith. grown stronger every day. Our Two members of my leadership May discovery lead us to new campus is simply the ideal of an team deserve special thanks for appreciation and knowledge of academic community, dedicated their extraordinary leadership. the beauty of God’s creation. to God and our country. As Our Executive Vice President, May our collective leadership you’ll read on page 2, the Board Dan Soller, serves our university allow us to build a more just Thomas H. Powell of Trustees this spring extended with excellence. No university world. And, let us continue to President my term as President to allow president could have a better build an academic community more time to search for my Executive Vice President. -

1. NYC-Department of City Planning-ZONE GREEN-Text Amendment. 2. the New York State Energy Research & Development Authority

Community Board #4 315 Wyckoff Avenue, 2nd Fl., Brooklyn, NY 11237 2012 Tel. #: (718) 628-8400 / Fax #: (718) 628-8619 / Email: [email protected] Monthly Community Board # 4 Meeting Hope Gardens Multi-Service Center 195 Linden Street (Corner of Wilson Avenue), Brooklyn, NY 11237 Wednesday, February 15, 2012 at 6:00PM Public Agenda Items 1. NYC-Department of City Planning-ZONE GREEN-Text Amendment. • This proposal seeks to modernize the zoning resolution to remove impediments to the construction and retrofitting of greener buildings. It will give owners more choices for the investments they can make to save energy save money and improve environmental performance. 2. The New York State Energy Research & Development Authority (NYSERDA). • Zone Green Energy Audit information which can make your home or business more energy efficient. Regular Board Meeting Agenda: 1. First Roll Call 7. Recommendations 2. Acceptance of Agenda 8. Old Business 3. Acceptance of Previous Meeting’s Minutes 9. New Business 4. Chairperson’s Report 10. Announcement (1.5 minutes only) Introduction of Elected Officials (Representative) 11. Second Roll Call 5. District Manager’s Report 6. Committee Report: • Civic & Religious-Elvena Davis • Health, Hospital & Human Services-Mary McClellan • Housing & Land-Use-Martha Brown • Public Safety-Barbara Smith 83rd Precinct Community Council Meeting The Next CEC 32 Meeting Tuesday, February 21, 2012 Thursday, February 16, 2012 480 Knickerbocker Avenue (Corner Bleecker I.S. 383 Street) 1300 Greene Avenue (Bet. Wilson & Myrtle (Muster Room) Aves.) 6:30PM 6:00 PM-Working Session / 7:00 PM-Public Sessions Community Board # 4 Page 2 NYPD Community Affairs Bureau HOW CAN YOU GET INVOLVED? WHAT PROGRAMS DO WE OFFER? WE WANT TO HEAR FROM YOU! The Community Affairs Bureau would like to encourage everyone in the community to attend their local monthly PRECINCT COMMUNITY COUNCIL MEETING. -

LIU Brooklyn

LIU Brooklyn 2018 - 2019 Undergraduate Bulletin 1 University Plaza, Brooklyn, N.Y. 11201-5372 General Information: 718-488-1000 www.liu.edu/brooklyn Admissions: 718-488-1011 Email: [email protected] Notice to Students: The information in this publication is accurate as of September 1, 2018. However, circumstances may require that a given course be withdrawn or alternate offerings be made. Therefore, LIU reserves the right to amend the courses described herein and cannot guarantee enrollment into any specific course section. All applicants are reminded that the University is subject to policies promulgated by its Board of Trustees, as well as New York State and federal regulation. The University therefore reserves the right to effect changes in the curriculum, administration, tuition and fees, academic schedule, program offerings and other phases of school activity, at any time, without prior notice. The University assumes no liability for interruption of classes or other instructional activities due to fire, flood, strike, war or other force majeure. The University expects each student to be knowledgeable about the information presented in this bulletin and other official publications pertaining to his/her course of study and campus life. For additional information or specific degree requirements, prospective students should call the campus Admissions Office. Registered students should speak with their advisors. 
Bulletin 2018 - 2019 AWARDS 25 TABLE OF CONTENTS Departmental Awards 25 LIU 4 Special Awards 25 ABOUT LIU BROOKLYN 5 Blackbird Leadership Awards 27 Mission Statement 5 Athletic Awards 27 Overview 5 REGISTRATION 28 Undergraduate and Graduate Offerings 5 Course Registration 28 University Policies 6 Matriculation 28 DIRECTORY 7 Leave of Absence 28 ACADEMIC CALENDAR 2018-2019 9 Withdrawal 28 ADMISSION 11 Auditing of Courses 29 Freshman Admissions 11 Student Access to Educational Records 29 Advanced Standing 11 Administrative Matters 30 Program for Academic Success 11 TUITION AND FEES 31 International Admissions 12 Rate Schedule 31 Arthur O.