CAP Twelve Years Later: How the “Rules” Have Changed

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Smart Methods for Retrieving Physiological Data in Physiqual

University of Groningen, Bachelor thesis. Smart methods for retrieving physiological data in Physiqual. Marc Babtist & Sebastian Wehkamp. Supervisors: Frank Blaauw & Marco Aiello. July 2016. Contents: 1 Introduction: 1.1 Physiqual; 1.2 Problem description; 1.3 Research questions; 1.4 Document structure. 2 Related work: 2.1 IJkdijk project; 2.2 MUMPS. 3 Background: 3.1 Scalability; 3.2 CAP theorem; 3.3 Reliability models. 4 Requirements: 4.1 Scalability; 4.2 Reliability; 4.3 Performance; 4.4 Open source. 5 General database options: 5.1 Relational or Non-relational; 5.2 NoSQL categories (5.2.1 Key-value Stores, 5.2.2 Document Stores, 5.2.3 Column Family Stores, 5.2.4 Graph Stores); 5.3 When to use which category; 5.4 Conclusion (5.4.1 Key-Value, 5.4.2 Document stores, 5.4.3 Column family stores, 5.4.4 Graph Stores). 6 Specific database options: 6.1 Key-value stores: Riak; 6.2 Document stores: MongoDB; 6.3 Column Family stores: Cassandra; 6.4 Performance comparison (6.4.1 Sensor Data Storage Performance, 6.4.2 Conclusion). 7 Data model: 7.1 Modeling rules; 7.2 Data model Design; 7.3 Summary. 8 Architecture: 8.1 Basic layout; 8.2 Sidekiq. 9 Design decisions: 9.1 Keyspaces; 9.2 Gap determination. -

CAP Theorem

CAP Theorem. Thanks to Arvind K., Dong W., and Mihir N. for slides. CAP Theorem • “It is impossible for a web service to provide these three guarantees at the same time (pick 2 of 3): • (Sequential) Consistency • Availability • Partition-tolerance” • Conjectured by Eric Brewer in ’00 • Proved by Gilbert and Lynch in ’02 • But with definitions that do not match what you’d assume (or Brewer meant) • Influenced the NoSQL mania • Highly controversial: “the CAP theorem encourages engineers to make awful decisions.” – Stonebraker • Many misinterpretations. CAP Theorem • Consistency: – Sequential consistency (a data item behaves as if there is one copy) • Availability: – Node failures do not prevent survivors from continuing to operate • Partition-tolerance: – The system continues to operate despite network partitions • CAP says that “A distributed system can satisfy any two of these guarantees at the same time but not all three”. C in CAP != C in ACID • They are different! • CAP’s C(onsistency) = sequential consistency – Similar to ACID’s A(tomicity) = Visibility to all future operations • ACID’s C(onsistency) = Does the data satisfy schema constraints. Sequential consistency • Makes it appear as if there is one copy of the object • Strict ordering on ops from same client • A single linear ordering across client ops – If client a executes operations {a1, a2, a3, ...}, client b executes operations {b1, b2, b3, ...} – Then, globally, clients observe some serialized version of the sequence • e.g., {a1, b1, b2, a2, ...} (or whatever) Notice -

NoSQL Databases: Yearning for Disambiguation

NOSQL DATABASES: YEARNING FOR DISAMBIGUATION. Chaimae Asaad (Alqualsadi, Rabat IT Center, ENSIAS, University Mohammed V in Rabat, and TicLab, International University of Rabat, Morocco), Karim Baïna (Alqualsadi, Rabat IT Center, ENSIAS, University Mohammed V in Rabat, Morocco), Mounir Ghogho (TicLab, International University of Rabat, Morocco). March 17, 2020. arXiv:2003.04074v2 [cs.DB] 16 Mar 2020. ABSTRACT The demanding requirements of the new Big Data intensive era raised the need for flexible storage systems capable of handling huge volumes of unstructured data and of tackling the challenges that traditional databases were facing. NoSQL Databases, in their heterogeneity, are a powerful and diverse set of databases tailored to specific industrial and business needs. However, the lack of theoretical background creates a lack of consensus even among experts about many NoSQL concepts, leading to ambiguity and confusion. In this paper, we present a survey of NoSQL databases and their classification by data model type. We also conduct a benchmark in order to compare different NoSQL databases and distinguish their characteristics. Additionally, we present the major areas of ambiguity and confusion around NoSQL databases and their related concepts, and attempt to disambiguate them. Keywords NoSQL Databases · NoSQL data models · NoSQL characteristics · NoSQL Classification. 1 Introduction The proliferation of data sources ranging from social media and Internet of Things (IoT) to industrially generated data (e.g. transactions) has led to a growing demand for data intensive cloud based applications and has created new challenges for big-data-era databases. -

IBM Cloudant: Database as a Service Fundamentals

IBM Cloudant: Database as a Service Fundamentals. Understand the basics of NoSQL document data stores. Access and work with the Cloudant API. Work programmatically with Cloudant data. Christopher Bienko, Marina Greenstein, Stephen E Holt, Richard T Phillips. ibm.com/redbooks. Redpaper. Contents: Notices; Trademarks; Preface (Authors; Now you can become a published author, too!; Comments welcome; Stay connected to IBM Redbooks). Chapter 1. Survey of the database landscape: 1.1 The fundamentals and evolution of relational databases (1.1.1 The relational model, 1.1.2 The CAP Theorem); 1.2 The NoSQL paradigm (1.2.1 ACID versus BASE systems, 1.2.2 What is NoSQL?, 1.2.3 NoSQL database landscape, 1.2.4 JSON and schema-less model). Chapter 2. Build more, grow more, and sleep more with IBM Cloudant: 2.1 Business value; 2.2 Solution overview; 2.3 Solution architecture; 2.4 Usage scenarios; 2.5 Intuitively interact with data using Cloudant Dashboard (2.5.1 Editing JSON documents using Cloudant Dashboard, 2.5.2 Configuring access permissions and replication jobs within Cloudant Dashboard); 2.6 A continuum of services working together on cloud (2.6.1 Provisioning an analytics warehouse with IBM dashDB, 2.6.2 Data refinement services on-premises, 2.6.3 Data refinement services on the cloud, 2.6.4 Hybrid clouds: Data refinement across on/off–premises). Chapter 3. -

A Big Data Roadmap for the DB2 Professional

A Big Data Roadmap For the DB2 Professional. Sponsored by: http://www.datavail.com. Craig S. Mullins, Mullins Consulting, Inc., http://www.MullinsConsulting.com. © 2014 Mullins Consulting, Inc. Author: This presentation was prepared by Craig S. Mullins, President & Principal Consultant, Mullins Consulting, Inc., 15 Coventry Ct, Sugar Land, TX 77479. Tel: 281-494-6153, Fax: 281.491.0637, Skype: cs.mullins, http://www.mullinsconsultinginc.com. This document is protected under the copyright laws of the United States and other countries as an unpublished work. This document contains information that is proprietary and confidential to Mullins Consulting, Inc., which shall not be disclosed outside or duplicated, used, or disclosed in whole or in part for any purpose other than as approved by Mullins Consulting, Inc. Any use or disclosure in whole or in part of this information without the express written permission of Mullins Consulting, Inc. is prohibited. © 2014 Craig S. Mullins and Mullins Consulting, Inc. (Unpublished). All rights reserved. Agenda: Uncover the roadmap to Big Data… the terminology and technology used, use cases, and trends. • Gain a working knowledge and definition of Big Data (beyond the simple three V's definition) • Break down and understand the often confusing terminology within the realm of Big Data (e.g. polyglot persistence) • Examine the four predominant NoSQL database systems used in Big Data implementations (graph, key/value, column, and document) • Learn some of the major differences between Big Data/NoSQL implementations vis-a-vis traditional transaction processing • Discover the primary use cases for Big Data and NoSQL versus relational databases -

Introduction to NoSQL

NoSQL: An Introduction. Amonra IT, 2019. What is NoSQL? NoSQL is a class of non-relational database management systems that differ from traditional relational database management systems in some significant ways. It is designed for distributed data stores with very large-scale storage needs (for example Google or Facebook, which collect terabits of data every day for their users). This type of data storage may not require a fixed schema, avoids join operations, and typically scales horizontally. NoSQL ● Stands for Not Only SQL ● No declarative query language ● No predefined schema ● Key-value pair storage, column store, document store, graph databases ● Eventual consistency rather than ACID properties ● Unstructured and unpredictable data ● CAP theorem ● Prioritizes high performance, high availability and scalability. NoSQL really means a focus on non-relational, next-generation operational datastores and databases. Why NoSQL? Today, data is becoming easier to access and capture through third parties such as Facebook, Google+ and others. Personal user information, social graphs, geolocation data, user-generated content and machine logging data are just a few examples where the data has been increasing exponentially. Providing these services properly requires processing huge amounts of data, which SQL databases were never designed for. NoSQL databases evolved to handle such volumes of data properly. Web Applications Driving Data Growth. Where to use NoSQL ● Social data ● Search ● Data processing ● Caching ● Data Warehousing ● Logging -

Tradeoffs in Distributed Databases

Tradeoffs in Distributed Databases. University of Oulu, Department of Information Processing Science. BSc Thesis. Risto Juntunen. 17.02.2015. Abstract: In a distributed database, data is spread throughout the network into separate nodes with different DBMS systems (Date, 2000). According to the CAP theorem, three database properties (consistency, availability and partition tolerance) cannot be achieved simultaneously in distributed database systems. Two of these properties can be achieved, but not all three at the same time (Brewer, 2000). Since the theorem was stated there has been some development in network infrastructure. New methods to achieve consistency in distributed databases have also emerged. This paper discusses trade-offs in distributed databases. Keywords: Distributed database, CAP theorem. Supervisor: Title, position First name Last name. Contents: Abstract; Contents; 1. Introduction; 2. Distributed Databases; 2.1 Principles of distributed databases -

NewSQL Principles, Systems and Current Trends

NewSQL Principles, Systems and Current Trends. Patrick Valduriez, Ricardo Jimenez-Peris. BigData 2019 © P. Valduriez and R. Jimenez-Peris, 2019. Outline • Motivations • Principles and techniques • Taxonomy of NewSQL systems • Current trends. Motivations. Why NoSQL/NewSQL? • Trends • Big data • Unstructured data • Data interconnection • Hyperlinks, tags, blogs, etc. • Very high scalability • Data size, data rates, concurrent users, etc. • Limits of SQL systems (in fact RDBMSs) • Need for skilled DBA, tuning and well-defined schemas • Full SQL complex • Hard to make updates scalable • Parallel RDBMS use a shared-disk for OLTP, which is hard to scale. Scalability • Ideal: linear scale-up • Sustained performance for a linear increase of database size and load • By proportional increase of components [Figure: plot of Perf. against Components & charge, with the ideal line marked]. Vertical vs Horizontal Scaleup • Typically in a shared-nothing computer cluster [Figure: switches and processors P1, P2, ..., Pn, contrasting scale-up and scale-out]. Query Parallelism • Inter-query • Different queries on the same data • For concurrent queries • Inter-operation • Different operations of the same query on different data • For complex queries • Intra-operation • The same operation on different data • For large queries [Figures: queries Q1 … Qn on relation R; operations Op1, Op2, Op3 on relations R and S; one operation Op applied to partitions R1 … Rn]. The CAP Theorem • Polemical topic • "A database can't provide consistency AND availability during a network partition" • Argument used by NoSQL to justify their lack of ACID properties • Nothing to do with scalability • Two different points of view • Relational databases • Consistency is essential • ACID transactions • Distributed systems • Service availability is essential • Inconsistency tolerated by the user, e.g. -

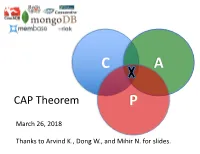

CAP Theorem

CAP Theorem. March 26, 2018. Thanks to Arvind K., Dong W., and Mihir N. for slides. CAP Theorem • “It is impossible for a web service to provide these three guarantees at the same time (pick 2 of 3): • (Sequential) Consistency • Availability • Partition-tolerance” • Conjectured by Eric Brewer in ’00 • Proved by Gilbert and Lynch in ’02 • But with definitions that do not match what you’d assume (or Brewer meant) • Influenced the NoSQL mania • Highly controversial: “the CAP theorem encourages engineers to make awful decisions.” – Stonebraker • Many misinterpretations. CAP Theorem • Consistency: – Sequential consistency (a data item behaves as if there is one copy) • Availability: – Node failures do not prevent survivors from continuing to operate • Partition-tolerance: – The system continues to operate despite network partitions • CAP says that “A distributed system can satisfy any two of these guarantees at the same time but not all three”. C in CAP != C in ACID • They are different! • CAP’s C(onsistency) = sequential consistency – Similar to ACID’s A(tomicity) = Visibility to all future operations • ACID’s C(onsistency) = Does the data satisfy schema constraints. Sequential consistency • Makes it appear as if there is one copy of the object • Strict ordering on ops from same client • A single linear ordering across client ops – If client a executes operations {a1, a2, a3, ...}, client b executes operations {b1, b2, b3, ...} – Then, globally, clients observe some serialized version of the sequence • e.g., {a1, b1, b2, a2, ...} (or whatever) -

Inadequacies of CAP Theorem

International Journal of Computer Applications (0975 – 8887), Volume 151 – No.10, October 2016. Inadequacies of CAP Theorem. Omkar Patinge, Vineet Karkhanis, Amey Barapatre (VESIT, University of Mumbai). ABSTRACT In today's technical world, we are witnessing a strong and increasing desire to scale systems to successfully complete workloads in a reasonable time frame. As a result of this scaling, an additional penalty of complexity is incurred in the system. In this paper, we have explained the tradeoffs that have to be taken into consideration while designing databases using CAP theorem and the consequences of this tradeoff. Problems of the CAP theorem itself and its limitations are discussed. CAP theorem which was able to meet the demands, back when it was proposed, can't catch up to the current requirements. The problem lies in the open-ended definitions of CAP which are subject to interpretations. PACELC is an alternative to CAP and is able to overcome some of its current problems. PACELC builds on the CAP theorem and it goes one step ahead of CAP by stating that a trade-off also exists, this time between latency and consistency, provides a more complete portrayal of the potential consistency tradeoffs for distributed systems. 1.2 Availability Availability ensures that every request is answered, even in the case of failures. This must be true for both read and write operations. This property is often zeroed down to bounded responses in reasonable time. Availability gives the notion of 100% uptime; there are limitations to its availability. If there is only a single piece of data on four nodes and if all four nodes die, that data is gone and any request which required it in order to be processed cannot be handled. 1.3 Partition Tolerance -

(2020) Analysis of Trade-Offs in Fault-Tolerant Distributed Computing and Replicated Databases

Citation: Gorbenko, A and Karpenko, A and Tarasyuk, O (2020) Analysis of Trade-offs in Fault-Tolerant Distributed Computing and Replicated Databases. In: 2020 IEEE 11th International Conference on Dependable Systems, Services and Technologies (DESSERT). IEEE. ISBN 978-1-7281-9958-0, 978-1-7281-9957-3 DOI: https://doi.org/10.1109/dessert50317.2020.9125078 Link to Leeds Beckett Repository record: http://eprints.leedsbeckett.ac.uk/id/eprint/7186/ Document Version: Book Section © 2020 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works. The aim of the Leeds Beckett Repository is to provide open access to our research, as required by funder policies and permitted by publishers and copyright law. The Leeds Beckett repository holds a wide range of publications, each of which has been checked for copyright and the relevant embargo period has been applied by the Research Services team. We operate on a standard take-down policy. If you are the author or publisher of an output and you would like it removed from the repository, please contact us and we will investigate on a case-by-case basis. Each thesis in the repository has been cleared where necessary by the author for third party copyright. -

SQL vs. NoSQL vs. NewSQL

WHITE PAPER SQL vs. NoSQL vs. NewSQL “SQL” is used both as the name of a language and as a type of database. SQL the language is a structured query language designed for managing data in relational database management systems (RDBMS). Relational database management systems are often called SQL databases since they use the SQL language. Since the mid-1980s, SQL has been a standard for querying and managing RDBMS data sets. SQL Databases: Relational databases continue to provide the foundation for the world’s transactions. Think about all the credit card transactions being handled by mainframes and large UNIX servers in the data centers of financial services companies. SQL features • Rely on relational tables • Utilize defined data schema • Reduce redundancy through normalization • Support JOIN functionality • Engineered for data integrity • Traditionally scale up, not out • Rely on a simple, standardized query language • Near universal in adoption. NoSQL Databases: While a SQL database is a defined, concrete concept, NoSQL is not. There is enormous variation in technologies that fall under the NoSQL category (though they generally share some characteristics). Thus, NoSQL is a term used for a broad group of data management technologies which vary in features and functionality but which try to solve some key issues of SQL databases. NoSQL features • High performance writes and massive scalability • Do not require a defined schema for writing data • Primarily eventually-consistent by default • Support wide range of modern programming languages and tools. NoSQL Basics: NoSQL solutions emerged as a reaction to frustration with the cost and inflexibility of legacy RDBMS products like Oracle and IBM DB2, which use SQL as a query language.