CAP Theorem and Distributed Database Consistency

Recommended publications

SD-SQL Server: Scalable Distributed Database System

The International Arab Journal of Information Technology, Vol. 4, No. 2, April 2007. SD-SQL Server: Scalable Distributed Database System. Soror Sahri, CERIA, Université Paris Dauphine, France.

Abstract: We present SD-SQL Server, a prototype scalable distributed database system. It lets a relational table grow over new storage nodes invisibly to the application. The evolution uses splits that dynamically generate a distributed range partitioning of the table. The splits avoid the reorganization of a growing database, which is necessary for current DBMSs and a headache for administrators. We illustrate the architecture of our system, its capabilities and performance. The experiments with the well-known SkyServer database show that the overhead of the scalable distributed table management is typically minimal. To the best of our knowledge, SD-SQL Server is the only DBMS with the discussed capabilities at present.

Keywords: Scalable distributed DBS, scalable table, distributed partitioned view, SDDS, performance.

Received June 4, 2005; accepted June 27, 2006.

1. Introduction. The explosive growth of the volume of data to store in databases makes many of them huge and permanently growing. Large tables have to be hashed or partitioned over several storage sites. Current DBMSs, e.g., SQL Server, Oracle or DB2 to name only a few, provide static partitioning only. […] or k-d based with respect to the partitioning key(s). The application sees a scalable table through a specific type of updateable distributed view termed (client) scalable view. Such a view hides the partitioning and dynamically adjusts itself to the partitioning evolution. The adjustment is lazy, in the sense it occurs only when a query to the scalable table comes in and the …

Smart Methods for Retrieving Physiological Data in Physiqual

University of Groningen, Bachelor thesis. Smart methods for retrieving physiological data in Physiqual. Marc Babtist & Sebastian Wehkamp. Supervisors: Frank Blaauw & Marco Aiello. July, 2016.

Contents

- 1 Introduction
  - 1.1 Physiqual
  - 1.2 Problem description
  - 1.3 Research questions
  - 1.4 Document structure
- 2 Related work
  - 2.1 IJkdijk project
  - 2.2 MUMPS
- 3 Background
  - 3.1 Scalability
  - 3.2 CAP theorem
  - 3.3 Reliability models
- 4 Requirements
  - 4.1 Scalability
  - 4.2 Reliability
  - 4.3 Performance
  - 4.4 Open source
- 5 General database options
  - 5.1 Relational or Non-relational
  - 5.2 NoSQL categories
    - 5.2.1 Key-value Stores
    - 5.2.2 Document Stores
    - 5.2.3 Column Family Stores
    - 5.2.4 Graph Stores
  - 5.3 When to use which category
  - 5.4 Conclusion
    - 5.4.1 Key-Value
    - 5.4.2 Document stores
    - 5.4.3 Column family stores
    - 5.4.4 Graph Stores
- 6 Specific database options
  - 6.1 Key-value stores: Riak
  - 6.2 Document stores: MongoDB
  - 6.3 Column Family stores: Cassandra
  - 6.4 Performance comparison
    - 6.4.1 Sensor Data Storage Performance
    - 6.4.2 Conclusion
- 7 Data model
  - 7.1 Modeling rules
  - 7.2 Data model Design
  - 7.3 Summary
- 8 Architecture
  - 8.1 Basic layout
  - 8.2 Sidekiq
- 9 Design decisions
  - 9.1 Keyspaces
  - 9.2 Gap determination

Socrates: the New SQL Server in the Cloud

Socrates: The New SQL Server in the Cloud. Panagiotis Antonopoulos, Alex Budovski, Cristian Diaconu, Alejandro Hernandez Saenz, Jack Hu, Hanuma Kodavalla, Donald Kossmann, Sandeep Lingam, Umar Farooq Minhas, Naveen Prakash, Vijendra Purohit, Hugh Qu, Chaitanya Sreenivas Ravella, Krystyna Reisteter, Sheetal Shrotri, Dixin Tang, Vikram Wakade. Microsoft Azure & Microsoft Research.

ABSTRACT. The database-as-a-service paradigm in the cloud (DBaaS) is becoming increasingly popular. Organizations adopt this paradigm because they expect higher security, higher availability, and lower and more flexible cost with high performance. It has become clear, however, that these expectations cannot be met in the cloud with the traditional, monolithic database architecture. This paper presents a novel DBaaS architecture, called Socrates. Socrates has been implemented in Microsoft SQL Server and is available in Azure as SQL DB Hyperscale. This paper describes the key ideas and features of Socrates, and it compares the performance of Socrates …

1 INTRODUCTION. The cloud is here to stay. Most start-ups are cloud-native. Furthermore, many large enterprises are moving their data and workloads into the cloud. The main reasons to move into the cloud are security, time-to-market, and a more flexible “pay-as-you-go” cost model which avoids overpaying for under-utilized machines. While all these reasons are compelling, the expectation is that a database runs in the cloud at least as well as (if not better) than on premise. Specifically, customers expect a “database-as-a-service” to be highly available (e.g., 99.999% availability), support large databases (e.g., a 100TB OLTP database), and be highly performant.

An Overview of Distributed Databases

International Journal of Information and Computation Technology. ISSN 0974-2239, Volume 4, Number 2 (2014), pp. 207-214. © International Research Publications House, http://www.irphouse.com/ijict.htm. An Overview of Distributed Databases. Parul Tomar (Department of Computer Engineering, YMCA University of Science & Technology, Faridabad, INDIA) and Megha (Student, Department of Computer Science, YMCA University of Science and Technology, Faridabad, INDIA).

Abstract: A Database is a collection of data describing the activities of one or more related organizations with a specific well defined structure and purpose. A Database is controlled by a Database Management System (DBMS) by maintaining and utilizing large collections of data. A Distributed System is one in which hardware and software components at networked computers communicate and coordinate their activity only by passing messages. In short, a Distributed database is a collection of databases that can be stored at different computer network sites. This paper presents an overview of Distributed Database Systems along with their advantages and disadvantages. This paper also covers aspects like replication and fragmentation, and various problems that can be faced in distributed database systems.

Keywords: Database, Deadlock, Distributed Database Management System, Fragmentation, Replication.

1. Introduction. A Database is a systematically organized or structured repository of indexed information that allows easy retrieval, updating, analysis, and output of data. Each database may involve different database management systems and different architectures that distribute the execution of transactions [1]. A distributed database is a database in which storage devices are not all attached to a common processing unit such as the CPU. It may be stored in multiple computers located in the same physical location, or may be dispersed over a network of interconnected computers.

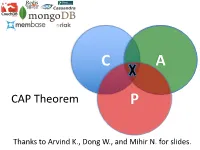

CAP Theorem

CAP Theorem. Thanks to Arvind K., Dong W., and Mihir N. for slides.

CAP Theorem

- “It is impossible for a web service to provide these three guarantees at the same time (pick 2 of 3):
  - (Sequential) Consistency
  - Availability
  - Partition-tolerance”
- Conjectured by Eric Brewer in ’00
- Proved by Gilbert and Lynch in ’02
  - But with definitions that do not match what you’d assume (or Brewer meant)
- Influenced the NoSQL mania
- Highly controversial: “the CAP theorem encourages engineers to make awful decisions.” (Stonebraker)
- Many misinterpretations

CAP Theorem

- Consistency:
  - Sequential consistency (a data item behaves as if there is one copy)
- Availability:
  - Node failures do not prevent survivors from continuing to operate
- Partition-tolerance:
  - The system continues to operate despite network partitions
- CAP says that “A distributed system can satisfy any two of these guarantees at the same time but not all three”

C in CAP != C in ACID

- They are different!
- CAP’s C(onsistency) = sequential consistency
  - Similar to ACID’s A(tomicity) = Visibility to all future operations
- ACID’s C(onsistency) = Does the data satisfy schema constraints

Sequential consistency

- Makes it appear as if there is one copy of the object
- Strict ordering on ops from same client
- A single linear ordering across client ops
  - If client a executes operations {a1, a2, a3, ...}, client b executes operations {b1, b2, b3, ...}
  - Then, globally, clients observe some serialized version of the sequence
    - e.g., {a1, b1, b2, a2, ...} (or whatever)

Notice …

Blockchain Database for a Cyber Security Learning System

Session ETD 475. Blockchain Database for a Cyber Security Learning System. Sophia Armstrong, Department of Computer Science, College of Engineering and Technology, East Carolina University; Te-Shun Chou, Department of Technology Systems, College of Engineering and Technology, East Carolina University; John Jones, College of Engineering and Technology, East Carolina University.

Abstract: Our cyber security learning system involves an interactive environment for students to practice executing different attack and defense techniques relating to cyber security concepts. We intend to use a blockchain database to secure data from this learning system. The data being secured are students’ scores accumulated by successful attacks or defenses against the other students’ implementations. As more professionals are departing from traditional relational databases, the enthusiasm around distributed ledger databases is growing, specifically blockchain. With many available platforms applying blockchain structures, it is important to understand how this emerging technology is being used, with the goal of utilizing this technology for our learning system. In order to successfully secure the data and ensure it is tamper resistant, an investigation of blockchain technology use cases must be conducted. In addition, this paper defines the primary characteristics of the emerging distributed ledgers or blockchain technology, to ensure we effectively harness this technology to secure our data. Moreover, we explore using a blockchain database for our data.

1. Introduction. New buzz words are constantly surfacing in the ever-evolving field of computer science, so it is critical to distinguish between temporary fads and new evolutionary technology. Blockchain is one of the newest and most developmental technologies currently drawing interest.

Guide to Design, Implementation and Management of Distributed Databases

NIST Special Publication 500-185. Guide to Design, Implementation and Management of Distributed Databases. Elizabeth N. Fong, Charles L. Sheppard, Kathryn A. Harvill. Computer Systems Laboratory, National Institute of Standards and Technology, Gaithersburg, MD 20899. February 1991. U.S. DEPARTMENT OF COMMERCE, Robert A. Mosbacher, Secretary. NATIONAL INSTITUTE OF STANDARDS AND TECHNOLOGY, John W. Lyons, Director.

Reports on Computer Systems Technology. The National Institute of Standards and Technology (NIST) has a unique responsibility for computer systems technology within the Federal government. NIST's Computer Systems Laboratory (CSL) develops standards and guidelines, provides technical assistance, and conducts research for computers and related telecommunications systems to achieve more effective utilization of Federal information technology resources. CSL's responsibilities include development of technical, management, physical, and administrative standards and guidelines for the cost-effective security and privacy of sensitive unclassified information processed in Federal computers. CSL assists agencies in developing security plans and in improving computer security awareness training. This Special Publication 500 series reports CSL research and guidelines to Federal agencies as well as to organizations in industry, government, and academia.

National Institute of Standards and Technology Special Publication 500-185, Natl. Inst. Stand. Technol. …

Concurrency Control Basics

Klemens Böhm, Distributed Data Management: Concurrency Control Basics

Chapter 2: Concurrency Control Basics

Outline

- Introduction/problems,
- definitions (transaction, history, conflict, equivalence, serializability, ...),
- locking.

Atomicity, Isolation

- Transactional guarantees, in particular atomicity and isolation.
- Atomicity
  - Example, "bank scenario": a table with columns Number, Person, Balance, holding the rows (Klemens, 5000) and (Gunter, 200).
  - Money transfer: two elementary operations.
    - debit(Klemens, 500),
    - credit(Gunter, 500).
- Isolation can be explained with this example, too.

Synchronisation, Distributed (1)

- Essential feature of databases: many users can access the same data concurrently, be it read, be it write.
- Consistency must be guaranteed; this is the task of the synchronization component.
- Multi-user mode shall be hidden from users as far as possible: concurrent processing of requests shall be transparent, giving the "illusion" of being the only user.
- Transactions.

Synchronisation, Distributed (2)

- Serial execution of application programs
  - achieves that illusion without any synchronization effort, …

Synchronization in General

- Uncontrolled non-serial execution leads to other problems, notably inconsistency: …

Nosql Databases: Yearning for Disambiguation

NoSQL Databases: Yearning for Disambiguation. Chaimae Asaad (Alqualsadi, Rabat IT Center, ENSIAS, University Mohammed V in Rabat, and TicLab, International University of Rabat, Morocco); Karim Baïna (Alqualsadi, Rabat IT Center, ENSIAS, University Mohammed V in Rabat, Morocco); Mounir Ghogho (TicLab, International University of Rabat, Morocco). A preprint, March 17, 2020. arXiv:2003.04074v2 [cs.DB] 16 Mar 2020.

ABSTRACT. The demanding requirements of the new Big Data intensive era raised the need for flexible storage systems capable of handling huge volumes of unstructured data and of tackling the challenges that traditional databases were facing. NoSQL Databases, in their heterogeneity, are a powerful and diverse set of databases tailored to specific industrial and business needs. However, the lack of theoretical background creates a lack of consensus even among experts about many NoSQL concepts, leading to ambiguity and confusion. In this paper, we present a survey of NoSQL databases and their classification by data model type. We also conduct a benchmark in order to compare different NoSQL databases and distinguish their characteristics. Additionally, we present the major areas of ambiguity and confusion around NoSQL databases and their related concepts, and attempt to disambiguate them.

Keywords: NoSQL Databases · NoSQL data models · NoSQL characteristics · NoSQL Classification

1 Introduction. The proliferation of data sources ranging from social media and Internet of Things (IoT) to industrially generated data (e.g. transactions) has led to a growing demand for data intensive cloud based applications and has created new challenges for big-data-era databases.

IBM Cloudant: Database As a Service Fundamentals

IBM Cloudant: Database as a Service Fundamentals (front cover). Understand the basics of NoSQL document data stores. Access and work with the Cloudant API. Work programmatically with Cloudant data. Christopher Bienko, Marina Greenstein, Stephen E Holt, Richard T Phillips. ibm.com/redbooks. Redpaper.

Contents

- Notices
  - Trademarks
- Preface
  - Authors
  - Now you can become a published author, too!
  - Comments welcome
  - Stay connected to IBM Redbooks
- Chapter 1. Survey of the database landscape
  - 1.1 The fundamentals and evolution of relational databases
    - 1.1.1 The relational model
    - 1.1.2 The CAP Theorem
  - 1.2 The NoSQL paradigm
    - 1.2.1 ACID versus BASE systems
    - 1.2.2 What is NoSQL?
    - 1.2.3 NoSQL database landscape
    - 1.2.4 JSON and schema-less model
- Chapter 2. Build more, grow more, and sleep more with IBM Cloudant
  - 2.1 Business value
  - 2.2 Solution overview
  - 2.3 Solution architecture
  - 2.4 Usage scenarios
  - 2.5 Intuitively interact with data using Cloudant Dashboard
    - 2.5.1 Editing JSON documents using Cloudant Dashboard
    - 2.5.2 Configuring access permissions and replication jobs within Cloudant Dashboard
  - 2.6 A continuum of services working together on cloud
    - 2.6.1 Provisioning an analytics warehouse with IBM dashDB
    - 2.6.2 Data refinement services on-premises
    - 2.6.3 Data refinement services on the cloud
    - 2.6.4 Hybrid clouds: Data refinement across on/off–premises
- Chapter 3. …

Cache Serializability: Reducing Inconsistency in Edge Transactions

Cache Serializability: Reducing Inconsistency in Edge Transactions. Ittay Eyal, Ken Birman, Robbert van Renesse. Cornell University.

Abstract: Read-only caches are widely used in cloud infrastructures to reduce access latency and load on backend databases. Operators view coherent caches as impractical at genuinely large scale and many client-facing caches are updated in an asynchronous manner with best-effort pipelines. Existing solutions that support cache consistency are inapplicable to this scenario since they require a round trip to the database on every cache transaction. Existing incoherent cache technologies are oblivious to transactional data access, even if the backend database supports transactions. We propose T-Cache, a novel caching policy for read-only transactions in which inconsistency is tolerable (won’t cause safety violations) but undesirable (has a cost). T-Cache improves cache consistency despite asynchronous and unreliable communication between the cache and the database. We define cache-serializability, a variant of serializability that is suitable …

[…] distributed databases. Until recently, technical challenges have forced such large-system operators to forgo transactional consistency, providing per-object consistency instead, often with some form of eventual consistency. In contrast, backend systems often support transactions with guarantees such as snapshot isolation and even full transactional atomicity [9], [4], [11], [10]. Our work begins with the observation that it can be difficult for client-tier applications to leverage the transactions that the databases provide: transactional reads satisfied primarily from edge caches cannot guarantee coherency. Yet, by running from cache, client-tier transactions shield the backend database from excessive load, and because caches are typically placed close to the clients, response latency can be improved.

A Transaction Processing Method for Distributed Database

Advances in Computer Science Research, volume 87. 3rd International Conference on Mechatronics Engineering and Information Technology (ICMEIT 2019). A Transaction Processing Method for Distributed Database. Zhian Lin, Chi Zhang. School of Computer and Cyberspace Security, Communication University of China, Beijing, China.

Abstract. This paper introduces the distributed transaction processing model and the two-phase commit protocol, and analyses the shortcomings of the two-phase commit protocol. We then propose a new distributed transaction processing method which adds a heartbeat mechanism to the two-phase commit protocol. Using this method can improve reliability and reduce blocking in distributed transaction processing.

Keywords: distributed transaction, two-phase commit protocol, heartbeat mechanism.

1. Introduction. Most database services of application systems are distributed over several servers, especially in large-scale systems, so distributed transaction processing is involved in the execution of business logic. At present, the two-phase commit protocol is one of the methods for distributed transaction processing in distributed database systems. The two-phase commit protocol includes a coordinator (transaction manager) and several participants (databases). During communication between the coordinator and the participants, if a participant fails and does not reply, the coordinator can only keep waiting, which can easily block the system. In this paper, a heartbeat mechanism is introduced to monitor participants, which avoids the blocking risk of the two-phase commit protocol and improves the reliability and efficiency of the distributed database system.

2. Distributed Transactions. 2.1 Distributed Transaction Processing Model. In a distributed system, each node is physically independent, and the nodes communicate and coordinate with each other through the network.
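To make the idea in this excerpt concrete, here is a minimal sketch of a two-phase commit coordinator that consults heartbeat timestamps before asking a participant to vote, so that a silent participant leads to an abort rather than an indefinite wait. It is an illustration under stated assumptions, not the method from the paper: the names `Participant`, `Coordinator`, and `heartbeat_timeout` are hypothetical, everything runs in one process, and the clock value is passed in explicitly so the behaviour is deterministic.

```python
import time
from dataclasses import dataclass, field


@dataclass
class Participant:
    """Hypothetical participant (database node); not taken from the paper."""
    name: str
    will_vote_yes: bool = True
    last_heartbeat: float = field(default_factory=time.monotonic)
    state: str = "init"

    def prepare(self) -> bool:
        # Phase 1: vote on whether the local transaction can commit.
        self.state = "prepared" if self.will_vote_yes else "aborted"
        return self.will_vote_yes

    def commit(self) -> None:
        self.state = "committed"

    def abort(self) -> None:
        self.state = "aborted"


class Coordinator:
    """Hypothetical coordinator that aborts when a heartbeat is stale."""

    def __init__(self, participants, heartbeat_timeout=1.0):
        self.participants = list(participants)
        self.heartbeat_timeout = heartbeat_timeout  # seconds, assumed value

    def is_alive(self, participant, now):
        return now - participant.last_heartbeat <= self.heartbeat_timeout

    def run(self, now=None):
        now = time.monotonic() if now is None else now
        # Phase 1: ask for votes, but never wait on a participant whose
        # last heartbeat is older than the timeout.
        for p in self.participants:
            if not self.is_alive(p, now) or not p.prepare():
                self._abort_reachable(now)
                return "aborted"
        # Phase 2: every participant is alive and voted yes.
        for p in self.participants:
            p.commit()
        return "committed"

    def _abort_reachable(self, now):
        # Only reachable participants can be told to abort; a failed one
        # keeps its old state until it recovers.
        for p in self.participants:
            if self.is_alive(p, now):
                p.abort()


if __name__ == "__main__":
    healthy = [Participant("db1", last_heartbeat=100.0),
               Participant("db2", last_heartbeat=100.0)]
    print(Coordinator(healthy).run(now=100.5))

    stale = [Participant("db1", last_heartbeat=100.0),
             Participant("db2", last_heartbeat=90.0)]
    print(Coordinator(stale).run(now=100.5))
```

A real system would also need durable logging and a recovery path for participants that fail after voting, which this sketch deliberately leaves out.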