The Perception of Movement Through Musical Sound: Towards A

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Connecting Music and Place: Exploring Library Collection Data Using Geo-Visualizations

Evidence Based Library and Information Practice 2017, 12.2 Evidence Based Library and Information Practice Research Article Connecting Music and Place: Exploring Library Collection Data Using Geo-visualizations Carolyn Doi Music & Education Librarian University Library University of Saskatchewan Saskatoon, Saskatchewan, Canada Email: [email protected] Received: 23 Jan. 2017 Accepted: 26 Mar. 2017 2017 Doi. This is an Open Access article distributed under the terms of the Creative Commons‐Attribution‐ Noncommercial‐Share Alike License 4.0 International (http://creativecommons.org/licenses/by-nc-sa/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly attributed, not used for commercial purposes, and, if transformed, the resulting work is redistributed under the same or similar license to this one. Abstract Objectives – This project had two stated objectives: 1) to compare the location and concentration of Saskatchewan-based large ensembles (bands, orchestras, choirs) within the province, with the intention to draw conclusions about the history of community-based musical activity within the province; and 2) to enable location-based browsing of Saskatchewan music materials through an interactive search interface. Methods – Data was harvested from MARC metadata found in the library catalogue for a special collection of Saskatchewan music at the University of Saskatchewan. Microsoft Excel and OpenRefine were used to screen, clean, and enhance the dataset. Data was imported into ArcGIS software, where it was plotted using a geo-visualization showing location and concentrations of musical activity by large ensembles within the province. The geo-visualization also allows users to filter results based on the ensemble type (band, orchestra, or choir). -

1 Making the Clarinet Sing

Making the Clarinet Sing: Enhancing Clarinet Tone, Breathing, and Phrase Nuance through Voice Pedagogy D.M.A Document Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Musical Arts in the Graduate School of The Ohio State University By Alyssa Rose Powell, M.M. Graduate Program in Music The Ohio State University 2020 D.M.A. Document Committee Dr. Caroline A. Hartig, Advisor Dr. Scott McCoy Dr. Eugenia Costa-Giomi Professor Katherine Borst Jones 1 Copyrighted by Alyssa Rose Powell 2020 2 Abstract The clarinet has been favorably compared to the human singing voice since its invention and continues to be sought after for its expressive, singing qualities. How is the clarinet like the human singing voice? What facets of singing do clarinetists strive to imitate? Can voice pedagogy inform clarinet playing to improve technique and artistry? This study begins with a brief historical investigation into the origins of modern voice technique, bel canto, and highlights the way it influenced the development of the clarinet. Bel canto set the standards for tone, expression, and pedagogy in classical western singing which was reflected in the clarinet tradition a hundred years later. Present day clarinetists still use bel canto principles, implying the potential relevance of other facets of modern voice pedagogy. Singing techniques for breathing, tone conceptualization, registration, and timbral nuance are explored along with their possible relevance to clarinet performance. The singer ‘in action’ is presented through an analysis of the phrasing used by Maria Callas in a portion of ‘Donde lieta’ from Puccini’s La Bohème. This demonstrates the influence of text on interpretation for singers. -

ANDERTON Music Festival Capitalism

1 Music Festival Capitalism Chris Anderton Abstract: This chapter adds to a growing subfield of music festival studies by examining the business practices and cultures of the commercial outdoor sector, with a particular focus on rock, pop and dance music events. The events of this sector require substantial financial and other capital in order to be staged and achieve success, yet the market is highly volatile, with relatively few festivals managing to attain longevity. It is argued that these events must balance their commercial needs with the socio-cultural expectations of their audiences for hedonistic, carnivalesque experiences that draw on countercultural understanding of festival culture (the countercultural carnivalesque). This balancing act has come into increased focus as corporate promoters, brand sponsors and venture capitalists have sought to dominate the market in the neoliberal era of late capitalism. The chapter examines the riskiness and volatility of the sector before examining contemporary economic strategies for risk management and audience development, and critiques of these corporatizing and mainstreaming processes. Keywords: music festival; carnivalesque; counterculture; risk management; cool capitalism A popular music festival may be defined as a live event consisting of multiple musical performances, held over one or more days (Shuker, 2017, 131), though the connotations of 2 the word “festival” extend much further than this, as I will discuss below. For the purposes of this chapter, “popular music” is conceived as music that is produced by contemporary artists, has commercial appeal, and does not rely on public subsidies to exist, hence typically ranges from rock and pop through to rap and electronic dance music, but excludes most classical music and opera (Connolly and Krueger 2006, 667). -

Written and Recorded Preparation Guides: Selected Repertoire from the University Interscholastic League Prescribed List for Flute and Piano

Written and Recorded Preparation Guides: Selected Repertoire from the University Interscholastic League Prescribed List for Flute and Piano by Maria Payan, M.M., B.M. A Thesis In Music Performance Submitted to the Graduate Faculty of Texas Tech University in Partial Fulfillment of the Requirements for the Degree of Doctor of Musical Arts Approved Dr. Lisa Garner Santa Chair of Committee Dr. Keith Dye Dr. David Shea Dominick Casadonte Interim Dean of the Graduate School May 2013 Copyright 2013, Maria Payan Texas Tech University, Maria Payan, May 2013 ACKNOWLEDGEMENTS This project could not have started without the extraordinary help and encouragement of Dr. Lisa Garner Santa. The education, time, and support she gave me during my studies at Texas Tech University convey her devotion to her job. I have no words to express my gratitude towards her. In addition, this project could not have been finished without the immense help and patience of Dr. Keith Dye. For his generosity in helping me organize and edit this project, I thank him greatly. Finally, I would like to give my dearest gratitude to Donna Hogan. Without her endless advice and editing, this project would not have been at the level it is today. ii Texas Tech University, Maria Payan, May 2013 TABLE OF CONTENTS ACKNOWLEDGEMENTS .................................................................................. ii LIST OF FIGURES .............................................................................................. v 1. INTRODUCTION ............................................................................................ -

8Th Grade Orchestra Curriculum Map

8th Grade Orchestra Curriculum Map Music Reading: Student will be able to read and perform one octave major and melodic minor scales in the keys of EbM/cm; BbM/gm; FM/dm; CM/am; GM/em; DM/bm; AM/f#m Student will be able to read and perform key changes. Student will be able to read and correctly perform accidentals and understand their duration. Student will be able to read and perform simple tenor clef selections in cello/bass; treble clef in viola Violin Students will be able to read and perform music written on upper ledger lines. Rhythm: Students will review basic time signature concepts Students will read, write and perform compound dotted rhythms, 32nd notes Students will learn and perform basic concepts of asymmetrical meter Students will review/reinforce elements of successful time signature changes Students will be able to perform with internal subdivision Students will be able to count and perform passages successfully with correct rhythms, using professional counting system. Students will be able to synchronize performance of rhythmic motives within the section. Students will be able to synchronize performance of the sections’ rhythm to the other sections of the orchestra. Pitch: Student is proficient in turning his/her own instrument with the fine tuners. Student is able to hear bottom open string of octave ring when top note is played. Students will review and reinforce tuning to perfect 5ths using the following methods: o tuning across the orchestra o listening and tuning to the 5ths on personal instrument. Student performs with good intonation within the section. -

The 2020 NAMM Global Report Provides Members with Industry Data to Guide Decision Making

2020 The 2020 NAMM Global Report Provides Members with Industry Data to Guide Decision Making This copy belongs to Markus Theinert A MESSAGE FROM THE CHAIR AND PRESIDENT/CEO The current economic and health situation reveals just how interconnected we all are. From trade to travel, our world has been turned upside down, but even as we grapple with change, one thing is certain: We are in this together. Regardless of political rhetoric, global supply chains and digital integration are here to stay. Our industry requires global growth to maintain our bottom lines, and data-driven decision-making will define the next century. While growth is never a straight line and there will certainly be bumps in the road, the trends and forces at play provide hope for a brighter, stronger future—one in which membership can power both a feeling of collectivity and the means to succeed. We believe that music unites us and brings out the best in humanity. In pursuit of this noble goal, NAMM is committed to providing tools to support our members all around the world. Whether it’s by offering business-building educational resources or connecting buyers and sellers, or through data analysis such as this report, we seek to empower you to make broad, economic decisions, while growing your network and skills. For this reason, we bring you the 2020 NAMM Global Report, capturing a snapshot of 28 markets. With 132 countries and regions attending The NAMM Show and international firms composing 32 percent of our total membership base, we recognize and value the need for a global industry perspective. -

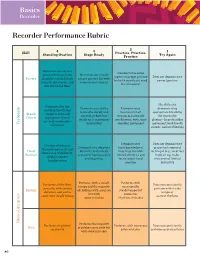

Recorder Performance Rubric

Basics Recorder Recorder Performance Rubric 2 Skill 4 3 Practice, Practice, 1 Standing Ovation Stage Ready Practice Try Again Demonstrates correct Demonstrates some posture with neck and Demonstrates mostly aspects of proper posture Does not demonstrate Posture shoulders relaxed, back proper posture but with but with significant need correct posture straight, chest open, and some inconsistencies for refinement feet flat on the floor Has difficulty Demonstrates low Demonstrates ability Demonstrates demonstrating and deep breath that to breathe deeply and inconsistent air appropriate breathing Breath supports even and control air flow, but stream, occasionally for successful Control appropriate flow of steady air is sometimes overblowing, with some playing—large shoulder air, with no shoulder Technique inconsistent shoulder movement movement, loud breath movement sounds, and overblowing Demonstrates Does not demonstrate Consistently fingers Demonstrates adequate basic knowledge of proper instrumental the notes correctly and Hand dexterity with mostly fingerings but with technique (e.g., incorrect shows ease of dexterity; Position consistent hand position limited dexterity and hand on top, holes displays correct and fingerings inconsistent hand not covered, limited hand position position dexterity) Performs with a steady Performs with Performs all rhythms Does not consistently tempo and the majority occasionally correctly, with correct perform with steady Rhythm of rhythms with accuracy steady tempo but duration, and with a tempo or but -

Developing a New Text for Music 304 at Iowa State University and Measuring Its Viability

Iowa State University Capstones, Theses and Retrospective Theses and Dissertations Dissertations 1-1-2006 Developing a new text for Music 304 at Iowa State University and measuring its viability Ryan Dean Sheeler Iowa State University Follow this and additional works at: https://lib.dr.iastate.edu/rtd Recommended Citation Sheeler, Ryan Dean, "Developing a new text for Music 304 at Iowa State University and measuring its viability" (2006). Retrospective Theses and Dissertations. 19085. https://lib.dr.iastate.edu/rtd/19085 This Thesis is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Retrospective Theses and Dissertations by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. Developing a new text for Music 304 at Iowa State University and measuring its viability by Ryan Dean Sheeler A thesis submitted to the graduate faculty in partial fulf llment of the requirements for the degree of MASTER OF ARTS Major: Interdisciplinary Graduate Studies (Arts and Humanities) Program of Study Committee: David Stuart, Major Professor Debra Marquart, English Jeffrey Prater, Music Iowa State University Ames, Iowa 2006 CopyrightO Ryan Dean Sheeler 2006. All rights reserved. 11 Graduate College Iowa State University This is to certify that master's thesis of Ryan Dean Sheeler has met the thesis requirements of Iowa State University Signatures -

Max Neuhaus, R. Murray Schafer, and the Challenges of Noise

University of Kentucky UKnowledge Theses and Dissertations--Music Music 2018 MAX NEUHAUS, R. MURRAY SCHAFER, AND THE CHALLENGES OF NOISE Megan Elizabeth Murph University of Kentucky, [email protected] Digital Object Identifier: https://doi.org/10.13023/etd.2018.233 Right click to open a feedback form in a new tab to let us know how this document benefits ou.y Recommended Citation Murph, Megan Elizabeth, "MAX NEUHAUS, R. MURRAY SCHAFER, AND THE CHALLENGES OF NOISE" (2018). Theses and Dissertations--Music. 118. https://uknowledge.uky.edu/music_etds/118 This Doctoral Dissertation is brought to you for free and open access by the Music at UKnowledge. It has been accepted for inclusion in Theses and Dissertations--Music by an authorized administrator of UKnowledge. For more information, please contact [email protected]. STUDENT AGREEMENT: I represent that my thesis or dissertation and abstract are my original work. Proper attribution has been given to all outside sources. I understand that I am solely responsible for obtaining any needed copyright permissions. I have obtained needed written permission statement(s) from the owner(s) of each third-party copyrighted matter to be included in my work, allowing electronic distribution (if such use is not permitted by the fair use doctrine) which will be submitted to UKnowledge as Additional File. I hereby grant to The University of Kentucky and its agents the irrevocable, non-exclusive, and royalty-free license to archive and make accessible my work in whole or in part in all forms of media, now or hereafter known. I agree that the document mentioned above may be made available immediately for worldwide access unless an embargo applies. -

Conducting Studies Conference 2016

Conducting Studies Conference 2016 24th – 26th June St Anne’s College University of Oxford Conducting Studies Conference 2016 24-26 June, St Anne’s College WELCOME It is with great pleasure that we welcome you to St Anne’s College and the Oxford Conducting Institute Conducting Studies Conference 2016. The conference brings together 44 speakers from around the globe presenting on a wide range of topics demonstrating the rich and multifaceted realm of conducting studies. The practice of conducting has significant impact on music-making across a wide variety of ensembles and musical contexts. While professional organizations and educational institutions have worked to develop the field through conducting masterclasses and conferences focused on professional development, and academic researchers have sought to explicate various aspects of conducting through focussed studies, there has yet to be a space where this knowledge has been brought together and explored as a cohesive topic. The OCI Conducting Studies Conference aims to redress this by bringing together practitioners and researchers into productive dialogue, promoting practice as research and raising awareness of the state of research in the field of conducting studies. We hope that this conference will provide a fruitful exchange of ideas and serve as a lightning rod for the further development of conducting studies research. The OCI Conducting Studies Conference Committee, Cayenna Ponchione-Bailey Dr John Traill Dr Benjamin Loeb Dr Anthony Gritten University of Oxford University of -

A History of Rhythm, Metronomes, and the Mechanization of Musicality

THE METRONOMIC PERFORMANCE PRACTICE: A HISTORY OF RHYTHM, METRONOMES, AND THE MECHANIZATION OF MUSICALITY by ALEXANDER EVAN BONUS A DISSERTATION Submitted in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy Department of Music CASE WESTERN RESERVE UNIVERSITY May, 2010 CASE WESTERN RESERVE UNIVERSITY SCHOOL OF GRADUATE STUDIES We hereby approve the thesis/dissertation of _____________________________________________________Alexander Evan Bonus candidate for the ______________________Doctor of Philosophy degree *. Dr. Mary Davis (signed)_______________________________________________ (chair of the committee) Dr. Daniel Goldmark ________________________________________________ Dr. Peter Bennett ________________________________________________ Dr. Martha Woodmansee ________________________________________________ ________________________________________________ ________________________________________________ (date) _______________________2/25/2010 *We also certify that written approval has been obtained for any proprietary material contained therein. Copyright © 2010 by Alexander Evan Bonus All rights reserved CONTENTS LIST OF FIGURES . ii LIST OF TABLES . v Preface . vi ABSTRACT . xviii Chapter I. THE HUMANITY OF MUSICAL TIME, THE INSUFFICIENCIES OF RHYTHMICAL NOTATION, AND THE FAILURE OF CLOCKWORK METRONOMES, CIRCA 1600-1900 . 1 II. MAELZEL’S MACHINES: A RECEPTION HISTORY OF MAELZEL, HIS MECHANICAL CULTURE, AND THE METRONOME . .112 III. THE SCIENTIFIC METRONOME . 180 IV. METRONOMIC RHYTHM, THE CHRONOGRAPHIC -

Anexo:Premios Y Nominaciones De Madonna 1 Anexo:Premios Y Nominaciones De Madonna

Anexo:Premios y nominaciones de Madonna 1 Anexo:Premios y nominaciones de Madonna Premios y nominaciones de Madonna interpretando «Ray of Light» durante la gira Sticky & Sweet en 2008. La canción ganó un MTV Video Music Awards por Video del año y un Grammy a mejor grabación dance. Premios y nominaciones Premio Ganados Nominaciones Total Premios 215 Nominaciones 407 Pendientes Las referencias y notas al pie Madonna es una cantante, compositora y actriz. Nació en Bay City, Michigan, el 16 de agosto de 1958, y creció en Rochester Hills, Michigan, se mudó a Nueva York en 1977 para lanzar su carrera en la danza moderna.[1] Después haber sido miembro de los grupos musicales pop Breakfast Club y Emmy, lanzó su auto-titulado álbum debut, Madonna en 1983 por Sire Records.[2] Recibió la nominación a Mejor artista nuevo en el MTV Video Music Awards (VMA) de 1984 por la canción «Borderline». Madonna fue seguido por una serie de éxitosos sencillos, de sus álbumes de estudio Like a Virgin de 1984 y True Blue en 1986, que le dieron reconocimiento mundial.[3] Madonna, se convirtió en un icono pop, empujando los límites de contenido lírico de la música popular y las imágenes de sus videos musicales, que se convirtió en un fijo en MTV.[4] En 1985, recibió una serie de nominaciones VMA por sus videos musicales y dos nominaciones en a la mejor interpretación vocal pop femenina de los premios Grammy. La revista Billboard la clasificó en lista Top Pop Artist para 1985, así como en el Top Pop Singles Artist en los próximos dos años.