Process for Establishing, Implementing, and Institutionalizing a Traffic Incident Management Performance Measurement Program (September 2016)

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Ohio State Troopers Association

Table of Contents: ARTICLE 1 - AGREEMENT; ARTICLE 2 - EFFECT OF AGREEMENT; ARTICLE 3 - CONFLICT AND AMENDMENT; ARTICLE 4 - MANAGEMENT RIGHTS; ARTICLE 5 - UNION RECOGNITION AND SECURITY; 5.01 Bargaining Unit; 5.02 Resolution of Dispute; 5.03 Bargaining Unit Work; ARTICLE 6 - NO STRIKE PROVISION; 6.01 Union Prohibition; 6.02 Affirmative Duty; 6.03 Disciplinary Actions; 6.04 Employer Prohibition; ARTICLE 7 - NON-DISCRIMINATION; ARTICLE 8 - OSTA TIME; 8.01 Union Delegate and Officer Leave -

Service Patrol Handbook

FEDERAL HIGHWAY ADMINISTRATION SERVICE PATROL HANDBOOK November 2008 NOTICE This document is disseminated under the sponsorship of the Department of Transportation in the interest of information exchange. The United States Government assumes no liability for its contents or use thereof. This report does not constitute a standard, specification, or regulation. The United States Government does not endorse products or manufacturers. Trade and manufacturers’ names appear in this report only because they are considered essential to the object of the document. i Technical Report Documentation Page 1. Report No. 2. Government Accession No. 3. Recipient’s Catalog No. FHWA-HOP-08-031 4. Title and Subtitle 5. Report Date Service Patrol Handbook November 2008 6. Performing Organization Code 7. Author(s) 8. Performing Organization Report No. Nancy Houston, Craig Baldwin, Andrea Vann Easton, Steve Cyra, P.E., P.T.O.E., Marc Hustad, P.E., Katie Belmore, EIT 9. Performing Organization Name and Address 10. Work Unit No. (TRAIS) Booz Allen Hamilton HNTB Corporation 8283 Greensboro Drive 11414 West Park Place, Suite 300 McLean, Virginia 22102 Milwaukee, WI 53224 11. Contract or Grant No. 12. Sponsoring Agency Name and Address 13. Type of Report and Period Covered Federal Highway Administration, HOTO-1 Final Report U. S. Department of Transportation 1200 New Jersey Avenue SE 14. Sponsoring Agency Code Washington, D. C. 20590 HOTO, FHWA 15. Supplementary Notes Paul Sullivan, FHWA Office of Operations, Office of Transportation Operations, Contracting Officer’s Technical Representative (COTR). Handbook development was performed under contract to Booz Allen Hamilton. 16. Abstract This Handbook provides an overview of the Full-Function Service Patrol (FFSP) and describes desired program characteristics from the viewpoint of an agency that is responsible for funding, managing, and operating the services. -

San Diego Police Department San Diego, California

03-35 SAN DIEGO POLICE DEPARTMENT SAN DIEGO, CALIFORNIA Project Summary: Drag-Net San Diego Police Department The Problem: Illegal motor vehicle speed contests, commonly known as street races, throughout the City of San Diego. Analysis: Officers developed a knowledge of the street-racing culture through undercover investigations, interviews with officers who had experience dealing with racers, monitoring Internet websites, interviewing racers, and exploring the legal alternatives that are available. Officers studied data on calls for service, traffic collisions, arrests, and citations related to illegal speed contests. Officers established baseline figures to determine the size of the problem. They identified collateral crimes that were occurring because of the problem. The officers set goals of reducing incidents of street racing to a level that it could be managed with existing resources and to reduce the number of illegally modified vehicles on the roadways. The most important analysis the officers made was whether they could impact the problem, despite its magnitude and history of indifference by society. They realized they had to change society's paradigm about street racing. The Drag-Net Officers decided they would only be successful if they truly made San Diego a safer place. They knew lives could be saved if their analysis was accurate, and the response was effective. Response: Officers used a multi-faceted approach in a comprehensive response strategy: • Undercover operations to identify, apprehend, and prosecute racers -

Rank State Population Troopers Per Capita Total Troopers 1 Florida

| Rank | State | Population | Troopers per Capita | Total Troopers |
|---|---|---|---|---|
| 1 | Florida | 18,801,000 | 8.473 | 1593 |
| 2 | Georgia | 9,688,000 | 8.237 | 798 |
| 3 | Delaware | 898,000 | 7.272 | 653 |
| 4 | Alaska | 710,000 | 5.451 | 387 |
| 5 | Vermont | 626,000 | 5.224 | 327 |
| 6 | West Virginia | 1,853,000 | 3.756 | 696 |
| 7 | Wyoming | 564,000 | 3.475 | 196 |
| 8 | Pennsylvania | 12,702,000 | 3.458 | 4392 |
| 9 | Massachusetts | 6,548,000 | 3.129 | 2049 |
| 10 | New Jersey | 8,792,000 | 3.11 | 2734 |
| 11 | Connecticut | 3,574,000 | 3.022 | 1080 |
| 12 | New Mexico | 2,059,000 | 2.632 | 542 |
| 13 | Montana | 989,000 | 2.568 | 254 |
| 14 | Nebraska | 1,826,000 | 2.568 | 469 |
| 15 | New Hampshire | 1,316,000 | 2.492 | 328 |
| 16 | Maryland | 5,774,000 | 2.473 | 1428 |
| 17 | Maine | 1,328,000 | 2.402 | 319 |
| 18 | New York | 19,378,000 | 2.333 | 4521 |
| 19 | Virginia | 8,001,000 | 2.307 | 1846 |
| 20 | North Dakota | 673,000 | 2.199 | 148 |
| 21 | Louisiana | 4,533,000 | 2.16 | 979 |
| 22 | Oklahoma | 3,751,000 | 2.127 | 798 |
| 23 | California | 37,254,000 | 2.086 | 7773 |
| 24 | Rhode Island | 1,053,000 | 2.061 | 217 |
| 25 | Kentucky | 4,339,000 | 2.053 | 891 |
| 26 | Missouri | 5,989,000 | 2.047 | 1226 |
| 27 | South Dakota | 814,000 | 1.99 | 162 |
| 30 | Indiana | 6,484,000 | 1.883 | 1221 |
| 31 | Michigan | 9,884,000 | 1.817 | 1796 |
| 32 | Kansas | 2,853,000 | 1.791 | 511 |
| 33 | Nevada | 2,701,000 | 1.785 | 462 |
| 34 | North Carolina | 9,535,000 | 1.769 | 1687 |
| 35 | South Carolina | 4,625,000 | 1.745 | 807 |
| 36 | Oregon | 3,831,000 | 1.731 | 663 |
| 37 | Idaho | 1,568,000 | 1.665 | 261 |
| 38 | Utah | 2,764,000 | 1.606 | 444 |
| 39 | Washington | 6,725,000 | 1.573 | 1058 |
| 40 | Arizona | 6,392,000 | 1.492 | 954 |
| 41 | Illinois | 12,831,000 | 1.483 | 1903 |
| 42 | Colorado | 5,029,000 | 1.469 | 739 |
| 43 | Alabama | 4,780,000 | 1.391 | 665 |
| 44 | Texas | 25,146,000 | 1.375 | 3457 |
| 45 | Ohio | 11,537,000 | 1.252 | 1445 |
| 46 | Iowa | 3,046,000 | 1.244 | 379 |
| 47 | Tennessee | 6,346,000 | | |
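The rate column in this excerpt can be cross-checked against the population and trooper counts. The sketch below is only an illustration: the function name `troopers_per` and the choice of scale are assumptions, since the excerpt does not state the unit behind "Troopers per Capita". For the rows checked here, Delaware, Pennsylvania, and Wyoming reproduce at a scale of troopers per 10,000 residents, while the Florida and Georgia figures reproduce only at a scale of per 100,000.

```python
def troopers_per(residents_scale: int, troopers: int, population: int) -> float:
    """Troopers per `residents_scale` residents, rounded to three decimals."""
    return round(troopers / population * residents_scale, 3)


# (state, population, total troopers, rate as listed in the excerpt)
rows = [
    ("Delaware", 898_000, 653, 7.272),
    ("Pennsylvania", 12_702_000, 4392, 3.458),
    ("Wyoming", 564_000, 196, 3.475),
    ("Florida", 18_801_000, 1593, 8.473),
    ("Georgia", 9_688_000, 798, 8.237),
]

for state, population, troopers, listed in rows:
    per_10k = troopers_per(10_000, troopers, population)
    per_100k = troopers_per(100_000, troopers, population)
    print(f"{state}: listed {listed}, per 10,000 = {per_10k}, per 100,000 = {per_100k}")
```

If the column is meant to use one scale throughout, the two top-ranked rows would need rechecking against the original publication before the ranking is relied on.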

Motorist Assistance Program Is Available in the Kansas City, Topeka, Wichita, and Salina Metro Areas

TROOP HEADQUARTERS Troop A - Olathe (913) 782-8100; Troop B - Topeka (785) 296-3102; Troop C - Salina (785) 827-3065; Troop D - Hays (785) 625-3518; Troop E - Garden City (620) 276-3201; Troop F - Wichita (316) 744-0451; Troop G - Turnpike (316) 682-4357; Troop H - Chanute (620) 431-2100. EMERGENCY PHONE NUMBERS You can easily contact the Kansas Highway Patrol Communications Center while traveling. These phone numbers can be dialed on your cell phone to report a vehicle breakdown, emergency, accident, erratic driver, or criminal activity: *47 to reach the Kansas Highway Patrol; *KTA (582) while on the Kansas Turnpike. ROAD OR WEATHER CONDITIONS To obtain road construction information or inclement weather updates, please call 511 or visit www.KanDrive.org. The Motorist Assistance Program is available in the Kansas City, Topeka, Wichita, and Salina metro areas. Travelers on the Kansas Turnpike during the summer months will be assisted by a State Farm Safety Assist vehicle. Technicians will provide only immediate services needed to ensure the safety of Kansas Turnpike Authority customers and the safe movement of traffic. This program is not affiliated with the Kansas Highway Patrol Motorist Assistance Program. 122 SW 7th Street, Topeka, KS 66603, www.KansasHighwayPatrol.org. KHP civilian motorist assistance technicians aid motorists and help manage traffic in high traffic metro areas by removing disabled and abandoned vehicles from the roadway. PROGRAM GOALS Disabled vehicles on our major highways cause numerous problems when it comes to public safety. To address these problems, the Kansas Highway Patrol (KHP) and the Kansas Department of Transportation (KDOT) established a Motorist Assistance Program. WHAT TO DO IF YOUR VEHICLE BREAKS DOWN -

Traffic Tickets and Other Violations

TRAFFIC TICKETS AND OTHER VIOLATIONS Receiving a traffic citation can be stressful and intimidating. This section discusses some common concerns regarding traffic citations and other traffic violations. IF YOU ARE STOPPED The California Department of Motor Vehicles (DMV) suggests that drivers turn on their right turn signals immediately after an officer alerts them to pull over. Officers will alert a driver to pull over by turning on flashing lights on their patrol car. The driver should pull over as far to the right as possible, and should proceed in a safe manner. It is important for the driver to stay calm. The DMV recommends that when pulling over, drivers should discontinue cell phone use, turn off the radio, and place both hands on the steering wheel. Placing both hands on the steering wheel will allow the officer to see that the driver is not holding a weapon. Drivers should have their driver’s license, registration, and proof of insurance easily accessible to present to an officer during a traffic stop. If the officer issues a citation, the driver does not admit guilt by signing the ticket. Instead, by signing the ticket, the driver promises to appear in court. In many instances, citations can be handled by mail. COURTESY NOTICES The Superior Court of California, County of San Diego – Traffic/Minor Offenses Division has jurisdiction over Vehicle Code infractions occurring in San Diego County. Most traffic citations are based on violations of the Vehicle Code. The court will usually mail a courtesy notice to the driver about two weeks before the court appearance date indicated on the ticket. -

State Patrol Inspector Position Description

2 WISCONSIN STATE PATROL INSPECTOR PD # 19 (11/09/01) 14. POSITION SUMMARY: After successful completion of the State Patrol Academy training program, Inspectors will work under close to limited supervision of a State Patrol Sergeant. After one year of job experience, the level of supervision will progress from limited to general. State Patrol Inspector will personally follow all safety rules and safe work practices established by the Department of Transportation; follow all rules and regulations regarding safety, and maintain the high safety and health standards developed and approved by the Department; report incidents/crashes/near misses that resulted or could have resulted in personal injury; maintain safe working conditions and wear appropriate personal protective equipment in designated areas; offer safety and health suggestions that would reduce risks to workers. Employees in this position exercise arrest authority and are responsible for enforcement of and compliance with state laws, administrative orders and regulations relating to the operation of motor carrier, school buses, and other vehicles. Work involves operation of safety and weight enforcement facilities and use of portable scales for the purpose of detecting violations of motor carrier laws, safety regulations and hazardous materials requirements, safety compliance review, may serve as Field Training Officers, and specific state statutes relating to OWI and criminal offenses. Work includes patrolling public highways. Inspectors have developed extensive knowledge and skills required to function as law enforcement officers. Inspectors have received basic MCSAP training; may serve on the State Patrol Accident Classification Board; may serve on a State Patrol Board of Review; and may serve as appointed member of Division of State Patrol and Department of Transportation committees. -

CALIFORNIA HIGHWAY PATROL Corridor Safety Program—A Collaborative Approach to Traffic Safety

CALIFORNIA HIGHWAY PATROL Corridor Safety Program—A Collaborative Approach to Traffic Safety Judge's Commentary The focus of most problem-oriented projects is crime, but it might just as well be any other of the recurring problems dealt with by police. In fact, this year's winner of the Goldstein Award was focused on a traffic problem: a high rate of fatal accidents on a particular stretch of rural highway in California. The project's novelty was one reason for its selection. Another was that it was focused on an important, life-threatening problem. However, judges liked many other things about the project, including the following: • Scanning was unusually rigorous. The stretch of highway, or corridor, was selected from among 550 qualifying roadway segments on the basis of its high rate of collisions and fatalities during a three-year period. A particularly serious accident resulted in its being given high priority for treatment by the California Highway Patrol. • Analysis consisted of a detailed review of accident data, together with input from a task force of state and local stakeholders who made a daylong visit to the roadway to see the problem first hand. The principal factors involved in the different kinds of collisions were Summary The Problem: California State Routes (SR) 41 and 46 are rural east-west highways connecting California's Central Valley to the central coast region. After a particularly serious multiple fatality collision in 1995, the local community asked the California Highway Patrol (CHP) to assist them in reducing such tragic incidents along this corridor. A brief look at the collision picture along the corridor confirmed the need to act quickly. -

Searches 11.02 Issue Date 02/01/96 Revision Date 5/29/15 Total Pages 7

FLORIDA HIGHWAY PATROL POLICY MANUAL SUBJECT POLICY NUMBER SEARCHES 11.02 ISSUE DATE 02/01/96 REVISION DATE 5/29/15 TOTAL PAGES 7 11.02.01 PURPOSE To specify procedures used by members of the Florida Highway Patrol governing the search and seizure of persons and/or property. 11.02.02 POLICY It is the policy of the Florida Highway Patrol to employ measures that safeguard the rights granted in the Fourth Amendment of the United States Constitution relating to search and seizures. Article I, section 12 of the Florida Constitution also protects persons and places from unreasonable searches and seizures. 11.02.03 DEFINITIONS A. BIASED POLICING (formerly: Biased Based Profiling)– The selection of an Individual(s) for enforcement action based in whole or in part on a trait common to a group, without actionable intelligence to support consideration of that trait. This includes, but is not limited to, race, ethnic background, national origin, gender, sexual orientation/identity, religion, economic status, age, cultural group, or any other identifiable characteristics. B. PLAIN VIEW – Observation of evidence of a crime or contraband, without searching, from a location where the member has a lawful right to be. C. PROBABLE CAUSE – A fair probability that the evidence will be found, based on an objective assessment of the totality of the circumstances, viewed in light of the member’s training and experience. It means there is a reasonable basis for believing that a crime has been or is being committed, and showing that the items to be seized are at the place to be searched. -

State Highway Patrols-Their Functions and Financing

State Highway Patrols-Their Functions and Financing EDWARD A. GLADSTONE and THOMAS W. COOPER, U.S. Bureau of Public Roads, Office of Planning •CONCERN FOR the safety of the motoring public, and the importance of the state police organizations in enforcing traffic and safety laws, was voiced by the governors of most of the 47 states in which the legislatures met in regular session in 1965. In state after state the governor's message emphasized highway safety and pointed to the need for additional highway patrol troops to curb highway accidents and fatalities. In at least 30 states requests were made for an increase in patrol strength, either by the governor, by legislative committees, or by safety agencies. Collectively, specific requests were made in 21 states for nearly 3,800 troopers to be added to the patrol strength within the next one to four years. A summary of the requests (as of mid-1965) is given in Table 1. If approved, these requests would increase patrol strength by an average of 23 percent.

TABLE 1. REQUESTS FOR ADDITIONAL PATROL TROOPERS, SUBMITTED TO 1965 STATE LEGISLATURES BY GOVERNORS, LEGISLATIVE COMMITTEES, OR OTHERS

| State | Number (where stated) | State | Number (where stated) |
|---|---|---|---|
| Arkansas | - | New York | 112 |
| California | 195 | North Carolina | 200 (4 years) |
| Florida | 212 | Ohio | 400 (2 years) |
| Georgia | 80 (2 years) | Oklahoma | 100 (2 years) |
| Illinois | 800 (4 years) | Pennsylvania | 300 |
| Indiana | 150 | South Carolina | - |
| Iowa | 100 (2 years) | South Dakota | - |
| Kansas | 50 | Tennessee | 100 |
| Maryland | 40 | Texas | - |
| Michigan | 200 | Utah | 20 |
| Minnesota | 368 (by 1973) | Vermont | 42 (2 years) |
| Missouri | 250 | Washington | - |
| Nebraska | 50 | West Virginia | - |
| Nevada | - | Wisconsin | - |
| New Mexico | 10 | Wyoming | - |

Source: Daily legislative bulletins published by the National. -
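The text's claim of specific requests in 21 states for nearly 3,800 troopers can be checked against the Table 1 entries. The sketch below is a transcription under stated assumptions, not the authors' own tabulation: digit-like letters in the scanned table are read as digits (Ohio 400, Georgia 80, Illinois 800, Maryland 40), and the unreadable state name between Iowa and Maryland is read as Kansas. The variable names are illustrative.

```python
# Requested additional troopers by state, transcribed from Table 1.
# Assumption: scan-damaged entries are read as Ohio 400, Georgia 80,
# Illinois 800, Maryland 40, and the garbled state name as Kansas.
# States listed with "-" (no number stated) are omitted.
requests = {
    "California": 195, "Florida": 212, "Georgia": 80, "Illinois": 800,
    "Indiana": 150, "Iowa": 100, "Kansas": 50, "Maryland": 40,
    "Michigan": 200, "Minnesota": 368, "Missouri": 250, "Nebraska": 50,
    "New Mexico": 10, "New York": 112, "North Carolina": 200, "Ohio": 400,
    "Oklahoma": 100, "Pennsylvania": 300, "Tennessee": 100, "Utah": 20,
    "Vermont": 42,
}

states_with_numbers = len(requests)
total_requested = sum(requests.values())
print(states_with_numbers, total_requested)
```

Under these readings the table gives 21 states and 3,779 troopers, which agrees with the "21 states" and "nearly 3,800" stated in the text.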

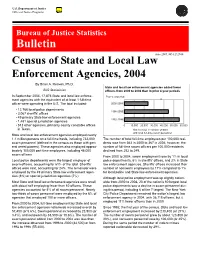

Census of State and Local Law Enforcement Agencies, 2004 by Brian A

U.S. Department of Justice, Office of Justice Programs. Bureau of Justice Statistics Bulletin, June 2007, NCJ 212749. Census of State and Local Law Enforcement Agencies, 2004. By Brian A. Reaves, Ph.D., BJS Statistician. In September 2004, 17,876 State and local law enforcement agencies with the equivalent of at least 1 full-time officer were operating in the U.S. The total included: • 12,766 local police departments • 3,067 sheriffs' offices • 49 primary State law enforcement agencies • 1,481 special jurisdiction agencies • 513 other agencies, primarily county constable offices in Texas. State and local law enforcement agencies employed nearly 1.1 million persons on a full-time basis, including 732,000 sworn personnel (defined in the census as those with general arrest powers). These agencies also employed approximately 105,000 part-time employees, including 46,000 sworn officers. Local police departments were the largest employer of sworn officers, accounting for 61% of the total. [Figure: State and local law enforcement agencies added fewer officers from 2000 to 2004 than in prior 4-year periods. Net increase in number of State and local full-time sworn personnel, by four-year period (1992-1996, 1996-2000, 2000-2004).] The number of total full-time employees per 100,000 residents rose from 362 in 2000 to 367 in 2004; however, the number of full-time sworn officers per 100,000 residents declined from 252 to 249. From 2000 to 2004, sworn employment rose by 1% in local police departments, 6% in sheriffs’ offices, and 2% in State -
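The agency breakdown in this excerpt can be checked against the stated total of 17,876. The sketch below is illustrative only; the dictionary keys and variable names are assumptions, and the final figure is an approximation because both the 732,000 sworn personnel and the 61% share are rounded values in the excerpt.

```python
# Agency counts by type, as listed in the excerpt for September 2004.
agency_counts = {
    "local police departments": 12_766,
    "sheriffs' offices": 3_067,
    "primary State law enforcement agencies": 49,
    "special jurisdiction agencies": 1_481,
    "other agencies": 513,
}

total_agencies = sum(agency_counts.values())

# Rough size of the local police share of full-time sworn personnel.
# Both inputs are rounded in the excerpt, so this is an estimate only.
sworn_full_time = 732_000
local_police_share = 0.61
approx_local_police_sworn = round(sworn_full_time * local_police_share)

print(total_agencies, approx_local_police_sworn)
```

The five categories sum to the 17,876 agencies reported, and the 61% share corresponds to roughly 447,000 full-time sworn officers in local police departments.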

Street Racing Enforcement and Illegal Emission Modifications -Expanded Course Outline

San Jose Police Department Street Racing Enforcement and Illegal Emission Modifications -Expanded Course Outline- I. Registration A. SJPD Administrative record keeping B. POST administrative record keeping II. DragNet Program History A. Office of Traffic Safety Grant 1. Accidents related to racing 2. Funding of program 3. Successes and failures B. Education aspects of Dragnet program 1. Class educational goals 2. DragNet spreading throughout the area 3. Handouts 4. Copyrights C. Enforcement aspects of Dragnet program 1. Operations 2. Multi agency collaborations 3. 24/7 emission enforcement III. Principles of Traffic Law A. Recognize basic Vehicle Code requirements related to vehicle body modifications 1. Fins 2. Air dams 3. Fenders B. Recognize legal vehicle modifications 1. Tachometer 2. Seats 3. Seatbelts 4. Stickers C. Recognize violations related to vehicle exhaust systems 1. Exhaust tips 2. Baffles 3. Decibels 4. Excessive or unusual noise D. Recognize violations related to vehicle modifications 1. Lights 2. Window tinting 3. Vehicle height 4. View obstructions E. Recognize violations related to illegal emissions 1. 27156b California Vehicle Code (CVC) 2. Stock motors 3. Show racers 4. Serious racers 5. Common modifications a. Open air elements b. Headers c. Colored tubing d. Camshaft gears e. Fuel pressure regulator f. Other associated modifications F. Recognize legal modifications accompanied by a Bureau of Automotive Repair (BAR) sticker (Referee Label) 1. Location 2. Type of sticker 3. Description of what is to be on the sticker G. Executive Order numbers 1. Character layout 2. California Air Resources Board (CARB) items H. Computers 1. Placement 2. Capabilities 3.