Tangible Interfaces for Manipulating Aggregates of Digital Information

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Misión Madres Del Barrio: a Bolivarian Social Program Recognizing Housework and Creating a Caring Economy in Venezuela

MISIÓN MADRES DEL BARRIO: A BOLIVARIAN SOCIAL PROGRAM RECOGNIZING HOUSEWORK AND CREATING A CARING ECONOMY IN VENEZUELA, by Cory Fischer-Hoffman. Available via CORE (core.ac.uk), provided by KU ScholarWorks. Submitted to the graduate degree program in Latin American Studies and the Graduate Faculty of the University of Kansas in partial fulfillment of the requirements for the degree of Master of Arts. Committee members: Elizabeth Anne Kuznesof, PhD (Chairperson); Tamara Falicov, PhD; Mehrangiz Najafizadeh, PhD. Date defended: May 8, 2008. The Thesis Committee for Cory Fischer-Hoffman certifies that this is the approved version of the thesis named above (Chairperson: Elizabeth Anne Kuznesof, PhD; date approved left blank). ACKNOWLEDGEMENTS This thesis is a product of years of activism in the welfare rights, Latin American solidarity, and global justice movements. Thank you to all of those who I have worked and struggled with. I would especially like to acknowledge Monica Peabody, community organizer with Parents Organizing for Welfare and Economic Rights (formerly WROC) and all of the welfare mamas who demand that their caring work be truly valued. Gracias to my compas, Greg, Wiley, Simón, Kaya, Tessa and Caro who keep me grounded and connected to movements for justice, and struggle alongside me. Thanks to my thesis committee for helping me navigate through the bureaucracy of academia while asking thoughtful questions and providing valuable guidance. I am especially grateful for the feedback and editing support that my dear friends offered just at the moment when I needed it.

μITRON 4.0 Specification (Ver. 4.03.00) TEF024-S001-04.03.00/en July 2010 Copyright © 2010 by T-Engine Forum

μITRON 4.0 Specification Ver. 4.03.00 TEF024-S001-04.03.00/en July 2010 μITRON 4.0 Specification (Ver. 4.03.00) TEF024-S001-04.03.00/en July 2010 Copyright © 2010 by T-Engine Forum. This “μITRON 4.0 Specification (Ver. 4.03.00)” was originally released by TRON ASSOCIATION which has been integrated into T-Engine Forum since January 2010. The same specification now has been released by T-Engine Forum as its own specification without modification of the content after going through the IPR procedures. T-Engine Forum owns the copyright of this specification. Permission of T-Engine Forum is necessary for copying, republishing, posting on servers, or redistribution to lists of the contents of this specification. The contents written in this specification may be changed without a prior notice for improvement or other reasons in the future. About this specification, please refer to follows; Publisher T-Engine Forum The 28th Kowa Building 2-20-1 Nishi-gotanda Shinagawa-Ward Tokyo 141-0031 Japan TEL:+81-3-5437-0572 FAX:+81-3-5437-2399 E-mail:[email protected] TEF024-S001-04.03.00/en µITRON4.0 Specification Ver. 4.03.00 Supervised by Ken Sakamura Edited and Published by TRON ASSOCIATION µITRON4.0 Specification (Ver. 4.03.00) The copyright of this specification document belongs to TRON Association. TRON Association grants the permission to copy the whole or a part of this specification document and to redistribute it intact without charge or at cost. However, when a part of this specification document is redistributed, it must clearly state (1) that it is a part of the µITRON4.0 Specification document, (2) which part was taken, and (3) the method to obtain the whole specification document. -

Brochure Download

Where Beauty Begins » World-leading market place for K-Beauty industry » Globalization of K-Beauty – World Beauty Network » Right place of state-of-the-art technology and information exchange » Maximizing customer success with On-and-offline Hybrid Event. Innovative solution for the industry’s futuristic growth, K-Beauty Expo. 1:1 Match with buyers from more than 40 countries: Over 30% of the buyers have more than 100 mil USD Sales; Worldwide distributor ‘Global Supply Chain’ invited to the show. Various Exhibitor Support Programs: KOTRA overseas buyers export conference; Buyer Booklet; On-and-offline MD consultation; Beauty-box giveaways; Advertising in seminars and lobbies. Major Buyers. Overseas Buyer Ratio by Continents: Asia 40.2%, CIS 20.9%, North America 16.5%, Europe 11.0%, Latin America 6.3%, Oceania 2.5%, Africa 2.5%. Major Visiting Countries: U.S.A, Russia, China, Philippines, Thailand, Japan, Hong Kong, Vietnam, Australia, England. ROAD MAP: World-leading market place for K-beauty industry. Event Summary: What » K-BEAUTY EXPO KOREA 2021. When » 7 – 9, October, 2021. Web » www.kbeautyexpo.com. Where » KINTEX 4&5 Hall (21,384㎡). Exhibiting Category » Cosmetics, Aesthetic, Hair, Body Care, Nail, Raw Materials, Packaging, Smart Beauty Device, etc. [Charts: Post-show Results (2016~2019) for Exhibitors, Visitors, Overseas Buyers, and On-site Contracts (USD); 2020 Online Show Results for Exhibitors, Overseas Buyers, Online Visitors (46,853; Foreign Visitors, 20%), Consultation Result, Consultation Satisfaction, and Re-attendance. Individual chart values are not recoverable from the extraction.]

Compact, Low-Cost, but Real-Time Distributed Computing for Computer Augmented Environments

Compact, Low-Cost, but Real-Time Distributed Computing for Computer Augmented Environments. Hiroaki Takada and Ken Sakamura, Department of Information Science, Faculty of Science, University of Tokyo, 7-3-1, Hongo, Bunkyo-ku, Tokyo 113, Japan. Abstract: We have been conducting a research and development project, called the TRON Project, for realizing the computer augmented environments. The final goal of the project is to realize highly functionally distributed systems (HFDS) in which every kind of objects around our daily life are augmented with embedded computers, be connected with networks, and cooperate each other to provide better living environments for human beings. One of the key technologies towards HFDS is the realization methods of compact and low-cost distributed computing systems with dependability and real-time property. In this paper, the overview of the TRON Project are introduced and various research and development activities for realizing HFDS, especially the subprojects on a standard real-time kernel specification for small-scale embedded systems called ITRON and on a low-cost real-time LAN called μITRON bus, are described. We also describe the future directions of the project centered on the TRON-concept Computer Augmented Building, which is a pilot realization of HFDS and being planned to be built. 1 Introduction: Recent advances in microprocessor technologies have [Figure 1: Computerized Living Environment] A typical example of “computing everywhere” environment is the TRON-concept Intelligent House, which was built experimentally in 1989 (Figure 2). The purpose was to create a testbed for future intensively computerized living spaces. The house with 330 m² of floor space was equipped with around 1000 computers, sensors, and actuators, which were loosely connected to form a distributed computing system.

Purchaser Guide

PURCHASER GUIDE. WELCOME TO ASCENT AT THE PHOENICIAN® Tucked at the base of revered Camelback Mountain, Ascent is a new private residential enclave, adjacent to The Phoenician®, one of Arizona’s premier luxury resort destinations. This community incorporates the Sonoran Desert landscape directly into its design, at the most enviable location in Scottsdale. AN ICONIC RESORT DESTINATION Ascent is located next to The Phoenician®, a world class hotel property on the edge of Arizona’s Sonoran Desert. For decades The Phoenician has guided guests through an evolving journey distinctly designed to nurture, inspire, and rejuvenate. Offering multiple experiences on one diverse property, the resort’s award-winning Luxury Collection includes The Phoenician, The Canyon Suites, The Phoenician Suites, and a full range of world-class amenities. SERENITY NEAR THE HEART OF SCOTTSDALE Tucked against the backdrop of Camelback Mountain, Ascent is steps from the amenities of The Phoenician® and in close proximity to the boutiques, restaurants, and galleries of Old Town Scottsdale and Fashion Square. Homes highlight desert contemporary design with outdoor living spaces and a private homeowner pool and fitness amenity on the south slope of Camelback Mountain. All images, renderings and specifications on this page are for illustrative and representation purposes only and subject to change without notice. WORLD CLASS DINING J&G Steakhouse, Mowry & Cotton, Thirsty Camel, Afternoon Tea: From classic French dishes to hand-crafted cocktails and poolside snacks, The Phoenician® has many innovative dining options to satisfy every mood and every craving. A WORLD CLASS DESERT PROPERTY An iconic resort destination, The Phoenician® recently completed an extensive renovation.

A Bold Plan, a Bright Future Loma Linda University

Loma Linda University, TheScholarsRepository@LLU: Digital Archive of Research, Scholarship & Creative Works. Scope, Summer 2007: A bold plan, a bright future. Loma Linda University. Follow this and additional works at: http://scholarsrepository.llu.edu/scope Part of the Other Medicine and Health Sciences Commons. Recommended Citation: Loma Linda University, "A bold plan, a bright future" (2007). Scope. http://scholarsrepository.llu.edu/scope/14 This Newsletter is brought to you for free and open access by TheScholarsRepository@LLU: Digital Archive of Research, Scholarship & Creative Works. It has been accepted for inclusion in Scope by an authorized administrator of TheScholarsRepository@LLU: Digital Archive of Research, Scholarship & Creative Works. For more information, please contact [email protected]. In this issue: Commencement 2007: Loma Linda University graduates 1,203 during commencement ceremonies in its 102nd year. Leveling the playing field: East Campus Hospital unveils its latest efforts to create a healing environment by opening a park with no boundaries. Loma Linda 360˚ debuts: LLUAHSC launches new broadcast to share stories of outreach, adventure, and service. Patients benefit from robotic surgery: Loma Linda surgeons use robotic surgery equipment to reduce the impact of surgery. A bold plan, a bright future: The CEO and administrator of Loma Linda University Medical Center shares the long-term vision. The Centennial Complex project moves forward: The vision of a facility at the technological forefront of medical education takes shape. Newscope & Alumni notes. On the covers: On the front cover, top left: The School of Nursing celebrated its 100th conferring-of-degrees ceremony with the graduation of its first doctoral students.

Japanese Semiconductor Industry Conference

Japanese Semiconductor Industry Conference, April 11-12, 1988, Hotel Century Hyatt, Tokyo, Japan. Dataquest, a company of The Dun & Bradstreet Corporation, 1290 Ridder Park Drive, San Jose, California 95131-2398, (408) 437-8000, Telex: 171973, Fax: (408) 437-0292. Sales/Service Offices: UNITED KINGDOM: Dataquest UK Limited, 13th Floor, Centrepoint, 103 New Oxford Street, London WC1A 1DD, England, 01-379-6257, Telex: 266195, Fax: 01-240-3653. FRANCE: Dataquest SARL, Tour Gallieni 2, 36, avenue Gallieni, 93175 Bagnolet Cedex, France, (1)48 97 31 00, Telex: 233 263, Fax: (1)48 97 34 00. EASTERN U.S.: Dataquest Boston, 1740 Massachusetts Ave., Boxborough, MA 01719, (617) 264-4373, Telex: 171973, Fax: (617) 263-0696. GERMANY: Dataquest GmbH, Rosenkavalierplatz 17, D-8000 Munich 81, West Germany, (089)91 10 64, Telex: 5218070, Fax: (089)91 21 89. JAPAN: Dataquest Japan, Ltd., Taiyo Ginza Building/2nd Floor, 7-14-16 Ginza, Chuo-ku, Tokyo 104, Japan, (03)546-3191, Telex: 32768, Fax: (03)546-3198. KOREA: Dataquest Korea, 63-1 Chungjung-ro, 3Ka, Seodaemun-ku, Seoul, Korea, (02)392-7273-5, Telex: 27926, Fax: (02)745-3199. The content of this report represents our interpretation and analysis of information generally available to the public or released by responsible individuals in the subject companies, but is not guaranteed as to accuracy or completeness. It does not contain material provided to us in confidence by our clients. This information is not furnished in connection with a sale or offer to sell securities, or in connection with the solicitation of an offer to buy securities.

Funnelle Struck by Theft Meningitis Reported 8 Residents Report Stolen Possessions During Early Morning of Feb

THE INDEPENDENT STUDENT NEWSPAPER OF OSWEGO STATE UNIVERSITY • www.oswegonian.com • Friday, Feb. 22, 2013 • VOLUME LXXVIII ISSUE III. Inside: Gone fishing: Students form club for angling enthusiasts. Headline: Funnelle struck by theft: 8 residents report stolen possessions during early morning of Feb. 12. On the Web: The ‘Badham Brigade’ wants students to dig deep for enthusiasm as Lakers head into playoffs (photo: Seamus Lyman | The Oswegonian). Case of bacterial meningitis reported at Oswego State. Seamus Lyman, Asst. News Editor, [email protected]. An Oswego State student has contracted bacterial meningitis and, as of Wednesday, is recovering at SUNY Upstate Medical University in Syracuse. On Monday, it was announced that the Oswego County Health Department had begun investigating a suspected case of the rare type of meningitis. The student is an 18-year-old female and is “responding well to treatment,” according to Diane Oldenburg, senior public health educator at OCHD. Elizabeth Burns, director of student health services at Mary Walker Health Center, said through email that no other students have been diagnosed at this time. According to a press release on Tuesday from the OCHD, “bacterial meningitis is a relatively rare but serious acute illness in which bacteria infect the covering of the brain and the spinal cord. A vaccine protects against the most common strains of meningococcal bacteria, and the student had been vaccinated.

Cash: Focus Should Be on Students Cash Said She Decided to Pur- Ranks of School Administration

SUNDAY, JULY 5, 2015 • 161st YEAR • No. 56 • CLEVELAND, TN • 58 PAGES • $1.00. Inside Today. Cash: focus should be on students. New director sets goal to ‘make sure students succeed’. By CHRISTY ARMSTRONG, Banner Staff Writer. Bradley County Director of Schools Dr. Linda Cash just wrapped up her first month on the job. The new director began June 1 after signing a three-year contract [...] Cash said she decided to pursue the job in Bradley County because of “the opportunity to lead a strong district.” Her goals for the future are to keep it going strong and to continue to make new progress, she said. Originally from Pickens, S.C., Cash arrived in Bradley County with years of educational experience. A high school athlete who played softball and competed in track and field, she started her [...] ranks of school administration. “Just through enjoying learning, I kept going back to school, got my master’s and my doctorate and moved into administration,” Cash said. “It’s a career that gave me the opportunity to be in the classroom at all three levels [elementary, middle and high school] and to have that related arts background.” Cash most recently served as the assistant director of schools for Tennessee’s Robertson County Schools. [...] versity, she was the principal of Station Camp Elementary School in Gallatin from 2008 to 2012 and of Westmoreland Elementary in Westmoreland from 2003 to 2008. Her resume also boasts experience as an assistant principal and a teaching career dating to 1984. Having experienced what it is like to be a teacher, Cash said she realizes the importance of investing time and resources in those who are responsible for teaching Bradley County’s children and

Transtel Agriculture 122530 Documentary 30 X 10 Min

Your Partner for Quality Television. DW Transtel is your source for captivating documentaries and a range of exciting programming from the heart of Europe. Whether you are interested in science, nature and the environment, history, the arts, culture and music, or current affairs, DW Transtel has hundreds of programs on offer in English, Spanish and Arabic. Versions in other languages including French, German, Portuguese and Russian are available for selected programs. DW Transtel is part of Deutsche Welle, Germany’s international broadcaster, which has been producing quality television programming for decades. Tune in to the best programming from Europe: tune in to DW Transtel. Categories: SCIENCE, TECHNOLOGY, MEDICINE, NATURE, ENVIRONMENT, ECONOMICS, AGRICULTURE, WORLD ISSUES, HISTORY, ARTS, CULTURE, PEOPLE, PLACES, CHILDREN, YOUTH, SPORTS, MOTORING, MUSIC, FICTION, ENTERTAINMENT. For screening and comprehensive catalog information, please register online at b2b.dw.com. DW Transtel | Sales and Distribution | 53110 Bonn, Germany | [email protected] Key. VIDEO FORMAT: 4K = Ultra High Definition; HD = High Definition; SD = Standard Definition. RIGHTS: WW = Available worldwide; VoD = Video on demand; M = Mobile; IFE = Inflight; LR = Limited rights, please contact your regional distribution partner. Table of Contents. SCIENCE (title, order number, format, running time): Science Workshop, 262634, Documentary, 7 x 30 min.; The Miraculous Cosmos of the Brain, 264102, Documentary, 13 x 30 min.; Future Now – Innovations Shaping Tomorrow, 244780, Clips, 20 x 5-8 min.; Great Moments in Science and Technology, 244110, Documentary, 98 x 15 min.

Hereas the Accuracy Levels of Models a Significant Impact on Restaurant Performance and That C Copyrigh T © 200 © 2020 Authors

School of Economics & Business, Department of Organisation Management, Marketing and Tourism. Volume 6, Issue 3, 2020. Journal of Tourism, Heritage & Services Marketing. Editor-in-Chief: Evangelos Christou. Journal of Tourism, Heritage & Services Marketing (JTHSM) is an international, open-access, multi-disciplinary, refereed (double blind peer-reviewed) journal aiming to promote and enhance research in tourism, heritage and services marketing, both at macro-economic and at micro-economic level. ISSN: 2529-1947. Terms of use: This document may be saved and copied for your personal and scholarly purposes. This work is protected by intellectual rights license Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0). Free public access to this work is allowed. Any interested party can freely copy and redistribute the material in any medium or format, provided appropriate credit is given to the original work and its creator. This material cannot be remixed, transformed, built upon, or used for commercial purposes. https://creativecommons.org/licenses/by-nc-nd/4.0 www.jthsm.gr Journal of Tourism, Heritage & Services Marketing, Volume 6, Issue 3, 2020. © 2020 Authors. Published by International Hellenic University. ISSN: 2529-1947. UDC: 658.8+338.48+339.1+640(05). Published online: 30 October 2020. Free full-text access available at: www.jthsm.gr Some rights reserved. Except otherwise noted, this work is licensed under https://creativecommons.org/licenses/by-nc-nd/4.0 Journal of Tourism, Heritage & Services Marketing is an open access, international, multi-disciplinary, refereed (double blind peer-reviewed) journal aiming to promote and enhance research at both macro-economic and micro-economic levels of tourism, heritage and services marketing.

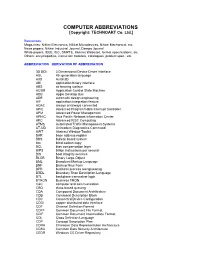

COMPUTER ABBREVIATIONS [Copyright: TECHNOART Co

COMPUTER ABBREVIATIONS [Copyright: TECHNOART Co. Ltd.]

References: Magazines: Nikkei Electronics, Nikkei Microdevices, Nikkei Mechanical, etc. Newspapers: Nikkei Industrial Journal, Dempa Journal. White papers: IEEE, IEC, SMPTE, Internet websites, format specifications, etc. Others: encyclopedias, instruction booklets, catalogues, product specs, etc.

| ABBREVIATION | DERIVATION OF ABBREVIATION |
| --- | --- |
| 3D DDI | 3 Dimensional Device Driver Interface |
| 4GL | 4th generation language |
| A3D | Aural 3D |
| ABI | application binary interface |
| ABS | air bearing surface |
| ACSM | Application Control State Machine |
| ADB | Apple Desktop Bus |
| ADE | automatic design engineering |
| AIF | application integration feature |
| AOAC | always on/always connected |
| APIC | Advanced Programmable Interrupt Controller |
| APM | Advanced Power Management |
| APNIC | Asia Pacific Network Information Center |
| ARC | Advanced RISC Computing |
| ATMS | Automated Traffic Management Systems |
| AT-UD | Unimodem Diagnostics Command |
| AWT | Abstract Window Toolkit |
| BAR | base address register |
| BBS | bulletin board system |
| bcc | blind carbon copy |
| BCL | bias compensation layer |
| BIPS | billion instructions per second |
| BIS | boot integrity services |
| BLOB | Binary Large Object |
| BML | Broadcast Markup Language |
| BNF | Backus Naur Form |
| BPR | business process reengineering |
| BSDL | Boundary Scan Description Language |
| BTL | backplane transceiver logic |
| BTRON | Business TRON |
| C&C | computer and communication |
| CBQ | class-based queuing |
| CDA | Compound Document Architecture |
| CDB | Command Description Block |
| CDC | Connected Device Configuration |
| CDDI | copper distributed data interface |
| CDF | Channel Definition Format |
| CDFF | Common |