Palette-Based Image Decomposition, Harmonization, and Color Transfer

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Color Theory for Painting Video: Color Perception

Color Theory For Painting Video: Color Perception • http://www.ted.com/talks/lang/eng/beau_lotto_optical_illusions_show_how_we_see.html • Experiment • http://www.youtube.com/watch?v=y8U0YPHxiFQ Intro to color theory • http://www.youtube.com/watch?v=059-0wrJpAU&feature=relmfu Color Theory Principles • The Color Wheel • Color context • Color Schemes • Color Applications and Effects The Color Wheel • A circular diagram displaying the spectrum of visible colors. The Color Wheel: Primary Colors • Primary Colors: Red, yellow and blue • In traditional color theory, primary colors cannot be mixed or formed by any combination of other colors. • All other colors are derived from these 3 hues. The Color Wheel: Secondary Colors • Secondary Colors: Green, orange and purple • These are the colors formed by mixing the primary colors. The Color Wheel: Tertiary Colors • Tertiary Colors: Yellow-orange, red-orange, red-purple, blue-purple, blue-green & yellow-green • These are the colors formed by mixing a primary and a secondary color. • Often have a two-word name, such as blue-green, red-violet, and yellow-orange. Color Context • How color behaves in relation to other colors and shapes is a complex area of color theory. Compare the contrast effects of different color backgrounds for the same red square. Color Context • Does your impression of the center square change based on the surround? Additive Colors • Additive: Mixing colored light Subtractive Colors • Subtractive Colors: Mixing colored pigments Color Schemes • Formulas for creating visual unity [often called color harmony] using colors on the color wheel Basic Schemes • Analogous • Complementary • Triadic • Split complement Analogous: Color formula used to create color harmony through the selection of three related colors which are next to one another on the color wheel. -

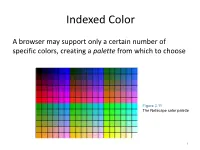

Indexed Color

Indexed Color A browser may support only a certain number of specific colors, creating a palette from which to choose. Figure 3.11 The Netscape color palette QUIZ How many bits are needed to represent this palette? Show your work. How to digitize a picture • Sample it → Represent it as a collection of individual dots called pixels • Quantize it → Represent each pixel as one of 2^24 possible colors (TrueColor) Resolution = The # of pixels used to represent a picture Digitized Images and Graphics Whole picture Figure 3.12 A digitized picture composed of many individual pixels Digitized Images and Graphics Magnified portion of the picture See the pixels? Hands-on: paste the high-res image from the previous slide in Paint, then choose ZOOM = 800 Figure 3.12 A digitized picture composed of many individual pixels QUIZ: Images A low-res image has 200 rows and 300 columns of pixels. • What is the resolution? • If the pixels are represented in True-Color, what is the size of the file? • Same question in High-Color Two types of image formats • Raster Graphics = Storage on a pixel-by-pixel basis • Vector Graphics = Storage in vector (i.e. mathematical) form Raster Graphics GIF format • Each image is made up of only 256 colors (indexed color, similar to palette!) • But they can be a different 256 for each image! • Supports animation! Example • Optimal for line art PNG format (“ping” = Portable Network Graphics) Like GIF but achieves greater compression with wider range of color depth No animations Bitmap format Contains the pixel color -
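The quiz questions in the excerpt above come down to two small calculations, sketched here in Python. The function names are hypothetical, the file size ignores headers and compression, and the 16 bits per pixel used for High-Color is an assumption taken from the usual definition rather than from the slide text.

```python
def bits_for_palette(num_colors: int) -> int:
    """Smallest number of bits whose index range covers num_colors entries."""
    if num_colors < 1:
        raise ValueError("a palette needs at least one color")
    # (n - 1).bit_length() is an exact integer form of ceil(log2(n)).
    return max(1, (num_colors - 1).bit_length())


def raw_image_bytes(rows: int, cols: int, bits_per_pixel: int) -> int:
    """Uncompressed pixel-data size in bytes (no header, no padding)."""
    total_bits = rows * cols * bits_per_pixel
    return (total_bits + 7) // 8  # round up to whole bytes


if __name__ == "__main__":
    # 256-color palette, as used by GIF
    print(bits_for_palette(256))
    # 200 x 300 image in True-Color (24 bits per pixel)
    print(raw_image_bytes(200, 300, 24))
    # Same image in High-Color (assumed 16 bits per pixel)
    print(raw_image_bytes(200, 300, 16))
```

For the 200 by 300 image this gives 60,000 pixels, so the raw pixel data scales linearly with the bit depth chosen.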

A New Steganographic Method for Palette-Based Images

IS&T's 1999 PICS Conference. A New Steganographic Method for Palette-Based Images. Jiri Fridrich, Center for Intelligent Systems, SUNY Binghamton, Binghamton, New York. Abstract: In this paper, we present a new steganographic technique for embedding messages in palette-based images, such as GIF files. The new technique embeds one message bit into one pixel (its pointer to the palette). The pixels for message embedding are chosen randomly using a pseudo-random number generator seeded with a secret key. For each pixel at which one message bit is to be embedded, the palette is searched for closest colors. The closest color with the same parity as the message bit is then used instead of the original color. This has the advantage that both the overall change due to message embedding and the maximal change in colors of pixels is smaller than in methods that perturb the least significant bit of indices to a luminance-sorted palette, such as EZ Stego [1]. Indeed, numerical experiments indicate that the new technique introduces approximately four times [...] messages. The gaps in human visual and audio systems can be used for information hiding. In the case of images, the human eye is relatively insensitive to high frequencies. This fact has been utilized in many steganographic algorithms, which modify the least significant bits of gray levels in digital images or digital sound tracks. Additional bits of information can also be inserted into coefficients of image transforms, such as discrete cosine transform, Fourier transform, etc. Transform techniques are typically more robust with respect to common image processing operations and lossy compression. The steganographer’s job is to make the secretly hidden information difficult to detect given the complete knowledge of the algorithm used to embed the information except the secret embedding key.* This so-called Kerckhoff’s principle is the golden rule of cryptography and is often accepted for steganography as well. -
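The embedding step described in this abstract can be sketched in Python as follows. This is an illustrative reading, not the paper's implementation: the excerpt does not define color parity or the distance measure, so the sketch assumes parity is (R + G + B) mod 2 and distance is squared Euclidean distance in RGB. All function names are hypothetical.

```python
import random


def color_parity(color):
    """Assumed parity of a palette color: (R + G + B) mod 2."""
    return (color[0] + color[1] + color[2]) % 2


def closest_with_parity(palette, index, bit):
    """Index of the palette color nearest to palette[index] whose parity equals bit."""
    target = palette[index]
    candidates = [i for i, c in enumerate(palette) if color_parity(c) == bit]
    if not candidates:
        raise ValueError("palette has no color with the requested parity")
    # Squared Euclidean distance in RGB (an assumption of this sketch).
    return min(candidates,
               key=lambda i: sum((a - b) ** 2 for a, b in zip(palette[i], target)))


def embed(indices, palette, bits, key):
    """Hide one bit per pixel at key-selected positions; returns new index list."""
    if len(bits) > len(indices):
        raise ValueError("message is longer than the number of pixels")
    positions = random.Random(key).sample(range(len(indices)), len(bits))
    stego = list(indices)
    for pos, bit in zip(positions, bits):
        stego[pos] = closest_with_parity(palette, stego[pos], bit)
    return stego


def extract(indices, palette, n_bits, key):
    """Read the hidden bits back by regenerating the same pixel positions."""
    positions = random.Random(key).sample(range(len(indices)), n_bits)
    return [color_parity(palette[indices[p]]) for p in positions]


if __name__ == "__main__":
    palette = [(0, 0, 0), (0, 0, 1), (255, 255, 255), (254, 255, 255)]
    pixels = [0, 2, 1, 3, 0, 2]
    message = [1, 0, 1]
    stego = embed(pixels, palette, message, key=42)
    print(extract(stego, palette, len(message), key=42) == message)
```

A pixel whose color already has the right parity is left unchanged, because its own palette entry is the closest candidate at distance zero.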

Understanding Image Formats and When to Use Them

Understanding Image Formats And When to Use Them. Are you familiar with the extensions after your images? There are so many image formats that it’s easy to get confused! File extensions like .jpeg, .bmp, .gif, and more can be seen after an image’s file name. Most of us disregard them, thinking these image formats have no significance. They are all different and not cross-compatible. Each format has its own pros and cons, and each was created for a specific purpose. What’s the difference, and when is each format appropriate to use? Every graphic you see online is an image file. Most everything you see printed on paper, plastic or a t-shirt came from an image file. These files come in a variety of formats, and each is optimized for a specific use. Using the right type for the right job means your design will come out picture perfect and just how you intended. The wrong format could mean a bad print or a poor web image, a giant download or a missing graphic in an email. Most image files fit into one of two general categories, raster files and vector files, and each category has its own specific uses. This breakdown isn’t perfect. For example, certain formats can actually contain elements of both types. But this is a good place to start when thinking about which format to use for your projects. Raster Images: Raster images are made up of a set grid of dots called pixels where each pixel is assigned a color. -

C-316: a Guide to Color

COLLEGE OF AGRICULTURAL, CONSUMER AND ENVIRONMENTAL SCIENCES. A Guide to Color. Revised by Jennah McKinley. aces.nmsu.edu/pubs • Cooperative Extension Service • Guide C-316. New Mexico State University, All About Discovery!™ The College of Agricultural, Consumer and Environmental Sciences is an engine for economic and community development in New Mexico, improving the lives of New Mexicans through academic, research, and extension programs. Figure 1. Sample color wheel. Color is one of the most important stimuli in the world. It affects our moods and personal characteristics. We speak of blue Mondays, being in the pink, seeing red, and everything coming up roses. Webster defines color as the sensation resulting from stimulating the eye’s retina with light waves of certain wavelengths. Those sensations have been given names such as red, green, and purple. Color communicates. It tells others about you. What determines your choice of colors in your clothing? In your home? In your office? In your car? Your selection of color is influenced by age, personality, experiences, the occasion, the effect of light, size, texture, and a variety of other factors. Some people have misconceptions about color. They may feel certain colors should never be used together, certain colors are always unflattering, or certain colors indicate a person’s character. These ideas will limit their enjoyment of color and can cause them a great deal of frustration in life. To get a better understanding of color, look at nature. Consider these facts: • The prettiest gardens have a wide variety of reds, oranges, pinks, violets, purples, and yellows all mixed together. -

Color Harmonization Daniel Cohen-Or Olga Sorkine Ran Gal Tommer Leyvand Ying-Qing Xu Tel Aviv University∗ Microsoft Research Asia†

Color Harmonization. Daniel Cohen-Or, Olga Sorkine, Ran Gal, Tommer Leyvand, Ying-Qing Xu. Tel Aviv University∗, Microsoft Research Asia†. Figure 1 (original image, harmonized image): Harmonization in action. Our algorithm changes the colors of the background image to harmonize them with the foreground. Abstract: Harmonic colors are sets of colors that are aesthetically pleasing in terms of human visual perception. In this paper, we present a method that enhances the harmony among the colors of a given photograph or of a general image, while remaining faithful, as much as possible, to the original colors. Given a color image, our method finds the best harmonic scheme for the image colors. It then allows a graceful shifting of hue values so as to fit the harmonic scheme while considering spatial coherence among colors of neighboring pixels using an optimization technique. The results demonstrate that our method is capable of automatically enhancing the color [...] colors are sets of colors that hold some special internal relationship that provides a pleasant visual perception. Harmony among colors is not determined by specific colors, but rather by their relative position in color space. Generating harmonic colors has been an open problem among artists and scientists [Holtzschue 2002]. Munsell [1969] and Goethe [1971] have defined color harmony as balance, in an effort to transfer the concept of color harmony from a subjective perspective to an objective one. Although currently there is no formulation that defines a harmonic set, there is a consensus among artists that defines when a set is harmonic, and there are some forms, schemes and relations in color space that describe a harmony of colors [Matsuda 1995; Tokumaru et al. -

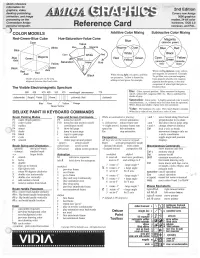

Amiga Graphics Reference Card 2Nd Edition

Reference Card, 2nd Edition. Quick reference information for graphics, video, desktop publishing, animation, and image processing on the Commodore Amiga personal computer. Covers new Amiga 3000 graphics modes, 24-bit color hardware, DOS 2.0 overscan, and PAL. COLOR MODELS. Additive Color Mixing: When mixing light, red, green, and blue are primaries. Yellow is formed by adding red and green, for example. Subtractive Color Mixing: When mixing pigments, cyan, yellow, and magenta are primaries. Example: To get blue, mix cyan and magenta. Cyan pigment absorbs red, magenta pigment absorbs green, so the only component of white light that gets reflected is blue. Red-Green-Blue Cube: Shades of gray are on the long diagonal between black and white. [Figure: The Visible Electromagnetic Spectrum, wavelength in nanometers from 380 (ultraviolet end) to 770 (infrared end), with tick marks at 420, 470, 495, 535 and 575.] Hue-Saturation-Value Cone. Hue: Color; spectral position. Often measured in degrees; red=0°, yellow=60°, magenta=300°, etc. Hue is undefined for shades of gray. Saturation: Color purity. A highly-saturated color is nearly monochromatic, i.e., contains only one color from the spectrum. White, black, and shades of gray have zero saturation. Value: The darkness of a color. How much black it contains. White has a value of one, black has a value of zero. DELUXE PAINT III KEYBOARD COMMANDS: Brush Painting Modes, Page and Screen Commands. While an animation is playing: ; ana, move brush along fixed axis -
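The hue, saturation and value figures quoted on the card can be checked with Python's standard `colorsys` module, which returns hue as a fraction of a full turn. The helper name `hsv_degrees` is hypothetical, and the rounding is only there to hide floating-point noise.

```python
import colorsys


def hsv_degrees(r: float, g: float, b: float):
    """Convert RGB components in [0, 1] to (hue in degrees, saturation, value)."""
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return round(h * 360.0, 6), s, v


if __name__ == "__main__":
    for name, rgb in [("red", (1.0, 0.0, 0.0)),
                      ("yellow", (1.0, 1.0, 0.0)),
                      ("magenta", (1.0, 0.0, 1.0)),
                      ("gray", (0.5, 0.5, 0.5)),
                      ("white", (1.0, 1.0, 1.0)),
                      ("black", (0.0, 0.0, 0.0))]:
        print(name, hsv_degrees(*rgb))
```

For grays, `colorsys` reports a hue of 0.0 by convention; as the card notes, hue is really undefined there, and the zero saturation is the meaningful part of the result.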

Color Harmony: Experimental and Computational Modeling

Color harmony: experimental and computational modeling. Christel Chamaret. To cite this version: Christel Chamaret. Color harmony: experimental and computational modeling. Image Processing [eess.IV]. Université Rennes 1, 2016. English. NNT: 2016REN1S015. tel-01382750. HAL Id: tel-01382750, https://tel.archives-ouvertes.fr/tel-01382750. Submitted on 17 Oct 2016. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Year 2016. Thesis / Université de Rennes 1, under the seal of the Université Bretagne Loire, for the degree of Doctor of the Université de Rennes 1. Specialization: Computer Science. Doctoral school: Matisse. Presented by Christel Chamaret, prepared at IRISA (Institut de recherches en informatique et systèmes aléatoires) and Technicolor. Thesis title: Color Harmony: experimental and computational modeling. Defended in Rennes on 28 April 2016 before a jury composed of: Pr Alain Trémeau, Professor, Université de Saint-Etienne / reviewer; Dr Vincent Courboulay, Maître de conférences HDR, Université de La Rochelle / reviewer; Pr Patrick Le Callet, Professor, Université de Nantes / examiner; Dr Frederic Devinck, Maître de conférences HDR, Université de Rennes 2 / examiner; Pr Luce Morin, Professor, INSA Rennes / examiner; Dr Olivier Le Meur, Maître de conférences HDR, Université de Rennes 1 / thesis supervisor. Abstract: While the consumption of digital media exploded in the last decade, consequent improvements happened in the area of medical imaging, leading to a better understanding of vision mechanisms. -

The Rendering Pipeline

The Rendering Pipeline Framebuffers • Framebuffer is the interface between the device and the computer’s notion of an image • A memory array in which the computer stores an image – On most computers, separate memory bank from main memory – Many different variations, motivated by cost of memory Framebuffers: True-Color • A true-color (aka 24-bit or 32-bit) framebuffer stores one byte each for red, green, and blue • Each pixel can thus be one of 2^24 colors • Pay attention to endianness • How can 24-bit and 32-bit mean the same thing here? Framebuffers: Indexed-Color • An indexed-color (8-bit or PseudoColor) framebuffer stores one byte per pixel (also: GIF image format) • This byte indexes into a color map • How many colors can a pixel be? • Common on low-end displays (cell phones, PDAs, GameBoys) Framebuffers: Indexed Color Illustration of how an indexed palette works: A 2-bit indexed-color image. The color of each pixel is represented by a number; each number corresponds to a color in the palette. Image credits: Wikipedia Framebuffers: Hi-Color • Hi-Color is (was?) a popular PC SVGA standard • Packs pixels into 16 bits: – 5 Red, 6 Green, 5 Blue (why would green get more?) – Sometimes just 5,5,5 • Each pixel can be one of 2^16 colors • Hi-color images can exhibit worse quantization artifacts than a well-mapped 8-bit image Color Quantization • A process that reduces the number of distinct colors used in an image • Intention that the new image should be as visually similar as possible to the original image Image credits: Wikipedia The Rendering -
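The 5-6-5 Hi-Color packing mentioned in the slides can be sketched in Python as below. The bit order (red in the top five bits, blue in the bottom five) is a common convention assumed here, not something the slides specify, and the function names are hypothetical.

```python
def pack_rgb565(r: int, g: int, b: int) -> int:
    """Pack 8-bit R, G, B into one 16-bit value: 5 bits red, 6 green, 5 blue."""
    # Dropping the low bits of each channel is the quantization step.
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def unpack_rgb565(pixel: int):
    """Expand a 16-bit 5-6-5 pixel back to 8-bit channels by bit replication."""
    r5 = (pixel >> 11) & 0x1F
    g6 = (pixel >> 5) & 0x3F
    b5 = pixel & 0x1F
    # Replicating the high bits into the low bits maps full scale to 255.
    return ((r5 << 3) | (r5 >> 2), (g6 << 2) | (g6 >> 4), (b5 << 3) | (b5 >> 2))


if __name__ == "__main__":
    print(hex(pack_rgb565(255, 0, 0)))   # pure red
    print(unpack_rgb565(0xFFFF))         # white round-trips to full scale
    print(2 ** 24, 2 ** 16)              # True-Color vs Hi-Color color counts
```

Round-tripping an arbitrary color through these two functions generally does not return the original values, which is the quantization loss the slides refer to.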

AN5072: Introduction to Embedded Graphics – Application Note

Freescale Semiconductor Application Note, Document Number: AN5072, Rev 0, 02/2015. Introduction to Embedded Graphics with Freescale Devices, by: Luis Olea and Ioseph Martinez. 1 Introduction: The purpose of this application note is to explain the basic concepts and requirements of embedded graphics applications, helping software and hardware engineers easily understand the fundamental concepts behind color display technology, which is being expected by consumers more and more in varied applications from automotive to industrial markets. This section helps the reader to familiarize with some of the most used concepts when working with graphics. Knowing these definitions is basic for any developer that wants to get started with embedded graphics systems, and these concepts apply to any system that handles graphics irrespective of the hardware used. Contents: 1 Introduction; 1.1 Graphics basic concepts; 2 Freescale graphics devices; 2.1 MPC5606S; 2.2 MPC5645S; 2.3 Vybrid; 2.4 MAC57Dxx; 2.5 Kinetis K70; 2.6 QorIQ LS1021A; 2.7 i.MX family; 2.8 Quick -

Lynx Express 2D Multimedia Mobile Display Controller Datasheet

LYNX FAMILY SM750 Lynx Express 2D Multimedia Mobile Display Controller Datasheet. Revision: 1.4. Updated: September 27, 2012. Notice: Silicon Motion, Inc. has made its best efforts to ensure that the information contained in this document is accurate and reliable. However, the information is subject to change without notice. No responsibility is assumed by Silicon Motion, Inc. for the use of this information, nor for infringements of patents or other rights of third parties. Copyright Notice: Copyright 2012, Silicon Motion, Inc. All rights reserved. No part of this publication may be reproduced, photocopied, or transmitted in any form, without the prior written consent of Silicon Motion, Inc. Silicon Motion, Inc. reserves the right to make changes to the product specification without reservation and without notice to our users.

| Revision No. | Date | Note |
| --- | --- | --- |
| 0.1 | June 2009 | First release |
| 0.2 | Dec 2009 | Second release |
| 0.3 | April 2010 | Third release |
| 0.4 | June 2010 | Fourth release |
| 0.5 | Dec 2010 | Design CD update |
| 1.0 | May 2011 | Add marking and ordering information |
| 1.1 | Aug 2011 | Update Figure 12, the ending address of the 2D Engine Data Port changed to “0x120000” from “0x150000”; update CSR08 register descriptions and power-on default value; add MVDD and MVDD2 DC characteristics in Table 18 and Table 19 |
| 1.2 | Jan 2012 | Added Standard VGA Register in 2.3 |
| 1.3 | Jun 2012 | Updated Top Marking in 13.2; updated Product Ordering Information in 14 |
| 1.4 | Sep 2012 | Fixed bit names in 2.2, 3.2, 4.1, 6.2, 7, and 9.3 |

All rights strictly reserved. -

Getting Started

Getting Started, Version 3.0. Getting Started with PMView Pro. Installation & Setup: If you are reading this file, you have likely already been through the installation process of PMView Pro. This section is intended to guide you through any subsequent installations and further setup procedures necessary to ensure PMView Pro is working properly on your system. Starting PMView Pro: Starting PMView Pro can be as easy as double-clicking any image file. You were given the option during installation to install a shortcut to the desktop and/or the Start Menu as well. On the Start Menu, you will also notice that you can access this file, the Online Help file and PMView itself. PMView Pro also includes potentially unlimited startup options when started using the combination of command line options and script files. You can learn more about command line options and script files from the included help file. File Associations: A common issue that may arise at some point after the installation of PMView Pro is the loss of file associations. You will recall that during installation you were presented with a list of file formats to associate with PMView. Even if you selected all formats, other programs may adjust those settings during their installation. The easiest way to have PMView reclaim those associations is to run the PMView Pro installation file again. If you only need to reclaim one or several of those associations, you can do this through the Windows operating system settings. By opening Windows Explorer from the Start Menu and selecting Tools->Folder Options, you will be able to access the system File Type settings.