Differentiable GIF Encoding Framework

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Indexed Color

Indexed Color A browser may support only a certain number of specific colors, creating a palette from which to choose Figure 3.11 The Netscape color palette 1 QUIZ How many bits are needed to represent this palette? Show your work. 2 How to digitize a picture • Sample it → Represent it as a collection of individual dots called pixels • Quantize it → Represent each pixel as one of 224 possible colors (TrueColor) Resolution = The # of pixels used to represent a picture 3 Digitized Images and Graphics Whole picture Figure 3.12 A digitized picture composed of many individual pixels 4 Digitized Images and Graphics Magnified portion of the picture See the pixels? Hands-on: paste the high-res image from the previous slide in Paint, then choose ZOOM = 800 Figure 3.12 A digitized picture composed of many individual pixels 5 QUIZ: Images A low-res image has 200 rows and 300 columns of pixels. • What is the resolution? • If the pixels are represented in True-Color, what is the size of the file? • Same question in High-Color 6 Two types of image formats • Raster Graphics = Storage on a pixel-by-pixel basis • Vector Graphics = Storage in vector (i.e. mathematical) form 7 Raster Graphics GIF format • Each image is made up of only 256 colors (indexed color – similar to palette!) • But they can be a different 256 for each image! • Supports animation! Example • Optimal for line art PNG format (“ping” = Portable Network Graphics) Like GIF but achieves greater compression with wider range of color depth No animations 8 Bitmap format Contains the pixel color -

'" )Timizing Images ) ~I

k ' Anyone wlio linn IIVIII IIMd tliu Wub hns li kely 11H I11tl liy ulow lontlin g Wob sites. It's ', been fn1 11 the ft 1111 cl nlli{lll n1 mlllllll wh111 o th o fil o si of t/11 1 f /11tpli1 LII lt ill llliltl lltt 111t n huw fnst Sllll/ 111 111 11 I 1111 VIIIW 11 111 111 , Mllki l1(1 111't1 11 11 7 W11 1! 1/lllpliiL" 111 llll lllillnti lltlllllltll>ll. 1 l iitllllllllnl 111111 '" )timizing Images y, / l1111 11 11 11111' Lil'J C:ii') I1111 Vi llll ihllillnn l 111 1111 / 11 / ~il/ 11/ y 11111111 In l1 nlp Yll ll IIIIIMIIII IIII I/ 1 11111 I Optimizing JPEGs I 1 I Selective JPEG Optimization with Alpha Channels I l 11 tp111 11 1111 II 1111 111 I 1111 !11 11 1 IIIII nlllll I Optimizing GIFs I Choosing the Right Color Reduction Palette I lljllillll/11 111111 Ill II lillllljliiiX 111 111/r ll I lilnl illllli l 'lilltlllllllip (;~,:; 111 111 I Reducing Colors I Locking Colors I Selective G/F Optimization I illtnrlln lll ll" lllt l" "'ll" i ~IIIH i y C}l •; I Previewing Images in a Web Browser I llnt11tl. II 111 111/ M ullt.li 11 n tlttlmt, 1111111' I Optimizing G/Fs and JPEGs in lmageReady CS2 I IlVII filllll/11111, /111 t/11/l lh, }/ '/ CJ, lind ) If unlnmdtltl In yn tl, /hoy won't chap_ OJ 11111 bo lo1 ""'II· II yo 11'1 11 n pw nt oplimi1 PCS2Web HOT CD-ROM I ing Wol1 IJIIipluc;u, you'll bo impronml d by th o llllpOI'b optimiw ti on cupnbilitlos in ,,, Photoshop CS7 und lmng oRondy CS?. -

A Comprehensive Benchmark for Single Image Compression Artifact Reduction

IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 29, 2020 7845 A Comprehensive Benchmark for Single Image Compression Artifact Reduction Jiaying Liu , Senior Member, IEEE, Dong Liu , Senior Member, IEEE, Wenhan Yang , Member, IEEE, Sifeng Xia , Student Member, IEEE, Xiaoshuai Zhang , and Yuanying Dai Abstract— We present a comprehensive study and evaluation storage processes to save bandwidth and resources. Based on of existing single image compression artifact removal algorithms human visual properties, the codecs make use of redundan- using a new 4K resolution benchmark. This benchmark is called cies in spatial, temporal, and transform domains to provide the Large-Scale Ideal Ultra high-definition 4K (LIU4K), and it compact approximations of encoded content. They effectively includes including diversified foreground objects and background reduce the bit-rate cost but inevitably lead to unsatisfactory scenes with rich structures. Compression artifact removal, as a visual artifacts, e.g. blockiness, ringing, and banding. These common post-processing technique, aims at alleviating undesir- able artifacts, such as blockiness, ringing, and banding caused artifacts are derived from the loss of high-frequency details by quantization and approximation in the compression process. during the quantization process and the discontinuities caused In this work, a systematic listing of the reviewed methods is by block-wise batch processing. These artifacts not only presented based on their basic models (handcrafted models and degrade user visual experience, but they are also detrimental deep networks). The main contributions and novelties of these for successive image processing and computer vision tasks. methods are highlighted, and the main development directions In our work, we focus on the degradation of compressed are summarized, including architectures, multi-domain sources, images. -

A New Steganographic Method for Palette-Based Images

IS&T's 1999 PICS Conference A New Steganographic Method for Palette-Based Images Jiri Fridrich Center for Intelligent Systems, SUNY Binghamton, Binghamton, New York Abstract messages. The gaps in human visual and audio systems can be used for information hiding. In the case of images, the In this paper, we present a new steganographic technique human eye is relatively insensitive to high frequencies. This for embedding messages in palette-based images, such as fact has been utilized in many steganographic algorithms, GIF files. The new technique embeds one message bit into which modify the least significant bits of gray levels in one pixel (its pointer to the palette). The pixels for message digital images or digital sound tracks. Additional bits of embedding are chosen randomly using a pseudo-random information can also be inserted into coefficients of image number generator seeded with a secret key. For each pixel transforms, such as discrete cosine transform, Fourier at which one message bit is to be embedded, the palette is transform, etc. Transform techniques are typically more searched for closest colors. The closest color with the same robust with respect to common image processing operations parity as the message bit is then used instead of the original and lossy compression. color. This has the advantage that both the overall change The steganographer’s job is to make the secretly hidden due to message embedding and the maximal change in information difficult to detect given the complete colors of pixels is smaller than in methods that perturb the knowledge of the algorithm used to embed the information least significant bit of indices to a luminance-sorted palette, except the secret embedding key.* This so called such as EZ Stego.1 Indeed, numerical experiments indicate Kerckhoff’s principle is the golden rule of cryptography and that the new technique introduces approximately four times is often accepted for steganography as well. -

Understanding Image Formats and When to Use Them

Understanding Image Formats And When to Use Them Are you familiar with the extensions after your images? There are so many image formats that it’s so easy to get confused! File extensions like .jpeg, .bmp, .gif, and more can be seen after an image’s file name. Most of us disregard it, thinking there is no significance regarding these image formats. These are all different and not cross‐ compatible. These image formats have their own pros and cons. They were created for specific, yet different purposes. What’s the difference, and when is each format appropriate to use? Every graphic you see online is an image file. Most everything you see printed on paper, plastic or a t‐shirt came from an image file. These files come in a variety of formats, and each is optimized for a specific use. Using the right type for the right job means your design will come out picture perfect and just how you intended. The wrong format could mean a bad print or a poor web image, a giant download or a missing graphic in an email Most image files fit into one of two general categories—raster files and vector files—and each category has its own specific uses. This breakdown isn’t perfect. For example, certain formats can actually contain elements of both types. But this is a good place to start when thinking about which format to use for your projects. Raster Images Raster images are made up of a set grid of dots called pixels where each pixel is assigned a color. -

Dithering and Quantization of Audio and Image

Dithering and Quantization of audio and image Maciej Lipiński - Ext 06135 1. Introduction This project is going to focus on issue of dithering. The main aim of assignment was to develop a program to quantize images and audio signals, which should add noise and to measure mean square errors, comparing the quality of the quantized images with and without noise. The program realizes fallowing: - quantize an image or audio signal using n levels (defined by the user); - measure the MSE (Mean Square Error) between the original and the quantized signals; - add uniform noise in [-d/2,d/2], where d is the quantization step size, using n levels; - quantify the signal (image or audio) after adding the noise, using n levels (user defined); - measure the MSE by comparing the noise-quantized signal with the original; - compare results. The program shows graphic result, presenting original image/audio, quantized image/audio and quantized with dither image/audio. It calculates and displays the values of MSE – mean square error. 2. DITHERING Dither is a form of noise, “erroneous” signal or data which is intentionally added to sample data for the purpose of minimizing quantization error. It is utilized in many different fields where digital processing is used, such as digital audio and images. The quantization and re-quantization of digital data yields error. If that error is repeating and correlated to the signal, the error that results is repeating. In some fields, especially where the receptor is sensitive to such artifacts, cyclical errors yield undesirable artifacts. In these fields dither is helpful to result in less determinable distortions. -

Determining the Need for Dither When Re-Quantizing a 1-D Signal Carlos Fabián Benítez-Quiroz and Shawn D

µ ˆ q Determining the Need for Dither when Re-Quantizing a 1-D Signal Carlos Fabián Benítez-Quiroz and Shawn D. Hunt Electrical and Computer Engineering Department University of Puerto Rico, Mayagüez Campus ABSTRACT Test In The Frequency Domain Another test signal was used real audio with 24 bit precision and a 44.1 kHz sampling rate. The tests were This poster presents novel methods for determining if dither applied and the percentage of accepted frames is shown in The test in frequency is based on the Fourier transform of the sample figure 3 is needed when reducing the bit depth of a one dimensional autocorrelation. The statistic used is the square of the norm of the DFT digital signal. These are statistical based methods in both the multiplied by scale factor. This is also summarized in Table 1. time and frequency domains, and are based on determining Experiments were also run after adding dither. Because of whether the quantization noise with no dither added is white. Table 1. Summary of the statistics used in the tests. the added dither, all tests should reveal that no additional If this is the case, then no undesired harmonics are added in dither is needed. For the tests, segments from the previous the quantization or re-quantization process. Experiments Test Domain Estimator used Distribution Summary of experiment that are known to need dither are dithered and showing the effectiveness of the methods with both synthetic of Estimator Method these used as the test signal. and real one dimensional signals are presented. Box-Pierce Time Say the quantization Test m error is white when Introduction = 2 χ2 Q N r Q ~ m Qbp is greater than a bp k =1 k bp Digital signals have become widespread, and are favored in set threshold. -

Amiga Graphics Reference Card 2Nd Edition

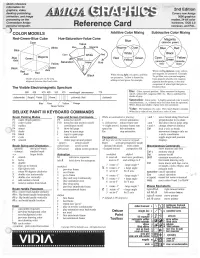

Quick reference information for graphics, video, 2nd Edition desktop publishing, Covers new Amiga animation, and image 3000 graphics processing on the modes, 24-bit color Commodore Amiga hardware, DOS 2.0 personal computer. Reference Card overscan, and PAL. ) COLOR MODELS Additive Color Mixing Subtractive Color Mixing Red-Green-Blue Cube Hue-Saturation-Value Cone Cyan Value White Blue Blue] Axis Yellow Black ~reen Axis When mixi'hgpigments, cyan, yellow, Red When mixing light, red, green, and blue and magenta are primaries. Example: are primaries. Yell ow is formed by To get blue, mix cyan and magenta. Shades of gray are on the long Cyan pigment absorbs red, magenta adding red and green, for example. diagonal between black and white. pigment absorbs green, so the only component of white light that gets re The Visible Electromagnetic Spectrum flected is blue. 380 420 470 495 535 575 wavelength (nanometers) 770 Hue: Color; spectral position. Often measured in degrees; red=0°, yellow=60°, magenta=300°, etc. Hue is undefined for shades of gray. (ultraviolet) Purple Violet yellowish Red (infrared) Saturation: Color purity. A highly-saturated color is nearly Blue Orange monochromatic, i.e., contains only one color from the spectrum. White, black, and shades of gray have zero saturation. Value: The darkness of a color. How much black it contain's. DELUXE PAINT Ill KEYBOARD COMMANDS White has a value of one, black has a value of zero. Brush Painting Modes Page and Screen Commands While an animation is playing: ; ana, move brush along fixed axis -

The Theory of Dithered Quantization

The Theory of Dithered Quantization by Robert Alexander Wannamaker A thesis presented to the University of Waterloo in fulfilment of the thesis requirement for the degree of Doctor of Philosophy in Applied Mathematics Waterloo, Ontario, Canada, 2003 c Robert Alexander Wannamaker 2003 I hereby declare that I am the sole author of this thesis. I authorize the University of Waterloo to lend this thesis to other institutions or individuals for the purpose of scholarly research. I further authorize the University of Waterloo to reproduce this thesis by pho- tocopying or by other means, in total or in part, at the request of other institutions or individuals for the purpose of scholarly research. ii The University of Waterloo requires the signatures of all persons using or pho- tocopying this thesis. Please sign below, and give address and date. iii Abstract A detailed mathematical model is presented for the analysis of multibit quantizing systems. Dithering is examined as a means for eliminating signal-dependent quanti- zation errors, and subtractive and non-subtractive dithered systems are thoroughly explored within the established theoretical framework. Of primary interest are the statistical interdependences of signals in dithered systems and the spectral proper- ties of the total error produced by such systems. Regarding dithered systems, many topics of practical interest are explored. These include the use of spectrally shaped dithers, dither in noise-shaping systems, the efficient generation of multi-channel dithers, and the uses of discrete-valued dither signals. iv Acknowledgements I would like to thank my supervisor, Dr. Stanley P. Lipshitz, for giving me the opportunity to pursue this research and for the valuable guidance which he has provided throughout my time with the Audio Research Group. -

Learning a Virtual Codec Based on Deep Convolutional Neural

JOURNAL OF LATEX CLASS FILES 1 Learning a Virtual Codec Based on Deep Convolutional Neural Network to Compress Image Lijun Zhao, Huihui Bai, Member, IEEE, Anhong Wang, Member, IEEE, and Yao Zhao, Senior Member, IEEE Abstract—Although deep convolutional neural network has parameters are always assigned to the codec in order to been proved to efficiently eliminate coding artifacts caused by achieve low bit-rate coding leading to serious blurring and the coarse quantization of traditional codec, it’s difficult to ringing artifacts [4–6], when the transmission band-width is train any neural network in front of the encoder for gradient’s back-propagation. In this paper, we propose an end-to-end very limited. In order to alleviate the problem of Internet image compression framework based on convolutional neural transmission congestion [7], advanced coding techniques, network to resolve the problem of non-differentiability of the such as de-blocking, and post-processing [8], are still the hot quantization function in the standard codec. First, the feature and open issues to be researched. description neural network is used to get a valid description in The post-processing technique for compressed image can the low-dimension space with respect to the ground-truth image so that the amount of image data is greatly reduced for storage be explicitly embedded into the codec to improve the coding or transmission. After image’s valid description, standard efficiency and reduce the artifacts caused by the coarse image codec such as JPEG is leveraged to further compress quantization. For instance, adaptive de-blocking filtering is image, which leads to image’s great distortion and compression designed as a loop filter and integrated into H.264/MPEG-4 artifacts, especially blocking artifacts, detail missing, blurring, AVC video coding standard [9], which does not require an and ringing artifacts. -

Digital Dither: Decreasing Round-Off Errors in Digital Signal Processing

Digital Dither: Decreasing Round-off Errors in Digital Signal Processing Peter Csordas ,Andras Mersich, Istvan Kollar Budapest University of Technology and Economics Department of Measurement and Information Systems [email protected] , ma35 1 @hszk.bme.hu , [email protected] mt- Difhering is a known method for increasing the after each arithmetic operation (round- off error). The precision of analog-to-digital conversion. In this paper the basic situation is quite different from the above one, since the result problems of quantization and the principles of analog dithering of any arithmetic operation is already discrete in amplitude, are described. The benefits of dithering are demonstrated through even its distribution is usually non-standard. Therefore, practical applications. The possibility and the conditions of using quantization of quantized signals needs to be analyzed. This digital dither in practical applications are eramined. The aim is to cannot be bound to a certain processing unit in the DSP, but find an optimal digifal dither to minimize the round-offerror of digilal signal processing. The effecfs on bias and variance using happens simply during operation and/or during storage of the uniform and triangularly distributed digital dithers are result into memory cells. Moreover, since the signal to be investigated and compared Some DSP iniplenientations are quantized is in digital form, only digital dither can be applied. discussed. and only if additional bits are available to make it possible to use. The method has already been discussed for example in Kevwords - digital dither, round-off error, bias. variance [4], but exact analysis has not been published yet. -

Sonically Optimized Noise Shaping Techniques

Sonically Optimized Noise Shaping Techniques Second edition Alexey Lukin [email protected] 2002 In this article we compare different commercially available noise shaping algo- rithms and introduce a new highly optimized frequency response curve for noise shap- ing filter implemented in our ExtraBit Mastering Processor. Contents INTRODUCTION ......................................................................................................................... 2 TESTED NOISE SHAPERS ......................................................................................................... 2 CRITERIONS OF COMPARISON............................................................................................... 3 RESULTS...................................................................................................................................... 4 NOISE STATISTICS & PERCEIVED LOUDNESS .............................................................................. 4 NOISE SPECTRUMS ..................................................................................................................... 5 No noise shaping (TPDF dithering)..................................................................................... 5 Cool Edit (C1 curve) ............................................................................................................5 Cool Edit (C3 curve) ............................................................................................................6 POW-R (pow-r1 mode)........................................................................................................