CS 61C: Great Ideas in Computer Architecture Lecture 13: Pipelining

Recommended publications

MIPS Architecture

Spring 2011, Prof. Hyesoon Kim

MIPS Architecture
• MIPS (Microprocessor without Interlocked Pipeline Stages)
• MIPS Computer Systems Inc.
• Developed from Stanford
• MIPS architecture usages
• 1990s – R2000, R3000, R4000, Motorola 68000 family
• PlayStation, PlayStation 2, Sony PSP handheld, Nintendo 64 console
• Android
• Shift to SoC
http://en.wikipedia.org/wiki/MIPS_architecture

• MIPS R4000 CPU core
• Floating point and vector floating point co-processors
• 3D-CG extended instruction sets
• Graphics
– 3D curved surface and other 3D functionality
– Hardware clipping, compressed texture handling
• R4300 (embedded version)
– Nintendo 64
http://www.digitaltrends.com/gaming/sony-announces-playstation-portable-specs/

Not yet out
• Google TV: an Android-based software service that lets users switch between their TV content and Web applications such as Netflix and Amazon Video on Demand
• Google TV: search capabilities
• High stream data?
• Internet accesses?
• Multi-threading, SMP design
• High graphics processors
• Several CODECs
– Hardware vs. software
• Displaying frame buffer, e.g., 1080p
– Resolution: 1920 (H) x 1080 (V)
– Color depth: 4 bytes/pixel
– 4 * 1920 * 1080 ≈ 8.3 MB
– 8.3 MB * 60 Hz = 498 MB/sec

• Started from 32-bit
• Later 64-bit
• microMIPS: 16-bit compression version (similar to ARM Thumb)
• SIMD additions – 64-bit floating points
• User Defined Instructions (UDIs) coprocessors
• All self-modified code
• Allow unaligned accesses
http://www.spiritus-temporis.com/mips-architecture/

• 32 64-bit general purpose registers (GPRs)
• A pair of special-purpose registers to hold the results of integer multiply, divide, and multiply-accumulate operations (HI and LO)
– HI: Multiply and Divide register higher result
– LO: Multiply and Divide register lower result
• A special-purpose program counter (PC)
• A MIPS64 processor always produces a 64-bit result
• 32 floating point registers (FPRs)
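The frame-buffer arithmetic quoted in the MIPS Architecture excerpt above can be checked with a few lines of Python. This is only a sketch: the function names are illustrative and do not come from the slides, and "MB" is assumed to mean 10^6 bytes.

```python
# Recompute the 1080p frame-buffer figures quoted in the excerpt.
# Assumption: "MB" means 10**6 bytes; the function names are illustrative.


def frame_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Size of one full frame in bytes."""
    return width * height * bytes_per_pixel


def scanout_bytes_per_second(width: int, height: int,
                             bytes_per_pixel: int, refresh_hz: int) -> int:
    """Bytes read per second to refresh the display at refresh_hz."""
    return frame_bytes(width, height, bytes_per_pixel) * refresh_hz


if __name__ == "__main__":
    frame = frame_bytes(1920, 1080, 4)                   # 8,294,400 bytes
    rate = scanout_bytes_per_second(1920, 1080, 4, 60)   # 497,664,000 bytes/s
    print(f"frame size: {frame / 1e6:.1f} MB")
    print(f"scan-out bandwidth: {rate / 1e6:.0f} MB/s")
```

Working from the exact frame size gives roughly 497.7 MB/s; the excerpt's 498 MB/s comes from rounding the frame size to 8.3 MB before multiplying by 60.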

SuperH RISC Engine SH-1/SH-2

SuperH RISC Engine SH-1/SH-2 Programming Manual September 3, 1996 Hitachi America Ltd. Notice When using this document, keep the following in mind: 1. This document may, wholly or partially, be subject to change without notice. 2. All rights are reserved: No one is permitted to reproduce or duplicate, in any form, the whole or part of this document without Hitachi’s permission. 3. Hitachi will not be held responsible for any damage to the user that may result from accidents or any other reasons during operation of the user’s unit according to this document. 4. Circuitry and other examples described herein are meant merely to indicate the characteristics and performance of Hitachi’s semiconductor products. Hitachi assumes no responsibility for any intellectual property claims or other problems that may result from applications based on the examples described herein. 5. No license is granted by implication or otherwise under any patents or other rights of any third party or Hitachi, Ltd. 6. MEDICAL APPLICATIONS: Hitachi’s products are not authorized for use in MEDICAL APPLICATIONS without the written consent of the appropriate officer of Hitachi’s sales company. Such use includes, but is not limited to, use in life support systems. Buyers of Hitachi’s products are requested to notify the relevant Hitachi sales offices when planning to use the products in MEDICAL APPLICATIONS. Introduction The SuperH RISC engine family incorporates a RISC (Reduced Instruction Set Computer) type CPU. A basic instruction can be executed in one clock cycle, realizing high performance operation. A built-in multiplier can execute multiplication and addition as quickly as DSP. -

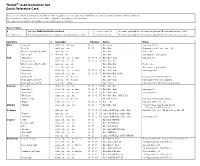

Thumb® 16-Bit Instruction Set Quick Reference Card

Thumb® 16-bit Instruction Set Quick Reference Card

This card lists all Thumb instructions available on Thumb-capable processors earlier than ARM®v6T2. In addition, it lists all Thumb-2 16-bit instructions. The instructions shown on this card are all 16-bit in Thumb-2, except where noted otherwise. All registers are Lo (R0-R7) except where specified. Hi registers are R8-R15.

Key to Tables
• §: See Table ARM architecture versions.
• <loreglist+LR>: A comma-separated list of Lo registers, plus the LR, enclosed in braces, { and }.
• <loreglist>: A comma-separated list of Lo registers, enclosed in braces, { and }.
• <loreglist+PC>: A comma-separated list of Lo registers, plus the PC, enclosed in braces, { and }.

| Operation | Variant | § | Assembler | Updates | Action | Notes |
|---|---|---|---|---|---|---|
| Move | Immediate | | MOVS Rd, #<imm> | N Z | Rd := imm | imm range 0-255. |
| | Lo to Lo | | MOVS Rd, Rm | N Z | Rd := Rm | Synonym of LSLS Rd, Rm, #0 |
| | Hi to Lo, Lo to Hi, Hi to Hi | | MOV Rd, Rm | | Rd := Rm | Not Lo to Lo. |
| | Any to Any | 6 | MOV Rd, Rm | | Rd := Rm | Any register to any register. |
| Add | Immediate 3 | | ADDS Rd, Rn, #<imm> | N Z C V | Rd := Rn + imm | imm range 0-7. |
| | All registers Lo | | ADDS Rd, Rn, Rm | N Z C V | Rd := Rn + Rm | |
| | Hi to Lo, Lo to Hi, Hi to Hi | | ADD Rd, Rd, Rm | | Rd := Rd + Rm | Not Lo to Lo. |
| | Any to Any | T2 | ADD Rd, Rd, Rm | | Rd := Rd + Rm | Any register to any register. |
| | Immediate 8 | | ADDS Rd, Rd, #<imm> | N Z C V | Rd := Rd + imm | imm range 0-255. |

Readingsample

Embedded Robotics: Mobile Robot Design and Applications with Embedded Systems. By Thomas Bräunl. New edition 2008. Paperback. xiv, 546 pp. ISBN 978 3 540 70533 8. Format (W x L): 17 x 24.4 cm. Weight: 1940 g.

CENTRAL PROCESSING UNIT

The CPU (central processing unit) is the heart of every embedded system and every personal computer. It comprises the ALU (arithmetic logic unit), responsible for the number crunching, and the CU (control unit), responsible for instruction sequencing and branching. Modern microprocessors and microcontrollers provide on a single chip the CPU and a varying degree of additional components, such as counters, timing coprocessors, watchdogs, SRAM (static RAM), and Flash-ROM (electrically erasable ROM). Hardware can be described on several different levels, from low-level transistor-level to high-level hardware description languages (HDLs). The so-called register-transfer level is somewhat in-between, describing CPU components and their interaction on a relatively high level. We will use this level in this chapter to introduce gradually more complex components, which we will then use to construct a complete CPU. With the simulation system Retro [Chansavat Bräunl 1999], [Bräunl 2000], we will be able to actually program, run, and test our CPUs.

Reverse Engineering x86 Processor Microcode

Reverse Engineering x86 Processor Microcode. Philipp Koppe, Benjamin Kollenda, Marc Fyrbiak, Christian Kison, Robert Gawlik, Christof Paar, and Thorsten Holz, Ruhr-University Bochum. https://www.usenix.org/conference/usenixsecurity17/technical-sessions/presentation/koppe This paper is included in the Proceedings of the 26th USENIX Security Symposium, August 16–18, 2017, Vancouver, BC, Canada. ISBN 978-1-931971-40-9. Open access to the Proceedings of the 26th USENIX Security Symposium is sponsored by USENIX.

Abstract: Microcode is an abstraction layer on top of the physical components of a CPU and present in most general-purpose CPUs today. In addition to facilitating complex and vast instruction sets, it also provides an update mechanism that allows CPUs to be patched in-place without requiring any special hardware. While it is well-known that CPUs are regularly updated with this mechanism, very little is known about its inner workings given that microcode and the update mechanism are proprietary and have not been thoroughly analyzed yet.

... hardware modifications [48]. Dedicated hardware units to counter bugs are imperfect [36, 49] and involve non-negligible hardware costs [8]. The infamous Pentium fdiv bug [62] illustrated a clear economic need for field updates after deployment in order to turn off defective parts and patch erroneous behavior. Note that the implementation of a modern processor involves millions of lines of HDL code [55] and verification of functional correctness for such processors is still an unsolved problem [4, 29]. Since the 1970s, x86 processor manufacturers have

Pipelining

Chapter 9: Pipelining
Jin-Fu Li, Department of Electrical Engineering, National Central University, Jungli, Taiwan
Advanced Reliable Systems (ARES) Lab.

Outline
• Basic Concepts
• Data Hazards
• Instruction Hazards

Content Coverage
[Figure: block diagram of a computer showing the main memory system (address, data/instruction), the central processing unit (operational registers, arithmetic and logic unit, instruction sets, cache memory, program counter, control unit), and the input/output system]

Basic Concepts
• Pipelining is a particularly effective way of organizing concurrent activity in a computer system
• Let Fi and Ei refer to the fetch and execute steps for instruction Ii
• Execution of a program consists of a sequence of fetch and execute steps, as shown below
[Figure: sequential execution of I1 to I5 as F1 E1 F2 E2 F3 E3 F4 E4 F5 ...]

Hardware Organization
• Consider a computer that has two separate hardware units, one for fetching instructions and another for executing them, as shown below
[Figure: instruction fetch unit, interstage buffer, execution unit]

Basic Idea of Instruction Pipelining

| Instruction | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| I1 | F1 | E1 | | | |
| I2 | | F2 | E2 | | |
| I3 | | | F3 | E3 | |
| I4 | | | | F4 | E4 |

(Columns are clock cycles. F: fetch, E: execute.)

A 4-Stage Pipeline

| Instruction | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| I1 | F1 | D1 | E1 | W1 | | | |
| I2 | | F2 | D2 | E2 | W2 | | |
| I3 | | | F3 | D3 | E3 | W3 | |
| I4 | | | | F4 | D4 | E4 | W4 |

(Columns are clock cycles. F: fetch instruction, D: decode instruction and fetch operands, E: execute operation, W: write results. Interstage buffers B1, B2, and B3 separate the four stages.)
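The timing diagrams in the pipelining excerpt above can be reproduced with a short Python sketch. It assumes an ideal pipeline in which every stage takes exactly one clock cycle and there are no hazards or stalls; the function names are illustrative and are not taken from the slides.

```python
# Ideal pipeline timing: every stage takes one cycle, no hazards or stalls.
# Function names are illustrative, not taken from the slides.


def pipeline_schedule(num_instructions: int, stages: str) -> dict:
    """Map instruction number -> list of (cycle, stage) pairs.

    Instruction i (1-based) enters the first stage in cycle i and then
    advances one stage per cycle.
    """
    return {
        i + 1: [(i + 1 + offset, stage) for offset, stage in enumerate(stages)]
        for i in range(num_instructions)
    }


def total_cycles(num_instructions: int, num_stages: int) -> int:
    """Cycles until the last instruction leaves the last stage."""
    if num_instructions == 0:
        return 0
    return num_instructions + num_stages - 1


def sequential_cycles(num_instructions: int, num_stages: int) -> int:
    """Cycles needed when each instruction finishes before the next starts."""
    return num_instructions * num_stages


if __name__ == "__main__":
    for instr, steps in pipeline_schedule(4, "FDEW").items():
        cells = " ".join(f"{stage}{instr}@{cycle}" for cycle, stage in steps)
        print(f"I{instr}: {cells}")
    print("pipelined cycles: ", total_cycles(4, 4))
    print("sequential cycles:", sequential_cycles(4, 4))
```

Under these assumptions, four instructions finish in 7 cycles on the 4-stage pipeline and in 5 cycles on the 2-stage fetch/execute pipeline, matching the cycle counts on the time axes of the two diagrams.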

The RISC-V Compressed Instruction Set Manual

The RISC-V Compressed Instruction Set Manual, Version 1.7. Warning! This draft specification will change before being accepted as standard, so implementations made to this draft specification will likely not conform to the future standard. Andrew Waterman, Yunsup Lee, David Patterson, Krste Asanović, CS Division, EECS Department, University of California, Berkeley. waterman|yunsup|pattrsn|[email protected] May 28, 2015. This document is also available as Technical Report UCB/EECS-2015-157.

1.1 Introduction
This excerpt from the RISC-V User-Level ISA Specification describes the current draft proposal for the RISC-V standard compressed instruction set extension, named "C", which reduces static and dynamic code size by adding short 16-bit instruction encodings for common integer operations. The C extension can be added to any of the base ISAs (RV32I, RV64I, RV128I), and we use the generic term "RVC" to cover any of these. Typically, over half of the RISC-V instructions in a program can be replaced with RVC instructions, resulting in greater than a 25% code-size reduction. Section 1.7 describes a possible extended set of instructions for RVC, for which we would like your opinion. Please send your comments to the isa-dev mailing list at [email protected].

1.2 Overview
RVC uses a simple compression scheme that offers shorter 16-bit versions of common 32-bit RISC-V instructions when:
• the immediate or address offset is small, or
• one of the registers is the zero register (x0) or the ABI stack pointer (x2), or
• the destination register and the first source register are identical, or
• the registers used are the 8 most popular ones.

The 32-Bit PA-RISC Run-Time Architecture Document

The 32-bit PA-RISC Run-time Architecture Document, HP-UX 10.20, Version 3.0. (c) Copyright 1985-1997 HEWLETT-PACKARD COMPANY. The information contained in this document is subject to change without notice. HEWLETT-PACKARD MAKES NO WARRANTY OF ANY KIND WITH REGARD TO THIS MATERIAL, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. Hewlett-Packard shall not be liable for errors contained herein or for incidental or consequential damages in connection with furnishing, performance, or use of this material. Hewlett-Packard assumes no responsibility for the use or reliability of its software on equipment that is not furnished by Hewlett-Packard. This document contains proprietary information which is protected by copyright. All rights are reserved. No part of this document may be photocopied, reproduced, or translated to another language without the prior written consent of Hewlett-Packard Company. CSO/STG/STD/CLO, Hewlett-Packard Company, 11000 Wolfe Road, Cupertino, California 95014. By The Run-time Architecture Team.

CHAPTER 1 Introduction
This document describes the runtime architecture for PA-RISC systems running either the HP-UX or the MPE/iX operating system. Other operating systems running on PA-RISC may also use this runtime architecture or a variant of it. The runtime architecture defines all the conventions and formats necessary to compile, link, and execute a program on one of these operating systems. Its purpose is to ensure that object modules produced by many different compilers can be linked together into a single application, and to specify the interfaces between compilers and linker, and between linker and operating system.

Design of the RISC-V Instruction Set Architecture

Design of the RISC-V Instruction Set Architecture. Andrew Waterman, Electrical Engineering and Computer Sciences, University of California at Berkeley. Technical Report No. UCB/EECS-2016-1. http://www.eecs.berkeley.edu/Pubs/TechRpts/2016/EECS-2016-1.html January 3, 2016. Copyright © 2016, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission.

Design of the RISC-V Instruction Set Architecture, by Andrew Shell Waterman. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate Division of the University of California, Berkeley. Committee in charge: Professor David Patterson, Chair; Professor Krste Asanović; Associate Professor Per-Olof Persson. Spring 2016.

Abstract: The hardware-software interface, embodied in the instruction set architecture (ISA), is arguably the most important interface in a computer system. Yet, in contrast to nearly all other interfaces in a modern computer system, all commercially popular ISAs are proprietary.

PowerPC User Instruction Set Architecture Book I Version 2.01

PowerPC User Instruction Set Architecture, Book I, Version 2.01, September 2003. Manager: Joe Wetzel/Poughkeepsie/IBM. Technical Content: Ed Silha/Austin/IBM, Cathy May/Watson/IBM, Brad Frey/Austin/IBM. The following paragraph does not apply to the United Kingdom or any country or state where such provisions are inconsistent with local law. The specifications in this manual are subject to change without notice. This manual is provided “AS IS”. International Business Machines Corp. makes no warranty of any kind, either expressed or implied, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. International Business Machines Corp. does not warrant that the contents of this publication or the accompanying source code examples, whether individually or as one or more groups, will meet your requirements or that the publication or the accompanying source code examples are error-free. This publication could include technical inaccuracies or typographical errors. Changes are periodically made to the information herein; these changes will be incorporated in new editions of the publication. Address comments to IBM Corporation, Internal Zip 9630, 11400 Burnett Road, Austin, Texas 78758-3493. IBM may use or distribute whatever information you supply in any way it believes appropriate without incurring any obligation to you. The following terms are trademarks of the International Business Machines Corporation in the United States and/or other countries: IBM, PowerPC, RISC/System 6000, POWER, POWER2, POWER4, POWER4+, IBM System/370. Notice to U.S. Government Users: Documentation Related to Restricted Rights. Use, duplication or disclosure is subject to restrictions set forth in GSA ADP Schedule Contract with IBM Corporation.

UM0434 e200z3 PowerPC Core Reference Manual

UM0434 e200z3 PowerPC core Reference manual Introduction The primary objective of this user’s manual is to describe the functionality of the e200z3 embedded microprocessor core for software and hardware developers. This book is intended as a companion to the EREF: A Programmer's Reference Manual for Freescale Book E Processors (hereafter referred to as EREF). Book E is a PowerPC™ architecture definition for embedded processors that ensures binary compatibility with the user-instruction set architecture (UISA) portion of the PowerPC architecture as it was jointly developed by Apple, IBM, and Motorola (referred to as the AIM architecture). This document distinguishes among the three levels of the architectural and implementation definition, as follows: ● The Book E architecture—Book E defines a set of user-level instructions and registers that are drawn from the user instruction set architecture (UISA) portion of the AIM definition PowerPC architecture. Book E also includes numerous supervisor-level registers and instructions as they were defined in the AIM version of the PowerPC architecture for the virtual environment architecture (VEA) and the operating environment architecture (OEA). Because the operating system resources (such as the MMU and interrupts) defined by Book E differ greatly from those defined by the AIM architecture, Book E introduces many new registers and instructions. ● Freescale Book E implementation standards (EIS)—In many cases, the Book E architecture definition provides a general framework, leaving specific details up to the implementation. To ensure consistency among its Book E implementations, Freescale has defined implementation standards that provide an additional layer of architecture between Book E and the actual devices. -

PA-RISC 1.1 Architecture and Instruction Set Reference Manual

PA-RISC 1.1 Architecture and Instruction Set Reference Manual. HP Part Number: 09740-90039. Printed in U.S.A., February 1994. Third Edition.

Notice: The information contained in this document is subject to change without notice. HEWLETT-PACKARD MAKES NO WARRANTY OF ANY KIND WITH REGARD TO THIS MATERIAL, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. Hewlett-Packard shall not be liable for errors contained herein or for incidental or consequential damages in connection with furnishing, performance, or use of this material. Hewlett-Packard assumes no responsibility for the use or reliability of its software on equipment that is not furnished by Hewlett-Packard. This document contains proprietary information which is protected by copyright. All rights are reserved. No part of this document may be photocopied, reproduced, or translated to another language without the prior written consent of Hewlett-Packard Company. Copyright © 1986 – 1994 by HEWLETT-PACKARD COMPANY.

Printing History: The printing date will change when a new edition is printed. The manual part number will change when extensive changes are made.
• First Edition: November 1990
• Second Edition: September 1992
• Third Edition: February 1994

Contents
• Contents
• Preface
• 1 Overview
– Introduction
– System Features
– PA-RISC 1.1 Enhancements
– System Organization
• 2 System Organization
– Introduction
– Memory and I/O Addressing
– Byte Ordering (Big Endian/Little Endian)
– Levels of PA-RISC
– Data Types
– Processing Resources
• 3 Addressing and Access Control