Digital Design & Computer Arch. Lecture 17: Branch Prediction II

Recommended publications

-

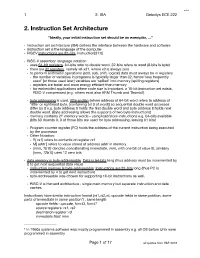

2. Instruction Set Architecture

2. ISA, Gebotys, ECE 222

2. Instruction Set Architecture

“Ideally, your initial instruction set should be an exemplar, …”

• The instruction set architecture (ISA) defines the interface between the hardware and the software.
• The instruction set is the language of the computer.
• RISC-V instructions are 32 bits wide, instruction[31:0].
• RISC-V assembly language notation:
• It uses 64-bit registers; 64 bits is referred to as a double word, 32 bits as a word, and 8 bits as a byte.
• There are 32 registers, namely x0-x31, where x0 is always zero.
• To perform arithmetic operations (add, sub, shift, logical), data must always be in registers.
• The number of variables in programs is typically larger than 32, hence ‘less frequently used’ variables (or those used later) are ‘spilled’ into memory (spilling registers).
• Registers are faster and more energy efficient than memory.
• For embedded applications where code size is important, a 16-bit instruction set exists, RISC-V compressed (others exist also, e.g. ARM Thumb and Thumb2).
• Byte addressing is used, little endian (where the address of a 64-bit word refers to the address of the ‘little’ or rightmost byte, containing bit 0 of the word), so sequential double word accesses differ by 8; e.g. byte address 0 holds the first double word and byte address 8 holds the next double word. (Byte addressing allows support for two-byte instructions.)
• Memory contains 2^61 memory words, accessed using load/store instructions: of the 64 address bits available (bits 63 down to 0), 3 are used for byte addressing, leaving 61 bits.
• Program counter register (PC)
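The addressing arithmetic in the RISC-V excerpt above can be checked with a short sketch. The function names below are hypothetical and chosen only for illustration; the sketch assumes a 64-bit byte address whose low 3 bits select a byte within a double word, as the excerpt describes.

```python
# Illustrative sketch only: names are hypothetical, not taken from the course notes.
DOUBLEWORD_BYTES = 8      # a 64-bit double word occupies 8 bytes
ADDRESS_BITS = 64         # width of a byte address (bits 63 down to 0)
BYTE_OFFSET_BITS = 3      # low bits that select a byte inside a double word


def doubleword_count(address_bits=ADDRESS_BITS, offset_bits=BYTE_OFFSET_BITS):
    """Number of distinct double words reachable with the remaining address bits."""
    return 2 ** (address_bits - offset_bits)


def doubleword_address(index):
    """Byte address of the index-th sequential double word."""
    return index * DOUBLEWORD_BYTES


def little_endian_bytes(value):
    """Memory image of a 64-bit value: the byte holding bit 0 comes first."""
    return value.to_bytes(DOUBLEWORD_BYTES, "little")


if __name__ == "__main__":
    print(doubleword_count() == 2 ** 61)                 # 61 bits are left for the word index
    print([doubleword_address(i) for i in range(3)])     # addresses step by 8
    print(little_endian_bytes(0x0102030405060708).hex())
```

Under little-endian ordering the least significant byte (`0x08` in the example value) is stored at the lowest address, which is why the double word's address is the address of its "little" byte.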

MIPS Architecture • MIPS (Microprocessor Without Interlocked Pipeline Stages) • MIPS Computer Systems Inc

Spring 2011, Prof. Hyesoon Kim

MIPS Architecture
• MIPS (Microprocessor without Interlocked Pipeline Stages)
• MIPS Computer Systems Inc.
• Developed from Stanford
• MIPS architecture usages
• 1990’s: R2000, R3000, R4000, Motorola 68000 family
• Playstation, Playstation 2, Sony PSP handheld, Nintendo 64 console
• Android
• Shift to SoC
http://en.wikipedia.org/wiki/MIPS_architecture

• MIPS R4000 CPU core
• Floating point and vector floating point co-processors
• 3D-CG extended instruction sets
• Graphics
– 3D curved surface and other 3D functionality
– Hardware clipping, compressed texture handling
• R4300 (embedded version)
– Nintendo-64
http://www.digitaltrends.com/gaming/sony-announces-playstation-portable-specs/

Not Yet out
• Google TV: an Android-based software service that lets users switch between their TV content and Web applications such as Netflix and Amazon Video on Demand
• Google TV: search capabilities.
• High stream data?
• Internet accesses?
• Multi-threading, SMP design
• High graphics processors
• Several CODEC
– Hardware vs. Software
• Displaying frame buffer, e.g. 1080p resolution: 1920 (H) x 1080 (V), color depth: 4 bytes/pixel
– 4 * 1920 * 1080 ~= 8.3 MB
– 8.3 MB * 60 Hz = 498 MB/sec

• Started from 32-bit
• Later 64-bit
• microMIPS: 16-bit compression version (similar to ARM Thumb)
• SIMD additions-64 bit floating points
• User Defined Instructions (UDIs) coprocessors
• All self-modified code
• Allow unaligned accesses
http://www.spiritus-temporis.com/mips-architecture/

• 32 64-bit general purpose registers (GPRs)
• A pair of special-purpose registers to hold the results of integer multiply, divide, and multiply-accumulate operations (HI and LO)
– HI: Multiply and Divide register higher result
– LO: Multiply and Divide register lower result
• A special-purpose program counter (PC)
• A MIPS64 processor always produces a 64-bit result
• 32 floating point registers (FPRs).
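The frame-buffer figures quoted in the MIPS excerpt above can be reproduced with a few lines of arithmetic. This is a minimal sketch; the function names are hypothetical, and megabytes are taken as 10^6 bytes, which is the convention that matches the slide's 8.3 MB figure.

```python
# Illustrative sketch only: helper names are hypothetical.
def frame_bytes(width, height, bytes_per_pixel):
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


def display_bandwidth(width, height, bytes_per_pixel, refresh_hz):
    """Bytes per second needed to scan out the frame buffer at refresh_hz."""
    return frame_bytes(width, height, bytes_per_pixel) * refresh_hz


if __name__ == "__main__":
    MB = 10 ** 6  # decimal megabytes, assumed to match the slide's rounding
    frame = frame_bytes(1920, 1080, 4)
    rate = display_bandwidth(1920, 1080, 4, 60)
    print(f"frame: {frame / MB:.1f} MB")      # frame: 8.3 MB
    print(f"scan-out: {rate / MB:.1f} MB/s")  # scan-out: 497.7 MB/s
```

The exact product is 497,664,000 bytes per second; the slide's 498 MB/sec comes from rounding the frame size to 8.3 MB before multiplying by 60.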

Exploiting Branch Target Injection Jann Horn, Google Project Zero

Exploiting Branch Target Injection
Jann Horn, Google Project Zero

Outline
● Introduction
● Reverse-engineering branch prediction
● Leaking host memory from KVM

Disclaimer
● I haven't worked in CPU design
● I don't really understand how CPUs work
● Large parts of this talk are based on guesses
● This isn't necessarily how all CPUs work

Variants overview

| | Spectre | Spectre | Meltdown |
|---|---|---|---|
| CVE | CVE-2017-5753 | CVE-2017-5715 | CVE-2017-5754 |
| Variant | Variant 1 | Variant 2 | Variant 3 |
| Name | Bounds Check Bypass | Branch Target Injection | Rogue Data Cache Load |
| Scope | Primarily affects interpreters/JITs | Primarily affects kernels/hypervisors | Affects kernels (and architecturally equivalent software) |

Performance
● Modern consumer CPU clock rates: ~4GHz
● Memory is slow: ~170 clock cycles latency on my machine
➢ CPU needs to work around high memory access latencies
● Adding parallelism is easier than making processing faster
➢ CPU needs to do things in parallel for performance
● Performance optimizations can lead to security issues!

Performance Optimization Resources
● everyone wants programs to run fast
➢ processor vendors want application authors to be able to write fast code
● architectural behavior requires architecture documentation; performance optimization requires microarchitecture documentation
➢ if you want information about microarchitecture, read performance optimization guides
● Intel: https://software.intel.com/en-us/articles/intel-sdm#optimization ("optimization reference manual")
● AMD: https://developer.amd.com/resources/developer-guides-manuals/ ("Software Optimization Guide")

Out-of-order execution (vaguely based on optimization manuals)
[Figure: front end feeding an out-of-order engine (scheduler, renaming, ...) with several execution ports; example instruction stream: add rax, 9; add rax, 8; inc rbx; sub rax, rbx; mov [rcx], rax; cmp rax, 16; ..]

SuperH RISC Engine SH-1/SH-2

SuperH RISC Engine SH-1/SH-2 Programming Manual September 3, 1996 Hitachi America Ltd. Notice When using this document, keep the following in mind: 1. This document may, wholly or partially, be subject to change without notice. 2. All rights are reserved: No one is permitted to reproduce or duplicate, in any form, the whole or part of this document without Hitachi’s permission. 3. Hitachi will not be held responsible for any damage to the user that may result from accidents or any other reasons during operation of the user’s unit according to this document. 4. Circuitry and other examples described herein are meant merely to indicate the characteristics and performance of Hitachi’s semiconductor products. Hitachi assumes no responsibility for any intellectual property claims or other problems that may result from applications based on the examples described herein. 5. No license is granted by implication or otherwise under any patents or other rights of any third party or Hitachi, Ltd. 6. MEDICAL APPLICATIONS: Hitachi’s products are not authorized for use in MEDICAL APPLICATIONS without the written consent of the appropriate officer of Hitachi’s sales company. Such use includes, but is not limited to, use in life support systems. Buyers of Hitachi’s products are requested to notify the relevant Hitachi sales offices when planning to use the products in MEDICAL APPLICATIONS. Introduction The SuperH RISC engine family incorporates a RISC (Reduced Instruction Set Computer) type CPU. A basic instruction can be executed in one clock cycle, realizing high performance operation. A built-in multiplier can execute multiplication and addition as quickly as DSP. -

Simty: Generalized SIMT Execution on RISC-V Caroline Collange

Simty: generalized SIMT execution on RISC-V
Caroline Collange

To cite this version: Caroline Collange. Simty: generalized SIMT execution on RISC-V. CARRV 2017: First Workshop on Computer Architecture Research with RISC-V, Oct 2017, Boston, United States. pp.6, 10.1145/nnnnnnn.nnnnnnn. hal-01622208

HAL Id: hal-01622208
https://hal.inria.fr/hal-01622208
Submitted on 7 Oct 2020

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Simty: generalized SIMT execution on RISC-V
Caroline Collange, Inria, [email protected]

ABSTRACT
We present Simty, a massively multi-threaded RISC-V processor core that acts as a proof of concept for dynamic inter-thread vectorization at the micro-architecture level. Simty runs groups of scalar threads executing SPMD code in lockstep, and assembles SIMD instructions dynamically across threads. Unlike existing SIMD or SIMT processors like GPUs or vector processors, Simty vectorizes scalar general-purpose binaries.

and programming languages to target GPU-like SIMT cores. Beside simplifying software layers, the unification of CPU and GPU instruction sets eases prototyping and debugging of parallel programs. We have implemented Simty in synthesizable VHDL and synthesized it for an FPGA target. We present our approach to generalizing the SIMT model in Section 2, then describe Simty’s microarchitecture in Section 3, and

The 32-Bit PA-RISC Run-Time Architecture Document

The 32-bit PA-RISC Run-time Architecture Document
HP-UX 10.20, Version 3.0

(c) Copyright 1985-1997 HEWLETT-PACKARD COMPANY. The information contained in this document is subject to change without notice. HEWLETT-PACKARD MAKES NO WARRANTY OF ANY KIND WITH REGARD TO THIS MATERIAL, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. Hewlett-Packard shall not be liable for errors contained herein or for incidental or consequential damages in connection with furnishing, performance, or use of this material. Hewlett-Packard assumes no responsibility for the use or reliability of its software on equipment that is not furnished by Hewlett-Packard. This document contains proprietary information which is protected by copyright. All rights are reserved. No part of this document may be photocopied, reproduced, or translated to another language without the prior written consent of Hewlett-Packard Company.

CSO/STG/STD/CLO, Hewlett-Packard Company, 11000 Wolfe Road, Cupertino, California 95014
By The Run-time Architecture Team

CHAPTER 1 Introduction

This document describes the runtime architecture for PA-RISC systems running either the HP-UX or the MPE/iX operating system. Other operating systems running on PA-RISC may also use this runtime architecture or a variant of it. The runtime architecture defines all the conventions and formats necessary to compile, link, and execute a program on one of these operating systems. Its purpose is to ensure that object modules produced by many different compilers can be linked together into a single application, and to specify the interfaces between compilers and linker, and between linker and operating system.

Design of the RISC-V Instruction Set Architecture

Design of the RISC-V Instruction Set Architecture

Andrew Waterman
Electrical Engineering and Computer Sciences, University of California at Berkeley
Technical Report No. UCB/EECS-2016-1
http://www.eecs.berkeley.edu/Pubs/TechRpts/2016/EECS-2016-1.html
January 3, 2016

Copyright © 2016, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission.

Design of the RISC-V Instruction Set Architecture, by Andrew Shell Waterman. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate Division of the University of California, Berkeley. Committee in charge: Professor David Patterson, Chair; Professor Krste Asanović; Associate Professor Per-Olof Persson. Spring 2016.

Abstract

The hardware-software interface, embodied in the instruction set architecture (ISA), is arguably the most important interface in a computer system. Yet, in contrast to nearly all other interfaces in a modern computer system, all commercially popular ISAs are proprietary.

PA-RISC 1.1 Architecture and Instruction Set Reference Manual

PA-RISC 1.1 Architecture and Instruction Set Reference Manual
HP Part Number: 09740-90039. Printed in U.S.A., February 1994. Third Edition.

Notice

The information contained in this document is subject to change without notice. HEWLETT-PACKARD MAKES NO WARRANTY OF ANY KIND WITH REGARD TO THIS MATERIAL, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. Hewlett-Packard shall not be liable for errors contained herein or for incidental or consequential damages in connection with furnishing, performance, or use of this material. Hewlett-Packard assumes no responsibility for the use or reliability of its software on equipment that is not furnished by Hewlett-Packard. This document contains proprietary information which is protected by copyright. All rights are reserved. No part of this document may be photocopied, reproduced, or translated to another language without the prior written consent of Hewlett-Packard Company.

Copyright © 1986 – 1994 by HEWLETT-PACKARD COMPANY

Printing History

The printing date will change when a new edition is printed. The manual part number will change when extensive changes are made.
• First Edition: November 1990
• Second Edition: September 1992
• Third Edition: February 1994

Contents

Preface
1 Overview
• Introduction
• System Features
• PA-RISC 1.1 Enhancements
• System Organization
2 System Organization
• Introduction
• Memory and I/O Addressing
• Byte Ordering (Big Endian/Little Endian)
• Levels of PA-RISC
• Data Types
• Processing Resources
3 Addressing and Access Control

EP 2182433 A1

(11) EP 2 182 433 A1
(12) EUROPEAN PATENT APPLICATION published in accordance with Art. 153(4) EPC
(43) Date of publication: 05.05.2010 Bulletin 2010/18
(51) Int Cl.: G06F 9/38 (2006.01)
(21) Application number: 07768016.3
(22) Date of filing: 02.07.2007
(86) International application number: PCT/JP2007/063241
(87) International publication number: WO 2009/004709 (08.01.2009 Gazette 2009/02)
(84) Designated Contracting States: AT BE BG CH CY CZ DE DK EE ES FI FR GB GR HU IE IS IT LI LT LU LV MC MT NL PL PT RO SE SI SK TR. Designated Extension States: AL BA HR MK RS
(71) Applicant: Fujitsu Limited, Kawasaki-shi, Kanagawa 211-8588 (JP)
(72) Inventors:
• TOYOSHIMA, Takashi, Kawasaki-shi, Kanagawa 211-8588 (JP)
• AOKI, Takashi, Kawasaki-shi, Kanagawa 211-8588 (JP)
(74) Representative: Ward, James Norman, Haseltine Lake LLP, Lincoln House, 5th Floor, 300 High Holborn, London WC1V 7JH (GB)
(54) INDIRECT BRANCHING PROGRAM, AND INDIRECT BRANCHING METHOD
(57) A processor 100 reads an interpreter program 200a to start an interpreter. The interpreter makes branch prediction by executing, in place of an indirect branch instruction that is necessary for execution of a source program 200b, storing branch destination addresses in the indirect branch instruction in a link register 120c (the processor 100 internally stacks the branch destination addresses also in a return address stack 130) in inverse order and reading the addresses from the return address stack 130 in one at a time manner.

Printed by Jouve, 75001 PARIS (FR)

Description

vised and brought into practical use.

PIPELINING Basics

PIPELINING basics
• A pipelined architecture for MIPS
• Hurdles in pipelining
• Simple solutions to pipelining hurdles
• Advanced pipelining
• Conclusive remarks

MIPS pipelined architecture
• MIPS simplified architecture can be realized by having each instruction execute in a single clock cycle, approximately as long as the 5 clocks required to complete the 5 phases. Why would this approach be inconvenient?
• We already know one reason: the longer cycle would waste time in all instructions that take less to execute (fewer than 5 clocks).
• There is another relevant reason: by breaking the execution of each instruction down into more phases (clock cycles), it is possible to (partially) overlap the execution of several instructions.
• At each clock cycle, while a section of the datapath takes care of an instruction, another section can be used to execute another instruction.
• If we start a new instruction at each new clock cycle, each of the 5 phases of the multi-cycle MIPS architecture becomes a stage in the pipeline, and the pattern of execution of a sequence of instructions looks like this (Hennessy-Patterson, Fig. A.1):

| instr. number | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| instr. i | IF | ID | EX | MEM | WB | | | | |
| instr. i+1 | | IF | ID | EX | MEM | WB | | | |
| instr. i+2 | | | IF | ID | EX | MEM | WB | | |
| instr. i+3 | | | | IF | ID | EX | MEM | WB | |
| instr. i+4 | | | | | IF | ID | EX | MEM | WB |

• Pipelining only works if one does not attempt to execute at the same time two different operations that use the same datapath resource:
– for instance, if the datapath has a single ALU, it cannot concurrently compute the effective address of a load and the subtraction of the operands in another instruction
• Using reduced (simple) instructions (namely RISC) makes it fairly easy to determine at each time which datapath resources are free and which are busy.
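The overlapped execution pattern described in the pipelining excerpt above (one new instruction issued per clock, five stages each) can be generated programmatically. The sketch below is illustrative only: `pipeline_schedule` is a hypothetical helper, and it assumes an ideal pipeline with no stalls or hazards.

```python
# Illustrative sketch only: assumes an ideal pipeline with no stalls.
STAGES = ["IF", "ID", "EX", "MEM", "WB"]


def pipeline_schedule(num_instructions, stages=STAGES):
    """Return one row per instruction; row[c] is the stage occupied in clock c (0-based)."""
    total_cycles = num_instructions + len(stages) - 1
    rows = []
    for i in range(num_instructions):
        row = [""] * total_cycles
        for s, name in enumerate(stages):
            row[i + s] = name  # instruction i enters stage s in clock i + s
        rows.append(row)
    return rows


if __name__ == "__main__":
    for i, row in enumerate(pipeline_schedule(5)):
        label = "instr. i" if i == 0 else f"instr. i+{i}"
        print(f"{label:<10}" + "".join(f"{cell:<4}" for cell in row))
```

With 5 instructions and 5 stages the schedule spans 5 + 5 - 1 = 9 clocks, compared with 25 clocks if each instruction had to finish all five phases before the next one started.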

Pipelining: Basic/ Intermediate Concepts and Implementation

Lecture 05: Pipelining: Basic/Intermediate Concepts and Implementation
CSE 564 Computer Architecture, Summer 2017
Department of Computer Science and Engineering
Yonghong Yan, [email protected], www.secs.oakland.edu/~yan

Contents
1. Pipelining Introduction
2. The Major Hurdle of Pipelining: Pipeline Hazards
3. RISC-V ISA and its Implementation

Reading:
• Textbook: Appendix C
• RISC-V ISA
• Chisel Tutorial

Pipelining: It's Natural!
• Laundry example
• Ann, Brian, Cathy, Dave (A, B, C, D) each have one load of clothes to wash, dry, and fold
– Washer takes 30 minutes
– Dryer takes 40 minutes
– “Folder” takes 20 minutes
• One load: 90 minutes

Sequential Laundry
[Figure: timeline from 6 PM to midnight; loads A, B, C, D in task order, each taking 30 + 40 + 20 minutes back to back]
• Sequential laundry takes 6 hours for 4 loads
• If they learned pipelining, how long would laundry take?

Pipelined Laundry: Start Work ASAP
[Figure: timeline from 6 PM to midnight; stage times 30, 40, 40, 40, 40, 20 minutes with loads A, B, C, D overlapped]
• Pipelined laundry takes 3.5 hours for 4 loads

The Basics of a RISC Instruction Set (1/2)
• RISC (Reduced Instruction Set Computer) architecture or load-store architecture:
– All operations on data apply to data in registers and typically change the entire register (32 or 64 bits per register).
– The only operations that affect memory are load and store operations.
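The laundry timings quoted in the excerpt above (6 hours sequential, 3.5 hours pipelined) can be reproduced with a small timing model. This is a sketch under stated assumptions: the function names are hypothetical, each stage handles one load at a time, loads pass through the stages in order, and a finished load may wait between stages for the next machine to become free.

```python
# Illustrative sketch only: a simple in-order, one-load-per-stage timing model.
def sequential_minutes(stage_minutes, loads):
    """Each load runs through every stage before the next load starts."""
    return sum(stage_minutes) * loads


def pipelined_minutes(stage_minutes, loads):
    """Each stage starts a load as soon as the load and the stage are both free."""
    stage_free_at = [0] * len(stage_minutes)  # when each stage finishes its previous load
    for _ in range(loads):
        ready = 0  # when the current load leaves the previous stage
        for s, duration in enumerate(stage_minutes):
            start = max(ready, stage_free_at[s])
            ready = start + duration
            stage_free_at[s] = ready
    return stage_free_at[-1]


if __name__ == "__main__":
    stages = [30, 40, 20]  # washer, dryer, folder (minutes)
    print(sequential_minutes(stages, 4) / 60)  # 6.0 hours
    print(pipelined_minutes(stages, 4) / 60)   # 3.5 hours
```

The pipelined total is set by the slowest stage: after the first wash (30 minutes) the 40-minute dryer runs four times back to back, followed by one final 20-minute fold, giving 30 + 4 × 40 + 20 = 210 minutes.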

In Using the GNU Compiler Collection (GCC)

Using the GNU Compiler Collection
For gcc version 6.1.0 (GCC)
Richard M. Stallman and the GCC Developer Community

Published by: GNU Press, a division of the Free Software Foundation, 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301 USA
Website: http://www.gnupress.org
General: [email protected]
Orders: [email protected]
Tel 617-542-5942, Fax 617-542-2652

Last printed October 2003 for GCC 3.3.1. Printed copies are available for $45 each.

Copyright © 1988-2016 Free Software Foundation, Inc.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation; with the Invariant Sections being “Funding Free Software”, the Front-Cover Texts being (a) (see below), and with the Back-Cover Texts being (b) (see below). A copy of the license is included in the section entitled “GNU Free Documentation License”.

(a) The FSF's Front-Cover Text is: A GNU Manual
(b) The FSF's Back-Cover Text is: You have freedom to copy and modify this GNU Manual, like GNU software. Copies published by the Free Software Foundation raise funds for GNU development.

Short Contents
Introduction
1 Programming Languages Supported by GCC
2 Language Standards Supported by GCC
3 GCC Command Options
4 C Implementation-Defined Behavior
5 C++ Implementation-Defined Behavior
6 Extensions to