Simty: Generalized SIMT Execution on RISC-V Caroline Collange

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

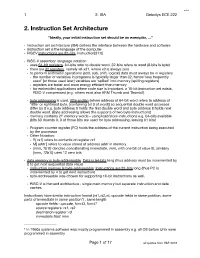

2. Instruction Set Architecture

2. ISA Gebotys ECE 222 2. Instruction Set Architecture “Ideally, your initial instruction set should be an exemplar, …” • Instruction set architecture (ISA) defines the interface between the hardware and software • the instruction set is the language of the computer • RISC-V instructions are 32 bits, instruction[31:0] • RISC-V assembly language notation • uses 64-bit registers; 64 bits is referred to as a double word, 32 bits as a word (8 bits is a byte) • there are 32 registers, namely x0-x31, where x0 is always zero • to perform arithmetic operations (add, sub, shift, logical) data must always be in registers • the number of variables in programs is typically larger than 32, hence ‘less frequently used’ [or those used later] variables are ‘spilled’ into memory [spilling registers] • registers are faster and more energy efficient than memory • for embedded applications where code size is important, a 16-bit instruction set exists, RISC-V compressed (others also exist, e.g. ARM Thumb and Thumb2) • byte addressing is used, little endian (where the address of a 64-bit word refers to the address of the ‘little’ or rightmost byte [containing bit 0 of the word]), so sequential double word accesses differ by 8, e.g. byte address 0 holds the first double word and byte address 8 holds the next double word. (Byte addressing allows support of two-byte instructions) • memory contains 2^61 memory words, accessed using load/store instructions, e.g. 64 bits available (bits 63 down to 0; 3 of those bits are used for byte addressing, leaving 61 bits) • Program counter register (PC) -
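The byte-addressing arithmetic in the snippet above can be checked with a short sketch. This is only an illustration in Python, not material from the slides; the helper names `double_word_index` and `byte_offset` and the sample value are hypothetical.

```python
import struct

DOUBLE_WORD_BYTES = 8   # a 64-bit double word occupies 8 byte addresses
ADDRESS_BITS = 64       # address width stated in the snippet


def double_word_index(byte_address):
    """Which double word a byte address falls in (drop the 3 low bits)."""
    return byte_address >> 3


def byte_offset(byte_address):
    """Position of the byte inside its double word (the 3 low bits)."""
    return byte_address & 0b111


# 3 of the 64 address bits select a byte, leaving 61 bits for double words.
addressable_double_words = 2 ** (ADDRESS_BITS - 3)

# Little endian: the lowest address holds the least significant byte.
value = 0x1122334455667788          # hypothetical sample value
memory = struct.pack("<Q", value)   # "<Q" = little-endian unsigned 64-bit

print(double_word_index(0), double_word_index(8))  # consecutive double words
print(hex(memory[0]))                               # least significant byte
```

Byte addresses 0 and 8 land in consecutive double words, and the byte stored at the lowest address is the one containing bit 0 of the value.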

Pipelining 2

Instruction-Level Parallelism: Dynamic Scheduling (CS448) Dynamic Scheduling • Static Scheduling – Compiler rearranges instructions to reduce stalls • Dynamic Scheduling – Hardware rearranges the instruction stream to reduce stalls – Possible to account for dependencies that are only known at run time, e.g. memory references – Simplifies the compiler – Code built with one pipeline in mind could also run efficiently on a different pipeline – Minimizes the need for speculative slot filling (NP-hard) • Once a common tactic with supercomputers and mainframes but was too expensive for single-chip – Now fits on a desktop PC Dynamic Scheduling • Dependencies that are close together stall the entire pipeline – DIVD F0, F2, F4 – ADDD F10, F0, F8 – SUBD F12, F8, F14 • The ADDD needs the DIVD to finish, so there is a stall, which also stalls the SUBD – Long stall for the DIVD – But the SUBD is independent, so there is no reason why we shouldn’t execute it – Or is there? • Precise interrupts (ignore for now) • Compiler could rearrange instructions, but so could the hardware Dynamic Scheduling • It would be desirable to decode instructions into the pipe in order but then let them stall individually while waiting for operands before issue to execution units. • Dynamic Scheduling – Out-of-Order Issue / Execution • Scoreboarding Scoreboarding Split ID stage into two: Issue (decode, check for structural hazards) and Read Operands (wait until no data hazards, read operands) Scoreboard Overview • Consider another example: – DIVD F0, F2, F4 – ADDD F10, F0, -
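The DIVD/ADDD/SUBD example in this snippet can be made concrete with a toy timing model. The sketch below is an assumption-laden illustration in Python: the latencies are invented, only RAW dependences are modeled (no WAR/WAW or structural hazards), and `start_cycles` is a hypothetical helper, not anything from the slides.

```python
# Hypothetical latencies in cycles; the slides give no numbers.
LATENCY = {"DIVD": 40, "ADDD": 2, "SUBD": 2}

# (opcode, destination, sources), in program order, from the snippet.
PROGRAM = [
    ("DIVD", "F0", ("F2", "F4")),
    ("ADDD", "F10", ("F0", "F8")),
    ("SUBD", "F12", ("F8", "F14")),
]


def start_cycles(program, in_order):
    """Return the cycle in which each instruction begins executing."""
    ready = {}        # register -> cycle at which its new value is available
    starts = []
    prev_start = -1
    for slot, (op, dest, srcs) in enumerate(program):
        # Decode delivers one instruction per cycle, in program order.
        earliest = slot
        # RAW: wait until every source operand has been produced.
        operands_ready = max(ready.get(reg, 0) for reg in srcs)
        start = max(earliest, operands_ready)
        if in_order:
            # A stalled instruction blocks everything behind it.
            start = max(start, prev_start + 1)
        starts.append(start)
        ready[dest] = start + LATENCY[op]
        prev_start = start
    return starts


print(start_cycles(PROGRAM, in_order=True))   # SUBD waits behind ADDD
print(start_cycles(PROGRAM, in_order=False))  # SUBD starts right away
```

Under these assumed latencies the in-order model holds SUBD back until ADDD has started, while the out-of-order model lets SUBD begin as soon as it is decoded, which is the behavior the slides motivate.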

EECS 570 Lecture 5 GPU Winter 2021 Prof

EECS 570 Lecture 5 GPU Winter 2021 Prof. Satish Narayanasamy http://www.eecs.umich.edu/courses/eecs570/ Slides developed in part by Profs. Adve, Falsafi, Martin, Roth, Nowatzyk, and Wenisch of EPFL, CMU, UPenn, U-M, UIUC. Discussion this week • Term project • Form teams and start to work on identifying a problem you want to work on Readings For next Monday (Lecture 6): • Michael Scott. Shared-Memory Synchronization. Morgan & Claypool Synthesis Lectures on Computer Architecture (Ch. 1, 4.0-4.3.3, 5.0-5.2.5). • Alain Kagi, Doug Burger, and Jim Goodman. Efficient Synchronization: Let Them Eat QOLB, Proc. 24th International Symposium on Computer Architecture (ISCA 24), June, 1997. For next Wednesday (Lecture 7): • Michael Scott. Shared-Memory Synchronization. Morgan & Claypool Synthesis Lectures on Computer Architecture (Ch. 8-8.3). • M. Herlihy, Wait-Free Synchronization, ACM Trans. Program. Lang. Syst. 13(1): 124-149 (1991). Today’s GPU’s “SIMT” Model (CIS 501 (Martin): Vectors) Graphics Processing Units (GPU) • Killer app for parallelism: graphics (3D games) Tesla S870 What is Behind such an Evolution? • The GPU is specialized for compute-intensive, highly data parallel computation (exactly what graphics rendering is about) • So, more transistors can be devoted to data processing rather than data caching and flow control [Figure: CPU vs. GPU block diagrams (Control, ALU, Cache, DRAM)] • The fast-growing video game industry -

Exploiting Branch Target Injection Jann Horn, Google Project Zero

Exploiting Branch Target Injection Jann Horn, Google Project Zero Outline ● Introduction ● Reverse-engineering branch prediction ● Leaking host memory from KVM Disclaimer ● I haven't worked in CPU design ● I don't really understand how CPUs work ● Large parts of this talk are based on guesses ● This isn't necessarily how all CPUs work Variants overview ● Spectre Variant 1: CVE-2017-5753, Bounds Check Bypass; primarily affects interpreters/JITs ● Spectre Variant 2: CVE-2017-5715, Branch Target Injection; primarily affects kernels/hypervisors ● Meltdown Variant 3: CVE-2017-5754, Rogue Data Cache Load; affects kernels (and architecturally equivalent software) Performance ● Modern consumer CPU clock rates: ~4GHz ● Memory is slow: ~170 clock cycles latency on my machine ➢ CPU needs to work around high memory access latencies ● Adding parallelism is easier than making processing faster ➢ CPU needs to do things in parallel for performance ● Performance optimizations can lead to security issues! Performance Optimization Resources ● everyone wants programs to run fast ➢ processor vendors want application authors to be able to write fast code ● architectural behavior requires architecture documentation; performance optimization requires microarchitecture documentation ➢ if you want information about microarchitecture, read performance optimization guides ● Intel: https://software.intel.com/en-us/articles/intel-sdm#optimization ("optimization reference manual") ● AMD: https://developer.amd.com/resources/developer-guides-manuals/ ("Software Optimization Guide") Out-of-order execution (vaguely based on optimization manuals) front end out-of-order engine port (scheduler, renaming, ...) port instruction stream add rax, 9 add rax, 8 inc rbx port inc rbx sub rax, rbx mov [rcx], rax port cmp rax, 16 .. -

14 Superscalar Processors

UNIT- 5 Chapter 14 Instruction Level Parallelism and Superscalar Processors What is Superscalar? • Use multiple independent instruction pipeline. • Instruction level parallelism • Identify dependencies in program • Eliminate Unnecessary dependencies • Use additional registers & renaming of register references in original code. • Branch prediction improves efficiency What is Superscalar? • RISC-like instructions, one per word • Multiple parallel execution units • Reorders and optimizes instruction stream at run time • Branch prediction with speculative execution of one path • Loads data from memory only when needed, and tries to find the data in the caches first Why Superscalar? • In 1987, scalar instruction • Most operations are on scalar quantities • Improving these operations to get an overall improvement • High performance microprocessors. General Superscalar Organization Superscalar V/S Superpipelined • Ordinary:- • One instruction/clock cycle & can perform one pipeline stage per clock cycle. • Superpipelined:- • 2 pipeline stage per cycle • Execution time:-half a clock cycle • Double internal clock speed gets two tasks per external clock cycle • Superscalar :- • allows parallel fetch execute • Start of the program & at branch target it performs better than above said. Superscalar v Superpipeline Limitations • Instruction level parallelism • Compiler based optimisation • Hardware techniques • Limited by —True data dependency —Procedural dependency —Resource conflicts —Output dependency —Antidependency True Data Dependency • ADD r1, -

Concepts Introduced in Chapter 3 Instruction Level Parallelism (ILP)

Intro Compiler Techs Branch Pred Dynamic Sched Speculation Multi Issue ILP Limits MT Concepts Introduced in Chapter 3: introduction; compiler techniques for ILP; advanced branch prediction; dynamic scheduling; speculation; multiple issue; limits of ILP; multithreading. Instruction Level Parallelism (ILP): Pipelining is the overlapping of different portions of instruction execution. Instruction level parallelism is the parallel execution of a sequence of instructions associated with a single thread of execution. Scheduling approaches to exploit ILP: static (compiler), dynamic (hardware). Data Dependences: Instructions must be independent to be executed in parallel. Types of data dependences: True dependences can lead to RAW hazards. Name dependences: Antidependences can lead to WAR hazards. Output dependences can lead to WAW hazards. Control Dependences: An instruction is control dependent on a branch instruction if the instruction will only be executed when the branch has a specific result. An instruction that is control dependent on a branch cannot be moved before the branch so that its execution is not controlled by the branch. An instruction that is not control dependent on a branch cannot be moved after the branch so that its execution is controlled by the branch. [Figures: instruction and branch order, before transformation and after transformation] Static Scheduling Latencies of FP Operations Used in This Chapter The latency here indicates the number of intervening independent instructions that are needed to avoid a stall. -
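The three hazard types named in this snippet (RAW, WAR, WAW) follow mechanically from which registers two instructions read and write. A minimal sketch in Python, assuming each instruction is reduced to a hypothetical `(destination, sources)` pair; the function name `classify` and the register names are illustrative only.

```python
def classify(first, second):
    """Hazards that arise if `second` follows `first` in program order.

    Each instruction is a (destination, sources) pair of register names.
    """
    dest1, srcs1 = first
    dest2, srcs2 = second
    hazards = set()
    if dest1 in srcs2:
        hazards.add("RAW")   # true dependence: second reads what first writes
    if dest2 in srcs1:
        hazards.add("WAR")   # antidependence: second overwrites a source of first
    if dest1 == dest2:
        hazards.add("WAW")   # output dependence: both write the same register
    return hazards


# Illustrative instruction pairs (register names chosen for the example).
print(classify(("F0", ("F2", "F4")), ("F10", ("F0", "F8"))))
print(classify(("F10", ("F0", "F8")), ("F8", ("F2", "F4"))))
print(classify(("F0", ("F2", "F4")), ("F0", ("F6", "F8"))))
```

An empty result means the pair is independent and could, as far as data dependences go, execute in parallel.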

Intro Evolution of Superscalar Processor

Superscalar Processors Superscalar Processors vs. VLIW • 7.1 Introduction • 7.2 Parallel decoding • 7.3 Superscalar instruction issue • 7.4 Shelving • 7.5 Register renaming • 7.6 Parallel execution • 7.7 Preserving the sequential consistency of instruction execution • 7.8 Preserving the sequential consistency of exception processing • 7.9 Implementation of superscalar CISC processors using a superscalar RISC core • 7.10 Case studies of superscalar processors TECH Computer Science CH01 Superscalar Processor: Intro Emergence and spread of superscalar processors • Parallel Issue • Parallel Execution • {Hardware} Dynamic Instruction Scheduling • Currently the predominant class of processors – Pentium – PowerPC – UltraSparc – AMD K5- – HP PA7100- – DEC α Evolution of superscalar processor Specific tasks of superscalar processing Parallel decoding {and Dependencies check} Decoding and Pre-decoding • What needs to be done • Superscalar processors tend to use 2 and sometimes even 3 or more pipeline cycles for decoding and issuing instructions • >> Pre-decoding: – shifts a part of the decode task up into the loading phase – results of pre-decoding ◦ the instruction class ◦ the type of resources required for the execution ◦ in some processors (e.g. UltraSparc), branch target address calculation as well – the results are stored by attaching 4-7 bits • + shortens the overall cycle time or reduces the number of cycles needed The principle of predecoding Number of predecode bits used Specific tasks of superscalar processing: Issue 7.3 Superscalar instruction issue • How and when to send the instruction(s) to EU(s) Instruction issue policies of superscalar processors: Issue policies (performance, trend →) Issue rate {How many instructions/cycle} Issue policies: Handling Issue Blockages • CISC about 2 • RISC: Issue stopped by True dependency Issue order of instructions • True dependency → (Blocked: need to wait) Aligned vs. -

History Scoreboarding Overview Machine Correctness Four Stages

Lecture 6: Scoreboarding and Tomasulo Algorithm (Zhao Zhang, CPRE 581, Fall 2005; adapted from UCB CS252 S98, Copyright 1998 USB) History • 1966: scoreboarding in CDC6600, implementing limited dynamic scheduling • Three years later: Tomasulo in IBM 360/91, introducing register renaming and reservation stations • Now appearing in today’s DEC Alpha, SGI MIPS, SUN UltraSparc, Intel Pentium, IBM PowerPC, and others Scoreboarding Overview • Basic idea: use a scoreboard to track data (RAW) dependences through registers • Main points of design: – Instructions are sent to a functional unit (FU) if there is no outstanding name dependence – RAW data dependence is tracked and enforced by the scoreboard – Register values are passed through the register file; a child instruction starts execution after the last parent finishes execution – Pipeline stalls if any name dependence (WAR or WAW) is detected Machine Correctness E(D,P) = E(S,P) if 1. E(D,P) and E(S,P) execute the same set of instructions 2. For any instruction i, i receives the outputs in E(D,P) of its parents in E(S,P) 3. In E(D,P) any register or memory word receives the output of instruction j, where j is the last instruction that writes to the register or memory word in E(S,P) • Scoreboarding merit: able to execute independent instructions in parallel without violating statement 2 Four Stages of Scoreboard Control Fetch first, then 1. Issue: decode instructions & check for structural hazards 3. Execution: operate on operands (EX). Actions: The functional unit begins execution upon receiving operands. -

Advanced RISC-V Architectures

PULP PLATFORM Open Source Hardware, the way it should be! Working with RISC-V Part 2 of 4: Advanced RISC-V Architectures Luca Benini <[email protected]> Frank K. Gürkaynak <[email protected]> http://pulp-platform.org @pulp_platform https://www.youtube.com/pulp_platform Summary ▪ Part 1 – Introduction to RISC-V ISA ▪ Part 2 – Advanced RISC-V Architectures ▪ Going 64 bit ▪ Bottlenecks ▪ Safety/Security ▪ Vector units ▪ Part 3 – PULP concepts ▪ Part 4 – PULP based chips | ACACES 2020 - July 2020 PULP RISC-V Cores from Tiny to App-level ▪ 32 bit, Low Cost Core: Zero-riscy (RV32-ICM), Micro-riscy (RV32-CE) ▪ 32 bit, DSP Enhanced Core: RI5CY (RV32-ICMFX), SIMD, HW loops, bit manipulation, fixed point ▪ 32 bit, Streaming Compute Core: Snitch (RV32-ICMDFX) ▪ 64 bit, Linux capable Core: Ariane (RV64-IC(MA)), full privileged specification ▪ ARM Cortex-M0+, ARM Cortex-M4, ARM Cortex-A5 From IoT to HPC ▪ For the first 4 years of PULP, we used only 32bit cores ▪ Most IoT near-sensor applications work well with 32bit cores. ▪ 64bit memory space is not affordable in an MCU-class device ▪ But times change: ▪ Large datasets, high-precision numerical calculations (e.g. double precision FP) at the IoT edge (gateways) and cloud ▪ Software infrastructure (OS – typically Linux) with virtual memory assumes 64bit ▪ High-performance computing, being hot again, requires 64bit ▪ Research question – pJ/OP on 64bit data+address space is possible? How? -

Ep 2182433 A1

(19) & (11) EP 2 182 433 A1 (12) EUROPEAN PATENT APPLICATION published in accordance with Art. 153(4) EPC (43) Date of publication: 05.05.2010 Bulletin 2010/18 (51) Int Cl.: G06F 9/38 (2006.01) (21) Application number: 07768016.3 (86) International application number: PCT/JP2007/063241 (22) Date of filing: 02.07.2007 (87) International publication number: WO 2009/004709 (08.01.2009 Gazette 2009/02) (84) Designated Contracting States: AT BE BG CH CY CZ DE DK EE ES FI FR GB GR HU IE IS IT LI LT LU LV MC MT NL PL PT RO SE SI SK TR Designated Extension States: AL BA HR MK RS (71) Applicant: Fujitsu Limited, Kawasaki-shi, Kanagawa 211-8588 (JP) (72) Inventors: • TOYOSHIMA, Takashi, Kawasaki-shi, Kanagawa 211-8588 (JP) • AOKI, Takashi, Kawasaki-shi, Kanagawa 211-8588 (JP) (74) Representative: Ward, James Norman, Haseltine Lake LLP, Lincoln House, 5th Floor, 300 High Holborn, London WC1V 7JH (GB) (54) INDIRECT BRANCHING PROGRAM, AND INDIRECT BRANCHING METHOD (57) A processor 100 reads an interpreter program 200a to start an interpreter. The interpreter makes branch prediction by executing, in place of an indirect branch instruction that is necessary for execution of a source program 200b, storing branch destination addresses in the indirect branch instruction in a link register 120c (the processor 100 internally stacks the branch destination addresses also in a return address stack 130) in inverse order and reading the addresses from the return address stack 130 in one at a time manner. Printed by Jouve, 75001 PARIS (FR) Description vised and brought into practical use. -

Overview of 15-740

Vector Computers and GPUs 15-740 FALL’18 NATHAN BECKMANN BASED ON SLIDES BY DANIEL SANCHEZ, MIT Today: Vector/GPUs Focus on throughput, not latency Programmer/compiler must expose parallel work directly Works on regular, replicated codes (i.e., data parallel / SIMD) Background: Supercomputer Applications Typical application areas ◦ Military research (nuclear weapons aka “computational fluid dynamics”, cryptography) ◦ Scientific research ◦ Weather forecasting ◦ Oil exploration ◦ Industrial design (car crash simulation) ◦ Bioinformatics ◦ Cryptography All involve huge computations on large data sets In the 70s-80s, supercomputer meant vector machine Vector Supercomputers Epitomized by Cray-1, 1976: Scalar Unit ◦ Load/Store Architecture Vector Extension ◦ Vector Registers ◦ Vector Instructions Implementation ◦ Hardwired Control ◦ Highly Pipelined Functional Units ◦ Interleaved Memory System ◦ No Data Caches ◦ No Virtual Memory Cray-1 (1976) [Figure: Cray-1 block diagram: vector registers V0-V7 with vector mask and vector length; scalar registers S0-S7 backed by 64 T registers; address registers A0-A7 backed by 64 B registers; functional units FP Add, FP Mul, FP Recip, Int Add, Int Logic, Int Shift, Pop Cnt, Addr Add, Addr Mul; single-port, high-bandwidth memory with 16 banks of 64-bit words; 4 instruction buffers (64-bit x 16); memory bank cycle 50 ns, processor cycle 12.5 ns (80 MHz)] Vector Programming Model [Figure: scalar registers r0-r15; vector registers v0-v15 with elements [0] to [VLRMAX-1]; Vector Length Register VLR] Vector Programming -

Class 712 Electrical Computers And

CLASS 712 ELECTRICAL COMPUTERS AND DIGITAL PROCESSING SYSTEMS: PROCESSING ARCHITECTURES AND INSTRUCTION PROCESSING (E.G., PROCESSORS)

1 PROCESSING ARCHITECTURE
2 .Vector processor
3 ..Scalar/vector processor interface
4 ..Distributing of vector data to vector registers
5 ...Masking to control an access to data in vector register
6 ..Controlling access to external vector data
7 ..Vector processor operation
8 ...Sequential
9 ...Concurrent
10 .Array processor
11 ..Array processor element interconnection
12 ...Cube or hypercube
13 ...Partitioning
14 ...Processing element memory
15 ...Reconfiguring
16 ..Array processor operation
17 ...Application specific
18 ...Data flow array processor
19 ...Systolic array processor
20 ...Multimode (e.g., MIMD to SIMD, etc.)
40 ...External sync or interrupt signal
41 ..RISC
42 ..Operation
43 ...Mode switching
200 ARCHITECTURE BASED INSTRUCTION PROCESSING
201 .Data flow based system
202 .Stack based computer
203 .Multiprocessor instruction
204 INSTRUCTION ALIGNMENT
205 INSTRUCTION FETCHING
206 .Of multiple instructions simultaneously
207 .Prefetching
208 INSTRUCTION DECODING (E.G., BY MICROINSTRUCTION, START ADDRESS GENERATOR, HARDWIRED)
209 .Decoding instruction to accommodate plural instruction interpretations (e.g., different dialects, languages, emulation, etc.)
210 .Decoding instruction to accommodate variable length instruction or operand
211 .Decoding instruction to