Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

British Isles – Castles, Countrysides and Capitals Scotland • England • Wales • Ireland

12 DAY WORLD HOLIDAY British Isles – Castles, Countrysides and Capitals Scotland • England • Wales • Ireland. Departure Date: September 10, 2020. 12 Days • 15 Meals. Discover the history and charms of the British Isles as you visit Scotland, England, Wales and Ireland. See historic royal castles, the beauty of England’s Lake District and Ireland’s countryside…you’ll experience it all on this journey through these four magnificent countries.

TOUR HIGHLIGHTS: 15 Meals (10 breakfasts and 5 dinners); Airport transfers on tour dates when air is provided by Mayflower Cruises & Tours; Included visits to Edinburgh, Cardiff and Blarney Castles; Discover the capital cities of Edinburgh, Cardiff and Dublin on included guided tours; Visit Gretna Green, ‘the marriage capital of the UK’; Relax aboard a scenic cruise on Lake Windermere in England’s famed Lake District; Tour the medieval town of York and visit the Minster; Tour the childhood home of William Shakespeare during the visit to Stratford-upon-Avon; Enjoy a scenic journey through the Cotswolds, one of England’s most picturesque areas; Discover the ancient art of creating Waterford Crystal; Kiss the Blarney Stone during the visit to Blarney Castle’s mysterious […]

[Photo caption: Experience the beauty of the Cotswolds]

DAY 1 – Depart the USA: Depart the USA on your overnight flight to Edinburgh, Scotland, where centuries of history meet a vibrant, cosmopolitan city.

DAY 2 – Edinburgh, Scotland: Upon arrival, you’ll be met by a Mayflower representative and transferred to your hotel. The remainder of the day is at leisure to begin immersing yourself in the Scottish culture.

DAY 3 – Edinburgh: The day begins with an included tour of this capital city.

Repression, Rivalry and Racketeering in the Creation of Franco’s Spain: the curious case of Emilio Griffiths

Repression, Rivalry and Racketeering in the Creation of Franco’s Spain: the curious case of Emilio Griffiths ‘These things happened. That’s the thing to keep one’s eye on. They happened even though Lord Halifax said they happened […] and they did not happen any less because the Daily Telegraph has suddenly found out about them when it is five years too late’.1 (George Orwell) In mid-November 1936, a Gibraltarian named Abraham Bensusan wrote two letters to the British Secretary of State for the Colonies in London. His subject was the Spanish Civil War, which had, by then, already been raging for three months. Bensusan described how deeply the war in Spain had affected ‘The Rock’. In both letters he hinted darkly at the ‘fascist’ elements in Gibraltar and potentially suspect loyalties amongst the civilian population.2 But his principal aim was to highlight the appalling atrocities being committed by the military rebels in the surrounding region of Spain, the Campo de Gibraltar. Bensusan alleged that civilians, including Gibraltarians, were under threat of arrest or execution in the Campo, often for the most trivial offences, such as carrying a pair of shoes in a ‘communist’ newspaper. In the first letter, having urged that ‘England should formulate a formal protest to the authorities of La Linea… and specially protect Gibraltarians’, Bensusan singled out one man as responsible for the repression in the neighbouring Spanish towns: ‘I am told that a man called Griffith born in Gibraltar is Chief of Falange Española (Fascists) at La Linea, this young man apparently seems to be the murderer over at La Linea […]’ Bensusan returned to his theme in a second letter, four days later. -

Hannibal, Missouri Cardiff Hill

Hannibal, Missouri Cardiff Hill Virtual Tour by Cassidy Alexander, age 13

“Cardiff Hill, beyond the village and above it, was green with vegetation and it lay just far enough away to seem a Delectable Land, dreamy, reposeful, and inviting.” So penned Mark Twain in The Adventures of Tom Sawyer, the book that immortalized a hill, a town, and a childhood worth remembering again and again.

[Photo caption: A statue of Tom and Huck greets visitors as they begin their ascent of Cardiff Hill. President Jimmy Carter visited Cardiff Hill with his wife Rosalynn and daughter Amy.]

The wondrous nature and beauty of Cardiff Hill is something that I hope to share with you. The hill is found in Hannibal, Missouri, Mark Twain’s home town. Hannibal is a quaint, hospitable small town with many sights worth discovering. If you have read The Adventures of Tom Sawyer, then you know the significance of Cardiff Hill. For those of you who don’t, Cardiff Hill pro[…]

[…] downtown is nestled between these two majestic bookends. Cardiff Hill is an exceptionally steep hill, covered with the lush greenery of locust trees and wildflowers. A statue of Tom Sawyer and Huck Finn stands at the foot of the hill, a testament […]

[…] dressed more fashionably than Huck, and sporting an attractive cap. He carries a bag slung over his shoulder, presumably carrying the proverbial dead cat. Huck’s hand appears to grasp Tom’s shoulder as though to say, “Hold on, Tom” while Tom is clearly stepping out toward some new adventure.

Special points of interest: • Memorial Bridge

Our Contact Details

Our contact details

Information on our International Health plans, quotation requests or literature supplies: For Gibraltar [email protected] T +350 200 77731; For Hong Kong [email protected] T +852 3478 3751; For Malta [email protected] T +350 200 77731; For Portugal [email protected] T +34 952 93 16 09; For Spain [email protected] T +34 952 93 16 09; All other countries [email protected] T +44 (0)1903 817970.

Private Client. To send us a new Application Form: [email protected], or by post (see Head Office address). Renewal queries: For Gibraltar [email protected]; For Hong Kong [email protected]; For Portugal [email protected]; For Spain [email protected]; All other countries [email protected]. To advise us of any changes to your personal or policy details: [email protected]. To make a debit/credit card payment or change your card details: T +44 (0)1903 817970.

Flying Colours. This plan provides cover specifically tailored for aviation personnel and their families: [email protected] T +44 (0)1903 817970, or visit our dedicated web page.

Corporate Clients. To send us a new Corporate Application Form: For Gibraltar [email protected] T +350 200 77731; For Malta [email protected] T +350 200 77731; For Spain [email protected] T +34 952 93 16 09; All other countries [email protected] T +44 (0)1903 817970. For renewal queries, membership amendments and invoice queries: [email protected] T +44 (0)1903

Air Transport in Wales During 2019 SB 31/2020

14 October 2020. Air transport in Wales during 2019. SB 31/2020.

About this bulletin: This statistical bulletin presents information about Cardiff International airport in 2019, the only major domestic and international airport in Wales. No data in this release relates to the coronavirus (COVID-19) pandemic. The information was provided by the Civil Aviation Authority (CAA). Further information about the source data is provided in the notes section.

Key points: The number of passengers using Cardiff International airport increased by 4.3 per cent in 2019, to 1.63 million (Chart 1). This includes both arrivals and departures. During 2019 there were 76 international destinations that operated out of Cardiff International airport, 19 fewer than in 2018. Amsterdam was the most popular international destination in 2019 and Edinburgh the most popular domestic destination (Chart 2). There were around 32,000 flights (inbound and outbound combined) at Cardiff International airport in 2019, a 2.6 per cent increase on the 2018 figures (Chart 11). After a period of exceptionally low freight movements, around 1,800 tonnes of freight were moved through Cardiff airport in 2019, an increase of 23.6 per cent compared to the previous year (Chart 12). Only 13.0 per cent of passengers travelled to Cardiff Airport on public transport in 2019.

[Chart 1: Terminal passengers at Cardiff Airport from 2006 to 2019. Series: domestic, international and total passengers. Y-axis: passenger numbers (millions). Source: WG analysis of Civil Aviation Authority data.]

In this bulletin: Long term trends; Domestic routes; International routes; Aircraft movements; Air freight; Air passenger survey; Notes.

Statistician: Melanie Brown ~ 03000 616029 ~ [email protected] Enquiries from the press: 0300 025 8099 Public enquiries: 0300 025 5050 Twitter: @StatisticsWales

Introduction: Air transport is an important driver for economic development.

1. Background Information

1. BACKGROUND INFORMATION The origins of Cardiff Metropolitan University can be traced back to 1865 when the Cardiff School of Art was first opened in the old library in Cardiff. In 1976, the School of Art, the College of Food Technology and Commerce (established 1940), the Cardiff College of Education (established 1950) and Llandaff College of Technology (established 1954) were merged to form the South Glamorgan Institute of Higher Education. The name was changed to Cardiff Institute of Higher Education in 1990. Until 1992, the institution was under the jurisdiction of South Glamorgan Council. Incorporated status was granted in 1992, this process occurring later in Wales than elsewhere in the UK. Key milestones in the development of Cardiff Metropolitan University as an autonomous institution include: 1993 Teaching Degree Awarding Powers (TDAPs) granted by the Privy Council. 1996 Became a college of the University of Wales and changed name to University of Wales Institute, Cardiff (UWIC). 2003 Became a Constituent Institution of the University of Wales. 2009 Research Degree Awarding Powers (RDAPs) granted by the Privy Council. 2011 Withdrew from the University of Wales and changed name to Cardiff Metropolitan University. With just over 1000 staff, more than 12,000 students and an annual turnover of approximately £83 million, Cardiff Metropolitan University is, in terms of size, in the middle range of the UK university sector. A recent investment of £50m in the Estates Strategy has focused on the development of a new Cardiff School of -

Guernsey, 1814-1914: Migration in a Modernising Society

GUERNSEY, 1814-1914: MIGRATION IN A MODERNISING SOCIETY. Thesis submitted for the degree of Doctor of Philosophy at the University of Leicester by Rose-Marie Anne Crossan, Centre for English Local History, University of Leicester, March 2005. UMI Number: U594527. Published by ProQuest LLC 2013. Copyright in the Dissertation held by the Author. Microform Edition © ProQuest LLC. ProQuest LLC, 789 East Eisenhower Parkway, P.O. Box 1346, Ann Arbor, MI 48106-1346.

ABSTRACT: Guernsey is a densely populated island lying 27 miles off the Normandy coast. In 1814 it remained largely French-speaking, though it had been politically British for 600 years. The island's only town, St Peter Port (which in 1814 accommodated over half the population) had during the previous century developed a thriving commercial sector with strong links to England, whose cultural influence it began to absorb. The rural hinterland was, by contrast, characterised by a traditional autarkic regime more redolent of pre-industrial France. By 1914, the population had doubled, but St Peter Port's share had fallen to 43 percent.

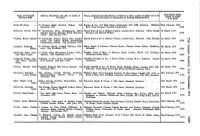

(Surname First) Address, Description and Date of Death of Deceased

| Name of Deceased (Surname first) | Address, description and date of death of Deceased | Names, addresses and descriptions of Persons to whom notices of claims are to be given and names, in parentheses, of Personal Representatives | Date before which notices of claims to be given |
| --- | --- | --- | --- |
| JONES, Eva Rose | 18 Florence Road, Norwich, Widow. 2nd December 1973. | Brutton & Co., 132 High Street, Portsmouth, PO1 2HR, Solicitors. (Midland Bank Trust Company Limited, Portsmouth.) (103) | 22nd February 1974 |
| KYRIACOU, Dennis Peter | 49 Northwood Drive, Sittingbourne, Kent, Assistant General Manager (Food Manufacturers). 30th October 1973. | Harold Stern & Co., 6 Holborn Viaduct, London E.C.1, Solicitors. (Brian George Kyriacou and Bernard Weller.) (104) | 1st March 1974 |
| VICKERS, Robert Stanley | 34 Fawnbrake Avenue, Herne Hill, London S.E.24, Fire Officer (British Broadcasting Corporation), Retired. 21st December 1972. | Harold Stern & Co., 6 Holborn Viaduct, London E.C.1, Solicitors. (Alec Nicolas) (105) | 1st March 1974 |
| GUMMING, Frederick Sidney. | 48 Chestnut Spring, Lydiard Millicent, Wiltshire. 10th November 1973. | Home Engall & Freeman, Clarence House, Clarence Street, Staines, Middlesex, Solicitors. (106) | 7th March 1974 |
| GRIFFITHS, Ellen, otherwise Ellen Louisa. | 14 Cressy Road, Hampstead, London N.W.3, Spinster. 28th September 1973. | Woolley, Tyler & Bury, 47 Museum Street, London, WC1A 1LY, Solicitors. (Alexander Henry Ross.) (107) | 7th March 1974 |
| GOODRICH, Philip, otherwise Peter, otherwise Percy. | Oaklands, 11 The Green, Southgate, London N.14, Retired. 18th April 1973. | Davidson Doughty & Co., 7 Gower Street, London W.C.1, Solicitors. (Rachel Goodrich.) (108) | 1st March 1974 |
| POWELL, Marjory Scott | Parkfield, Kingston Hill, Surrey, Spinster. 5th December 1973. | Boodle Hatfield & Co., 53 Davies Street, Berkeley Square, London, W1Y 2BL, | 4th March 1974 |

Geography Slides

United Kingdom: The official name of the UK is ‘The United Kingdom of Great Britain and Northern Ireland’. Islands of the United Kingdom: Many small islands belong to the UK, such as the Isle of Wight. Capital Cities: England, London; Scotland, Edinburgh; Wales, Cardiff; Northern Ireland, Belfast. Facts about London: London is the biggest city in Britain and Europe. 8,900,000 people live in London (2018). The Great Fire of London: In 1666 the Great Fire of London swept through central parts of London. Much of the city had to be rebuilt. Buckingham Palace: The official London home of the Queen is Buckingham Palace. Buckingham Palace has 775 rooms including 52 royal and guest bedrooms, 188 staff bedrooms and 78 bathrooms. The Houses of Parliament: The Houses of Parliament (also known as the Palace of Westminster) is the home of the UK Parliament. There are 1000 rooms, 100 staircases and 11 courtyards within the Houses of Parliament. In 1605 a group including Guy Fawkes plotted to blow up Parliament. Fortunately, they were caught before this could happen. Big Ben: The tower is known as Elizabeth Tower but most people call it Big Ben. However, Big Ben is actually the name of the 13-ton bell inside the clock. Tower Bridge: Tower Bridge, which is 244m long, was opened in 1894. It is named after the Tower of London. Edinburgh: The capital city of Scotland is Edinburgh. Facts about Edinburgh: Edinburgh is the second biggest city in Scotland. It is estimated that 489,000 people live in Edinburgh (2019). The main spoken language is English.

Family Moments Cultural Attractions Dublin

FAMILY MOMENTS CULTURAL ATTRACTIONS DUBLIN

TRINITY COLLEGE (tcd.ie): Trinity College is Ireland’s most renowned university and attracts over half a million visitors every year. Visit the historical ‘Book of Kells’, a 680-page manuscript from 800 AD. Have a stroll through the picturesque landscaped grounds, which cover 47 acres on College Green, right in the middle of Dublin city centre. Clayton Hotel Cardiff Lane is less than a 15-minute walk from the prestigious college.

CROKE PARK (crokepark.ie): The GAA grounds, also known as ‘Croker’, are a top Irish attraction. Since opening in 1884, Croke Park has played host to countless GAA matches in the Irish sports of hurling and Gaelic football. Croke Park has also hosted major international bands and musicians, including U2, One Direction, Take That, Red Hot Chili Peppers, and many more. Croke Park is just a 10-minute drive from Clayton Hotel Cardiff Lane.

THE GUINNESS STOREHOUSE (guinness-storehouse.com): One of the most popular tourist attractions in the world, with over 4 million guests since opening its doors in 2000. The storehouse consists of seven floors, surrounding a glass rooftop bar, where guests can relax and enjoy a pint of Guinness. They’ll even get the opportunity to pour one themselves after a self-guided tour of the brewery. The storehouse is just a 45-minute walk or a 30-minute journey on public transport from Clayton Hotel Cardiff Lane.

EPIC Museum (epicchq.com): You won’t find leprechauns or pots of gold here, but you’ll discover, through the stories of Irish emigrants who became scientists, politicians, poets, artists and even outlaws all over the world, that what it means to be Irish expands far beyond the borders of Ireland.

The Case for the Physician Assistant

■ PROFESSIONAL ISSUES Clinical Medicine 2012, Vol 12, No 3: 200–6

The case for the physician assistant. Nick Ross, Jim Parle, Phil Begg and David Kuhns

ABSTRACT – The NHS is facing a crisis from the combination of EWTD, MMC, the ageing population and rising expectations; thus its tradition of high quality care is under pressure. Physician assistants (PAs) are a new profession to the UK, educated to nationally set standards and, working as dependent practitioners, provide care in the medical model. PAs are currently employed by over 20 hospital Trusts as well as in primary care. They offer greater continuity than locum doctors and at considerably lower cost. PAs maintain generic competence and can therefore be utilised as required across different clinical areas. The stability of PAs in the workforce will be an additional resource for junior doctors on brief rotations. For the full benefits of PAs to be realised, and for the safety of the public, statutory registration and prescribing rights are required.

[…] to the crisis. As Farmer et al wrote in their recent report on a prospective trial of physician assistants in Scotland:6 ‘…[physician assistants are] moving from the USA to other parts of the world at this time expressly because [they] can meet the world’s current health workforce gaps’.

Background: The role of the physician assistant was developed in its modern form in the USA in the 1960s with the aim of meeting the needs of underserved populations. The profession has expanded from very small beginnings in the 1960s, and more than 4,500 physician assistants now graduate every year from more than 150 schools (including institutions such as Yale University and

FREUNDE GUTER MUSIK BERLIN e.V.

FREUNDE GUTER MUSIK BERLIN e.V. Erkelenzdamm 11 – 13 B IV • 10999 Berlin • Telefon ++49-(0)30-215 6120 [email protected] • www.freunde-guter-musik-berlin.de Works of Music by Visual Artists JANET CARDIFF & GEORGE BURES MILLER »The Murder of Crows« Mixed Media Sound Installation March 14 – May 17, 2009 Opening: Friday, March 13, 2009, 8 p.m. Press preview: Thursday, March 12, 2009, 11 a.m. Nationalgalerie im Hamburger Bahnhof Museum für Gegenwart – Berlin / Historical Hall Invalidenstr. 50-51 • 10557 Berlin Opening hours: Tue-Fri 10 a.m. – 6 p.m., Sat 11 a.m. – 8 p.m., Sun 11 a.m. – 6 p.m. With »The Murder of Crows«, their largest sound installation to date, the Canadian visual artists Janet Cardiff and George Bures Miller continue the explorations they embarked on in the mid-1990s into the sculptural and physical attributes of sound. In the otherwise empty historical hall of the Hamburger Bahnhof, 98 loudspeakers are installed. These emit the sounds of voices, music and soundscapes generated by special stereophonic recording and replay techniques, creating a composition that has a direct physical impact on the listener. The special Ambisonic soundfield system generates a greatly intensified aural and emotional space, so that the acoustic events that transpire in the three-dimensional soundspace have a startling, disconcerting immediacy. The installation is conceived like a film or a play, but one whose images and narrative structures are created by sound alone. The three-part work, composed in collaboration with Freida Abtan, Tilman Ritter and Titus Maderlechner, is 30 minutes long.