Unsupervised Mining of Analogical Frames by Constraint Satisfaction


Recommended publications


Rules Vs. Analogy in English Past Tenses: a Computational/Experimental Study, Adam Albright, Bruce Hayes

Cognition 90 (2003) 119–161, www.elsevier.com/locate/COGNIT. Rules vs. analogy in English past tenses: a computational/experimental study. Adam Albright (Department of Linguistics, University of California, Santa Cruz, Santa Cruz, CA 95064-1077, USA) and Bruce Hayes (Department of Linguistics, University of California, Los Angeles, Los Angeles, CA 90095-1543, USA). Received 7 December 2001; revised 11 November 2002; accepted 30 June 2003. Abstract: Are morphological patterns learned in the form of rules? Some models deny this, attributing all morphology to analogical mechanisms. The dual mechanism model (Pinker, S., & Prince, A. (1988). On language and connectionism: analysis of a parallel distributed processing model of language acquisition. Cognition, 28, 73–193) posits that speakers do internalize rules, but that these rules are few and cover only regular processes; the remaining patterns are attributed to analogy. This article advocates a third approach, which uses multiple stochastic rules and no analogy. We propose a model that employs inductive learning to discover multiple rules, and assigns them confidence scores based on their performance in the lexicon. Our model is supported over the two alternatives by new “wug test” data on English past tenses, which show that participant ratings of novel pasts depend on the phonological shape of the stem, both for irregulars and, surprisingly, also for regulars. The latter observation cannot be explained under the dual mechanism approach, which derives all regulars with a single rule. To evaluate the alternative hypothesis that all morphology is analogical, we implemented a purely analogical model, which evaluates novel pasts based solely on their similarity to existing verbs. -

Analogical Modeling: an Exemplar-Based Approach to Language

Analogical Modeling: An exemplar-based approach to language (HCP, Volume 10). Human Cognitive Processing is a forum for interdisciplinary research on the nature and organization of the cognitive systems and processes involved in speaking and understanding natural language (including sign language), and their relationship to other domains of human cognition, including general conceptual or knowledge systems and processes (the language and thought issue), and other perceptual or behavioral systems such as vision and non-verbal behavior (e.g. gesture). ‘Cognition’ should be taken broadly, not only including the domain of rationality, but also dimensions such as emotion and the unconscious. The series is open to any type of approach to the above questions (methodologically and theoretically) and to research from any discipline, including (but not restricted to) different branches of psychology, artificial intelligence and computer science, cognitive anthropology, linguistics, philosophy and neuroscience. It takes a special interest in research crossing the boundaries of these disciplines. Editors: Marcelo Dascal, Tel Aviv University; Raymond W. Gibbs, University of California at Santa Cruz; Jan Nuyts, University of Antwerp. Editorial address: Jan Nuyts, University of Antwerp, Dept. of Linguistics (GER), Universiteitsplein 1, B 2610 Wilrijk, Belgium. E-mail: [email protected] Editorial Advisory Board: Melissa Bowerman, Nijmegen; Wallace Chafe, Santa Barbara, CA; Philip R. Cohen, Portland, OR; Antonio Damasio, Iowa City, IA; Morton Ann Gernsbacher, Madison, WI; David McNeill, Chicago, IL; Eric Pederson, Eugene, OR; François Recanati, Paris; Sally Rice, Edmonton, Alberta; Benny Shanon, Jerusalem; Lokendra Shastri, Berkeley, CA; Dan Slobin, Berkeley, CA; Paul Thagard, Waterloo, Ontario. Volume 10. Analogical Modeling: An exemplar-based approach to language. Edited by Royal Skousen, Deryle Lonsdale and Dilworth B. -

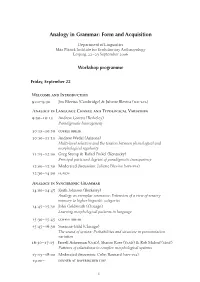

Analogy in Grammar: Form and Acquisition

Analogy in Grammar: Form and Acquisition. Department of Linguistics, Max Planck Institute for Evolutionary Anthropology, Leipzig, 22–23 September 2006. Workshop programme. Friday, September 22: Jim Blevins (Cambridge) & Juliette Blevins (MPI-EVA); Andrew Garrett (Berkeley), Paradigmatic heterogeneity; Andrew Wedel (Arizona), Multi-level selection and the tension between phonological and morphological regularity; Greg Stump & Rafael Finkel (Kentucky), Principal parts and degrees of paradigmatic transparency; Moderated discussion: Juliette Blevins (MPI-EVA); Keith Johnson (Berkeley), Analogy as exemplar resonance: Extension of a view of sensory memory to higher linguistic categories; John Goldsmith (Chicago), Learning morphological patterns in language; Susanne Gahl (Chicago), The sound of syntax: Probabilities and structure in pronunciation variation; Farrell Ackerman (UCSD), Sharon Rose (UCSD) & Rob Malouf (SDSU), Patterns of relatedness in complex morphological systems; Moderated discussion: Colin Bannard (MPI-EVA). Saturday, September 23: LouAnn Gerken (Arizona), Linguistic generalization by human infants; Andrea Krott (Birmingham), Banana shoes and bear tables: Children’s processing and interpretation of noun-noun compounds; Mike Tomasello (MPI-EVA), Analogy in the acquisition of constructions; Rens Bod (St. Andrews), Acquisition of syntax by analogy: Computation of new utterances out -

Review of Dieter Wanner's the Power of Analogy: an Essay on Historical Linguistics

Portland State University, PDXScholar. World Languages and Literatures Faculty Publications and Presentations, World Languages and Literatures, 2007. Review of Dieter Wanner's The Power of Analogy: An Essay on Historical Linguistics. Eva Núñez-Méndez, Portland State University, [email protected] Follow this and additional works at: https://pdxscholar.library.pdx.edu/wll_fac Part of the Comparative and Historical Linguistics Commons, and the First and Second Language Acquisition Commons. Let us know how access to this document benefits you. Citation Details: Núñez-Méndez, Eva. "Review of Dieter Wanner's The Power of Analogy: An Essay on Historical Linguistics," Linguistica e Filologia, 25 (2007), p. 266-267. This Book Review is brought to you for free and open access. It has been accepted for inclusion in World Languages and Literatures Faculty Publications and Presentations by an authorized administrator of PDXScholar. Please contact us if we can make this document more accessible: [email protected]. Linguistica e Filologia 25 (2007). WANNER, Dieter, The Power of Analogy: An Essay on Historical Linguistics, Mouton de Gruyter, New York 2006, pp. xiv + 330, ISBN 978-3-11-018873-8. Following the division predicated in the Saussurean dichotomy between synchrony and diachrony, this book starts by arguing that this antinomy between the formal and the historical should be relegated to the periphery. Combining diachronic with synchronic linguistic thought, Wanner proposes two adoptions: first, a restricted theoretical base in the form of Concrete Minimalism, and second, analogical assimilations as formulated in Analogical Modeling. These two perspectives provide the basis for redirecting the theory in a cognitive direction and focus on the shape of linguistic material and the impact of the historical components of language. -

Chapter 10 Analogy Behrens Final

To appear in: Marianne Hundt, Sandra Mollin and Simone Pfenninger (eds.) (2016). The Changing English Language: Psycholinguistic Perspectives. Cambridge: Cambridge University Press. 10 The role of analogy in language acquisition. Heike Behrens. 10.1 Introduction. Analogical reasoning is a powerful processing mechanism that allows us to discover similarities, form categories and extend them to new categories. Since similarities can be detected at the concrete and the relational levels, analogy is in principle unbounded. The possibility of multiple mapping makes analogy a very powerful mechanism, but leads to the problem that it is hard to predict which analogies will actually be drawn. This indeterminacy results in the fact that analogy is often invoked as an explanandum in many studies in linguistics, language acquisition, and the study of language change, but that the underlying processes are hardly ever explained. When checking the index of current textbooks on cognitive linguistics and language acquisition, the keyword “analogy” is almost completely absent, and the concept is typically evoked ad hoc to explain a certain phenomenon. In contrast, in work on language change, different types of analogical change have been identified and have become technical terms to refer to specific phenomena, such as analogical levelling when irregular forms become regularized or analogical extension when new items become part of a category (see Bybee 2010: 66-69, for a historical review of the use of the term in language change and grammaticalization see Traugott & Trousdale 2014: 37-38). In this paper, I will discuss the possible effects of analogical reasoning for linguistic category formation from an emergentist and usage-based perspective, and then characterize the interaction of different processes of analogical reasoning in language development, with a special focus on regular/irregular morphology and argument structure. -

Paradigm Uniformity and Analogy: the Capitalistic Versus Militaristic Debate

International Journal of English Studies (IJES), University of Murcia, www.um.es/ijes. Paradigm Uniformity and Analogy: The Capitalistic versus Militaristic Debate. David Eddington, Brigham Young University. ABSTRACT: In American English, /t/ in capitalistic is generally flapped while in militaristic it is not due to the influence of capi[ɾ]al and mili[tʰ]ary. This is called Paradigm Uniformity or PU (Steriade, 2000). Riehl (2003) presents evidence to refute PU which when reanalyzed supports PU. PU is thought to work in tandem with a rule of allophonic distribution, the nature of which is debated. An approach is suggested that eliminates the need for the rule versus PU dichotomy; allophonic distribution is carried out by analogy to stored items in the mental lexicon. Therefore, the influence of the pronunciation of capital on capitalistic is determined in the same way as the pronunciation of /t/ in monomorphemic words such as Mediterranean is. A number of analogical computer simulations provide evidence to support this notion. KEYWORDS: analogy, flapping, tapping, American English, phoneme /t/, Analogical Modeling of Language, allophonic distribution, Paradigm Uniformity. Address for correspondence: David Eddington. Brigham Young University. Department of Linguistics and English Language. 4064 JFSB. Provo, UT 84602. Phone: (801) 422-7452. Fax: (801) 422-0906. e-mail: [email protected] © Servicio de Publicaciones. Universidad de Murcia. All rights reserved. IJES, vol. 6 (2), 2006, pp. 1-18. I. INTRODUCTION. In traditional approaches to phonology, all surface forms are generated from abstract underlying forms. However, the pre-generative idea that surface forms can influence other surface forms, (e.g. -

The Evolution of Case Grammar

The evolution of case grammar. Remi van Trijp. Language Science Press. Computational Models of Language Evolution 4. Editors: Luc Steels, Remi van Trijp. In this series: 1. Steels, Luc. The Talking Heads Experiment: Origins of words and meanings. 2. Vogt, Paul. How mobile robots can self-organize a vocabulary. 3. Bleys, Joris. Language strategies for the domain of colour. 4. van Trijp, Remi. The evolution of case grammar. 5. Spranger, Michael. The evolution of grounded spatial language. ISSN: 2364-7809. Remi van Trijp. 2017. The evolution of case grammar (Computational Models of Language Evolution 4). Berlin: Language Science Press. This title can be downloaded at: http://langsci-press.org/catalog/book/52 © 2017, Remi van Trijp. Published under the Creative Commons Attribution 4.0 Licence (CC BY 4.0): http://creativecommons.org/licenses/by/4.0/ ISBN: 978-3-944675-45-9 (Digital), 978-3-944675-84-8 (Hardcover), 978-3-944675-85-5 (Softcover). DOI:10.17169/langsci.b52.182. Cover and concept of design: Ulrike Harbort. Typesetting: Sebastian Nordhoff, Felix Kopecky, Remi van Trijp. Proofreading: Benjamin Brosig, Marijana Janjic, Felix Kopecky. Fonts: Linux Libertine, Arimo, DejaVu Sans Mono. Typesetting software: XeLaTeX. Language Science Press, Habelschwerdter Allee 45, 14195 Berlin, Germany. langsci-press.org. Storage and cataloguing done by FU Berlin. Language Science Press has no responsibility for the persistence or accuracy of URLs for external or third-party Internet websites referred to in this publication, and does not guarantee that any content on such websites is, or will remain, accurate or appropriate. -

Spanish Velar-Insertion and Analogy

Spanish Velar-insertion and Analogy: A Usage-based Diachronic Analysis DISSERTATION Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University By Steven Richard Fondow, B.A, M.A Graduate Program in Spanish and Portuguese The Ohio State University 2010 Dissertation Committee: Dr. Dieter Wanner, Advisor Dr. Brian Joseph Dr. Terrell Morgan Dr. Wayne Redenbarger Copyright by Steven Richard Fondow 2010 Abstract The theory of Analogical and Exemplar Modeling (AEM) suggests renewed discussion of the formalization of analogy and its possible incorporation in linguistic theory. AEM is a usage-based model founded upon Exemplar Modeling (Bybee 2007, Pierrehumbert 2001) that utilizes several principles of the Analogical Modeling of Language (Skousen 1992, 1995, 2002, Wanner 2005, 2006a), including the ‘homogeneous supracontext’ of the Analogical Model (AM), frequency effects and ‘random-selection’, while also highlighting the speaker’s central and ‘immanent’ role in language (Wanner 2006a, 2006b). Within AEM, analogy is considered a cognitive means of organizing linguistic information. The relationship between input and stored exemplars is established according to potentially any and all salient similarities, linguistic or otherwise. At the same time, this conceptualization of analogy may result in language change as a result of such similarities or variables, as they may be used in the formation of an AM for the input. Crucially, the inflectional paradigm is argued to be a possible variable since it is a higher-order unit of linguistic structure within AEM. This investigation analyzes the analogical process of Spanish velar-insertion according to AEM. -

Modeling Analogy As Probabilistic Grammar

Modeling analogy as probabilistic grammar. Adam Albright, MIT. March 2008. (Thanks to Jim Blevins, Bruce Hayes, Donca Steriade, participants of the Analogy in Grammar Workshop (Leipzig, 22–23 September 2006), and especially to two anonymous reviewers, for helpful comments and suggestions; all remaining errors are, of course, my own.) 1 Introduction. Formal implemented models of analogy face two opposing challenges. On the one hand, they must be powerful and flexible enough to handle gradient and probabilistic data. This requires an ability to notice statistical regularities at many different levels of generality, and in many cases, to adjudicate between multiple conflicting patterns by assessing the relative strength of each, and to generalize them to novel items based on their relative strength. At the same time, when we examine evidence from language change, child errors, psycholinguistic experiments, we find that only a small fraction of the logically possible analogical inferences are actually attested. Therefore, an adequate model of analogy must also be restrictive enough to explain why speakers generalize certain statistical properties of the data and not others. Moreover, in the ideal case, restrictions on possible analogies should follow from intrinsic properties of the architecture of the model, and not need to be stipulated post hoc. Current computational models of analogical inference in language are still rather rudimentary, and we are certainly nowhere near possessing a model that captures not only the statistical abilities of speakers, but also their preferences and limitations. Nonetheless, the past two decades have seen some key advances. Work in frameworks such as neural networks (Rumelhart and McClelland 1987; MacWhinney and Leinbach 1991; Daugherty and Seidenberg 1994, and much subsequent work) and Analogical Modeling of Language (AML; Skousen 1989) have focused primarily on the first challenge, tackling the gradience of -

From Exemplar to Grammar: a Probabilistic Analogy-Based Model of Language Learning

Cognitive Science 33 (2009) 752–793. Copyright © 2009 Cognitive Science Society, Inc. All rights reserved. ISSN: 0364-0213 print / 1551-6709 online. DOI: 10.1111/j.1551-6709.2009.01031.x. From Exemplar to Grammar: A Probabilistic Analogy-Based Model of Language Learning. Rens Bod, Institute for Logic, Language and Computation, University of Amsterdam. Received 19 November 2007; received in revised form 24 September 2008; accepted 25 September 2008. Abstract: While rules and exemplars are usually viewed as opposites, this paper argues that they form end points of the same distribution. By representing both rules and exemplars as (partial) trees, we can take into account the fluid middle ground between the two extremes. This insight is the starting point for a new theory of language learning that is based on the following idea: If a language learner does not know which phrase-structure trees should be assigned to initial sentences, s/he allows (implicitly) for all possible trees and lets linguistic experience decide which is the “best” tree for each sentence. The best tree is obtained by maximizing “structural analogy” between a sentence and previous sentences, which is formalized by the most probable shortest combination of subtrees from all trees of previous sentences. Corpus-based experiments with this model on the Penn Treebank and the Childes database indicate that it can learn both exemplar-based and rule-based aspects of language, ranging from phrasal verbs to auxiliary fronting. By having learned the syntactic structures of sentences, we have also learned the grammar implicit in these structures, which can in turn be used to produce new sentences. -

Learning and Learnability in Phonology

Learning and Learnability in Phonology. The MIT Faculty has made this article openly available. Please share how this access benefits you. Your story matters. Citation: Albright, Adam and Hayes, Bruce. 2011. "Learning and Learnability in Phonology." Handbook of Phonological Theory. As Published: http://dx.doi.org/10.1002/9781444343069.ch20 Publisher: Wiley-Blackwell. Version: Author's final manuscript. Citable link: https://hdl.handle.net/1721.1/128534 Terms of Use: Creative Commons Attribution-Noncommercial-Share Alike. Detailed Terms: http://creativecommons.org/licenses/by-nc-sa/4.0/ Learning and learnability in phonology. Adam Albright, MIT; Bruce Hayes, UCLA. To appear in John Goldsmith, Jason Riggle, and Alan Yu, eds. Handbook of Phonological Theory. Blackwell/Wiley. 1. Chapter content. A central scientific problem in phonology is how children rapidly and accurately acquire the intricate structures and patterns seen in the phonology of their native language. The solution to this problem lies in part in the discovery of the right formal theory of phonology, but another crucial element is the development of theories of learning, often in the form of machine-implemented models that attempt to mimic human children’s ability. This chapter is a survey of work in this area. 2. Defining the problem. Before we can develop a theory of how children learn phonological systems, we must first characterize the knowledge that is to be acquired. Traditionally, phonological analyses have focused on describing the set of attested words, developing rules or constraints that distinguish sequences that occur from those that do not. Although such analyses have proven extremely valuable in developing a set of theoretical tools for capturing phonologically relevant distinctions, it is risky to assume that human learners internalize every pattern that can be described by the theory. -

The Analogical Speaker Or Grammar Put in Its Place René-Joseph Lavie

The Analogical Speaker or grammar put in its place. René-Joseph Lavie. To cite this version: René-Joseph Lavie. The Analogical Speaker or grammar put in its place. Linguistique. Université de Nanterre - Paris X, 2003. Français. tel-00144458v1. HAL Id: tel-00144458, https://tel.archives-ouvertes.fr/tel-00144458v1. Submitted on 3 May 2007 (v1), last revised 5 May 2014 (v2). HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. UNIVERSITE DE PARIS 10 NANTERRE, FRANCE. Département des Sciences du Language. Laboratoire: MoDyCo, UMR 7114. Ecole doctorale: Connaissance et Culture. René Joseph Lavie. The Analogical Speaker or grammar put in its place. Doctoral dissertation for the grade of Doctor in Language Sciences, defended publicly on November 18th, 2003. Translated by the author with contributions of Josh Parker. Original title: Le Locuteur Analogique ou la grammaire mise à sa place. Jury: Sylvain Auroux (Research Director, CNRS; Ecole Normale Supérieure, Lyon), Marcel Cori (Professor, Université de Paris 10 Nanterre), Jean-Gabriel Ganascia (Professor, Université Pierre et Marie Curie (Paris 6)), Bernard Laks (Professor, Université de Paris 10 Nanterre), Bernard Victorri (Research Director, CNRS, Laboratoire LATTICE). Director: Bernard Laks. 2004 02 01. Content: INTRODUCTION