A Technical Introduction to Digital Video

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Iagrams TH EW)

[Diagram: test points TP01 to TP35, with power lines and signal lines marked]

Alignment and Adjustments

4-4 FOCUS Adjustment
1. Input a black and white signal.
2. Adjust the tuning control for the clearest picture.
3. Adjust the FOCUS control for well-defined scanning lines in the center area of the screen.

4-5 SCREEN Adjustment
1. Input Toshiba Pattern.
2. Enter “Service Mode”. (Refer to “4-8-1 Service Mode”.)
3. Select “G2-Adjust”.
4. Set the values as below.

Table 1. Screen Adjustment Table COLR G B No INCH / CRT REGION IBRM WDRV CDL (Smallest Value) 1 14” / SDI 205 35 100 100 Normal 220 35 180 100 2 15PF / SDI 215 35 100 100 CIS 3 21” 1.7R / SDI 220 35 180 100 4 21” 1.7R / JCT 220 35 200 150 5 21PF / TSB 220 35 180 65 Normal 6 21PF / LG 230 35 230 65 7 21PF / SDI 220 35 210 65 8 25PF / SDI 210 35 160 120 9 29” 1.3R / SDI 200 35 170 150

5. Turn the SCREEN VR until “MRCR G B” and “MRWDG” are green and their values are about 100. (An incorrect SCREEN voltage may cause “MRCR G B” and “MRWDG” to turn red.)

4-2 Samsung Electronics Alignment and Adjustments 4-6 E2PROM (IC902) Replacement 1. -

AXI Reference Guide

AXI Reference Guide UG761 (v13.4) January 18, 2012 Xilinx is providing this product documentation, hereinafter “Information,” to you “AS IS” with no warranty of any kind, express or implied. Xilinx makes no representation that the Information, or any particular implementation thereof, is free from any claims of infringement. You are responsible for obtaining any rights you may require for any implementation based on the Information. All specifications are subject to change without notice. XILINX EXPRESSLY DISCLAIMS ANY WARRANTY WHATSOEVER WITH RESPECT TO THE ADEQUACY OF THE INFORMATION OR ANY IMPLEMENTATION BASED THEREON, INCLUDING BUT NOT LIMITED TO ANY WARRANTIES OR REPRESENTATIONS THAT THIS IMPLEMENTATION IS FREE FROM CLAIMS OF INFRINGEMENT AND ANY IMPLIED WARRANTIES OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. Except as stated herein, none of the Information may be copied, reproduced, distributed, republished, downloaded, displayed, posted, or transmitted in any form or by any means including, but not limited to, electronic, mechanical, photocopying, recording, or otherwise, without the prior written consent of Xilinx. © Copyright 2012 Xilinx, Inc. XILINX, the Xilinx logo, Virtex, Spartan, Kintex, Artix, ISE, Zynq, and other designated brands included herein are trademarks of Xilinx in the United States and other countries. ARM® and AMBA® are registered trademarks of ARM in the EU and other countries. All other trademarks are the property of their respective owners. Revision History The following table shows the revision history for this document: Date Version Description of Revisions 03/01/2011 13.1 Second Xilinx release. -

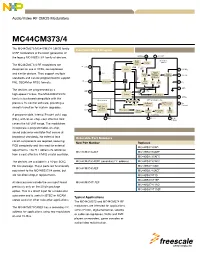

MC44CM373/4 Audio/Video RF CMOS Fact Sheet

Audio/Video RF CMOS Modulators MC44CM373/4

The MC44CM373/MC44CM374 CMOS family of RF modulators is the latest generation of the legacy MC44BS373/4 family of devices. The MC44CM373/4 RF modulators are designed for use in VCRs, set-top boxes and similar devices. They support multiple standards and can be programmed to support PAL, SECAM or NTSC formats. The devices are programmed by a high-speed I2C bus. The MC44CM373/374 family is backward compatible with the previous I2C control software, providing a smooth transition for system upgrades. A programmable, internal Phase-Lock Loop (PLL), with an on-chip, cost-effective tank covers the full UHF range. The modulators incorporate a programmable, on-chip, sound subcarrier oscillator that covers all broadcast standards. No external tank circuit components are required, reducing PCB complexity and the need for external adjustments. The PLL obtains its reference from a cost-effective 4 MHz crystal oscillator. The devices are available in a 16-pin SOIC, Pb-free package. These parts are functionally equivalent to the MC44BS373/4 series, but are not direct drop-in replacements. All devices now include the aux input found previously only on the 20-pin package option. This is a direct input for a modulated subcarrier and is useful in BTSC or NICAM stereo sound or other subcarrier applications. The MC44CM373CASEF has a secondary I2C address for applications using two modulators on one I2C Bus.

Orderable Part Numbers (columns: New Part Number, Replaces; listed in extraction order): MC44BS373CAD, MC44CM373CAEF, MC44BS373CAEF, MC44BS373CAFC, MC44CM373CASEF (secondary I2C address), MC44BS373CAFC, MC44BS374CAD, MC44CM374CAEF, MC44BS374CAEF, MC44BS374T1D, MC44BS374T1EF, MC44CM374T1AEF, MC44BS374T1AD, MC44BS374T1AEF.

Typical Applications: The MC44CM373 and MC44CM374 RF modulators are intended for applications within IP/DSL, digital terrestrial, satellite or cable set-top boxes, VCRs and DVD -

High Definition Analog Component Measurements

Application Note: High Definition Analog Component Measurements. Requirements for Measuring Analog Component HD Signals for Video Devices

The transition to digital has enabled great strides in the processing of video signals, allowing a variety of techniques to be applied to the video image. Despite these benefits, the final signal received by the customer is still converted to an analog signal for display on a picture monitor. With the proliferation of a wide variety of digital devices - set-top boxes, Digital Versatile Disk (DVD) players and PC cards - comes a wide range of video formats in addition to the standard composite output. It is therefore necessary to understand the requirements for measuring analog component High Definition (HD) signals in order to test the performance of these devices.

When an image is captured by a color camera and converted from light to an electrical signal, the signal is comprised of three components - Red, Green and Blue (RGB). From the combination of these three signals, a representation of the original image can be conveyed to a color display. The various video processing systems within the signal paths need to process the three components identically, in order not to introduce any amplitude or channel timing errors. Each of the three components R’G’B’ (the ( ’ ) indicates that the signal has been gamma corrected) has identical bandwidth, which increases complexity within the digital domain.

Y’, R’-Y’, B’-Y’, Commonly Used for Analog Component Analog Video

| Format | 1125/60/2:1, 750/60/1:1 | 525/59.94/1:1, 625/50/1:1 |
| --- | --- | --- |
| Y’ | 0.2126 R’ + 0.7152 G’ + 0.0722 B’ | 0.299 R’ + 0.587 G’ + 0.114 B’ |
| R’-Y’ | 0.7874 R’ - 0.7152 G’ - 0.0722 B’ | 0.701 R’ - 0.587 G’ - 0.114 B’ |
| B’-Y’ | - 0.2126 R’ - 0.7152 G’ + 0.9278 B’ | - 0.299 R’ - 0.587 G’ + 0.886 B’ |

Table 1. -
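The color-difference equations in the excerpt above can be checked numerically. The following is a minimal sketch, assuming gamma-corrected inputs normalized to the range 0 to 1; the function and constant names are illustrative and do not come from the application note.

```python
# Luma weights (R', G', B') taken from the two columns of the table above.
HD_WEIGHTS = (0.2126, 0.7152, 0.0722)  # 1125/60/2:1 and 750/60/1:1
SD_WEIGHTS = (0.299, 0.587, 0.114)     # 525/59.94 and 625/50


def color_difference(r, g, b, weights):
    """Return (Y', R'-Y', B'-Y') for gamma-corrected inputs in the range 0..1."""
    kr, kg, kb = weights
    y = kr * r + kg * g + kb * b
    return y, r - y, b - y


if __name__ == "__main__":
    # Pure red reproduces the R' coefficients of each row of the table.
    for name, weights in (("HD", HD_WEIGHTS), ("SD", SD_WEIGHTS)):
        y, r_y, b_y = color_difference(1.0, 0.0, 0.0, weights)
        print(f"{name}: Y'={y:.4f}  R'-Y'={r_y:.4f}  B'-Y'={b_y:.4f}")
```

Feeding a pure primary into the function returns one column of coefficients from the table, which is a quick way to confirm that the R’-Y’ and B’-Y’ rows are simply the primary minus the luma row.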

Understanding HD and 3G-SDI Video Poster

Understanding HD & 3G-SDI Video

EYE DIAGRAM

The eye diagram is constructed by overlaying portions of the sampled data stream until enough data transitions produce the familiar display. A unit interval (UI) is defined as the time between two adjacent signal transitions, which is the reciprocal of clock frequency. UI is 3.7 ns for digital component 525 / 625 (SMPTE 259M), 673.4 ps for digital high-definition (SMPTE 292) and 336.7 ps for 3G-SDI serial digital (SMPTE 424M) as shown in Table 1. A serial receiver determines if the signal is “high” or “low” in the center of each eye, and detects the serial data.

amplitude is important because of its relation to noise, and because the receiver estimates the required high-frequency compensation (equalization) based on the remaining half-clock-frequency energy as the signal arrives. Incorrect amplitude at the sending end could result in an incorrect equalization applied at the receiving end, thus causing signal distortions. Overshoot of the rising and falling edge should not exceed 10% of the waveform

Y', R'-Y', B'-Y', COMMONLY USED FOR ANALOG COMPONENT ANALOG VIDEO

| Format | 1125/60/2:1, 750/60/1:1 | 525/59.94/2:1, 625/50/2:1, 1250/50/2:1 |
| --- | --- | --- |
| Y' | 0.2126 R' + 0.7152 G' + 0.0722 B' | 0.299 R' + 0.587 G' + 0.114 B' |
| R'-Y' | 0.7874 R' - 0.7152 G' - 0.0722 B' | 0.701 R' - 0.587 G' - 0.114 B' |
| B'-Y' | -0.2126 R' - 0.7152 G' + 0.9278 B' | -0.299 R' - 0.587 G' + 0.886 B' |

DIGITAL SIGNAL TIMING: HORIZONTAL LINE TIMING

Digital video synchronization is provided by End of Active Video (EAV) and Start of Active Video (SAV) sequences which start with a unique three word pattern: 3FFh (all bits in the word set to 1), 000h (all 0’s), 000h (all 0’s), followed by a fourth “XYZ” word whose -
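Two statements in the excerpt above lend themselves to a short numerical check: the unit interval as the reciprocal of the clock frequency, and the three-word pattern that opens every EAV and SAV sequence. The sketch below is illustrative only. The nominal bit rates (270 Mb/s, 1.485 Gb/s, 2.97 Gb/s) are an assumption added here, since the excerpt states only the resulting UI values, and the function names are hypothetical.

```python
# Nominal serial bit rates in bits per second. These figures are an
# assumption for illustration; the excerpt gives only the UI values.
BIT_RATES = {
    "SD-SDI (SMPTE 259M)": 270e6,
    "HD-SDI (SMPTE 292)": 1.485e9,
    "3G-SDI (SMPTE 424M)": 2.97e9,
}

# Three-word pattern that starts both EAV and SAV (10-bit words).
TRS_PREAMBLE = (0x3FF, 0x000, 0x000)


def unit_interval_seconds(bit_rate):
    """Unit interval: the reciprocal of the serial clock frequency."""
    return 1.0 / bit_rate


def find_trs(words):
    """Return the indices at which the 3FFh, 000h, 000h pattern begins."""
    n = len(TRS_PREAMBLE)
    return [i for i in range(len(words) - n + 1)
            if tuple(words[i:i + n]) == TRS_PREAMBLE]


if __name__ == "__main__":
    for name, rate in BIT_RATES.items():
        print(f"{name}: UI = {unit_interval_seconds(rate) * 1e12:.1f} ps")
```

The word that follows each match is the fourth “XYZ” word mentioned in the excerpt; decoding it is left out here because the excerpt is truncated before describing its contents.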

VPC 3205C, VPC 3215C Video Processor Family

PRELIMINARY DATA SHEET MICRONAS VPC 3205C, VPC 3215C Video Processor Family Edition Oct. 19, 1998 6251-457-2PD

Contents

1. Introduction
1.1. System Architecture
1.2. Video Processor Family
1.3. VPC Applications
2. Functional Description
2.1. Analog Front-End
2.1.1. Input Selector
2.1.2. Clamping
2.1.3. Automatic Gain Control
2.1.4. Analog-to-Digital Converters
2.1.5. Digitally Controlled Clock Oscillator
2.1.6. Analog Video Output
2.2. Adaptive Comb Filter
2.3. Color Decoder
2.3.1. IF-Compensation
2.3.2. Demodulator
2.3.3. Chrominance Filter
2.3.4. Frequency Demodulator
2.3.5. Burst Detection
2.3.6. Color Killer Operation
2.3.7. PAL Compensation/1-H Comb Filter
2.3.8. Luminance Notch Filter
2.3.9. Skew Filtering
2.4. Horizontal Scaler
2.5. Blackline Detector
2.6. Control and Data Output Signals
2.6.1. Line-Locked Clock Generation
2.6.2. Sync Signals
2.6.3. DIGIT3000 Output Format
2.6.4. Line-Locked 4:2:2 Output Format
2.6.5. Line-Locked 4:1:1 Output Format
2.6.6. Output Code Levels
2.6.7. Output Signal Levels
2.6.8. Test Pattern Generator
2.6.9. Priority Bus Codec
2.7. -

MICRONAS VDP 31xxB Video Processor Family

PRELIMINARY DATA SHEET MICRONAS VDP 31xxB Video Processor Family Edition Sept. 25, 1998 6251-437-2PD

Contents

1. Introduction
1.1. VDP Applications
2. Functional Description
2.1. Analog Front-End
2.1.1. Input Selector
2.1.2. Clamping
2.1.3. Automatic Gain Control
2.1.4. Analog-to-Digital Converters
2.1.5. ADC Range
2.1.6. Digitally Controlled Clock Oscillator
2.1.7. Analog Video Output
2.2. Adaptive Comb Filter
2.3. Color Decoder
2.3.1. IF-Compensation
2.3.2. Demodulator
2.3.3. Chrominance Filter
2.3.4. Frequency Demodulator
2.3.5. Burst Detection
2.3.6. Color Killer Operation
2.3.7. PAL Compensation/1-H Comb Filter
2.3.8. Luminance Notch Filter
2.3.9. Skew Filtering
2.4. Horizontal Scaler
2.5. Black-Line Detector
2.6. Test Pattern Generator
2.7. Video Sync Processing
2.8. Display Part
2.8.1. Luma Contrast Adjustment
2.8.2. Black Level Expander
2.8.3. Dynamic Peaking
2.8.4. Digital Brightness Adjustment
2.8.5. Soft Limiter
2.8.6. Chroma Input
2.8.7. Chroma Interpolation
2.8.8. Chroma Transient Improvement
2.8.9. Inverse Matrix
2.8.10. RGB Processing
2.8.11. -

NTSC Specifications

NTSC Modulation Standard

The Impressionistic Era of TV. It's Never The Same Color!

NTSC is the first analog color TV system realized that is backward compatible with the existing B & W signal. To combine a Chroma signal with the existing Luma (Y) signal, a quadrature sub-carrier Chroma signal is used. On the Cartesian grid, the x and y axes are defined as B−Y and R−Y respectively. When they are transmitted along with the Luma (Y), the G−Y signal can be recovered from the B−Y and R−Y signals.

Matrixing

Let:
R = Red, G = Green, B = Blue. Each ranges from 0 to 1.
Y = Matrixed B & W Luma sub-channel.
U = Matrixed Blue Chroma sub-channel. U: #2900FC, HSV hue 249.76°; −U: #D3FC00, HSV hue 69.76°.
V = Matrixed Red Chroma sub-channel. V: #FF0056, HSV hue 339.76°; −V: #00FFA9, HSV hue 159.76°.
W = Matrixed Green Chroma sub-channel. W: #1BFA00, HSV hue 113.52°; −W: #DF00FA, HSV hue 293.52°.

Enhanced channels:
I = Matrixed Skin Chroma sub-channel. I: #FC6600, HSV hue 24.29°; −I: #0096FC, HSV hue 204.29°.
Q = Matrixed Purple Chroma sub-channel. Q: #8900FE, HSV hue 272.36°; −Q: #75FE00, HSV hue 92.36°.

We have:
Y = 0.299 × R + 0.587 × G + 0.114 × B
B − Y = −0.299 × R − 0.587 × G + 0.886 × B
R − Y = 0.701 × R − 0.587 × G − 0.114 × B
G − Y = −0.299 × R + 0.413 × G − 0.114 × B = −0.194208 × (B − Y) − 0.509370 × (R − Y) (full precision: −0.1942078377, −0.5093696834)

Encode:
If (quadrature sub-carrier):
U[x] = 0.492111 × (B − Y) @ 0° (full precision: 0.4921110411)
V[y] = 0.877283 × (R − Y) @ 90° (full precision: 0.8772832199)
Then:
W = 1.424415 × (G − Y) @ 235.796°
Chroma Vector = √(U² + V²)
Chroma Hue θ = aTan2(V, U) [Radians]. If θ < 0 then add 2π [360°].

Decode (SyncDet = synchronous detection):
U: B − Y = SyncDet @ 0.000° ÷ 0.492111
V: R − Y = SyncDet @ 90.000° ÷ 0.877283
W: G − Y = SyncDet @ 235.796° ÷ 1.424415 (full precision: 1.4244145537, 235.79647610°)
or G − Y = −0.394642 × (B − Y) − 0.580622 × (R − Y) (full precision: −0.3946423068, −0.5806217020)

These scaling factors are for the quadrature Chroma signal before the 0.492111 & 0.877283 unscaling factors are applied to the B−Y & R−Y axes respectively. -
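The matrixing, encode and decode relations above can be exercised end to end. The following is a minimal sketch using the coefficients quoted in the excerpt, with R, G and B assumed to lie in the range 0 to 1. It works on the baseband U and V values only and does not model the sub-carrier or synchronous detection; the function names are illustrative.

```python
import math

U_SCALE = 0.492111  # scaling applied to B - Y
V_SCALE = 0.877283  # scaling applied to R - Y


def encode(r, g, b):
    """Return (Y, U, V) for R, G, B each in the range 0..1."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return y, U_SCALE * (b - y), V_SCALE * (r - y)


def chroma_polar(u, v):
    """Return (magnitude, hue in degrees within 0..360) of the chroma vector."""
    theta = math.atan2(v, u)
    if theta < 0:
        theta += 2 * math.pi  # wrap negative angles, as in the excerpt
    return math.hypot(u, v), math.degrees(theta)


def decode(y, u, v):
    """Recover (R, G, B) from Y, U, V by undoing the scaling and matrixing."""
    b_y = u / U_SCALE
    r_y = v / V_SCALE
    # G - Y recovered from the unscaled B - Y and R - Y signals.
    g_y = -0.194208 * b_y - 0.509370 * r_y
    return y + r_y, y + g_y, y + b_y


if __name__ == "__main__":
    y, u, v = encode(0.2, 0.5, 0.8)
    magnitude, hue = chroma_polar(u, v)
    print(f"Y={y:.4f} U={u:.4f} V={v:.4f} |C|={magnitude:.4f} hue={hue:.2f} deg")
    print("decoded RGB:", tuple(round(c, 4) for c in decode(y, u, v)))
```

Passing the pair (−0.394642, −0.580622) to `chroma_polar` gives an angle of about 235.796° and a magnitude whose reciprocal is about 1.4244, which matches the W axis angle and gain quoted in the excerpt.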

ADV7177/ADV7178

Integrated Digital CCIR-601 to PAL/NTSC Video Encoder ADV7177/ADV7178

FEATURES: ITU-R BT601/656 YCrCb to PAL/NTSC video encoder; High quality, 9-bit video DACs; Integral nonlinearity <1 LSB at 9 bits; NTSC-M, PAL-M/N, PAL-B/D/G/H/I; Single 27 MHz crystal/clock required (×2 oversampling); 75 dB video SNR; 32-bit direct digital synthesizer for color subcarrier; Multistandard video output support: Composite (CVBS), Component S-video (Y/C), Component YUV or RGB; Video input data port supports: CCIR-656 4:2:2 8-bit parallel input format, 4:2:2 16-bit parallel input format; Full video output drive or low signal drive capability: 34.7 mA max into 37.5 Ω (doubly terminated 75 R), 5 mA min with external buffers; Programmable simultaneous composite and S-VHS (VHS) Y/C or RGB (SCART)/YUV video outputs; Programmable luma filters (low-pass/notch/extended); Programmable VBI (vertical blanking interval); Color-signal control/burst-signal control; Interlaced/noninterlaced operation; Complete on-chip video timing generator; OSD support (ADV7177 only); Programmable multimode master/slave operation; Macrovision AntiTaping Rev. 7.01 (ADV7178 only) [1]; Closed captioning support; On-board voltage reference; 2-wire serial MPU interface (I2C®-compatible); Single-supply 5 V or 3 V operation; Small 44-lead MQFP package; Synchronous 27 MHz/13.5 MHz clock output.

APPLICATIONS: MPEG-1 and MPEG-2 video, DVD, digital satellite, cable systems (set-top boxes/IRDs), digital TVs, CD video/karaoke, video games, PC video/multimedia.

[1] The Macrovision anticopy process is licensed for noncommercial home use only, which is its sole intended use in the device. -

SAA7114 PAL/NTSC/SECAM Video Decoder with Adaptive PAL/NTSC Comb Filter, VBI Data Slicer and High Performance Scaler

SAA7114 PAL/NTSC/SECAM video decoder with adaptive PAL/NTSC comb filter, VBI data slicer and high performance scaler Rev. 03 — 17 January 2006 Product data sheet 1. General description The SAA7114 is a video capture device for applications at the image port of Video Graphics Array (VGA) controllers. The SAA7114 is a combination of a two-channel analog preprocessing circuit including source selection, anti-aliasing filter and Analog-to-Digital Converter (ADC), an automatic clamp and gain control, a Clock Generation Circuit (CGC), a digital multistandard decoder containing two-dimensional chrominance/luminance separation by an adaptive comb filter and a high performance scaler, including variable horizontal and vertical up and downscaling and a brightness, contrast and saturation control circuit. It is a highly integrated circuit for desktop video and similar applications. The decoder is based on the principle of line-locked clock decoding and is able to decode the color of PAL, SECAM and NTSC signals into ITU 601 compatible color component values. The SAA7114 accepts CVBS or S-video (Y/C) as analog inputs from TV or VCR sources, including weak and distorted signals. An expansion port (X port) for digital video (bidirectional half duplex, D1 compatible) is also supported to connect to MPEG or a video phone codec. At the so called image port (I port) the SAA7114 supports 8-bit or 16-bit wide output data with auxiliary reference data for interfacing to VGA controllers. The target application for the SAA7114 is to capture and scale video images, to be provided as a digital video stream through the image port of a VGA controller, for display via the frame buffer of the VGA, or for capture to system memory. -

AN377: Timing and Synchronization in Broadcast Video

AN377 TIMING AND SYNCHRONIZATION IN BROADCAST VIDEO

1. Introduction

Digitization of video signals has been common practice in broadcast video for many years. Early digital video was commonly encoded on a 10-bit parallel bus, but as higher processing speeds became practical, a serial form of the digitized video signal called the Serial Digital Interface (SDI) was developed and standardized. Serialization of the digital video stream greatly facilitates its distribution within a professional broadcast studio.

[Figure 1. Typical Example of a Professional Broadcast Video. Blocks shown: master sync generator (genlock), on-site video cameras, remote professional video camera, frame synchronizer, SDI router, video switching/processing, video server (mass storage), video distribution amplifier, monitor, transmission facility; linked by SDI and sync connections.]

In a studio with multiple cameras, it is important that video signals coming from multiple sources are frame aligned or synchronized to allow seamless switching between video sources. For this reason, a synchronization signal is often distributed to all video sources using a master synchronization generator as shown in Figure 1. This allows video switching equipment to select between multiple sources without having to buffer and re-synchronize all of its input video signals. In this application note, we will take a closer look at the various components that make up a broadcast video system and how each of the components plays a role in the synchronization chain.

Rev. 0.1 8/09 Copyright © 2009 by Silicon Laboratories

2. Digitizing the Video Signal

A video camera uses sensors to capture and convert light to electrical signals that represent the three primary colors: red, green, and blue (RGB). -

ICS2008B SMPTE Time Code Receiver/Generator

Integrated Circuit Systems, Inc. ICS2008B SMPTE Time Code Receiver/Generator

General Description

The ICS2008B, SMPTE Time Code Receiver/Generator chip, is a VLSI device designed in a low power CMOS process. This device provides the timing coordination for Multimedia sight and sound events. Although it is aimed at a PC Multimedia environment, the ICS2008B is easily integrated into products requiring SMPTE time code generation and/or reception in LTC (Longitudinal Time Code) and/or VITC (Vertical Interval Time Code) formats and MTC (MIDI Time Code) translation.

Taking its input from composite video, S-Video, or an audio track, the ICS2008B can read SMPTE time code in VITC and LTC formats. Time code output formats are LTC and VITC. All are available simultaneously. A UART is provided for the user to support MTC or tape transport control.

The processor interface is compatible with the IBM PC and ISA bus compatible computers and is easily interfaced to other processors and micro-controllers.

The ICS2008B is an improved version of the ICS2008, with additional features and capabilities.

Features

• Meets SMPTE VITC Specifications
• Meets SMPTE and EBU LTC Specifications
• Time Code Burn-in Window
– Programmable position, size and character attributes
• LTC edge rate control
– Conforms to EBU Tr and Tf Specifications
• Internal and external sync sources
– Genlock to video or house sync inputs
– Improved video timing lock during VCR pause and shuttle modes
– Internally generated timing from oscillator input
– External click input
– Internal Timer. Allows 1/4 Frame MIDI Time Code Messages
• LTC and VITC Generators
– Real Time SMPTE Rates: 30 Hz, 29.97 Hz, 25 Hz, 24 Hz
– Time Code Modes: Drop Frame and Color Frame