World-Driven Access Control for Continuous Sensing

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Mobile Smart Fundamentals MMA Members Edition June 2014

MOBILE SMART FUNDAMENTALS MMA MEMBERS EDITION JUNE 2014 messaging . advertising . apps . mcommerce www.mmaglobal.com NEW YORK • LONDON • SINGAPORE • SÃO PAULO MOBILE MARKETING ASSOCIATION JUNE 2014 REPORT The Global Board Given our continuous march toward providing marketers the tools they need to successfully leverage mobile, it’s tempting to give you another week-by-week update on our DRUMBEAT. But, as busy as that’s been, I’d like to focus my introduction for this month’s Mobile Smart Fundamentals on the recent announcement we made regarding our Global Board. Our May 6th announcement that we would be welcoming the first CMO in the MMA’s history to take up the position of Global Chairperson was significant for many reasons, not least of which is the incredible insight and leadership that John Costello brings to the role. This was also one of our first steps to truly aligning the MMA to a new marketer-first mission. Subsequently, on June 25th, we were pleased to announce the introduction, re-election and continuation of committed leaders to the MMA’s Global Board (read full press release here). But perhaps most significantly of all, we welcomed a number of new Brand marketers and that list of Brands on the Global Board now includes The Coca-Cola Company, Colgate-Palmolive, Dunkin’ Brands, General Motors, Mondelez International, Procter & Gamble, Unilever, Visa and Walmart. http://www.mmaglobal.com/about/board-of-directors/global To put this into context, the board now comprises 80% CEOs and Top 100 Marketers vs. three years ago where only 19% of the board comprised CEOs and a single marketer, with the majority being mid-level managers.

Augmented Reality Applied to Language Translation

Ana Rita de Tróia Salvado, Licentiate in Electrical and Computer Engineering Sciences. Augmented Reality Applied to Language Translation. Dissertation submitted for the degree of Master in Electrical and Computer Engineering. Supervisor: Prof. Dr. José António Barata de Oliveira, Assistant Professor, Universidade Nova de Lisboa. Jury: President: Doutor João Paulo Branquinho Pimentão, FCT/UNL; Examiner: Doutor Tiago Oliveira Machado de Figueiredo Cardoso, FCT/UNL; Member: Doutor José António Barata de Oliveira, FCT/UNL. September, 2015. Augmented Reality Applied to Language Translation. Copyright © Ana Rita de Tróia Salvado, Faculdade de Ciências e Tecnologia, Universidade Nova de Lisboa. The Faculdade de Ciências e Tecnologia and the Universidade Nova de Lisboa have the perpetual right, without geographical limits, to archive and publish this dissertation through printed copies reproduced on paper or in digital form, or by any other means known or yet to be invented, to disseminate it through scientific repositories, and to allow its copying and distribution for non-commercial educational or research purposes, provided that credit is given to the author and publisher. To my beloved family... Acknowledgements "Coming together is a beginning; keeping together is progress; working together is success." - Henry Ford. Life can only be truly enjoyed when people get together to create and share moments and memories. Greatness can be easily achieved by working together and being supported by others. For this reason, I would like to save a special place in this work to thank people who were there and supported me during all this learning process.

Real-Time Translation Thanks to Augmented Reality and Speech Recognition: A Study Using the Example of the Google Translate App

REAL-TIME TRANSLATION THANKS TO AUGMENTED REALITY AND SPEECH RECOGNITION: A STUDY USING THE EXAMPLE OF THE GOOGLE TRANSLATE APP. Julia Herb, BA. Matriculation number: 01216413. MASTER'S THESIS submitted as part of the master's programme in Translation Studies, specialization: Specialized Communication, at the Leopold-Franzens-Universität Innsbruck, Philologisch-Kulturwissenschaftliche Fakultät, Institut für Translationswissenschaft. Supervised by: ao. Univ.-Prof. Mag. Dr. Peter Sandrini. Innsbruck, 22 July 2019. Table of Contents: Abstract; List of Figures; 1. Topicality of the Subject; 2. Augmented Reality: The New Form of Multimediality; a. Definition and Delimitation; b. Areas of Application of AR Technology; 3. Verbmobil: New Dimensions of Machine Translation Thanks to Speech Recognition; a. Special Features of a Real-Time Translation Project

(12) United States Patent (10) Patent No.: US 8,761,513 B1 Rogowski Et Al

US008761513B1 (12) United States Patent, Rogowski et al. (10) Patent No.: US 8,761,513 B1. (45) Date of Patent: Jun. 24, 2014. (54) SYSTEMS AND METHODS FOR DISPLAYING FOREIGN CHARACTER SETS AND THEIR TRANSLATIONS IN REAL TIME ON RESOURCE-CONSTRAINED MOBILE DEVICES. (71) Applicants: Ryan Leon Rogowski, Naperville, IL (Continued). (72) Inventors: Ryan Leon Rogowski, Naperville, IL (US); Huan-Yu Wu, Taipei (TW); Kevin Anthony Clark, Warwick, RI (US). (73) Assignee: Translate Abroad, Inc., Providence, RI (US). (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 0 days. (21) Appl. No.: 14/207,155. (22) Filed: Mar 12, 2014. Related U.S. Application Data: (60) Provisional application No. 61/791,584, filed on Mar. 15, 2013. (56) References Cited. U.S. PATENT DOCUMENTS: 5,875,421 A 2/1999 Takeuchi; D453,766 S 2/2002 Grandcolas et al. (Continued). FOREIGN PATENT DOCUMENTS: EP 2587389 A1 5/2013; WO 2013040 107 A1 3/2013; WO 2013,134090 A1 9/2013; WO 201400 1937 A1 1/2014. OTHER PUBLICATIONS: Quest Visual Inc, “Word Lens,” Quest Visual website, available at http://questvisual.com/us/ Accessed on Jan. 11, 2014. (Continued). Primary Examiner: Ruiping Li. (74) Attorney, Agent, or Firm: American Patent Agency PC; Daniar Hussain; Karl Dresdner. (57) ABSTRACT: The present invention is related to systems and methods for translating language text on a mobile camera device offline without access to the Internet. More specifically, the present invention relates to systems and methods for displaying text

View July 2014 Report

MOBILE SMART FUNDAMENTALS MMA MEMBERS EDITION JULY 2014 messaging . advertising . apps . mcommerce www.mmaglobal.com NEW YORK • LONDON • SINGAPORE • SÃO PAULO MOBILE MARKETING ASSOCIATION JULY 2014 REPORT The Playbook Over the last few months we’ve been building a unique resource that will help our brand marketer members successfully develop and execute a mobile strategy, allowing them to deliver a consistent mobile brand experience on a global scale. Enter our Mobile Marketing Playbook (Press Release). Launched last week and created in partnership with global sporting goods giant adidas, it aims to explain when, where and how companies can use mobile as core to their marketing efforts. Whilst we’ve seen some incredible work this year, as evidenced by the many great mobile campaigns submitted to our 2014 Smarties Awards Program (currently in pre-screening), one of the challenges marketers still face is how to make mobile an integral part of their mix. The Playbook takes marketers through the process of mobile strategy development from start to finish. It provides best practices around mobile executions, ways to leverage the myriad mobile vehicles, insights into mobile creative effectiveness and how companies can effectively measure and optimize mobile. To address the ever-changing needs of and challenges faced by marketers, the Playbook will be regularly updated to reflect shifts in consumer behavior, mobile trends as they are introduced, and innovations that are continuously being developed through and with mobile. This will be accomplished in part by the annual addition of well over 500 case studies into our Case Study Hub, helping to define best practice and to serve as a source of inspiration to our marketer members. Members can access the entire Playbook by logging in with their member login and password where directed.

Terminology in Institutional Translation: The Case of TermCoord.eu (Terminology Coordination Unit – European Parliament)

Terminology in institutional translation: the case of TermCoord.eu (Terminology Coordination Unit – European Parliament). Rossella Ferraro. Milan, 2016. Contents: Introduction. Chapter 1, The TermCoord website: an analysis of the thematic content: Introduction; 1.1 Translation and terminology in the EU context: conferences and seminars; 1.1.1 IATE; 1.1.2 Multilingualism; 1.1.3 Neologisms; 1.1.4 Translation; 1.2 Interviews with terminology specialists; 1.3 Language and linguistics: advice and in-depth material for the translator/terminologist; 1.3.1 English language; 1.3.2 Neologisms; 1.3.3 Preservation of minority languages; 1.3.4 Linguistic variants; 1.3.5 Video Fix; 1.4 Training in terminology; 1.4.1 University collaborations. Chapter 2, The TermCoord website: practices and applications for terminology and specialized translation: Introduction; 2.1 Translation, CAT tools and cultural mediation; 2.1.1 Machine translation; 2.2 Terminology databases; 2.2.1 Inter-active Terminology for Europe; 2.3 Glossaries; 2.3.1 New proposals; 2.4 From the site map to the analyses carried out. Chapter 3, IATE Term of the Week: from a corpus to a glossary: 3.1 Birth of the terminology database; 3.2 IATE Term of the Week; 3.3 The glossary; 3.4 Analysis of the data collected. Chapter 4, Interviews with the protagonists of TermCoord: Introduction; 4.1 Term extraction; 4.1.1 IATE; 4.1.2 Validation and definitions; 4.1.3 Proactive terminology; 4.2 Communication and the choice of content at TermCoord; 4.2.1 The interviews; 4.3 The importance of terminology at the European Parliament; 4.3.1 The work of TermCoord; 4.3.2 EurTerm; 4.4 Technological aids for translation; 4.5 Trainees’ terminology projects. Conclusions. Bibliography. Introduction: The European Parliament website TermCoord.eu is the tool for promoting and disseminating the work of the Terminology Coordination Unit, which operates at the European Union's premises in Luxembourg.

Augmented Reality Based Word Translator Tabish Khan, Rishisingh Hora, Ashwin Bendre, Prof

International Journal of Innovative Research in Computer Science & Technology (IJIRCST) ISSN: 2347-5552, Volume-2, Issue-2, March 2014. Augmented Reality Based Word Translator. Tabish Khan, Rishisingh Hora, Ashwin Bendre, Prof. Sneha Tirth. Abstract: People travel to different places not knowing the language used in that region. Hence there is a need to translate these unknown words to recognizable text. This application is developed to help travelers who can get the translated text as an output of our application. Our application is able to recognize the text captured by a mobile phone camera, translate the text and display the translation result back onto the screen of the mobile phone in an augmented manner. Index Terms: Android, Augmented Reality, Optical Character Recognition, Word Translator. I. INTRODUCTION The motivation of our application is to help tourists navigate in a foreign language environment. The application we developed enables the users to get text translated as easily as a button click. The camera captures the text and returns the translated result in real time. The system we developed includes automatic text detection, OCR and text translation. Although the current version of our application is limited to translation of a few languages, we can add a feature of text-to-speech as an extension in our application. [...] developed for iOS. The translation it provides is not always 100 percent accurate. It has a hit rate of 90-95 percent. FIG 1: Output of Word Lens. The above figure depicts an example of the Word Lens application, which translates the words “Bienvenido Al Futuro” to the desired English language, the output being “Welcome to the future”. III. PROPOSED SYSTEM Inspired by the existing application, we will use the same

Final Thesis1

Chapter 3. HISTORICAL BACKGROUND OF QR CODE & AR APPLICATION 3.1. What is a QR Code? QR code (abbreviated from Quick Response Code) is the trademark for a type of matrix barcode (or two-dimensional barcode). A QR code is a matrix barcode readable by smartphones and mobile phones with cameras. QR codes are sometimes referred to as 2D codes, 2D barcodes, or mobile codes. They can hold much more information than a regular barcode. The information encoded in a QR code can be a URL, a phone number, an SMS message, a vCard, or any text. They are called QR codes because they allow the contents to be decoded at high speed. QR codes were developed in 1994 by Denso-Wave, a Toyota subsidiary. In library catalog records, QR codes can offer patrons basic information about the location of books and their call numbers: users can scan the code and head to the stacks rather than writing or printing. This means, for example, that a student who scans QR codes from a library OPAC in the middle of the night in his or her dorm room could head to the library stacks the next day, open the app to refer to the call numbers of the titles scanned the night before, and quickly find the books. Also, expect to see QR code scanner/reader apps that allow users to tag, share, comment, collaborate, and more in the future. Other uses include codes taped to video/DVD cases that link to mobile-friendly video trailers; codes in the library stacks/end caps or magazine/journal areas that point to online electronic holdings of print materials or related subject guides; and codes linking to library audio tours for orientations, etc.

VIBRANCE Vol. 7, Issue 5

VIBRANCE, MAY 2014, Vol. 7, Issue 5. Monthly E-magazine of Dept. of I.T, I.T.S, Mohan Nagar, Ghaziabad. In this Issue! Mobile apps useful in searching jobs; Word Lens, incredible visual translation app; Some tips for the success, Alumni Speak; Happenings @ Dept. of I.T in the month of May, 2014; Festa d’ addio, a farewell party for MCA 2011-14; Answers of Guess Who?; Placement Quiz Series; Answers to last Quiz. Institute of Technology & Science, Mohan Nagar, Ghaziabad www.facebook.com/ITS.Education.Group www.facebook.com/ITS.MohanNagar.Ghaziabad www.its.edu.in Mobile apps useful in searching Jobs: One of the trends that surfaced from the study had to do with immediacy. The study found that 59% of job seekers thought that their odds of getting a response on a job were better if they responded to the posting as soon as it was online. The ability to be connected anywhere and the urgency of looking for a new job make mobile devices one of the most important job search tools. Some of the apps which can help you search for a job via your mobile are: 1.

(12) United States Design Patent (10) Patent No.: US D706,803 S Rogowski et al.

US00D706803S (12) United States Design Patent, Rogowski et al. (10) Patent No.: US D706,803 S. (45) Date of Patent: Jun. 10, 2014. (54) SMARTPHONE WITH GRAPHICAL USER INTERFACE FOR A TEXT SELECTION BOX. (71) Applicants: Ryan Leon Rogowski, Naperville, IL (US); Huan-Yu Wu, Taipei (TW); Kevin Anthony Clark, Warwick, RI (US). (72) Inventors: Ryan Leon Rogowski, Naperville, IL (US); Huan-Yu Wu, Taipei (TW); Kevin Anthony Clark, Warwick, RI (US). (73) Assignee: Translate Abroad Inc., Providence, RI (US). (**) Term: 14 Years. (21) Appl. No.: 29/484,905. (22) Filed: Mar 13, 2014. Related U.S. Application Data: (63) Continuation of application No. 14/207,155, filed on Mar 12, 2014. (51) LOC (10) Cl.: 14–04. (52) U.S. Cl. USPC: D14/486. (58) Field of Classification Search: USPC ... References cited: D553,140 S 10/2007 Noviello et al.; D601,154 S 9/2009 Pelczarski et al.; D626,133 S 10/2010 Murphy et al., D14/485 (Continued). FOREIGN PATENT DOCUMENTS: EP 2587389 A1 5/2013; WO 2013040 107 A1 3/2013; WO 2013 134090 A1 9/2013; WO 201400 1937 A1 1/2014. OTHER PUBLICATIONS: Quest Visual Inc, “Word Lens,” Quest Visual website, available at http://questvisual.com/us/ Accessed on Jan. 11, 2014. (Continued). Primary Examiner: Cynthia Underwood. (74) Attorney, Agent, or Firm: American Patent Agency PC; Daniar Hussain; Karl Dresdner. (57) CLAIM: The ornamental design for a smartphone with graphical user interface for a text selection box, as shown and described. DESCRIPTION: FIG. 1 is a front view of a smartphone with graphical user interface for a text selection box showing our novel design;

Looking-At-11-Useful-Apps-That-Attorneys-May-Have

Looking at 11 useful mobile apps that attorneys may have never heard of. These free apps can help attorneys find ways to increase efficiency and cut expenses without sacrificing quality. By Patrick Soon and Rebecca Bellow. *This article first appeared in Inside Counsel Magazine on June 10, 2014: http://bit.ly/16bMIaC Let’s face it: In this economy the pressure is on for attorneys to find ways to increase efficiency and cut expenses without sacrificing quality. The free apps below can be very helpful in this regard. And while most of these apps are not specifically designed to aid the practice of law, they should nevertheless be included in every lawyer’s digital tool box. Here are the top 11: 1. Asana (Free for teams up to 15) Android; iPhone Asana is an easy-to-use, web-based group-project manager that makes it easier for attorneys and paralegals to work together on a big case. Each team member gets a workspace where they can add comments, attachments and tags to the tasks on which they are collaborating. Each user also gets their own inbox which organizes and updates new information in real time, so e-mailing is not needed. The most important benefit is that the lead attorney can spend more time working on the case itself and less time checking up on the other members of the group. 2. Documents 5 (Free) iPhone This app represents an overhaul of Documents, by Readdle. It has many applications for law offices as it is designed for optimal file management, document viewing and media playing.

Spring 2014--All Grants Sorted by State

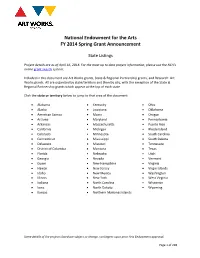

National Endowment for the Arts FY 2014 Spring Grant Announcement State Listings Project details are as of April 16, 2014. For the most up to date project information, please use the NEA's online grant search system. Included in this document are Art Works grants, State & Regional Partnership grants, and Research: Art Works grants. All are organized by state/territory and then by city, with the exception of the State & Regional Partnership grants which appear at the top of each state. Click the state or territory below to jump to that area of the document. • Alabama • Kentucky • Ohio • Alaska • Louisiana • Oklahoma • American Samoa • Maine • Oregon • Arizona • Maryland • Pennsylvania • Arkansas • Massachusetts • Puerto Rico • California • Michigan • Rhode Island • Colorado • Minnesota • South Carolina • Connecticut • Mississippi • South Dakota • Delaware • Missouri • Tennessee • District of Columbia • Montana • Texas • Florida • Nebraska • Utah • Georgia • Nevada • Vermont • Guam • New Hampshire • Virginia • Hawaii • New Jersey • Virgin Islands • Idaho • New Mexico • Washington • Illinois • New York • West Virginia • Indiana • North Carolina • Wisconsin • Iowa • North Dakota • Wyoming • Kansas • Northern Marianas Islands Some details of the projects listed are subject to change, contingent upon prior Arts Endowment approval. Page 1 of 228 Alabama Number of Grants: 5 Total Dollar Amount: $841,700 Alabama State Council on the Arts $741,700 Montgomery, AL FIELD/DISCIPLINE: State & Regional To support Partnership Agreement activities. Auburn University Main Campus $55,000 Auburn, AL FIELD/DISCIPLINE: Visual Arts To support the Alabama Prison Arts and Education Project. Through the Department of Human Development and Family Studies in the College of Human Sciences, the university will provide visual arts workshops taught by emerging and established artists for those who are currently incarcerated.