Nicolas Collins Before Apple There Was Kim – the Microcomputer, Music and Me September, 2009




Recommended publications


"Bob" Schreiner

Oral History of Robert “Bob” Schreiner. Interviewed by: Stephen Diamond. Recorded: June 10, 2013, Mountain View, California. CHM Reference number: X6873.2014. © 2013 Computer History Museum.

Stephen Diamond: We're here at the Computer History Museum with Bob Schreiner. It's June 10th, 2013, and we're going to talk about the oral history of Synertek and the 6502. Welcome, Bob. Thanks for being here. Can you introduce yourself to us?

Robert “Bob” Schreiner: Okay. My name is Bob Schreiner. I'm an ex-Fairchilder, one of the Fairchildren in the valley, and then involved in running a couple of other small semiconductor companies, and I started a semiconductor company.

Diamond: So that would be Synertek.

Schreiner: Synertek.

Diamond: Tell us about that.

Schreiner: Okay. As you know from an earlier session I left Fairchild Semiconductor around 1971. And at the time I left I was running the LSI program at Fairchild, and I was a big believer that the future marketplace for MOS technology would be in the custom area. And since Fairchild let that whole thing fall apart, I decided there's got to be room for a company to start up to do that very thing, work with big producers of hardware and develop custom chips for them so they would have a proprietary product that would be difficult to copy. So I wrote a business plan, and I went around to a number of manufacturers. I had a computer guy [General Automation], and I had Bulova Watch Company, and I had a company that made electronic telephones [American Telephones], and who was the fourth guy? Escapes my memory right now, but the pitch basically was, "Your business, which now you manufacture things with discrete components, it's going to change.

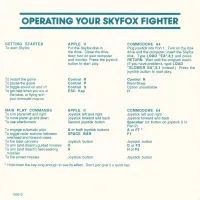

Skyfox Fighter

OPERATING YOUR SKYFOX FIGHTER

GETTING STARTED

| | APPLE II | COMMODORE 64 |
|---|---|---|
| To start Skyfox | Put the Skyfox disk in the drive. Close the drive door; turn on your computer and monitor. Press the joystick button to start play. | Plug joystick into Port 1. Turn on the disk drive and the computer; insert the Skyfox disk. Type LOAD "EA",8,1 and press RETURN. Wait until the program loads. (If you have problems, type LOAD "SLOWER EA",8,1 instead.) Press the joystick button to start play. |
| To restart the game | Control R | Control R |
| To pause the game | Control P | Run/Stop |
| To toggle sound on and off | Control S | Option unavailable |
| To get help when you are at the base, or flying with your computer map up | ESC Key | H |

MAIN PLAY COMMANDS

| | APPLE II | COMMODORE 64 |
|---|---|---|
| To turn plane left and right | Joystick left and right | Joystick left and right |
| To move plane up and down | Joystick forward and back | Joystick forward and back |
| To use afterburners | Second joystick button | Spacebar (or button on joystick 2 in Port 2) |
| To engage automatic pilot | A or both joystick buttons | A or F7* |
| To toggle radar scanner between overhead and forward views | SPACE BAR | F1 |
| To fire laser cannons | Joystick button | Joystick button |
| To arm (and disarm) guided missiles | G | G or F3 |
| To arm (and disarm) heat-seeking missiles | H | H or F5 |
| To fire armed missiles | Joystick button | Joystick button |

* Hold down the key long enough to see its effect. Don't just give it a quick tap.

103619

GETTING STARTED

| | ATARI ST | COMMODORE AMIGA |
|---|---|---|
| To start Skyfox | Put the Skyfox disk in the drive and turn on the | After kickstarting your Amiga, insert the Skyfox disk in the drive. |

1981 Synertek Data Catalog

SYNERTEK, A SUBSIDIARY OF HONEYWELL. 1981-1982 DATA CATALOG. $5.00

Table of Contents Index: 1. Random Access Memories; 2. Read Only Memories; 3. Microprocessors; 4. Logic Capabilities; 5. Systems; 6. Quality Assurance; 7. General Information

SYNERTEK, A SUBSIDIARY OF HONEYWELL. P.O. Box 552, MS/34, Santa Clara, CA 95052. Tel. (408) 988-5600. TWX: 910-338-0135

Contents

CHAPTER 1, Random Access Memory: RAM Selector Guide; SY2101, 256 x 4-Bit Static RAM; SY2111, 256 x 4-Bit Static RAM; SY2112, 256 x 4-Bit Static RAM; SY2114, 1024 x 4-Bit Static RAM; SY2114LV, 1024 x 4-Bit Static RAM; SY2128, 2048 x 8-Bit Static RAM; SY2142, 1024 x 4-Bit Static RAM; SY2142LV, 1024 x 4-Bit Static RAM; SY2147, 4096 x 1-Bit Static RAM; SY2147H, 4096 x 1-Bit Static RAM; SYM2147, 4096 x 1-Bit Static RAM; SY2148H, 1024 x 4-Bit Static RAM; SYM2148, 1024 x 4-Bit Static RAM; SY2149H, 1024 x 4-Bit Static RAM; SYM2149H, 1024 x 4-Bit Static RAM

CHAPTER 2, Read Only Memory: ROM Selector Guide; SY2316NB, 2048 x 8-Bit ROM; SY2316B-2, 2048 x 8-Bit ROM; SY2316B-3, 2048 x 8-Bit ROM; SY2332/3, 4096 x 8-Bit ROM; SY2332/3-3, 4096 x 8-Bit ROM; SY2364/A, 8192 x 8-Bit ROM; SY2365/A, 8192 x 8-Bit ROM; SY23128, 16,384 x 8-Bit ROM; SY3308, 1024 x 8-Bit ROM; SY3316/A, 2048 x 8-Bit ROM; SYM3316/A, 2048 x 8-Bit ROM

CHAPTER 3, Microprocessors. Microcomputers: Z8, Single Chip Microcomputer. Microprocessors and Peripherals: SY1791-02/SY1793-02, Floppy Disk Controller; SY2661, Enhanced Programmable Communications Interface; SY6500, 8-Bit Microprocessor Family; SY6520, Peripheral Interface Adapter; SY6521/SY6821, Peripheral Interface Adapter; SY6522, Versatile Interface Adapter; SY6530, Memory, I/O,

Commodore 64 Users Guide

INTRODUCTION

Now that you've become more intimately involved with your Commodore 64, we want you to know that our customer support does not stop here. You may not know it, but Commodore has been in business for over 23 years. In the 1970's we introduced the first self-contained personal computer (the PET). We have since become the leading computer company in many countries of the world. Our ability to design and manufacture our own computer chips allows us to bring you new and better personal computers at prices way below what you'd expect for this level of technical excellence.

Commodore is committed to supporting not only you, the end user, but also the dealer you bought your computer from, magazines which publish how-to articles showing you new applications or techniques, and ... importantly ... software developers who produce programs on cartridge, disk and tape for use with your computer. We encourage you to establish or join a Commodore "user club" where you can learn new techniques, exchange ideas and share discoveries. We publish two separate magazines which contain programming tips, information on new products and ideas for computer applications. (See Appendix N).

In North America, Commodore provides a "Commodore Information Network" on the CompuServe Information Service ... to access this network, all you need is your Commodore 64 computer and our low cost VICMODEM telephone interface cartridge (or other compatible modem).

The following APPENDICES contain charts, tables, and other information which help you program your Commodore 64 faster and more efficiently. They also include important information on the wide variety of Commodore products you may be interested in, and a bibliography listing of over 20 books and magazines which can help you develop your programming skills and keep you current on the latest information concerning your computer and peripherals.

The 1-Bit Instrument: the Fundamentals of 1-Bit Synthesis

BLAKE TROISE

The 1-Bit Instrument: The Fundamentals of 1-Bit Synthesis, Their Implementational Implications, and Instrumental Possibilities

ABSTRACT The 1-bit sonic environment (perhaps most famously musically employed on the ZX Spectrum) is defined by extreme limitation. Yet, belying these restrictions, there is a surprisingly expressive instrumental versatility. This article explores the theory behind the primary, idiosyncratically 1-bit techniques available to the composer-programmer, those that are essential when designing “instruments” in 1-bit environments. These techniques include pulse width modulation for timbral manipulation and means of generating virtual polyphony in software, such as the pin pulse and pulse interleaving techniques. These methodologies are considered in respect to their compositional implications and instrumental applications.

KEYWORDS chiptune, 1-bit, one-bit, ZX Spectrum, pulse pin method, pulse interleaving, timbre, polyphony, history

INTRODUCTION As unquestionably evident from the chipmusic scene, it is an understatement to say that there is a lot one can do with simple square waves. One-bit music, generally considered a subdivision of chipmusic, takes this one step further: it is the music of a single square wave. The only operation possible in a 1-bit environment is the variation of amplitude over time, where amplitude is quantized to two states: high or low, on or off. As such, it may seem intuitively impossible to achieve traditionally simple musical operations such as polyphony and dynamic control within a 1-bit environment. Despite these restrictions, the unique techniques and auditory tricks of contemporary 1-bit practice exploit the limits of human perception.

The History of Computer Language Selection

The History of Computer Language Selection

Kevin R. Parker, College of Business, Idaho State University, Pocatello, Idaho, USA. Bill Davey, School of Business Information Technology, RMIT University, Melbourne, Australia.

Abstract: This paper examines the history of computer language choice for both industry use and university programming courses. The study considers events in two developed countries and reveals themes that may be common in the language selection history of other developed nations. History shows a set of recurring problems for those involved in choosing languages. This study shows that those involved in the selection process can be informed by history when making those decisions.

Keywords: selection of programming languages, pragmatic approach to selection, pedagogical approach to selection.

1. Introduction

The history of computing is often expressed in terms of significant hardware developments. Both the United States and Australia made early contributions in computing. Many trace the dawn of the history of programmable computers to Eckert and Mauchly’s departure from the ENIAC project to start the Eckert-Mauchly Computer Corporation. In Australia, the history of programmable computers starts with CSIRAC, the fourth programmable computer in the world, which ran its first test program in 1949. This computer, manufactured by the government science organization (CSIRO), was used into the 1960s as a working machine at the University of Melbourne and still exists as a complete unit at the Museum of Victoria in Melbourne. Australia’s early entry into computing makes a comparison with the United States interesting. These early computers needed programmers, that is, people with the expertise to convert a problem into a mathematical representation directly executable by the computer.

DEVICE and CIRCUIT DESIGN for VLSI by Amr Mohsen

DEVICE AND CIRCUIT DESIGN FOR VLSI by Amr Mohsen*, Intel Corporation, 3065 Bowers Avenue, Santa Clara, California 95051

ABSTRACT A review of the device and circuit design complexity and limitations for VLSI is presented. VLSI device performance will be limited by second order device effects, interconnection line delay and current density, and chip power dissipation. The complexity of VLSI circuit design will require hierarchical structured design methodology with special consideration of testability and more emphasis on redundancy. New organizations of logic function architectures and smart memories will evolve to take advantage of the topological properties of the VLSI silicon technology.

* The author is also on the faculty of California Institute of Technology.

CALTECH CONFERENCE ON VLSI, January 1979

I. INTRODUCTION: As semiconductor technology has evolved from discrete to small-scale to medium-scale and through large-scale integration levels, a rapid decrease in the cost per function provided by the technology has opened up new applications and industries. Today there is a large interest in the next phase of integration: very large scale integration (VLSI). The semiconductor technology will be able to fabricate chips with more than 100K devices (1) in the 1980's. The continuous scaling of the semiconductor devices and increase in component counts on a single chip is resulting in more complex technology developments, device and circuit designs and product definition. In this review paper, projections of how device technology will evolve in the future and the problems and limitations of device and circuit design for VLSI are presented.

VLSI TECHNOLOGIES: In Fig.

MOS Technology, 1963-1974: A Dozen Crucial Years

MOS Technology, 1963-1974: A Dozen Crucial Years, by Ross Knox Bassett

A line can be drawn from the Frosch’s and Derick’s work on silicon dioxide to the MOS (metal-oxide-semiconductor) transistor’s dominance of semiconductor technology, but it is neither short nor straight. That line has several discernable segments, first from Frosch and Derick’s work, until 1963. In this interval, by and large, no one thought seriously about a metal-oxide-semiconductor as a viable technology in its own right. The second segment runs from 1963, when the combination of integrated circuits and the planar manufacturing process had led people to see MOS transistors as a

One of IBM’s most important contributions to MOS research came from the Components Division, which was responsible for developing and manufacturing bipolar transistors for its large computer systems and had very little interest in MOS transistors as such. As part of its work on bipolar transistors, Donald Kerr and a group of engineers had discovered that depositing small amounts of phosphorous on the silicon-dioxide surface and forming a layer of phosphosilicate glass (PSG) could limit the amount of leakage in bipolar transistors and play an important role in enhancing the stability of MOS transistors. Jerome Eldridge and Pieter Balk from IBM Research implemented this work by using thin layers of PSG to make stable MOS devices. Other important work on the physics and chemistry of MOS devices done

FIG. 2. Drawing of Atalla and Kahng’s “silicon-silicon dioxide surface device,” now known as the MOS transistor, from a 1961 Bell Labs technical memorandum by Kahng.

VLSI Design Lecture Notes B.Tech (IV Year – I Sem) (2018-19)

VLSI DESIGN LECTURE NOTES, B.TECH (IV YEAR – I SEM) (2018-19). Prepared by Dr. V.M. Senthilkumar, Professor/ECE & Ms. M. Anusha, AP/ECE, Department of Electronics and Communication Engineering, MALLA REDDY COLLEGE OF ENGINEERING & TECHNOLOGY (Autonomous Institution – UGC, Govt. of India). Recognized under 2(f) and 12 (B) of UGC ACT 1956 (Affiliated to JNTUH, Hyderabad, Approved by AICTE - Accredited by NBA & NAAC – ‘A’ Grade - ISO 9001:2015 Certified). Maisammaguda, Dhulapally (Post Via. Kompally), Secunderabad – 500100, Telangana State, India

Unit-1: IC Technologies, MOS & BiCMOS Circuits

IC Technologies: Introduction; PMOS, NMOS, CMOS and BiCMOS technologies.

Basic Electrical Properties of MOS and BiCMOS Circuits: I_DS - V_DS relationships; MOS transistor threshold voltage V_T; figure of merit ω0; transconductance g_m, g_ds; pass transistor and NMOS inverter; various pull-ups; CMOS inverter analysis and design; Bi-CMOS inverters.

INTRODUCTION TO IC TECHNOLOGY

The development of electronics began with the invention of vacuum tubes and associated electronic circuits. This activity was termed vacuum tube electronics; afterward, the evolution of solid-state devices and the consequent development of integrated circuits became responsible for the present status of communication, computing and instrumentation.

• The first vacuum tube diode was invented by John Ambrose Fleming in 1904.
• The vacuum triode was invented by Lee de Forest in 1906.

Early developments of the Integrated Circuit (IC) go back to 1949. German engineer Werner Jacobi filed a patent for an IC-like semiconductor amplifying device showing five transistors on a common substrate in a 2-stage amplifier arrangement.


When High-Tech Was Low-Tech : a Retrospective Look at Forward-Thinking Technologies [Multiple Exhibits]

University of South Florida Scholar Commons. Library and Community-based Exhibits, Library Outreach. 9-1-2003. When High-Tech was Low-Tech : A Retrospective Look at Forward-Thinking Technologies [Multiple exhibits]. James Anthony Schnur. Follow this and additional works at: https://scholarcommons.usf.edu/npml_outreach_exhibits

Scholar Commons Citation: Schnur, James Anthony, "When High-Tech was Low-Tech : A Retrospective Look at Forward-Thinking Technologies [Multiple exhibits]" (2003). Library and Community-based Exhibits. 43. https://scholarcommons.usf.edu/npml_outreach_exhibits/43

This Presentation is brought to you for free and open access by the Library Outreach at Scholar Commons. It has been accepted for inclusion in Library and Community-based Exhibits by an authorized administrator of Scholar Commons.

When High-Tech was Low-Tech: A Retrospective Look at Forward-Thinking Technologies. Nelson Poynter Memorial Library, University of South Florida St. Petersburg

The development of transistors after World War II allowed manufacturers to build smaller, more sophisticated, and less expensive devices. No longer did consumers have to worry about purchasing expensive tubes for heavy, bulky radios and televisions.

By the late 1970s, early “personal computers” and game systems began to appear in homes. One of the most popular games of this period came from Atari. This Ultra-Pong console, released by Atari in 1977, included

Before the widespread use of “floppy” disks (in both 5¼ and 8 inch formats), many early personal computers used tape drives. “Personal computer cassettes” usually held about 64,000 bytes of data and could take up to 30

The Ultimate C64 Overview, Michael Steil, 25th Chaos Communication Congress 2008

The Ultimate C64 Overview. Michael Steil, http://www.pagetable.com/. 25th Chaos Communication Congress 2008

Retrocomputing is cool as never before. People play C64 games in emulators and listen to SID music, but few people know much about the C64 architecture and its limitations, and what programming was like back then. This paper attempts to give a comprehensive overview of the Commodore 64, including its internals and quirks, making the point that classic computer systems aren't all that hard to understand - and that programmers today should be more aware of the art that programming once used to be.

Look and Feel

A C64 only needs to be connected to power and a TV set (or monitor) to be fully functional. When turned on, it shows a blue-on-blue theme with a startup message and drops into a BASIC interpreter derived from Microsoft BASIC. In order to load and save BASIC programs or use third party software, the C64 requires mass storage - either a “datasette” cassette tape drive or a disk drive like the 5.25" Commodore 1541.

Unless the user really wanted to interact with the BASIC interpreter, he would typically only use the BASIC instructions LOAD, LIST and RUN in order to access mass storage. LOAD"$",8 followed by LIST shows the directory of the disk in the drive, and LOAD"filename",8 followed by RUN would load and start a program.

Commodore History

Commodore Business Machines was founded in 1962 by Jack Tramiel. The company specialized on electronic calculators, and in 1976, Commodore bought the chip manufacturer MOS Technology and decided to have Chuck Peddle from MOS evolve their KIM-1

Imitation and Limitation

Fake Bit: Imitation and Limitation. Brett Camper

ABSTRACT

A small but growing trend in video game development uses the “obsolete” graphics and sound of 1980s-era, 8-bit microcomputers to create “fake 8-bit” games on today’s hardware platforms. This paper explores the trend by looking at a specific case study, the platform-adventure game La-Mulana, which was inspired by the Japanese MSX computer platform. Discussion includes the specific aesthetic traits the game adopts (as well as ignores), and the 8-bit technological structures that caused them in their original 1980s MSX incarnation. The role of technology in shaping aesthetics, and the persistence of such effects beyond the lifetime of the originating technologies, is considered as a more general “retro media” phenomenon.

... adventure and role-playing games, which are traditionally less action-oriented. Several lesser known NES games contributed to the style early on as well, such as Hudson Soft’s Faxanadu (1989) and Milon’s Secret Castle (1986), as well as Konami’s The Goonies II (1987). In more recent decades, the Castlevania series from Konami has also adopted and advanced the form, from Symphony of the Night (1997) on PlayStation, through Portrait of Ruin (2006) for the Nintendo DS.

La-Mulana is an extremely well made title that ranks among the finest in this genre, displaying unusual craftsmanship and cohesiveness. Its player-protagonist is Professor Lemeza, an archaeologist explorer charting out vast underground ruins in a distant, unspecified corner of the globe (Indiana Jones is an obvious pop culture reference, but also earlier examples like H.