Demonstrating the Memory RAS Features of Lenovo ThinkSystem Servers

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Kernel Panic

Kernel Panic. Connect, Inc., 1701 Quincy Avenue, Suites 5 & 6, Naperville, IL 60540. Ph: (630) 717-7200, Fax: (630) 717-7243, www.connectrf.com

Table of Contents: SCO Kernel Patches for Panics on Pentium Systems; Kernel Panic Debug Methodologies

SCO Kernel Patches for Panics on Pentium Systems

Web Links: http://www.sco.com/cgi-bin/ssl_reference?104530 http://www.sco.com/cgi-bin/ssl_reference?105532

Pentium System Panics with Trap Type 6; no dump is saved to /dev/swap.

Keywords: panic trap kernel invalid opcode pentium querytlb querytbl 386 mpx unix v4 dump swap double type6 no patch /etc/conf/pack.d/kernel/locore.o image locore

Release: SCO UNIX System V/386 Release 3.2 Operating System Version 4.0, 4.1 and 4.2; SCO Open Desktop Release 2.0 and 3.0; SCO Open Server System Release 2.0 and 3.0

Problem: My system panics with a Trap Type 6, but no memory dump gets written.

Cause: There is a flaw in the kernel querytlb() routine that allows the Pentium to execute a 386-specific instruction, which causes it to panic with an invalid opcode. querytlb() is only called when your system is panicking. The fault causes the system to double-panic, and it thus fails to write a panic dump to disk. This means that the panic trap type 6 is concealing another type of panic, which will show itself after the following patch has been applied.

Solution: You can apply the following patch, as root, to /etc/conf/pack.d/kernel/locore.o. Use the procedure that follows.

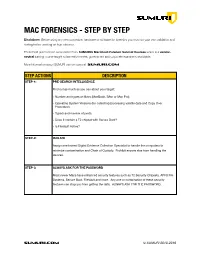

SUMURI Macintosh Forensics Best Practices

MAC FORENSICS - STEP BY STEP

Disclaimer: Before using any new procedure, hardware or software for forensics, you must do your own validation and testing before working on true evidence. These best practices are summarized from SUMURI's Macintosh Forensic Survival Courses, which are vendor-neutral training courses taught to law enforcement, government and corporate examiners worldwide. More information about SUMURI can be found at SUMURI.com.

STEP-1: PRE-SEARCH INTELLIGENCE
Find out as much as you can about your target:
• Number and types of Macs (MacBook, iMac or Mac Pro).
• Operating System Versions (for collecting/processing volatile data and Copy Over Procedure).
• Type/s and number of ports.
• Does it contain a T2 chipset with Secure Boot?
• Is FileVault Active?

STEP-2: ISOLATE
Assign one trained Digital Evidence Collection Specialist to handle the computers, to minimize contamination and protect the Chain of Custody. Prohibit anyone else from handling the devices.

STEP-3: ALWAYS ASK FOR THE PASSWORD
Most newer Macs have enhanced security features such as T2 Security Chipsets, APFS File Systems, Secure Boot, FileVault and more. Any one or combination of these security features can stop you from getting the data. ALWAYS ASK FOR THE PASSWORD.

SUMURI.com © SUMURI 2010-2019

STEP-4: IF COMPUTER IS ON - SCREEN SAVER PASSWORD ACTIVE
Options are:
• Ask for the Password - Confirm password and proceed to Step-6.
• Restart to Image RAM - Connect a RAM Imaging Utility to the Mac such as RECON IMAGER. Conduct a soft-restart (do not power off if possible and image the RAM).

Linux Kernal II 9.1 Architecture

Linux Kernal II 9.1 Architecture: The Linux kernel is a Unix-like operating system kernel used by a variety of operating systems based on it, which are usually in the form of Linux distributions. The Linux kernel is a prominent example of free and open source software.

Programming language: The Linux kernel is written in the version of the C programming language supported by GCC (which has introduced a number of extensions and changes to standard C), together with a number of short sections of code written in the assembly language (in GCC's "AT&T-style" syntax) of the target architecture. Because of the extensions to C it supports, GCC was for a long time the only compiler capable of correctly building the Linux kernel.

Compiler compatibility: GCC is the default compiler for the Linux kernel source. In 2004, Intel claimed to have modified the kernel so that its C compiler was also capable of compiling it. There was another such reported success in 2009 with a modified 2.6.22 version of the kernel. Since 2010, an effort has been underway to build the Linux kernel with Clang, an alternative compiler for the C language; as of 12 April 2014, the official kernel could almost be compiled by Clang. The project dedicated to this effort is named LLVMLinux, after the LLVM compiler infrastructure upon which Clang is built. LLVMLinux does not aim to fork either the Linux kernel or LLVM; it is therefore a meta-project composed of patches that are eventually submitted to the upstream projects.

Oracle VM Virtualbox User Manual

Oracle VM VirtualBox® User Manual, Version 5.0.16, © 2004-2016 Oracle Corporation, http://www.virtualbox.org

Contents
1 First steps
1.1 Why is virtualization useful?
1.2 Some terminology
1.3 Features overview
1.4 Supported host operating systems
1.5 Installing VirtualBox and extension packs
1.6 Starting VirtualBox
1.7 Creating your first virtual machine
1.8 Running your virtual machine
1.8.1 Starting a new VM for the first time
1.8.2 Capturing and releasing keyboard and mouse
1.8.3 Typing special characters
1.8.4 Changing removable media
1.8.5 Resizing the machine's window
1.8.6 Saving the state of the machine
1.9 Using VM groups
1.10 Snapshots
1.10.1 Taking, restoring and deleting snapshots
1.10.2 Snapshot contents
1.11 Virtual machine configuration
1.12 Removing virtual machines
1.13 Cloning virtual machines
1.14 Importing and exporting virtual machines
1.15 Global Settings

Linux Crash HOWTO

Linux Crash HOWTO, Norman Patten [email protected], 2002-01-30

Revision History: Revision 1.0, 2002-01-30, revised by NM. Initial release.

This document describes the installation and usage of the LKCD (Linux Kernel Crash Dump) package.

Table of Contents
1. Introduction
1.1. Copyright and License
2. How LKCD Works
2.1. What You Need
3. Installation of lkcd
3.1. Installing From Source Code
3.2. Building and Installing LKCD Utilities
3.3. What Gets Installed
3.4. Installing LKCD Utilities From RPM

Embedded Linux Conference Europe 2019

Embedded Linux Conference Europe 2019. Linux kernel debugging: going beyond printk messages. Embedded Labworks. By Sergio Prado. São Paulo, October 2019. ® Copyright Embedded Labworks 2004-2019. All rights reserved.

ABOUT THIS DOCUMENT
✗ This document is available under Creative Commons BY-SA 4.0. https://creativecommons.org/licenses/by-sa/4.0/
✗ The source code of this document is available at: https://e-labworks.com/talks/elce2019

$ WHOAMI
✗ Embedded software developer for more than 20 years.
✗ Principal Engineer of Embedded Labworks, a company specialized in the development of software projects and BSPs for embedded systems. https://e-labworks.com/en/
✗ Active in the embedded systems community in Brazil, creator of the website Embarcados and blogger (Portuguese language). https://sergioprado.org
✗ Contributor to several open source projects, including Buildroot, Yocto Project and the Linux kernel.

THIS TALK IS NOT ABOUT...
✗ printk and all related functions and features (pr_ and dev_ family of functions, dynamic debug, etc).
✗ Static analysis tools and fuzzing (sparse, smatch, coccinelle, coverity, trinity, syzkaller, syzbot, etc).
✗ User space debugging.
✗ This is also not a tutorial! We will talk about a lot of tools and techniques and have fun with some demos!

DEBUGGING STEP-BY-STEP
1. Understand the problem.
2. Reproduce the problem.
3. Identify the source of the problem.
4. Fix the problem.
5. Fixed? If so, celebrate! If not, go back to step 1.

TYPES OF PROBLEMS
✗ We can consider the following as the top 5 types of problems in software:
✗ Crash.
✗ Lockup.
✗ Logic/implementation error.
✗ Resource leak.
✗ Performance.

Linux Kernel Debugging Advanced Operating Systems 2020/2021

Linux Kernel Debugging. Advanced Operating Systems 2020/2021. Vlastimil Babka, Linux Kernel Developer, SUSE Labs, [email protected]

Agenda – Debugging Scenarios
• Debugging production kernels
  – Post-mortem analysis: interpreting kernel oops/panic output, creating and analyzing kernel crash dumps
  – Kernel observability – dynamic debug, tracing, alt-sysrq dumps, live crash session
• Debugging during individual kernel development
  – Debug prints – printk() facility
  – Debugger (gdb) support
• Finding (latent) bugs during collaborative development
  – Optional runtime checks configurable during build
  – Testing and fuzzing
  – Static analysis

Enterprise Linux Distro and Bugs (incl. Kernel)
• The software installation (e.g. ISO) itself is free (and open source, obviously)
• Customers pay for support subscription
  – Delivery of (tested by QA) package updates – fixing known bugs, CVEs… but not more!
  – Getting reported bugs fixed
    • Bugs specific to customer's workload, hardware, "luck", large number of machines…
    • Upstream will also fix reported bugs, but only with latest kernel and no effort guarantees
• Dealing with kernel bugs, e.g. crashes
  – Find out the root cause (buggy code) with limited possibilities (compared to local development)
    • Typically no direct access to customer's system or workload
    • Long turnarounds for providing a modified debug kernel and reproducing the bug
  – Write and deliver a fix (upstream first!) or workaround; fix goes to next update
    • Possibly a livepatch in some cases
  – Is a lot of fun ;-)

Kernel Oops - The Real World Example

Evaluating Linux Kernel Crash Dumping Mechanisms

Evaluating Linux Kernel Crash Dumping Mechanisms. Fernando Luis Vázquez Cao, NTT Data Intellilink, [email protected]

Abstract

There have been several kernel crash dump capturing solutions available for Linux for some time now and one of them, kdump, has even made it into the mainline kernel.

But the mere fact of having such a feature does not necessarily imply that we can obtain a dump reliably under any conditions. The LKDTT (Linux Kernel Dump Test Tool) project was created to evaluate crash dumping mechanisms in terms of success rate, accuracy and completeness.

A major goal of LKDTT is maximizing the coverage of the tests. For this purpose, LKDTT forces the system to crash by artificially recreating crash scenarios (panic, hang, exception, stack overflow, hang, etc.), taking into account the hardware conditions (such as ongoing DMA or interrupt state) and the load of the system.

1 Introduction

Mainstream Linux lacked a kernel crash dumping mechanism for a long time despite the fact that there were several solutions (such as Diskdump [1], Netdump [2], and LKCD [3]) available out of tree. Concerns about their intrusiveness and reliability prevented them from making it into the vanilla kernel.

Eventually, a handful of crash dumping solutions based on kexec [4, 5] appeared: Kdump [6, 7], Mini Kernel Dump [8], and Tough Dump [9]. On paper, the kexec-based approach seemed very reliable and the impact in the kernel code was certainly small. Thus, kdump was eventually proposed as Linux kernel's crash dumping mechanism and subsequently accepted.

Kdump and Introduction to Vmcore Analysis How to Get Started with Inspecting Kernel Failures

KDUMP AND INTRODUCTION TO VMCORE ANALYSIS: HOW TO GET STARTED WITH INSPECTING KERNEL FAILURES. PATRICK LADD, TECHNICAL ACCOUNT MANAGER, RED HAT, [email protected]; slides available at https://people.redhat.com/pladd

SUMMARY: TOPICS TO BE COVERED
WHAT IS A VMCORE, AND WHEN IS IT CAPTURED?
CONFIGURATION OF THE KDUMP UTILITY AND TESTING
SETTING UP A VMCORE ANALYSIS SYSTEM
USING THE "CRASH" UTILITY FOR INITIAL ANALYSIS OF VMCORE CONTENTS

WHAT IS A VMCORE?
It is the contents of system RAM. Ordinarily, captured via: makedumpfile; VMware suspend files; QEMU suspend-to-disk images.

# hexdump -C vmcore -s 0x00011d8 -n 200
000011d8 56 4d 43 4f 52 45 49 4e 46 4f 00 00 4f 53 52 45 |VMCOREINFO..OSRE|
000011e8 4c 45 41 53 45 3d 32 2e 36 2e 33 32 2d 35 37 33 |LEASE=2.6.32-573|
000011f8 2e 32 32 2e 31 2e 65 6c 36 2e 78 38 36 5f 36 34 |.22.1.el6.x86_64|
00001208 0a 50 41 47 45 53 49 5a 45 3d 34 30 39 36 0a 53 |.PAGESIZE=4096.S|
00001218 59 4d 42 4f 4c 28 69 6e 69 74 5f 75 74 73 5f 6e |YMBOL(init_uts_n|
00001228 73 29 3d 66 66 66 66 66 66 66 66 38 31 61 39 36 |s)=ffffffff81a96|
00001238 39 36 30 0a 53 59 4d 42 4f 4c 28 6e 6f 64 65 5f |960.SYMBOL(node_|
00001248 6f 6e 6c 69 6e 65 5f 6d 61 70 29 3d 66 66 66 66 |online_map)=ffff|
00001258 66 66 66 66 38 31 63 31 61 36 63 30 0a 53 59 4d |ffff81c1a6c0.SYM|
00001268 42 4f 4c 28 73 77 61 70 70 65 72 5f 70 67 5f 64 |BOL(swapper_pg_d|
00001278 69 72 29 3d 66 66 66 66 66 66 66 66 38 31 61 38 |ir)=ffffffff81a8|
00001288 64 30 30 30 0a 53 59 4d 42 4f 4c 28 5f 73 74 65 |d000.SYMBOL(_ste|
00001298 78 74 29 3d 66 66 66 66 |xt)=ffff|
000012a0

WHEN IS ONE WRITTEN?
By default, when the kernel encounters a state in which it cannot gracefully continue execution.
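The ASCII column of the hexdump in the excerpt above shows the VMCOREINFO data as newline-separated KEY=value text (OSRELEASE, PAGESIZE, SYMBOL(...) entries). As a minimal sketch, assuming the payload has already been extracted as raw bytes, such text could be split into a dictionary as follows. The function name `parse_vmcoreinfo` and the sample bytes are illustrative only; they are not part of kdump, makedumpfile, or the crash utility.

```python
def parse_vmcoreinfo(payload: bytes) -> dict:
    """Split newline-separated KEY=value text into a dict of strings.

    Hypothetical helper for illustration; lines without '=' are skipped.
    """
    text = payload.decode("ascii", errors="replace")
    fields = {}
    for line in text.split("\n"):
        key, sep, value = line.partition("=")
        if sep and key:
            fields[key] = value
    return fields


# Sample bytes transcribed from the complete entries visible in the hexdump.
sample = (
    b"OSRELEASE=2.6.32-573.22.1.el6.x86_64\n"
    b"PAGESIZE=4096\n"
    b"SYMBOL(init_uts_ns)=ffffffff81a96960\n"
    b"SYMBOL(node_online_map)=ffffffff81c1a6c0\n"
)

info = parse_vmcoreinfo(sample)
print(info["OSRELEASE"])
print(int(info["PAGESIZE"]))
print(hex(int(info["SYMBOL(init_uts_ns)"], 16)))
```

Values are kept as strings and converted by the caller, since the dump only shows that symbol addresses are written in hexadecimal without a prefix.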

About NVRAM and PRAM

About NVRAM and PRAM (7/29/2014)

Learn about your Mac's PRAM or NVRAM, and when and how you might want to reset it.

Your Mac stores certain settings in a special memory area even if it is turned off. On Intel‑based Macs, this is stored in memory known as NVRAM; on PowerPC‑based Macs, this is stored in memory known as PRAM.

Information stored in NVRAM / PRAM includes:
Speaker volume
Screen resolution
Startup disk selection
Recent kernel panic information, if any

If you experience issues related to these functions, you may need to reset the NVRAM or PRAM. For example, if your Mac starts up from a startup disk other than the one you've specified in Startup Disk preferences, or if a "question mark" icon appears briefly when your Mac starts up, resetting NVRAM / PRAM may help.

Resetting NVRAM / PRAM
1. Shut down your Mac.
2. Locate the following keys on the keyboard: Command (⌘), Option, P, and R. You will need to hold these keys down simultaneously in step 4.
3. Turn on the computer.
4. Press and hold the Command‑Option‑P‑R keys before the gray screen appears.
5. Hold the keys down until the computer restarts and you hear the startup sound for the second time.
6. Release the keys.

After resetting NVRAM or PRAM, you may need to reconfigure your settings for speaker volume, screen resolution, startup disk selection, and time zone information. If issues persist, your Mac's logic board battery (not a portable Mac's rechargeable battery) may need to be replaced.

Basic Kernel Crash Dump Analysis

Basic Kernel Crash Dump Analysis. Hardik Vyas, Technical Support Engineer (Kernel), 19/04/2016

Agenda
PART-1
● Basics of kernel panic and system hang
● kdump and different methods available to capture a kernel crash dump (vmcore)
PART-2
● Basics of crash
● Initial Analysis
● Memory Subsystem

Basics of kernel panic and system hang: What is kernel panic?
• A kernel panic is an action taken by an operating system upon detecting an internal fatal error from which it cannot safely recover.
• It is possible for kernel code to indicate such a condition by calling the panic function declared in the header file "sys/system.h".
• There are four main causes of kernel panic:
■ Software problems
■ Hardware problems
■ Firmware/BIOS problems
■ Manual or condition panic

■ Software problems:
o Bug in kernel
o Bug in kernel module
o Bug in unsigned (U) proprietary (P) kernel module
o Bug in hypervisor etc.

Examples of Software Problems:
• BUGs / Assertion errors
kernel BUG at net/sunrpc/sched.c:695!
kernel BUG at /opt/kaspersky/kav4fs/src/kernel/redirfs/rfs_inode.c:61!
• Null pointer dereference
BUG: unable to handle kernel NULL pointer dereference at (null)
BUG: unable to handle kernel NULL pointer dereference at 0000000000000008
• Unable to handle kernel paging request
BUG: unable to handle kernel paging request at ffffea00028e8018

■ Hardware problems:
o Faulty RAM (Physical Memory)
o Faulty Processor
o Faulty PCI interfaces and USB devices etc.

Examples of Hardware Problems:
• Machine Check Exception (MCE)
Kernel panic - not syncing: Fatal Machine check
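The example messages quoted in the excerpt above lend themselves to simple pattern matching during a first pass over a console log. The following is a minimal sketch, assuming log lines are available as plain strings. The signature table and the `classify_panic_line` name are hypothetical, the category labels only mirror the slide headings, and the patterns cover just the four message shapes quoted above.

```python
import re

# Patterns taken from the example messages in the excerpt; labels follow
# the slide headings. This table is illustrative, not exhaustive.
PANIC_SIGNATURES = [
    (re.compile(r"kernel BUG at \S+:\d+!"), "software: BUG / assertion error"),
    (re.compile(r"unable to handle kernel NULL pointer dereference"),
     "software: null pointer dereference"),
    (re.compile(r"unable to handle kernel paging request"),
     "software: unable to handle kernel paging request"),
    (re.compile(r"Fatal Machine check"),
     "hardware: Machine Check Exception (MCE)"),
]


def classify_panic_line(line: str) -> str:
    """Return the first matching category label, or 'unclassified'."""
    for pattern, label in PANIC_SIGNATURES:
        if pattern.search(line):
            return label
    return "unclassified"


examples = [
    "kernel BUG at net/sunrpc/sched.c:695!",
    "BUG: unable to handle kernel NULL pointer dereference at 0000000000000008",
    "BUG: unable to handle kernel paging request at ffffea00028e8018",
    "Kernel panic - not syncing: Fatal Machine check",
]
for message in examples:
    print(classify_panic_line(message), "<-", message)
```

A match only suggests where to look first; for instance, a machine check points toward hardware, but confirming the cause still requires analyzing the vmcore or hardware logs.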

Application Note: RHEL Kernel Panic

Application Note: RHEL Kernel Panic. Marvell®. Essential technology, done right.™

Products Affected
HPE® FlexFabric 10Gb 4-port FLR-T 57840S Adapter
HPE CN1100R Dual Port Converged Network Adapter
HPE CN1100R-T Converged Network Adapter
HPE FlexFabric® 10Gb 2-port 534M Adapter
HPE FlexFabric 20Gb 2-port 630FLB Adapter
HPE FlexFabric 20Gb 2-port 630M Adapter
HPE FlexFabric 10Gb 2-port FLR-T 57810S Adapter
HPE FlexFabric 10Gb 2-port FLR-SFP+ 57810S Adapter
HPE FlexFabric 10Gb 2-port 536FLB Adapter
HPE Synergy 2820C 10Gb Converged Network Adapter
HPE Synergy 3820C 10/20Gb Converged Network Adapter

Introduction
This application note describes a situation where a kernel panic occurs after the installation of Red Hat® Enterprise Linux® if configured for hardware iSCSI offload boot, unless the Linux network interface ifcfg scripts are modified for certain network adapters.

Scope
This application note affects any HPE server configured with any of the network adapters listed in the Products Affected table.

Description
On an HPE server configured with any of the network adapters listed in the Products Affected table and configured for hardware iSCSI offload boot, Red Hat Enterprise Linux 7 and Red Hat Enterprise Linux 6 will kernel panic instead of booting after the installation. The kernel panic occurs because the network adapter is configured for hardware offload iSCSI boot and Linux is attempting to boot from the iSCSI LUN.

Document Classification: Public. Doc. No. AH0451401-00 Rev. C, May 3, 2021. Copyright © 2021 Marvell

Workaround
At the end of the Red Hat Enterprise Linux 6 or Red Hat Enterprise Linux 7 installation, perform the following steps: 1.