Linux Kernel Crash Book Everything You Need to Know

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

FIPS 140-2 Non-Proprietary Security Policy

Kernel Crypto API Cryptographic Module version 1.0 FIPS 140-2 Non-Proprietary Security Policy Version 1.3 Last update: 2020-03-02 Prepared by: atsec information security corporation 9130 Jollyville Road, Suite 260 Austin, TX 78759 www.atsec.com © 2020 Canonical Ltd. / atsec information security This document can be reproduced and distributed only whole and intact, including this copyright notice. Kernel Crypto API Cryptographic Module FIPS 140-2 Non-Proprietary Security Policy Table of Contents 1. Cryptographic Module Specification ..................................................................................................... 5 1.1. Module Overview ..................................................................................................................................... 5 1.2. Modes of Operation ................................................................................................................................. 9 2. Cryptographic Module Ports and Interfaces ........................................................................................ 10 3. Roles, Services and Authentication ..................................................................................................... 11 3.1. Roles .......................................................................................................................................................11 3.2. Services ...................................................................................................................................................11 -

Kernel Panic

Kernel Panic Connect, Inc. 1701 Quincy Avenue, Suites 5 & 6, Naperville, IL 60540 Ph: (630) 717-7200 Fax: (630) 717-7243 www.connectrf.com Table of Contents SCO Kernel Patches for Panics on Pentium Systems…………………………………..1 Kernel Panic Debug Methodologies..............................................................................3 SCO Kernel Patches for Panics on Pentium Systems SCO Kernel Patches for Panics on Pentium Systems Web Links http://www.sco.com/cgi-bin/ssl_reference?104530 http://www.sco.com/cgi-bin/ssl_reference?105532 Pentium System Panics with Trap Type 6; no dump is saved to /dev/swap. Keywords panic trap kernel invalid opcode pentium querytlb querytbl 386 mpx unix v4 dump swap double type6 no patch /etc/conf/pack.d/kernel/locore.o image locore Release SCO UNIX System V/386 Release 3.2 Operating System Version 4.0, 4.1 and 4.2 SCO Open Desktop Release 2.0 and 3.0 SCO Open Server System Release 2.0 and 3.0 Problem My system panics with a Trap Type 6, but no memory dump gets written. Cause There is a flaw in the kernel querytlb() routine that allows the Pentium to execute a 386-specific instruction which causes it to panic with an invalid opcode. querytlb() is only called when your system is panicking. The fault causes the system to double- panic and it thus fails to write a panic dump to disk. This means that the panic trap type 6 is concealing another type of panic which will show itself after the following patch has been applied. Solution You can apply the following patch, as root, to: /etc/conf/pack.d/kernel/locore.o Use the procedure that follows. -

Introduction to Debugging the Freebsd Kernel

Introduction to Debugging the FreeBSD Kernel John H. Baldwin Yahoo!, Inc. Atlanta, GA 30327 [email protected], http://people.FreeBSD.org/˜jhb Abstract used either directly by the user or indirectly via other tools such as kgdb [3]. Just like every other piece of software, the The Kernel Debugging chapter of the FreeBSD kernel has bugs. Debugging a ker- FreeBSD Developer’s Handbook [4] covers nel is a bit different from debugging a user- several details already such as entering DDB, land program as there is nothing underneath configuring a system to save kernel crash the kernel to provide debugging facilities such dumps, and invoking kgdb on a crash dump. as ptrace() or procfs. This paper will give a This paper will not cover these topics. In- brief overview of some of the tools available stead, it will demonstrate some ways to use for investigating bugs in the FreeBSD kernel. FreeBSD’s kernel debugging tools to investi- It will cover the in-kernel debugger DDB and gate bugs. the external debugger kgdb which is used to perform post-mortem analysis on kernel crash dumps. 2 Kernel Crash Messages 1 Introduction The first debugging service the FreeBSD kernel provides is the messages the kernel prints on the console when the kernel crashes. When a userland application encounters a When the kernel encounters an invalid condi- bug the operating system provides services for tion (such as an assertion failure or a memory investigating the bug. For example, a kernel protection violation) it halts execution of the may save a copy of the a process’ memory current thread and enters a “panic” state also image on disk as a core dump. -

Oracle® Linux Administrator's Solutions Guide for Release 6

Oracle® Linux Administrator's Solutions Guide for Release 6 E37355-64 August 2017 Oracle Legal Notices Copyright © 2012, 2017, Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are "commercial computer software" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs. No other rights are granted to the U.S. -

Process and Memory Management Commands

Process and Memory Management Commands This chapter describes the Cisco IOS XR software commands used to manage processes and memory. For more information about using the process and memory management commands to perform troubleshooting tasks, see Cisco ASR 9000 Series Aggregation Services Router Getting Started Guide. • clear context, on page 2 • dumpcore, on page 3 • exception coresize, on page 6 • exception filepath, on page 8 • exception pakmem, on page 12 • exception sparse, on page 14 • exception sprsize, on page 16 • follow, on page 18 • monitor threads, on page 25 • process, on page 29 • process core, on page 32 • process mandatory, on page 34 • show context, on page 36 • show dll, on page 39 • show exception, on page 42 • show memory, on page 44 • show memory compare, on page 47 • show memory heap, on page 50 • show processes, on page 54 Process and Memory Management Commands 1 Process and Memory Management Commands clear context clear context To clear core dump context information, use the clear context command in the appropriate mode. clear context location {node-id | all} Syntax Description location{node-id | all} (Optional) Clears core dump context information for a specified node. The node-id argument is expressed in the rack/slot/module notation. Use the all keyword to indicate all nodes. Command Default No default behavior or values Command Modes Administration EXEC EXEC mode Command History Release Modification Release 3.7.2 This command was introduced. Release 3.9.0 No modification. Usage Guidelines To use this command, you must be in a user group associated with a task group that includes appropriate task IDs. -

Also Includes Slides and Contents From

The Compilation Toolchain Cross-Compilation for Embedded Systems Prof. Andrea Marongiu ([email protected]) Toolchain The toolchain is a set of development tools used in association with source code or binaries generated from the source code • Enables development in a programming language (e.g., C/C++) • It is used for a lot of operations such as a) Compilation b) Preparing Libraries Most common toolchain is the c) Reading a binary file (or part of it) GNU toolchain which is part of d) Debugging the GNU project • Normally it contains a) Compiler : Generate object files from source code files b) Linker: Link object files together to build a binary file c) Library Archiver: To group a set of object files into a library file d) Debugger: To debug the binary file while running e) And other tools The GNU Toolchain GNU (GNU’s Not Unix) The GNU toolchain has played a vital role in the development of the Linux kernel, BSD, and software for embedded systems. The GNU project produced a set of programming tools. Parts of the toolchain we will use are: -gcc: (GNU Compiler Collection): suite of compilers for many programming languages -binutils: Suite of tools including linker (ld), assembler (gas) -gdb: Code debugging tool -libc: Subset of standard C library (assuming a C compiler). -bash: free Unix shell (Bourne-again shell). Default shell on GNU/Linux systems and Mac OSX. Also ported to Microsoft Windows. -make: automation tool for compilation and build Program development tools The process of converting source code to an executable binary image requires several steps, each with its own tool. -

Post Mortem Crash Analysis

Post Mortem Crash Analysis Johan Heander & Magnus Malmborn January 14, 2007 Abstract To improve the quality and reliability of embedded systems it is important to gather information about errors in units already sold and deployed. To achieve this, a system for transmitting error information from the customer back to the developers is needed, and the developers must also have a set of tools to analyze the error reports. The purpose of this master thesis was to develop a fully functioning demon- stration system for collection, transmission and interpretation of error reports from Axis network cameras using the Linux operating system. The system has been shown to handle both kernel and application errors and conducts automatic analysis of received data. It also uses standard HTTP protocol for all network transfers making it easy to use even on firewalled net- works. i ii Acknowledgement We would like to thank our LTH supervisor Jonas Skeppstedt for all he has taught us about computer science in general and operating systems and the C programming language in particular. We would also like to thank Mikael Starvik at Axis Communications for quickly providing us with all hardware and information we needed to complete this thesis, and for providing us with support during our implementation and writing. Finally we thank all the developers working at Axis Communications, many of whom have provided input and reflections on our work. iii iv Contents 1 Introduction 1 1.1 Problem description . 1 1.2 Problem analysis . 1 2 Background 3 2.1 Kernel crashes . 3 2.2 User space crashes . -

Dm-X: Protecting Volume-Level Integrity for Cloud Volumes and Local

dm-x: Protecting Volume-level Integrity for Cloud Volumes and Local Block Devices Anrin Chakraborti Bhushan Jain Jan Kasiak Stony Brook University Stony Brook University & Stony Brook University UNC, Chapel Hill Tao Zhang Donald Porter Radu Sion Stony Brook University & Stony Brook University & Stony Brook University UNC, Chapel Hill UNC, Chapel Hill ABSTRACT on Amazon’s Virtual Private Cloud (VPC) [2] (which provides The verified boot feature in recent Android devices, which strong security guarantees through network isolation) store deploys dm-verity, has been overwhelmingly successful in elim- data on untrusted storage devices residing in the public cloud. inating the extremely popular Android smart phone rooting For example, Amazon VPC file systems, object stores, and movement [25]. Unfortunately, dm-verity integrity guarantees databases reside on virtual block devices in the Amazon are read-only and do not extend to writable volumes. Elastic Block Storage (EBS). This paper introduces a new device mapper, dm-x, that This allows numerous attack vectors for a malicious cloud efficiently (fast) and reliably (metadata journaling) assures provider/untrusted software running on the server. For in- volume-level integrity for entire, writable volumes. In a direct stance, to ensure SEC-mandated assurances of end-to-end disk setup, dm-x overheads are around 6-35% over ext4 on the integrity, banks need to guarantee that the external cloud raw volume while offering additional integrity guarantees. For storage service is unable to remove log entries documenting cloud storage (Amazon EBS), dm-x overheads are negligible. financial transactions. Yet, without integrity of the storage device, a malicious cloud storage service could remove logs of a coordinated attack. -

Riscv-Software-Stack-Tutorial-Hpca2015

Software Tools Bootcamp RISC-V ISA Tutorial — HPCA-21 08 February 2015 Albert Ou UC Berkeley [email protected] Preliminaries To follow along, download these slides at http://riscv.org/tutorial-hpca2015.html 2 Preliminaries . Shell commands are prefixed by a “$” prompt. Due to time constraints, we will not be building everything from source in real-time. - Binaries have been prepared for you in the VM image. - Detailed build steps are documented here for completeness but are not necessary if using the VM. Interactive portions of this tutorial are denoted with: $ echo 'Hello world' . Also as a reminder, these slides are marked with an icon in the upper-right corner: 3 Software Stack . Many possible combinations (and growing) . But here we will focus on the most common workflows for RISC-V software development 4 Agenda 1. riscv-tools infrastructure 2. First Steps 3. Spike + Proxy Kernel 4. QEMU + Linux 5. Advanced Cross-Compiling 6. Yocto/OpenEmbedded 5 riscv-tools — Overview “Meta-repository” with Git submodules for every stable component of the RISC-V software toolchain Submodule Contents riscv-fesvr RISC-V Frontend Server riscv-isa-sim Functional ISA simulator (“Spike”) riscv-qemu Higher-performance ISA simulator riscv-gnu-toolchain binutils, gcc, newlib, glibc, Linux UAPI headers riscv-llvm LLVM, riscv-clang submodule riscv-pk RISC-V Proxy Kernel (riscv-linux) Linux/RISC-V kernel port riscv-tests ISA assembly tests, benchmark suite All listed submodules are hosted under the riscv GitHub organization: https://github.com/riscv 6 riscv-tools — Installation . Build riscv-gnu-toolchain (riscv*-*-elf / newlib target), riscv-fesvr, riscv-isa-sim, and riscv-pk: (pre-installed in VM) $ git clone https://github.com/riscv/riscv-tools $ cd riscv-tools $ git submodule update --init --recursive $ export RISCV=<installation path> $ export PATH=${PATH}:${RISCV}/bin $ ./build.sh . -

The Xen Port of Kexec / Kdump a Short Introduction and Status Report

The Xen Port of Kexec / Kdump A short introduction and status report Magnus Damm Simon Horman VA Linux Systems Japan K.K. www.valinux.co.jp/en/ Xen Summit, September 2006 Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 1 / 17 Outline Introduction to Kexec What is Kexec? Kexec Examples Kexec Overview Introduction to Kdump What is Kdump? Kdump Kernels The Crash Utility Xen Porting Effort Kexec under Xen Kdump under Xen The Dumpread Tool Partial Dumps Current Status Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 2 / 17 Introduction to Kexec Outline Introduction to Kexec What is Kexec? Kexec Examples Kexec Overview Introduction to Kdump What is Kdump? Kdump Kernels The Crash Utility Xen Porting Effort Kexec under Xen Kdump under Xen The Dumpread Tool Partial Dumps Current Status Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 3 / 17 Kexec allows you to reboot from Linux into any kernel. as long as the new kernel doesn’t depend on the BIOS for setup. Introduction to Kexec What is Kexec? What is Kexec? “kexec is a system call that implements the ability to shutdown your current kernel, and to start another kernel. It is like a reboot but it is indepedent of the system firmware...” Configuration help text in Linux-2.6.17 Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 4 / 17 . as long as the new kernel doesn’t depend on the BIOS for setup. Introduction to Kexec What is Kexec? What is Kexec? “kexec is a system call that implements the ability to shutdown your current kernel, and to start another kernel. -

What Is an Operating System III 2.1 Compnents II an Operating System

Page 1 of 6 What is an Operating System III 2.1 Compnents II An operating system (OS) is software that manages computer hardware and software resources and provides common services for computer programs. The operating system is an essential component of the system software in a computer system. Application programs usually require an operating system to function. Memory management Among other things, a multiprogramming operating system kernel must be responsible for managing all system memory which is currently in use by programs. This ensures that a program does not interfere with memory already in use by another program. Since programs time share, each program must have independent access to memory. Cooperative memory management, used by many early operating systems, assumes that all programs make voluntary use of the kernel's memory manager, and do not exceed their allocated memory. This system of memory management is almost never seen any more, since programs often contain bugs which can cause them to exceed their allocated memory. If a program fails, it may cause memory used by one or more other programs to be affected or overwritten. Malicious programs or viruses may purposefully alter another program's memory, or may affect the operation of the operating system itself. With cooperative memory management, it takes only one misbehaved program to crash the system. Memory protection enables the kernel to limit a process' access to the computer's memory. Various methods of memory protection exist, including memory segmentation and paging. All methods require some level of hardware support (such as the 80286 MMU), which doesn't exist in all computers. -

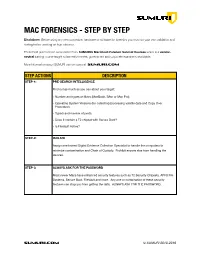

SUMURI Macintosh Forensics Best Practices

! MAC FORENSICS - STEP BY STEP Disclaimer: Before using any new procedure, hardware or software for forensics you must do your own validation and testing before working on true evidence. These best practices are summarized from SUMURI’s Macintosh Forensic Survival Courses which is a vendor- neutral training course taught to law enforcement, government and corporate examiners worldwide. More information about SUMURI can be found at SUMURI.com STEP ACTIONS DESCRIPTION STEP-1: PRE-SEARCH INTELLIGENCE Find out as much as you can about your target: • Number and types of Macs (MacBook, iMac or Mac Pro). • Operating System Versions (for collecting/processing volatile data and Copy Over Procedure). • Type/s and number of ports. • Does it contain a T2 chipset with Secure Boot? • Is FileVault Active? STEP-2: ISOLATE Assign one trained Digital Evidence Collection Specialist to handle the computers to minimize contamination and Chain of Custody. Prohibit anyone else from handling the devices. STEP-3: ALWAYS ASK FOR THE PASSWORD Most newer Macs have enhanced security features such as T2 Security Chipsets, APFS File Systems, Secure Boot, FileVault and more. Any one or combination of these security features can stop you from getting the data. ALWAYS ASK FOR THE PASSWORD. SUMURI.com © SUMURI 2010-2019 ! STEP ACTIONS DESCRIPTION STEP-4: IF COMPUTER IS ON - SCREEN SAVER PASSWORD ACTIVE Options are: • Ask for the Password - Confirm password and proceed to Step-6. • Restart to Image RAM - Connect a RAM Imaging Utility to the Mac such as RECON IMAGER. Conduct a soft-restart (do not power off if possible and image the RAM).