Kdump and Introduction to Vmcore Analysis How to Get Started with Inspecting Kernel Failures

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

FIPS 140-2 Non-Proprietary Security Policy

Kernel Crypto API Cryptographic Module version 1.0 FIPS 140-2 Non-Proprietary Security Policy Version 1.3 Last update: 2020-03-02 Prepared by: atsec information security corporation 9130 Jollyville Road, Suite 260 Austin, TX 78759 www.atsec.com © 2020 Canonical Ltd. / atsec information security This document can be reproduced and distributed only whole and intact, including this copyright notice. Kernel Crypto API Cryptographic Module FIPS 140-2 Non-Proprietary Security Policy Table of Contents 1. Cryptographic Module Specification ..................................................................................................... 5 1.1. Module Overview ..................................................................................................................................... 5 1.2. Modes of Operation ................................................................................................................................. 9 2. Cryptographic Module Ports and Interfaces ........................................................................................ 10 3. Roles, Services and Authentication ..................................................................................................... 11 3.1. Roles .......................................................................................................................................................11 3.2. Services ...................................................................................................................................................11 -

Kernel Panic

Kernel Panic Connect, Inc. 1701 Quincy Avenue, Suites 5 & 6, Naperville, IL 60540 Ph: (630) 717-7200 Fax: (630) 717-7243 www.connectrf.com Table of Contents SCO Kernel Patches for Panics on Pentium Systems…………………………………..1 Kernel Panic Debug Methodologies..............................................................................3 SCO Kernel Patches for Panics on Pentium Systems SCO Kernel Patches for Panics on Pentium Systems Web Links http://www.sco.com/cgi-bin/ssl_reference?104530 http://www.sco.com/cgi-bin/ssl_reference?105532 Pentium System Panics with Trap Type 6; no dump is saved to /dev/swap. Keywords panic trap kernel invalid opcode pentium querytlb querytbl 386 mpx unix v4 dump swap double type6 no patch /etc/conf/pack.d/kernel/locore.o image locore Release SCO UNIX System V/386 Release 3.2 Operating System Version 4.0, 4.1 and 4.2 SCO Open Desktop Release 2.0 and 3.0 SCO Open Server System Release 2.0 and 3.0 Problem My system panics with a Trap Type 6, but no memory dump gets written. Cause There is a flaw in the kernel querytlb() routine that allows the Pentium to execute a 386-specific instruction which causes it to panic with an invalid opcode. querytlb() is only called when your system is panicking. The fault causes the system to double- panic and it thus fails to write a panic dump to disk. This means that the panic trap type 6 is concealing another type of panic which will show itself after the following patch has been applied. Solution You can apply the following patch, as root, to: /etc/conf/pack.d/kernel/locore.o Use the procedure that follows. -

The Xen Port of Kexec / Kdump a Short Introduction and Status Report

The Xen Port of Kexec / Kdump A short introduction and status report Magnus Damm Simon Horman VA Linux Systems Japan K.K. www.valinux.co.jp/en/ Xen Summit, September 2006 Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 1 / 17 Outline Introduction to Kexec What is Kexec? Kexec Examples Kexec Overview Introduction to Kdump What is Kdump? Kdump Kernels The Crash Utility Xen Porting Effort Kexec under Xen Kdump under Xen The Dumpread Tool Partial Dumps Current Status Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 2 / 17 Introduction to Kexec Outline Introduction to Kexec What is Kexec? Kexec Examples Kexec Overview Introduction to Kdump What is Kdump? Kdump Kernels The Crash Utility Xen Porting Effort Kexec under Xen Kdump under Xen The Dumpread Tool Partial Dumps Current Status Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 3 / 17 Kexec allows you to reboot from Linux into any kernel. as long as the new kernel doesn’t depend on the BIOS for setup. Introduction to Kexec What is Kexec? What is Kexec? “kexec is a system call that implements the ability to shutdown your current kernel, and to start another kernel. It is like a reboot but it is indepedent of the system firmware...” Configuration help text in Linux-2.6.17 Magnus Damm ([email protected]) Kexec / Kdump Xen Summit, September 2006 4 / 17 . as long as the new kernel doesn’t depend on the BIOS for setup. Introduction to Kexec What is Kexec? What is Kexec? “kexec is a system call that implements the ability to shutdown your current kernel, and to start another kernel. -

What Is an Operating System III 2.1 Compnents II an Operating System

Page 1 of 6 What is an Operating System III 2.1 Compnents II An operating system (OS) is software that manages computer hardware and software resources and provides common services for computer programs. The operating system is an essential component of the system software in a computer system. Application programs usually require an operating system to function. Memory management Among other things, a multiprogramming operating system kernel must be responsible for managing all system memory which is currently in use by programs. This ensures that a program does not interfere with memory already in use by another program. Since programs time share, each program must have independent access to memory. Cooperative memory management, used by many early operating systems, assumes that all programs make voluntary use of the kernel's memory manager, and do not exceed their allocated memory. This system of memory management is almost never seen any more, since programs often contain bugs which can cause them to exceed their allocated memory. If a program fails, it may cause memory used by one or more other programs to be affected or overwritten. Malicious programs or viruses may purposefully alter another program's memory, or may affect the operation of the operating system itself. With cooperative memory management, it takes only one misbehaved program to crash the system. Memory protection enables the kernel to limit a process' access to the computer's memory. Various methods of memory protection exist, including memory segmentation and paging. All methods require some level of hardware support (such as the 80286 MMU), which doesn't exist in all computers. -

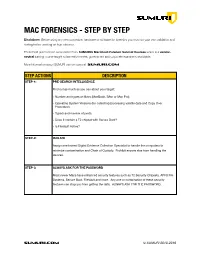

SUMURI Macintosh Forensics Best Practices

! MAC FORENSICS - STEP BY STEP Disclaimer: Before using any new procedure, hardware or software for forensics you must do your own validation and testing before working on true evidence. These best practices are summarized from SUMURI’s Macintosh Forensic Survival Courses which is a vendor- neutral training course taught to law enforcement, government and corporate examiners worldwide. More information about SUMURI can be found at SUMURI.com STEP ACTIONS DESCRIPTION STEP-1: PRE-SEARCH INTELLIGENCE Find out as much as you can about your target: • Number and types of Macs (MacBook, iMac or Mac Pro). • Operating System Versions (for collecting/processing volatile data and Copy Over Procedure). • Type/s and number of ports. • Does it contain a T2 chipset with Secure Boot? • Is FileVault Active? STEP-2: ISOLATE Assign one trained Digital Evidence Collection Specialist to handle the computers to minimize contamination and Chain of Custody. Prohibit anyone else from handling the devices. STEP-3: ALWAYS ASK FOR THE PASSWORD Most newer Macs have enhanced security features such as T2 Security Chipsets, APFS File Systems, Secure Boot, FileVault and more. Any one or combination of these security features can stop you from getting the data. ALWAYS ASK FOR THE PASSWORD. SUMURI.com © SUMURI 2010-2019 ! STEP ACTIONS DESCRIPTION STEP-4: IF COMPUTER IS ON - SCREEN SAVER PASSWORD ACTIVE Options are: • Ask for the Password - Confirm password and proceed to Step-6. • Restart to Image RAM - Connect a RAM Imaging Utility to the Mac such as RECON IMAGER. Conduct a soft-restart (do not power off if possible and image the RAM). -

The Linux 2.4 Kernel's Startup Procedure

The Linux 2.4 Kernel’s Startup Procedure William Gatliff 1. Overview This paper describes the Linux 2.4 kernel’s startup process, from the moment the kernel gets control of the host hardware until the kernel is ready to run user processes. Along the way, it covers the programming environment Linux expects at boot time, how peripherals are initialized, and how Linux knows what to do next. 2. The Big Picture Figure 1 is a function call diagram that describes the kernel’s startup procedure. As it shows, kernel initialization proceeds through a number of distinct phases, starting with basic hardware initialization and ending with the kernel’s launching of /bin/init and other user programs. The dashed line in the figure shows that init() is invoked as a kernel thread, not as a function call. Figure 1. The kernel’s startup procedure. Figure 2 is a flowchart that provides an even more generalized picture of the boot process, starting with the bootloader extracting and running the kernel image, and ending with running user programs. Figure 2. The kernel’s startup procedure, in less detail. The following sections describe each of these function calls, including examples taken from the Hitachi SH7750/Sega Dreamcast version of the kernel. 3. In The Beginning... The Linux boot process begins with the kernel’s _stext function, located in arch/<host>/kernel/head.S. This function is called _start in some versions. Interrupts are disabled at this point, and only minimal memory accesses may be possible depending on the capabilities of the host hardware. -

Kdump, a Kexec-Based Kernel Crash Dumping Mechanism

Kdump, A Kexec-based Kernel Crash Dumping Mechanism Vivek Goyal Eric W. Biederman Hariprasad Nellitheertha IBM Linux NetworkX IBM [email protected] [email protected] [email protected] Abstract important consideration for the success of a so- lution has been the reliability and ease of use. Kdump is a crash dumping solution that pro- Kdump is a kexec based kernel crash dump- vides a very reliable dump generation and cap- ing mechanism, which is being perceived as turing mechanism [01]. It is simple, easy to a reliable crash dumping solution for Linux R . configure and provides a great deal of flexibility This paper begins with brief description of what in terms of dump device selection, dump saving kexec is and what it can do in general case, and mechanism, and plugging-in filtering mecha- then details how kexec has been modified to nism. boot a new kernel even in a system crash event. The idea of kdump has been around for Kexec enables booting into a new kernel while quite some time now, and initial patches for preserving the memory contents in a crash sce- kdump implementation were posted to the nario, and kdump uses this feature to capture Linux kernel mailing list last year [03]. Since the kernel crash dump. Physical memory lay- then, kdump has undergone significant design out and processor state are encoded in ELF core changes to ensure improved reliability, en- format, and these headers are stored in a re- hanced ease of use and cleaner interfaces. This served section of memory. Upon a crash, new paper starts with an overview of the kdump de- kernel boots up from reserved memory and pro- sign and development history. -

Measuring and Improving Memory's Resistance to Operating System

University of Michigan CSE-TR-273-95 Measuring and Improving Memory’s Resistance to Operating System Crashes Wee Teck Ng, Gurushankar Rajamani, Christopher M. Aycock, Peter M. Chen Computer Science and Engineering Division Department of Electrical Engineering and Computer Science University of Michigan {weeteck,gurur,caycock,pmchen}@eecs.umich.edu Abstract: Memory is commonly viewed as an unreliable place to store permanent data because it is per- ceived to be vulnerable to system crashes.1 Yet despite all the negative implications of memory’s unreli- ability, no data exists that quantifies how vulnerable memory actually is to system crashes. The goals of this paper are to quantify the vulnerability of memory to operating system crashes and to propose a method for protecting memory from these crashes. We use software fault injection to induce a wide variety of operating system crashes in DEC Alpha work- stations running Digital Unix, ranging from bit errors in the kernel stack to deleting branch instructions to C-level allocation management errors. We show that memory is remarkably resistant to operating system crashes. Out of the 996 crashes we observed, only 17 corrupted file cache data. Excluding direct corruption from copy overruns, only 2 out of 820 corrupted file cache data. This data contradicts the common assump- tion that operating system crashes often corrupt files in memory. For users who need even greater protec- tion against operating system crashes, we propose a simple, low-overhead software scheme that controls access to file cache buffers using virtual memory protection and code patching. 1 Introduction A modern storage hierarchy combines random-access memory, magnetic disk, and possibly optical disk or magnetic tape to try to keep pace with rapid advances in processor performance. -

Taming Hosted Hypervisors with (Mostly) Deprivileged Execution

Taming Hosted Hypervisors with (Mostly) Deprivileged Execution Chiachih Wu†, Zhi Wang*, Xuxian Jiang† †North Carolina State University, *Florida State University Virtualization is Widely Used 2 “There are now hundreds of thousands of companies around the world using AWS to run all their business, or at least a portion of it. They are located across 190 countries, which is just about all of them on Earth.” Werner Vogels, CTO at Amazon AWS Summit ‘12 “Virtualization penetration has surpassed 50% of all server workloads, and continues to grow.” Magic Quadrant for x86 Server Virtualization Infrastructure June ‘12 Threats to Hypervisors 3 Large Code Bases Hypervisor SLOC Xen (4.0) 194K VMware ESXi1 200K Hyper-V1 100K KVM (2.6.32.28) 33.6K 1: Data source: NOVA (Steinberg et al., EuroSys ’10) Hypervisor Vulnerabilities Vulnerabilities Xen 41 KVM 24 VMware ESXi 43 VMware Workstation 49 Data source: National Vulnerability Database (‘09~’12) Threats to Hosted Hypervisors 4 Applications … Applications Guest OS Guest OS Hypervisor Host OS Physical Hardware Can we prevent the compromised hypervisor from attacking the rest of the system? DeHype 5 Decomposing the KVM hypervisor codebase De-privileged part user-level (93.2% codebase) Privileged part small kernel module (2.3 KSLOC) Guest VM Applications … Applications Applications Applications … Guest OS Guest OS De-privilege Guest OS Guest OS DeHyped DeHyped KVM KVM’ HypeLet KVM ~4% overhead Host OS Host OS Physical Hardware Physical Hardware Challenges 6 Providing the OS services in user mode Minimizing performance overhead Supporting hardware-assisted memory virtualization at user-level Challenge I 7 Providing the OS services in user mode De-privileged Hypervisor Hypervisor User Kernel Hypervisor HypeLet Host OS Host OS Physical Hardware Physical Hardware Original Hosted Hypervisor DeHype’d Hosted Hypervisor Dependency Decoupling 8 Abstracting the host OS interface and providing OS functionalities in user mode For example Memory allocator: kmalloc/kfree, alloc_page, etc. -

Linux Kernal II 9.1 Architecture

Page 1 of 7 Linux Kernal II 9.1 Architecture: The Linux kernel is a Unix-like operating system kernel used by a variety of operating systems based on it, which are usually in the form of Linux distributions. The Linux kernel is a prominent example of free and open source software. Programming language The Linux kernel is written in the version of the C programming language supported by GCC (which has introduced a number of extensions and changes to standard C), together with a number of short sections of code written in the assembly language (in GCC's "AT&T-style" syntax) of the target architecture. Because of the extensions to C it supports, GCC was for a long time the only compiler capable of correctly building the Linux kernel. Compiler compatibility GCC is the default compiler for the Linux kernel source. In 2004, Intel claimed to have modified the kernel so that its C compiler also was capable of compiling it. There was another such reported success in 2009 with a modified 2.6.22 version of the kernel. Since 2010, effort has been underway to build the Linux kernel with Clang, an alternative compiler for the C language; as of 12 April 2014, the official kernel could almost be compiled by Clang. The project dedicated to this effort is named LLVMLinxu after the LLVM compiler infrastructure upon which Clang is built. LLVMLinux does not aim to fork either the Linux kernel or the LLVM, therefore it is a meta-project composed of patches that are eventually submitted to the upstream projects. -

Clusterfuzz: Fuzzing at Google Scale

Black Hat Europe 2019 ClusterFuzz Fuzzing at Google Scale Abhishek Arya Oliver Chang About us ● Chrome Security team (Bugs--) ● Abhishek Arya (@infernosec) ○ Founding Chrome Security member ○ Founder of ClusterFuzz ● Oliver Chang (@halbecaf) ○ Lead developer of ClusterFuzz ○ Tech lead for OSS-Fuzz 2 Fuzzing ● Effective at finding bugs by exploring unexpected states ● Recent developments ○ Coverage guided fuzzing ■ AFL started “smart fuzzing” (Nov’13) ○ Making fuzzing more accessible ■ libFuzzer - in-process fuzzing (Jan’15) ■ OSS-Fuzz - free fuzzing for open source (Dec’16) 3 Fuzzing mythbusting ● Fuzzing is only for security researchers or security teams ● Fuzzing only finds security vulnerabilities ● We don’t need fuzzers if our project is well unit-tested ● Our project is secure if there are no open bugs 4 Scaling fuzzing ● How to fuzz effectively as a Defender? ○ Not just “more cores” ● Security teams can’t write all fuzzers for the entire project ○ Bugs create triage burden ● Should seamlessly fit in software development lifecycle ○ Input: Commit unit-test like fuzzer in source ○ Output: Bugs, Fuzzing Statistics and Code Coverage 5 Fuzzing lifecycle Manual Automated Fuzzing Upload builds Build bucket Cloud Storage Find crash Write fuzzers De-duplicate Minimize Bisect File bug Fix bugs Test if fixed (daily) Close bug 6 Assign bug ClusterFuzz ● Open source - https://github.com/google/clusterfuzz ● Automates everything in the fuzzing lifecycle apart from “fuzzer writing” and “bug fixing” ● Runs 5,000 fuzzers on 25,000 cores, can scale more ● Cross platform (Linux, macOS, Windows, Android) ● Powers OSS-Fuzz and Google’s fuzzing 7 Fuzzing lifecycle 1. Write fuzzers 2. Build fuzzers 3. Fuzz at scale 4. -

Oracle VM Virtualbox User Manual

Oracle VM VirtualBox R User Manual Version 5.0.16 c 2004-2016 Oracle Corporation http://www.virtualbox.org Contents 1 First steps 11 1.1 Why is virtualization useful?............................. 12 1.2 Some terminology................................... 12 1.3 Features overview................................... 13 1.4 Supported host operating systems.......................... 15 1.5 Installing VirtualBox and extension packs...................... 16 1.6 Starting VirtualBox.................................. 17 1.7 Creating your first virtual machine......................... 18 1.8 Running your virtual machine............................ 21 1.8.1 Starting a new VM for the first time.................... 21 1.8.2 Capturing and releasing keyboard and mouse.............. 22 1.8.3 Typing special characters.......................... 23 1.8.4 Changing removable media......................... 24 1.8.5 Resizing the machine’s window...................... 24 1.8.6 Saving the state of the machine...................... 25 1.9 Using VM groups................................... 26 1.10 Snapshots....................................... 26 1.10.1 Taking, restoring and deleting snapshots................. 27 1.10.2 Snapshot contents.............................. 28 1.11 Virtual machine configuration............................ 29 1.12 Removing virtual machines.............................. 30 1.13 Cloning virtual machines............................... 30 1.14 Importing and exporting virtual machines..................... 31 1.15 Global Settings...................................