Sensemaking David T

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Searchers After Horror: Understanding H. P. Lovecraft and His Fiction

Mats Nyholm. Searchers After Horror: Understanding H. P. Lovecraft and His Fiction. 2021. ISBN 978-951-765-986-4. Åbo Akademi University Press, Tavastgatan 13, FI-20500 Åbo, Finland. Tel. +358 (0)2 215 4793 E-mail: [email protected] Sales and distribution: Åbo Akademi University Library, Domkyrkogatan 2–4, FI-20500 Åbo, Finland. Tel. +358 (0)2 215 4190 E-mail: [email protected] Åbo Akademis förlag | Åbo Akademi University Press, Åbo, Finland, 2021. CIP Cataloguing in Publication: Nyholm, Mats. Searchers after horror : understanding H. P. Lovecraft and his fiction / Mats Nyholm. - Åbo : Åbo Akademi University Press, 2021. Diss.: Åbo Akademi University. ISBN 978-951-765-986-4, ISBN 978-951-765-987-1 (digital). Painosalama Oy, Åbo 2021. Abstract: The aim of this thesis is to investigate the life and work of H. P. Lovecraft in an attempt to understand his work by viewing it through the filter of his life. The approach is thus historical-biographical in nature, based in historical context and drawing on the entirety of Lovecraft’s non-fiction production in addition to his weird fiction, with the aim being to suggest some correctives to certain prevailing critical views on Lovecraft. These views include the “cosmic school” led by Joshi, the “racist school” inaugurated by Houellebecq, and the “pulp school” that tends to be dismissive of Lovecraft’s work on stylistic grounds, these being the most prevalent depictions of Lovecraft currently. -

New Frontiers of Intelligence Analysis

A Publication of the Global Futures Partnership of the Sherman Kent School for Intelligence Analysis Link Campus University of Malta Gino Germani Center for Comparative Studies of Modernization and Development NEW FRONTIERS OF INTELLIGENCE ANALYSIS Papers presented at the conference on “New Frontiers of Intelligence Analysis: Shared Threats, Diverse Perspectives, New Communities” Rome, Italy, 31 March-2 April 2004 All statements of fact, opinion, or analysis expressed in these collected papers are those of the authors. They do not necessarily reflect the official positions or views of the Global Futures Partnership of the Sherman Kent School for Intelligence Analysis, of the Link Campus University of Malta, or of the Gino Germani Center for the Comparative Study of Modernization and Development. The papers in this publication have been published with the consent of the authors. This volume has been edited by the New Frontiers conference co-directors. Comments and queries are welcome and may be directed to New Frontiers conference co-director Dr. L. S. Germani: [email protected] All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior permission of the publishers and the authors of the papers. CONTENTS PREFACE 5 Vincenzo Scotti INTRODUCTION 9 Carol Dumaine and L. Sergio Germani Part I - INTRODUCTORY REMARKS TO THE CONFERENCE 1 - INTRODUCTORY REMARKS 15 Gianfranco Fini 2 - INTRODUCTORY REMARKS 17 Nicolò Pollari Part II – PAPERS PRESENTED AT THE CONFERENCE 3 - LOOKING OVER THE HORIZON: STRATEGIC CHOICES, INTELLIGENCE CHALLENGES 23 Robert L. -

The Views of the U.S. Left and Right on Whistleblowers Concerning Government Secrets

The Views of the U.S. Left and Right on Whistleblowers Concerning Government Secrets By Casey McKenzie Submitted to Central European University Department of International Relations and European Studies In partial fulfillment of the requirements for the degree of Master of Arts Supervisor: Professor Erin Kristin Jenne Word Count: 12,868 CEU eTD Collection Budapest, Hungary 2014 Abstract The debates on whistleblowers in the United States produce no simple answers, and to make things more confusing, there are no simple political left and right wings. The political wings can be further divided into far-left, moderate-left, moderate-right, and far-right. To understand the reactions of these political factions, the correct political spectrum must be applied. By using qualitative content analysis of far-left, moderate-left, moderate-right, and far-right news sites, I demonstrate that the debate over whistleblowers belongs along an establishment vs. anti-establishment spectrum. Acknowledgments I would like to express my fullest gratitude to my supervisor, Erin Kristin Jenne, for all the help she gave me and without whose guidance I would have been completely lost. And to Danielle, who always hit me in the back of the head when I wanted to give up. Table of Contents Abstract i Acknowledgments -

Compass: The Direction for the Democratic Left

Compass: The Direction for the Democratic Left. Mapping the Centre Ground. Peter Kellner. “This is a good time to think afresh about the way we do politics. The decline of the old ideologies has made many of the old Left-Right arguments redundant. A bold project to design a positive version of the Centre could fill the void.” Compass publications are intended to create real debate and discussion around the key issues facing the democratic left - however the views expressed in this publication are not a statement of Compass policy. All three leaders of Britain’s main political parties agree on one thing: elections are won and lost on the centre ground. Tony Blair insists that Labour has won the last three elections as a centre party, and would return to the wilderness were it to revert to left-wing policies. David Cameron says with equal fervour that the Conservatives must embrace the Centre if they are to return to power. Sir Menzies Campbell says that the Liberal Democrats occupy the centre ground out of principle, not electoral calculation, and he has nothing to fear from his rivals invading his space. What are we to make of all this? It is sometimes said that when any proposition commands such broad agreement, it is probably wrong. Does the shared obsession of all three party leaders count as a bad, consensual error – or are they right to compete for the same location on the left-right axis? This article is an attempt to answer that question, via an excursion down memory lane, a search for clear definitions and some speculation about the future of political debate. -

Desiring the Big Bad Blade: Racing the Sheikh in Desert Romances

Desiring the Big Bad Blade: Racing the Sheikh in Desert Romances Amira Jarmakani American Quarterly, Volume 63, Number 4, December 2011, pp. 895-928 (Article) Published by The Johns Hopkins University Press DOI: 10.1353/aq.2011.0061 For additional information about this article http://muse.jhu.edu/journals/aq/summary/v063/63.4.jarmakani.html Access provided by Georgia State University (4 Sep 2013 15:38 GMT) Cultural fantasy does not evade but confronts history. – Geraldine Heng, Empire of Magic: Medieval Romance and the Politics of Cultural Fantasy In a relatively small but nevertheless significant subgenre of romance novels,1 one may encounter a seemingly unlikely object of erotic attachment, a sheikh, sultan, or desert prince hero who almost always couples with a white western woman.2 In the realm of mass-market romance novels, a booming industry, the sheikh-hero of any standard desert romance is but one of the many possible alpha-male heroes, and while he is certainly not the most popular alpha-male hero in the contemporary market, he maintains a niche and even has a couple of blogs and an informative Web site devoted especially to him.3 Given popular perceptions of the Middle East as a threatening and oppressive place for women, it is perhaps not surprising that the heroine in a popular desert romance, Burning Love, characterizes her sheikh thusly: “Sharif was an Arab. To him every woman was a slave, including her. -

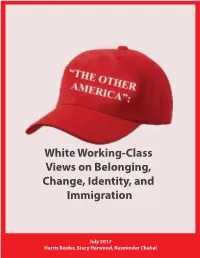

White Working-Class Views on Belonging, Change, Identity, and Immigration

White Working-Class Views on Belonging, Change, Identity, and Immigration July 2017 Harris Beider, Stacy Harwood, Kusminder Chahal Acknowledgments We thank all research participants for your enthusiastic participation in this project. We owe much gratitude to our two fabulous research assistants, Rachael Wilson and Erika McLitus from the University of Illinois, who helped us code all the transcripts, and to Efadul Huq, also from the University of Illinois, for the design and layout of the final report. Thank you to Open Society Foundations, US Programs for funding this study. About the Authors Harris Beider is Professor in Community Cohesion at Coventry University and a Visiting Professor in the School of International and Public Affairs at Columbia University. Stacy Anne Harwood is an Associate Professor in the Department of Urban & Regional Planning at the University of Illinois, Urbana-Champaign. Kusminder Chahal is a Research Associate for the Centre for Trust, Peace and Social Relations at Coventry University. For more information Contact Dr. Harris Beider Centre for Trust, Peace and Social Relations Coventry University, Priory Street Coventry, UK, CV1 5FB email: [email protected] Dr. Stacy Anne Harwood Department of Urban & Regional Planning University of Illinois at Urbana-Champaign 111 Temple Buell Hall, 611 E. Lorado Taft Drive Champaign, IL, USA, 61820 email: [email protected] How to Cite this Report Beider, H., Harwood, S., & Chahal, K. (2017). “The Other America”: White working-class views on belonging, change, identity, and immigration. Centre for Trust, Peace and Social Relations, Coventry University, UK. ISBN: 978-1-84600-077-5 Photography credits: except when noted all photographs were taken by the authors of this report. -

Sherman Kent, Scientific Hubris, and the CIA's Office of National Estimates

Inman Award Essay. Beacon and Warning: Sherman Kent, Scientific Hubris, and the CIA’s Office of National Estimates. J. Peter Scoblic. Would-be forecasters have increasingly extolled the predictive potential of Big Data and artificial intelligence. This essay reviews the career of Sherman Kent, the Yale historian who directed the CIA’s Office of National Estimates from 1952 to 1967, with an eye toward evaluating this enthusiasm. Charged with anticipating threats to U.S. national security, Kent believed, as did much of the postwar academy, that contemporary developments in the social sciences enabled scholars to forecast human behavior with far greater accuracy than before. The predictive record of the Office of National Estimates was, however, decidedly mixed. Kent’s methodological rigor enabled him to professionalize U.S. intelligence analysis, making him a model in today’s “post-truth” climate, but his failures offer a cautionary tale for those who insist that technology will soon reveal the future. I believe it is fair to say that, as a group, [19th-century historians] thought their knowledge of the past gave them a prophetic vision of what was to come.1 –Sherman Kent It is no small irony that the man who did […] on European civilization.2 Kent had no military, diplomatic, or intelligence background (in fact, no government experience of any kind). This would seem to make him an odd candidate to serve William “Wild Bill” Donovan, a man of intimidating martial accomplishment, whom President Franklin D. -

Sherman Kent at Yale: The Making of an Intelligence Analyst, by Antonia Woodford

THE YALE HISTORICAL REVIEW: AN UNDERGRADUATE PUBLICATION. SPRING 2014, VOLUME III, ISSUE II. The Yale Historical Review provides undergraduates an opportunity to have their exceptional work highlighted and encourages the diffusion of original historical ideas on campus by providing a forum for outstanding undergraduate history papers covering any historical topic. For past issues and information regarding submissions, advertisements, subscriptions, contributions, and our Editorial Board, please visit our website: WWW.YALE.EDU/YALEHISTORICALREVIEW Or visit our Facebook page: WWW.FACEBOOK.COM/YALEHISTORICALREVIEW With further questions or to provide feedback, please e-mail us at: [email protected] Or write to us at: THE YALE HISTORICAL REVIEW, P.O. BOX #204762, NEW HAVEN, CT 06520. The Yale Historical Review is published by Yale students. Yale University is not responsible for its contents. The Yale Historical Review Editorial Board gratefully acknowledges the support of the following donors: FOUNDING PATRONS Matthew and Laura Dominski In Memory of David J. Magoon Sareet Majumdar Brenda and David Oestreich Stauer Derek Wang Yale Club of the Treasure Coast Zixiang Zhao FOUNDING CONTRIBUTORS Council on Latin American and Iberian Studies at Yale Department of History, Yale University Peter Dominksi J.S. Renkert Joe and Marlene Toot Yale Center for British Art Yale Club of Hartford Yale Council on Middle East Studies CONTRIBUTORS Annie Yi Greg Weiss Department of the History of Art, Yale University ON THE COVER: U.S. Coast and Geodetic Survey, Special Publication No. 7: Atlas of the Philippine Islands (Washington, DC: Government Printing Office, 1900), Biodiversity Heritage Library, http://www.biodiversitylibrary.org/item/106585#page/43/mode/1up. -

Spatialized Corporatism Between Town and Countryside

SHS Web of Conferences 63, 02003 (2019) https://doi.org/10.1051/shsconf/20196302003 MODSCAPES 2018 Spatialized corporatism between town and countryside. Francesca Bonfante, Politecnico di Milano, ABC Department of Architecture, Built Environment and Construction Engineering, 20133 Milan, Italy. Abstract. This contribution deals with the relationship between town planning, architectural design and landscape in the foundation of “new towns” in Italy. In doing so, I shall focus on the Pontine Marshes, giving due consideration to then emerging theories about the fascist corporate state, whose founding act may be traced back to Giuseppe Bottai’s “Charter of Labour”. This political-cultural “model” purported a clear hierarchy between settlements, each bound for a specific role, for which specific functions were to be assigned to different parts of the city. Similarly, cultivations in the countryside were to specialise. In the Pontine Marshes, Littoria was to become a provincial capital and Sabaudia a tourist destination, Pontinia an industrial centre and Aprilia an eminently rural town. Whereas the term “corporatism” may recall the guild system of the Middle Ages, its 1930s revival was meant to support the need for a cohesive organization of socio-economic forces, whose recognition and classification was to support the legal-political order of the state. What was the corporate city supposed to be? Some Italian architects rephrased this question: what was the future city in the Italy of the hundred cities? Bringing to the fore the distinguishing character of the settlements concerned, and based on the extensive literature available, this contribution discusses the composition of territorial and urban space, arguing that, in the Pontine Marshes, this entails the hierarchical triad farm-village-city, as well as an extraordinary figurative research at times hovering towards “classicism”, “rationalism” or “picturesque”. -

Holocaust Education Standards Grade 4 Standard 1: SS.4.HE.1

Proposed Holocaust Education Standards Grade 4 Standard 1: SS.4.HE.1. Foundations of Holocaust Education SS.4.HE.1.1 Compare and contrast Judaism to other major religions observed around the world, and in the United States and Florida. Grade 5 Standard 1: SS.5.HE.1. Foundations of Holocaust Education SS.5.HE.1.1 Define antisemitism as prejudice against or hatred of the Jewish people. Students will recognize the Holocaust as history’s most extreme example of antisemitism. Teachers will provide students with an age-appropriate definition of the Holocaust. Grades 6-8 Standard 1: SS.68.HE.1. Foundations of Holocaust Education SS.68.HE.1.1 Define the Holocaust as the planned and systematic, state-sponsored persecution and murder of European Jews by Nazi Germany and its collaborators between 1933 and 1945. Students will recognize the Holocaust as history’s most extreme example of antisemitism. Students will define antisemitism as prejudice against or hatred of Jewish people. Grades 9-12 Standard 1: SS.HE.912.1. Analyze the origins of antisemitism and its use by the National Socialist German Workers' Party (Nazi) regime. SS.912.HE.1.1 Define the terms Shoah and Holocaust. Students will distinguish how the terms are appropriately applied in different contexts. SS.912.HE.1.2 Explain the origins of antisemitism. Students will recognize that the political, social and economic applications of antisemitism led to the organized pogroms against Jewish people. Students will recognize that The Protocols of the Elders of Zion are a hoax and utilized as propaganda against Jewish people both in Europe and internationally. -

Scientific Racism and the Legal Prohibitions Against Miscegenation

Michigan Journal of Race and Law Volume 5 2000 Blood Will Tell: Scientific Racism and the Legal Prohibitions Against Miscegenation Keith E. Sealing John Marshall Law School Follow this and additional works at: https://repository.law.umich.edu/mjrl Part of the Civil Rights and Discrimination Commons, Law and Race Commons, Legal History Commons, and the Religion Law Commons Recommended Citation Keith E. Sealing, Blood Will Tell: Scientific Racism and the Legal Prohibitions Against Miscegenation, 5 MICH. J. RACE & L. 559 (2000). Available at: https://repository.law.umich.edu/mjrl/vol5/iss2/1 This Article is brought to you for free and open access by the Journals at University of Michigan Law School Scholarship Repository. It has been accepted for inclusion in Michigan Journal of Race and Law by an authorized editor of University of Michigan Law School Scholarship Repository. For more information, please contact [email protected]. BLOOD WILL TELL: SCIENTIFIC RACISM AND THE LEGAL PROHIBITIONS AGAINST MISCEGENATION Keith E. Sealing* INTRODUCTION 560 I. THE PARADIGM 565 A. The Conceptual Framework 565 B. The Legal Argument 569 C. Because The Bible Tells Me So 571 D. The Concept of "Race" 574 II. -

Anthropology, History Of

Encyclopedia of Race and Racism, Vol1 – finals/ 10/4/2007 11:59 Page 93 Anthropology, History of Jefferson, Thomas. 1944. “Notes on the State of Virginia.” In The Life and Selected Writings of Thomas Jefferson, edited by Adrienne Koch and William Peden. New York: Modern American Library. Lewis, R. B. 1844. Light and Truth: Collected from the Bible and Ancient and Modern History Containing the Universal History of the Colored and Indian Race; from the Creation of the World to the Present Time. Boston: Committee of Colored Gentlemen. Morton, Samuel. 1844. Crania Aegyptiaca: Or, Observations on Egyptian Ethnography Derived from Anatomy, History, and the Monuments. Philadelphia: J. Penington. Nash, Gary. 1990. Race and Revolution. Madison, WI: Madison House. Pennington, James, W. C. 1969 (1841). A Text Book of the Origins and History of the Colored People. Detroit, MI: Negro History Press. Mia Bay defend that institution from religious abolitionists, who called for the unity of God’s children, and from Enlightenment critics, who called for liberty, fraternity, and equality of man. During the early colonial experience in North America, “race” was not a term that was widely employed. Notions of difference were often couched in religious terms, and comparisons between “heathen” and “Christian,” “saved” and “unsaved,” and “savage” and “civilized” were used to distinguish African and indigenous peoples from Europeans. Beginning in 1661 and continuing through the early eighteenth century, ideas about race began to circulate after Virginia and other colonies started passing legislation that made it legal to enslave African servants and their children. In 1735 the Swedish naturalist Carl Linnaeus completed his first edition of Systema Naturae, in which he attempted to differentiate various types of people scientifically.