7.14 Historical Perspective and Further Reading


Recommended publications


Performing the Shuffle with the PM2I and Illiac SIMD Interconnection Networks

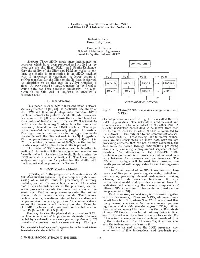

Robert R. Seban, Howard Jay Siegel. Purdue University, School of Electrical Engineering, West Lafayette, Indiana 47907.

Abstract: Three SIMD single stage interconnection networks which have been proposed and studied in the literature are the Illiac, PM2I, and Shuffle-Exchange. Here the ability of the Illiac and PM2I networks to perform the shuffle interconnection in an SIMD machine with N processors is examined. A lower bound of 3√N/2 transfers for the Illiac to shuffle data is derived. An algorithm to do this task in 2√N - 1 transfers is given. A lower bound of log₂N transfers for the PM2I to shuffle data has been published previously. An algorithm to do this task in log₂N + 1 transfers is presented here.

1. Introduction. This paper extends SIMD interconnection network studies presented in [28, 31]. In particular, the ability of the PM2I and Illiac single stage interconnection networks to perform the shuffle interconnection is examined. In [28] it is shown that a lower bound on the number of transfers needed for the PM2I network to perform the shuffle is log₂N, where N is the number of processing elements in the SIMD machine. The algorithm presented here requires only (log₂N) + 1 transfers.

[Fig. 1: PE-to-PE SIMD machine configuration, with N PEs.] [...] SIMD machine configuration is shown in Fig. 1. It is called the PE-to-PE organization. The network is unidirectional and connects each PE to some subset of the other PEs. A transfer instruction causes data to be moved from each ...
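The excerpt above names the three networks without defining them. As a rough illustration, the sketch below writes out the definitions commonly used in the SIMD interconnection literature; these definitions are an assumption brought in here, not text from the excerpt, and the function names `shuffle`, `pm2i`, and `illiac_neighbors` are hypothetical. It only shows which PE each function sends data to; it does not reproduce the paper's transfer algorithms.

```python
import math


def shuffle(p, n):
    """Perfect shuffle: rotate the m-bit address of PE p left by one bit (n = 2**m).

    Assumed definition; not stated in the excerpt.
    """
    m = n.bit_length() - 1
    if n < 2 or (1 << m) != n:
        raise ValueError("n must be a power of two, at least 2")
    return ((p << 1) | (p >> (m - 1))) & (n - 1)


def pm2i(p, i, n, sign=+1):
    """PM2I ("plus-minus 2**i"): PE p sends to (p + 2**i) mod n or (p - 2**i) mod n."""
    return (p + sign * (1 << i)) % n


def illiac_neighbors(p, n):
    """Illiac network: PE p connects to p+1, p-1, p+sqrt(n), p-sqrt(n), all mod n."""
    r = math.isqrt(n)
    if r * r != n:
        raise ValueError("n must be a perfect square")
    return [(p + 1) % n, (p - 1) % n, (p + r) % n, (p - r) % n]


if __name__ == "__main__":
    # Destination of each PE under the shuffle for an 8-PE machine.
    print([shuffle(p, 8) for p in range(8)])
```

With N = 8, the shuffle sends PE 1 (binary 001) to PE 2 (010) and PE 4 (100) to PE 1 (001), which is the interleaving pattern of a perfectly shuffled deck. The question studied in the paper is how many PM2I or Illiac transfers are needed to realize that same permutation.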

Online Sec 6.15.Indd

6.15 Historical Perspective and Further Reading

There is a tremendous amount of history in multiprocessors; in this section we divide our discussion by both time period and architecture. We start with the SIMD approach and the Illiac IV. We then turn to a short discussion of some other early experimental multiprocessors and progress to a discussion of some of the great debates in parallel processing. Next we discuss the historical roots of the present multiprocessors and conclude by discussing recent advances.

SIMD Computers: Attractive Idea, Many Attempts, No Lasting Successes

The cost of a general multiprocessor is, however, very high and further design options were considered which would decrease the cost without seriously degrading the power or efficiency of the system. The options consist of recentralizing one of the three major components. Centralizing the [control unit] gives rise to the basic organization of [an] ... array processor such as the Illiac IV.
Bouknight et al. [1972]

The SIMD model was one of the earliest models of parallel computing, dating back to the first large-scale multiprocessor, the Illiac IV. The key idea in that multiprocessor, as in more recent SIMD multiprocessors, is to have a single instruction that operates on many data items at once, using many functional units (see Figure 6.15.1). Although successful in pushing several technologies that proved useful in later projects, it failed as a computer. Costs escalated from the $8 million estimate in 1966 to $31 million by 1972, despite construction of only a quarter of the planned multiprocessor.

SIMD1 - Illiac IV

Fall 2001, Lecture SIMD1

Illiac IV History

- First massively parallel computer
  - SIMD (duplicate the PE, not the CU)
  - First large system with semiconductor-based primary memory
- Three earlier designs (vacuum tubes and transistors) culminating in the Illiac IV design, all at the University of Illinois
  - Logical organization similar to the Solomon (prototyped by Westinghouse)
  - Sponsored by DARPA, built by various companies, assembled by Burroughs
  - Plan was for 256 PEs, in 4 quadrants of 64 PEs, but only one quadrant was built
  - Used at NASA Ames Research Center in mid-1970s

Illiac IV Architectural Overview

- One CU (control unit), 64 64-bit PEs (processing elements), each PE has a PEM (PE memory)
- CU operates on scalars, PEs on vector-aligned arrays
  - All PEs execute the instruction broadcast by the CU, if they are in active mode
  - Each PE can perform various arithmetic and logical instructions
  - Each PE has a memory with 2048 64-bit words, accessed in less than 188 ns
  - PEs can operate on data in 64-bit, 32-bit, and 8-bit formats
- Data routed between PEs various ways
- I/O is handled by a separate Burroughs ...

Programming Issues

- Consider the following FORTRAN code:
      DO 10 I = 1, 64
   10 A(I) = B(I) + C(I)
  - Put A(1), B(1), C(1) on PU 1, etc.
- Each PE loads RGA from base+1, adds base+2, stores into base, where "base" is base of data in PEM
- Each PE does this simultaneously, giving a speedup of 64
  - For less than 64 array elements, some processors will sit idle
  - For more than 64 array elements, some processors might have to do more work
- For some algorithms, it may be desirable to turn off PEs
  - 64 PEs compute, then one half passes data to other half, then 32 PEs compute, etc.
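The programming points in these slides (one broadcast instruction, idle PEs for short arrays, extra passes for long arrays, and halving the set of active PEs) can be imitated in a few lines of ordinary Python. This is only a sequential simulation under stated assumptions: the names `simd_add` and `tree_sum` are hypothetical, the inner loops stand in for work that the PEs would do at the same time, and nothing here models the real Illiac IV instruction set or its routing network.

```python
NUM_PES = 64  # one Illiac IV quadrant, as described in the slides


def simd_add(b, c, num_pes=NUM_PES):
    """Compute a[i] = b[i] + c[i], counting broadcast steps.

    Each pass of the outer loop stands for one instruction broadcast by the CU.
    PEs whose index falls past the end of the data are masked off (idle).
    """
    if len(b) != len(c):
        raise ValueError("b and c must have the same length")
    n = len(b)
    a = [0] * n
    steps = 0
    for base in range(0, n, num_pes):
        # Active-mode mask: True for PEs that hold a valid element in this pass.
        active = [base + pe < n for pe in range(num_pes)]
        for pe in range(num_pes):  # conceptually simultaneous across PEs
            if active[pe]:
                a[base + pe] = b[base + pe] + c[base + pe]
        steps += 1
    return a, steps


def tree_sum(values):
    """Sum one value per PE by repeatedly halving the set of active PEs.

    In each step the upper half passes its data to the lower half, then only
    the lower half computes, so 64 values need 6 steps.
    """
    vals = list(values)
    active = len(vals)
    if active == 0 or active & (active - 1):
        raise ValueError("number of values must be a power of two")
    steps = 0
    while active > 1:
        half = active // 2
        for pe in range(half):  # only the lower half of the PEs stays active
            vals[pe] += vals[pe + half]
        active = half
        steps += 1
    return vals[0], steps


if __name__ == "__main__":
    _, steps = simd_add(list(range(100)), [1] * 100)
    print("100-element add took", steps, "broadcast steps")
    print("tree sum of 0..63:", tree_sum(list(range(64))))
```

In this model a 100-element add needs two broadcast steps on 64 PEs, and 28 PEs sit idle during the second step, which is the "some processors might have to do more work" case from the slide.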

Vector Machines

Hakam Zaidan, Stephen Moore

Outline: Vector Architectures; Properties; Applications; History (Westinghouse Solomon, ILLIAC IV, CDC STAR 100, Cray-1, Other Cray Vector Machines); Vector Machines Today.

Introduction: A vector processor is a CPU that can run one instruction on an entire vector of data. The number of instructions fetched is small. Vector processors also achieve data parallelism in large scientific and multimedia applications.

Styles of Vector Architectures: Based on how the operands are fetched, vector processors can be divided into two categories: Memory-Memory Architecture and Vector-Register Architecture.

Vector Processor Elements:
- Vector Register: Fixed length, single vector, ports for reading and writing. Usually 8 to 32 registers of length 64 or 128 bits.
- Vector Functional Units (FUs): Usually 4-8 functional units: FP mult, FP add, and FP divide, in addition to the integer add and logical shift.
- Vector Load Store Units (LSUs).
- Scalar registers.
- Cross-bar.

Vector Processor Properties:
- Results are independent.
- Known pattern for memory access by the vector instructions.
- In pipelines, branches and branch problems are reduced.
- A single vector instruction specifies a large amount of computation (e.g. loops).

Disadvantages:
- With scalar instructions: relatively slow.
- Some difficulties in the implementation of precise exceptions.
- High cost for on-chip vector memory systems.
- Code complexity.

Applications: Lossy compression. Lossless compression. Multimedia processing. Standard benchmarking kernels. Handwriting recognition. Speech recognition. Cryptography. Operating system and networking. Databases. Support of language run-time.

History: In 1962, the Illinois Automatic Computer series of supercomputers: ILLIAC I, ILLIAC II, ILLIAC III, ILLIAC IV (with 64 ALUs, 100-150 Mflops). In 1973, TI's Advanced Scientific Computer (ASC), 20-80 Mflops.

The CRAY-1 Computer System

[End of the preceding paper on the same page:] We believe the key to the 10's longevity is its basically simple, clean structure with adequately large (one Mbyte) address space that allows users to get work done. In this way, it has evolved easily with use and with technology. An equally significant factor in its success is a single operating system environment enabling user program sharing among all machines. The machine has thus attracted users who have built significant languages and applications in a variety of environments. These user-developers are thus the dominant system architects-implementors.

In retrospect, the machine turned out to be larger and further from a minicomputer than we expected. As such it could easily have died or destroyed the tiny DEC organization that started it. We hope that this paper has provided insight into the interactions of its development.

Acknowledgments. Dan Siewiorek deserves our greatest thanks for helping with a complete editing of the text. The referees and editors have been especially helpful. The important program contributions by users are too numerous for us to give by name but here are most of them: APL, Basic, BLISS, DDT, LISP, Pascal, Simula, SOS, TECO, and Tenex.

Computer Systems. G. Bell, S. H. Fuller, and D. Siewiorek, Editors

The CRAY-1 Computer System. Richard M. Russell, Cray Research, Inc.

This paper describes the CRAY-1, discusses the evolution of its architecture, and gives an account of some of the problems that were overcome during its manufacture.

The CRAY-1 is the only computer to have been built to date that satisfies ERDA's Class VI requirement (a computer capable of processing from 20 to 60 million floating point operations per second) ...

ILLIAC IV Is the Most Powerful by As Much As a Factor of Four

The ILLIAC IV System represents a fundamentally different approach to data processing. The limitation imposed by the velocity of light, once thought to be an absolute upper bound on computing power, has been stepped over by several approaches to computer architecture, of which the ILLIAC IV is the most powerful by as much as a factor of four. The conquest of the limitations of the velocity of light was foreseen by Herman Kahn and A.J. Wiener in 1967, when they wrote: "...over the past fifteen years this basic criterion of computer performance has increased by a factor of ten every two or three years ... While some will argue that we are beginning to reach limits set by basic physical constants, such as the speed of light, this may not be true, especially when one considers new techniques in time sharing, segmentation of programs to add flexibility, and parallel processing computers...(such as)...the ILLIAC IV..."

ILLIAC IV represents a significant step forward in computer systems architecture offering:
- greatly improved performances: 200 MIPS computation speed; 10^9 bits/sec I/O transfer rate; 10^6 bytes of high-speed integrated circuit memories; 2.5 × 10^9 bits of parallel disk storage
- contemporary technology: ECL circuits; semiconductor memories; belted cables
- and a new approach to the art of computing using parallelism, which offers an opportunity to programmers to utilize the vast power of the system as effectively as possible.

[Figure: ILLIAC IV Quadrant]

MAJOR SYSTEM ELEMENTS

As shown in the accompanying system diagram, the major elements of the ILLIAC IV System are the Array Subsystem, the I/O Subsystem, the Disk File Subsystem, and the B 6700 Control Computer Subsystem.

Large-Scale Computer Systems Have the Potential to Achieve Two to Three Orders of Magnitude Speed Improvement Over the Next Decade

COMPUTER SYSTEMS: WHAT THE FUTURE HOLDS*

Harold S. Stone, University of Massachusetts

ABSTRACT

Continuing advances in device technology will result in substantially higher speed devices at rapidly diminishing costs. These changes will in turn have a significant impact on computer architecture in the next decade, and on the wide-scale proliferation of computer systems into new applications. The microprocessor of today will eventually evolve to a processor with the power of a minicomputer or perhaps a medium-scale computer of today. Non-mechanical auxiliary memories are likely to be available as well. The computational power and low cost of these computer systems will see them used in the home, office and industry for a wide variety of new applications.

Medium-scale systems will tend to be total systems that are service oriented rather than hardware oriented. A major service will be that of the information utility to provide data to a widely distributed pool of on-site computers.

Large-scale computer systems have the potential to achieve two to three orders of magnitude speed improvement over the next decade. A large portion of this may come from the faster devices. Another significant portion will come from higher parallelism. For large numerical computations, the vector processor of today may evolve to a hybrid vector processor-multiprocessor to provide efficient operation on both scalar and vector types of computations.

I. INTRODUCTION

The past two decades have seen truly phenomenal advances in computers, but the potential of computers has barely been realized. The advances in computer technology anticipated in the next decade will be so widespread that computers will directly affect the living habits and quality of life of almost every person in the United States. Since computer architecture is largely driven by device technology and software interfaces, Section II of this paper is devoted to an analysis of the devices that may be available in the 1980s, and to the smaller end of the computer scale.

The CRAY-1 Computer System

Chapter 44. The CRAY-1 Computer System. Richard M. Russell

This paper describes the CRAY-1, discusses the evolution of its architecture, and gives an account of some of the problems that were overcome during its manufacture. The CRAY-1 is the only computer to have been built to date that satisfies ERDA's Class VI requirement (a computer capable of processing from 20 to 60 million floating point operations per second) [Keller 1976]. The CRAY-1's Fortran compiler (CFT) is designed to give the scientific user immediate access to the benefits of the CRAY-1's vector processing architecture. An optimizing compiler, CFT, "vectorizes" innermost DO loops. Compatible with the ANSI 1966 Fortran Standard and with many commonly supported Fortran extensions, CFT does not require any source ...

... use them again immediately without additional memory references, which slow down the computational process in other contemporary computer systems.

Other features enhancing the CRAY-1's computational capabilities are: its small size, which reduces distances electrical signals must travel within the computer's framework and allows a 12.5 nanosecond clock period (the CRAY-1 is the world's fastest scalar processor); a one million word semiconductor memory equipped with error detection and correction logic (SECDED); its 64-bit word size; and its optimizing Fortran compiler.

Architecture

The CRAY-1 has been called "the world's most expensive love-seat" [Computer World, 1976]. Certainly, most people's first reaction to the CRAY-1 is that it is so small. But in computer design it is a truism that smaller means faster.

Illiac IV History First Massively Parallel Computer Three Earlier Designs

Fall 2003, SIMD

Illiac IV History

- First massively parallel computer
  - SIMD (duplicate the PE, not the CU)
  - First large system with semiconductor-based primary memory
- Three earlier designs (vacuum tubes and transistors) culminating in the Illiac IV design, all at the University of Illinois
  - Logical organization similar to the Solomon (prototyped by Westinghouse)
  - Sponsored by DARPA, built by various companies, assembled by Burroughs
  - Plan was for 256 PEs, in 4 quadrants of 64 PEs, but only one quadrant was built
  - Used at NASA Ames Research Center in mid-1970s

Illiac IV Architectural Overview

- One CU (control unit), 64 64-bit PEs (processing elements), each PE has a PEM (PE memory)
- CU operates on scalars, PEs on vector-aligned arrays
  - All PEs execute the instruction broadcast by the CU, if they are in active mode
  - Each PE can perform various arithmetic and logical instructions
  - Each PE has a memory with 2048 64-bit words, accessed in less than 188 ns
  - PEs can operate on data in 64-bit, 32-bit, and 8-bit formats
- Data routed between PEs various ways
- I/O is handled by a separate Burroughs ...

Programming Issues

- Consider the following FORTRAN code:
      DO 10 I = 1, 64
   10 A(I) = B(I) + C(I)
  - Put A(1), B(1), C(1) on PU 1, etc.
- Each PE loads RGA from base+1, adds base+2, stores into base, where "base" is base of data in PEM
- Each PE does this simultaneously, giving a speedup of 64
  - For less than 64 array elements, some processors will sit idle
  - For more than 64 array elements, some processors might have to do more work
- For some algorithms, it may be desirable to turn off PEs
  - 64 PEs compute, then one half passes data to other half, then 32 PEs compute, etc.

A Survey of Concurrent Architectures Technical Report: CSL-TR-86-307

COMPUTER SYSTEMS LABORATORY, STANFORD UNIVERSITY, STANFORD, CA 94305-4055

A Survey of Concurrent Architectures. Victor W. K. Mak. Technical Report: CSL-TR-86-307, September 1986. Computer Systems Laboratory, Department of Electrical Engineering, Stanford University, Stanford, California 94305.

The work described herein was supported by NASA Ames Research Center under contracts NAG 2-248 and NAGW 419.

Abstract: A survey of 18 different concurrent architectures is presented in this report. Although this is by no means complete, it does cover a wide spectrum of both commercial and research architectures. A scheme is proposed to describe concurrent architectures using different dimensions: models of computation, interconnection network, processing element, memory system, and application areas.

Key Words and Phrases: Concurrent Architecture, Interconnection Network, Model of Computation, Parallel Processing, Survey, Taxonomy.

Copyright © 1986 by Victor W. K. Mak

Contents
1 Introduction
2 Taxonomy of Concurrent Architectures
3 Architectures Studied
3.1 Systolic Array
3.2 STARAN
3.3 Illiac IV
3.4 BSP
3.5 MPP
3.6 CHiP
3.7 NON-VON
3.8 DDSP
...

COMPUTERS on NASTRAN James L. Rogers, Jr. NASA Langley

THE IMPACT OF "FOURTH GENERATION" COMPUTERS ON NASTRAN

James L. Rogers, Jr., NASA Langley Research Center

INTRODUCTION

The NASTRAN computer program (ref. 1) is currently capable of executing on three different "third generation" computers, the CDC 6000 series, the IBM 360/370 series, and the UNIVAC 1100 series. In the past, NASTRAN has proved to be adaptable to the new hardware and software developments for these computers. The NASTRAN Systems Management Office (NSMO), as part of NASA's research effort to identify desirable formats for future large general-purpose programs, funded studies on the impact of the STAR-100 (ref. 2) and ILLIAC IV (ref. 3) computers on NASTRAN. The STAR-100 and ILLIAC IV are referred to as "fourth generation" or "4G" computers in this paper. "Fourth generation" is in quotes because the differences between generations of computers are not easily definable.

Many new improvements have been made to NASTRAN as it has evolved through the years. With each new release, there have been improved capabilities, efficiency improvements, and error corrections. The purpose of this paper is to shed light on the desired characteristics of future large programs, like NASTRAN, if designed for execution on "4G" machines. Concentration will be placed on the following two areas:

1. Conversion to these new machines
2. Maintenance on these machines

The advantages of operating NASTRAN on a "4G" computer are also discussed.

BACKGROUND

Figure 1 shows an example of the system changes NSMO has dealt with in the past and of some changes presently being contended with. Minor changes had to be made to Level 15 of NASTRAN when IBM released their 3330 disk packs.

The Illiac IV System

Proceedings of the IEEE, Vol. 60, No. 4, April 1972, p. 369.